The SURVEYLOGISTIC Procedure


Hypothesis Testing and Estimation

Degrees of Freedom

In this section, degrees of freedom (df) refers to the denominator degrees of freedom for F statistics in hypothesis testing. It also refers to the degrees of freedom in t tests for parameter estimates and odds ratio estimates, and for computing t distribution percentiles for confidence limits of these estimates. The value of df is determined by the design degrees of freedom f and by what you specify in the DF= option in the MODEL statement.

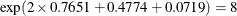

The default df is determined as

![\[ df=\left\{ { \begin{array}{ll} ~ f-r+1 & \mbox{for Taylor variance estimation method} \\ ~ f & \mbox{for replication variance estimation methods} \end{array}} \right. \]](images/statug_surveylogistic0257.png)

where f is the design degrees of freedom and r is the rank of the contrast of model parameters to be tested.
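The default rule can be written as a small function. This is a minimal Python sketch, not SAS code. The function name `default_df` and the method labels are hypothetical, and the input numbers are made up.

```python
def default_df(f: float, r: int, method: str = "taylor") -> float:
    """Return the default denominator df for a test of a rank-r contrast.

    f:      design degrees of freedom
    r:      rank of the contrast being tested
    method: "taylor" or "replication" (hypothetical labels for this sketch)
    """
    if method == "taylor":
        return f - r + 1   # Taylor series default: f - r + 1
    if method == "replication":
        return f           # replication methods: the design df itself
    raise ValueError(f"unknown variance estimation method: {method!r}")


# Hypothetical design with f = 45 and a contrast of rank r = 3.
print(default_df(45, 3))                 # 43
print(default_df(45, 3, "replication"))  # 45
```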

Design Degrees of Freedom f

The design degrees of freedom f is determined by the survey design and the variance estimation method.

Design Degrees of Freedom f for the Taylor Series Method

For Taylor series variance estimation, the design degrees of freedom f can depend on the number of clusters, the number of strata, and the number of observations. These numbers are based on the observations that are included in the analysis; they do not count observations that are excluded from the analysis because of missing values. If all values in a stratum are excluded from the analysis as missing values, then that stratum is called an empty stratum. Empty strata are not counted in the total number of strata for the analysis. Similarly, empty clusters and missing observations are not included in the total counts of clusters and observations that are used to compute f for the analysis.

If you specify the MISSING option in the CLASS statement, missing values are treated as valid nonmissing levels and are included in determining f. If you specify the NOMCAR option for Taylor series variance estimation, observations that have missing values for variables in the regression model are included. For more information about missing values, see the section Missing Values.

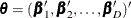

Using the notation that is defined in the section Notation, let  be the total number of clusters if the design has a CLUSTER

statement; let n be the total sample size; and let H be the number of strata if there is a STRATA

statement, or 1 otherwise. Then for Taylor series variance estimation, the design degrees of freedom is

be the total number of clusters if the design has a CLUSTER

statement; let n be the total sample size; and let H be the number of strata if there is a STRATA

statement, or 1 otherwise. Then for Taylor series variance estimation, the design degrees of freedom is

![\[ f=\left\{ { \begin{array}{ll} \tilde n - H & \mbox{if the design contains clusters} \\ n - H & \mbox{if the design does not contain clusters} \end{array}} \right. \]](images/statug_surveylogistic0259.png)
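A minimal Python sketch of the Taylor series rule, assuming the counts passed in already exclude missing observations, empty strata, and empty clusters as described above. The helper name `taylor_design_df` is hypothetical and the design sizes are invented.

```python
from typing import Optional


def taylor_design_df(n: int, n_strata: int = 1, n_clusters: Optional[int] = None) -> int:
    """Design df f for Taylor series variance estimation.

    n:          observations used in the analysis (missing values excluded)
    n_strata:   nonempty strata H (1 when there is no STRATA statement)
    n_clusters: nonempty clusters, or None when there is no CLUSTER statement
    """
    if n_clusters is not None:
        return n_clusters - n_strata  # clustered design: clusters minus strata
    return n - n_strata               # unclustered design: observations minus strata


# Hypothetical stratified cluster sample: 1,200 observations in 60 clusters and 15 strata.
print(taylor_design_df(1200, n_strata=15, n_clusters=60))  # 45
# Hypothetical simple random sample of 500 observations (H = 1).
print(taylor_design_df(500))  # 499
```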

Design Degrees of Freedom f for the Replication Method

For the replication variance estimation method, the design degrees of freedom f depends on whether you provide replicate weights and on the replication method that you use.

- If you provide replicate weights but you do not specify DF=value in the REPWEIGHTS statement, f is the number of replicates.
- If you specify the DF=value option in a REPWEIGHTS statement, then f = value.
- If you do not provide replicate weights and use the BRR method (including Fay’s method), then f = H, which is the number of strata.
- If you do not provide replicate weights and use the jackknife method, then \(f = R - H\), where R is the number of replicates and H is the number of strata if you specify a STRATA statement or H = 1 otherwise.

Setting Design Degrees of Freedom f to a Specific Value

If you do not want to use the default design degrees of freedom, then you can specify the DF=DESIGN(value) or DF=PARMADJ(value) (for Taylor method only) option in the MODEL statement, where value is a positive number. Then f=value.

However, if you specify the value in the DF= option in the MODEL statement as well as with the DF= option in a REPWEIGHTS statement, then the df is determined by the value in the MODEL statement, and the DF= option in the REPWEIGHTS statement is ignored.
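The replication rules, together with the precedence of the MODEL statement DF= option, can be sketched as follows. The helper and its arguments are hypothetical, the jackknife branch assumes f = R − H, and the example designs are invented.

```python
from typing import Optional


def replication_design_df(
    n_replicates: int,
    method: str = "jackknife",
    n_strata: int = 1,
    repweights_provided: bool = False,
    repweights_df: Optional[float] = None,
    model_df: Optional[float] = None,
) -> float:
    """Design df f for replication variance estimation.

    method: "brr" (including Fay's method) or "jackknife"; hypothetical labels.
    """
    if model_df is not None:
        # DF= in the MODEL statement overrides DF= in the REPWEIGHTS statement.
        return model_df
    if repweights_provided:
        # User-supplied replicate weights: DF=value if given, else the number of replicates.
        return repweights_df if repweights_df is not None else n_replicates
    if method == "brr":
        return n_strata                   # f = H
    if method == "jackknife":
        return n_replicates - n_strata    # f = R - H (H = 1 without a STRATA statement)
    raise ValueError(f"unknown replication method: {method!r}")


# Hypothetical designs.
print(replication_design_df(60, "jackknife", n_strata=15))                    # 45
print(replication_design_df(16, "brr", n_strata=15))                          # 15
print(replication_design_df(80, repweights_provided=True))                    # 80
print(replication_design_df(80, repweights_provided=True, repweights_df=40))  # 40
print(replication_design_df(80, repweights_provided=True, repweights_df=40, model_df=25))  # 25
```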

Setting Design Degrees of Freedom to Infinity

If you specify DF=INFINITY in the MODEL statement, then the df is set to be infinite.

As the denominator degrees of freedom grows, an F distribution approaches a chi-square distribution, and similarly a t distribution approaches a normal distribution. Therefore, when you specify DF=INFINITY in the MODEL statement, PROC SURVEYLOGISTIC uses chi-square tests and uses normal distribution percentiles to construct confidence intervals.

Modifying Degrees of Freedom with the Number of Parameters

When you use Taylor series variance estimation (by default or when you specify VARMETHOD=TAYLOR in the MODEL statement) and you are fitting a model that has many parameters relative to the design degrees of freedom, it is appropriate to modify the design degrees of freedom by using the number of nonsingular parameters p in the model (Korn and Graubard 1999, section 5.2; Rao, Scott, and Skinner 1998). You can request this modification by specifying DF=PARMADJ or DF=PARMADJ(value) in the MODEL statement. This option applies only to the Taylor series variance estimation method; it does not apply to the replication variance estimation methods.

Let f be the design degrees of freedom that is described in the section Design Degrees of Freedom f for the Taylor Series Method. By default, or if you specify the DF=PARMADJ option, the df is modified as \(df = f - r + 1\), which is the default Taylor series df given in the section Degrees of Freedom.

Testing Global Null Hypothesis: BETA=0

The global null hypothesis refers to the null hypothesis that all the explanatory effects can be eliminated and the model can contain only intercepts. Using the notation in the section Logistic Regression Models, the global null hypothesis is defined as follows:

-

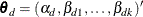

If you have a cumulative model whose model parameters are

, where

, where  are the parameters for the intercepts and

are the parameters for the intercepts and  are the parameters for the explanatory effects, then

are the parameters for the explanatory effects, then  . The number of restrictions r that are imposed on

. The number of restrictions r that are imposed on  is the number of parameters in slope parameter

is the number of parameters in slope parameter  :

:  .

.

-

If you have a generalized logit model whose model parameters are

and

and  , then

, then  . The number of restrictions r that are imposed on

. The number of restrictions r that are imposed on  is the total number of slope parameters in

is the total number of slope parameters in  :

:  .

.
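Counting the restrictions r for the two model types is simple bookkeeping. This hypothetical helper assumes that each generalized logit carries the same number of slope parameters, and the model sizes are illustrative only.

```python
def global_null_restrictions(model: str, n_slopes: int, n_logits: int = 1) -> int:
    """Number of restrictions r in H0: all slope parameters are zero.

    model:    "cumulative" or "glogit" (hypothetical labels)
    n_slopes: slope parameters in one linear predictor
    n_logits: number of generalized logits D (used only for "glogit")
    """
    if model == "cumulative":
        return n_slopes              # one slope vector shared by all cumulative logits
    if model == "glogit":
        return n_logits * n_slopes   # a separate slope vector for each generalized logit
    raise ValueError(f"unknown model type: {model!r}")


# Hypothetical 4-level response (D = 3) with 5 slope parameters per linear predictor.
print(global_null_restrictions("cumulative", 5))          # 5
print(global_null_restrictions("glogit", 5, n_logits=3))  # 15
```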

PROC SURVEYLOGISTIC displays these tests in the "Testing Global Null Hypothesis: BETA=0" table.

Rao-Scott Likelihood Ratio Chi-Square Test

For a complex survey design, you can use a design-adjusted Rao-Scott likelihood ratio chi-square test to test the global null hypothesis. For information about design-adjusted chi-square tests, see Lohr (2010, Section 10.3.2), Rao and Scott (1981), Rao and Scott (1984), Rao and Scott (1987), Thomas and Rao (1987), Rao and Thomas (1989), and Thomas, Singh, and Roberts (1996).

If you specify the CHISQ(NOADJUST) option, PROC SURVEYLOGISTIC computes the likelihood ratio chi-square test without the Rao-Scott design correction. If you specify the CHISQ(FIRSTORDER) option, PROC SURVEYLOGISTIC performs a first-order Rao-Scott likelihood ratio chi-square test. If you specify the CHISQ(SECONDORDER) option, PROC SURVEYLOGISTIC performs a second-order Rao-Scott likelihood ratio chi-square test.

If you do not specify the CHISQ option, the default test depends on the design and the model. By default, PROC SURVEYLOGISTIC performs a first-order or second-order (Satterthwaite) Rao-Scott likelihood ratio chi-square test if your design is not simple random sampling or if you provide replicate weights. Otherwise, if your design is simple random sampling and you do not provide replicate weights, PROC SURVEYLOGISTIC does not make any adjustment for the likelihood ratio test. In other words:

- If your design contains neither stratification nor clustering, and you do not provide replicate weights, then by default PROC SURVEYLOGISTIC performs a likelihood ratio chi-square test without any adjustment.
- If your design contains either stratification or clustering, or if you provide replicate weights, then by default PROC SURVEYLOGISTIC performs a likelihood ratio chi-square test with Rao-Scott adjustment. However, the default order of the adjustment depends on the number of model parameters excluding the intercepts:
  - If there is more than one nonintercept parameter in the model, the default is the second-order Rao-Scott likelihood ratio test.
  - If there is only one nonintercept parameter in the model, there is no need to compute the second-order adjustment. Therefore, the default is the first-order Rao-Scott likelihood ratio test.
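The default selection rule above amounts to a short decision function. It is a hypothetical Python sketch, not part of PROC SURVEYLOGISTIC. To make the mapping easy to compare, it returns the CHISQ option values (NOADJUST, FIRSTORDER, SECONDORDER) named earlier.

```python
def default_lr_test(has_strata: bool, has_clusters: bool,
                    has_repweights: bool, n_nonintercept: int) -> str:
    """Default global null likelihood ratio test, named after the CHISQ option values."""
    if not (has_strata or has_clusters or has_repweights):
        return "NOADJUST"      # simple random sampling: no Rao-Scott correction
    if n_nonintercept > 1:
        return "SECONDORDER"   # several slopes: second-order (Satterthwaite) correction
    return "FIRSTORDER"        # a single slope: first-order correction suffices


print(default_lr_test(False, False, False, 4))  # NOADJUST
print(default_lr_test(True, True, False, 4))    # SECONDORDER
print(default_lr_test(False, True, False, 1))   # FIRSTORDER
print(default_lr_test(False, False, True, 2))   # SECONDORDER
```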

Let \(\hat{\theta}\) be the estimated parameters, let \(\hat{\theta}_{H_0}\) be the estimated parameters under the global null hypothesis, and let r be the number of restrictions imposed on \(\theta\) under the global null hypothesis \(H_0\). Let \(L(\theta)\) be the log-likelihood function.

Denote the estimated covariance matrix of \(\hat{\theta}\) under simple random sampling as \(\hat{V}^{\mathrm{srs}}(\hat{\theta})\), and its partition corresponding to the r slope parameters as \(\hat{V}^{\mathrm{srs}}_{rr}(\hat{\theta})\). Similarly, denote the estimated covariance matrix of \(\hat{\theta}\) under the sample design as \(\hat{V}(\hat{\theta})\), and its partition corresponding to the r slope parameters as \(\hat{V}_{rr}(\hat{\theta})\).

Define the design effect matrix E as

![\[ E=\hat V_{rr}(\hat{\btheta }) \left({\hat V^{\mbox{srs}}_{rr}}(\hat{\btheta })\right)^{-1} \]](images/statug_surveylogistic0278.png)

Denote \(r^*\) as the rank of E and the positive eigenvalues of the design effect matrix E as \(\delta_1, \ldots, \delta_{r^*}\).
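For two slope parameters, E and its eigenvalues can be computed by hand. The sketch below uses plain Python with invented covariance blocks. In practice these matrices come from the fitted model, and a general r would need a proper eigenvalue routine. Because both blocks are positive definite, E is similar to a symmetric positive definite matrix, so its eigenvalues are real and positive.

```python
import math


def design_effect_eigenvalues_2x2(v_design, v_srs, tol=1e-12):
    """Form E = V_rr * inv(V_rr^srs) for 2 x 2 blocks; return E and its positive eigenvalues."""
    (a, b), (c, d) = v_srs
    det = a * d - b * c
    inv_srs = [[d / det, -b / det], [-c / det, a / det]]
    e = [[sum(v_design[i][k] * inv_srs[k][j] for k in range(2)) for j in range(2)]
         for i in range(2)]
    # Eigenvalues of a 2 x 2 matrix from its trace and determinant.
    trace = e[0][0] + e[1][1]
    det_e = e[0][0] * e[1][1] - e[0][1] * e[1][0]
    half_gap = math.sqrt(max(trace * trace / 4.0 - det_e, 0.0))
    eigenvalues = [trace / 2.0 + half_gap, trace / 2.0 - half_gap]
    deltas = [x for x in eigenvalues if x > tol]
    return e, deltas


v_srs = [[0.02, 0.01], [0.01, 0.02]]     # hypothetical SRS covariance of two slopes
v_design = [[0.03, 0.01], [0.01, 0.03]]  # hypothetical design-based covariance
e, deltas = design_effect_eigenvalues_2x2(v_design, v_srs)
print([round(x, 4) for x in deltas])     # [2.0, 1.3333]
print("r* =", len(deltas))               # r* = 2
```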

Likelihood Ratio Chi-Square Test

Without the Rao-Scott design correction, the global null hypothesis is tested by using either the chi-square statistic

![\[ Q_{\chi ^{2}}= 2 \left[ L (\hat{\btheta }) - L(\hat{\btheta }_{H_0}) \right] \]](images/statug_surveylogistic0281.png)

with r degrees of freedom, or an equivalent F statistic

![\[ F= 2 \left[ L (\hat{\btheta }) - L(\hat{\btheta }_{H_0}) \right] / r \]](images/statug_surveylogistic0282.png)

with r numerator degrees of freedom.

Rao-Scott First-Order Chi-Square Test

To address the impact of a complex survey design on the significance level of the likelihood ratio test, Rao and Scott (1984) proposed a first-order correction to the chi-square statistic as

![\[ Q_{RS1}=Q_{\chi ^{2}}/\bar\delta _\cdot \]](images/statug_surveylogistic0284.png)

where the first-order design correction,

![\[ \bar\delta _\cdot = \sum _{i=1}^{r^*} {\delta _ i}/r^* \]](images/statug_surveylogistic0285.png)

is the average of positive eigenvalues of the design effect matrix E.

Under the null hypothesis, the first-order Rao-Scott chi-square \(Q_{RS1}\) approximately follows a chi-square distribution with \(r^*\) degrees of freedom.

The corresponding F statistic is

![\[ F_{RS1} = Q_{RS1} / r^* \]](images/statug_surveylogistic0287.png)

which has an F distribution with \(r^*\) and \(r^* \times df\) degrees of freedom under the null hypothesis (Thomas and Rao 1984, 1987), where df is the design degrees of freedom as described in the section Design Degrees of Freedom f for the Taylor Series Method.
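A minimal sketch of the first-order correction, assuming the F reference distribution with \(r^*\) and \(r^* \times df\) degrees of freedom (Thomas and Rao 1984, 1987). The eigenvalues come from a hypothetical 2 × 2 example (\(\delta = 2, 4/3\)), and Q and df are invented.

```python
def rao_scott_first_order(q_chisq: float, deltas: list, design_df: float) -> dict:
    """First-order Rao-Scott correction of a likelihood ratio chi-square statistic.

    q_chisq:   unadjusted statistic 2[L(theta_hat) - L(theta_hat_H0)]
    deltas:    positive eigenvalues of the design effect matrix E
    design_df: design degrees of freedom df
    """
    r_star = len(deltas)
    delta_bar = sum(deltas) / r_star   # mean of the positive eigenvalues
    q_rs1 = q_chisq / delta_bar        # chi-square with r* df under H0
    f_rs1 = q_rs1 / r_star             # F with (r*, r* x df) df under H0
    return {"Q_RS1": q_rs1, "chisq_df": r_star,
            "F_RS1": f_rs1, "F_df": (r_star, r_star * design_df)}


# Hypothetical inputs: Q = 12.0, eigenvalues 2 and 4/3, design df 45.
res = rao_scott_first_order(12.0, [2.0, 4.0 / 3.0], 45)
print(round(res["Q_RS1"], 4), round(res["F_RS1"], 4), res["F_df"])  # 7.2 3.6 (2, 90)
```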

Rao-Scott Second-Order Chi-Square Test

Rao and Scott (1987) further proposed the second-order (Satterthwaite) Rao-Scott chi-square statistic as

![\[ Q_{RS2}=Q_{RS1}/(1 + \hat a^2) \]](images/statug_surveylogistic0289.png)

where \(Q_{RS1}\) is the first-order Rao-Scott chi-square statistic and the second-order design correction \(\hat{a}^2\) is computed from the coefficient of variation of the eigenvalues of the design effect matrix E as

![\[ \hat a^2=\frac{1}{r^*-1}\sum _{i=1}^{r^*} {(\delta _ i-\bar\delta _\cdot )^2} / {\bar\delta _\cdot ^2} \]](images/statug_surveylogistic0290.png)

Under the null hypothesis, the second-order Rao-Scott chi-square \(Q_{RS2}\) approximately follows a chi-square distribution with \(r^*/(1+\hat{a}^2)\) degrees of freedom.

The corresponding F statistic is

![\[ F_{RS2} = Q_{RS2} (1+\hat{a}^2) / r^* \]](images/statug_surveylogistic0293.png)

which has an F distribution with \(r^*/(1+\hat{a}^2)\) and \(r^* \times df/(1+\hat{a}^2)\) degrees of freedom under the null hypothesis (Thomas and Rao 1984, 1987), where df is the design degrees of freedom as described in the section Design Degrees of Freedom f for the Taylor Series Method.
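The second-order correction extends the same hypothetical example. This sketch assumes the Satterthwaite degrees of freedom \(\nu = r^*/(1+\hat{a}^2)\) for the chi-square statistic and \((\nu, \nu \times df)\) for the F statistic. It also shows that \(F_{RS2}\) is numerically equal to \(Q_{RS1}/r^*\), so only the reference degrees of freedom change. With a single positive eigenvalue, \(\hat{a}^2\) is undefined because the divisor \(r^* - 1\) is zero. This matches the rule that the first-order test is the default when there is only one nonintercept parameter.

```python
def rao_scott_second_order(q_chisq: float, deltas: list, design_df: float) -> dict:
    """Second-order (Satterthwaite) Rao-Scott correction; all inputs as in the first-order sketch."""
    r_star = len(deltas)
    if r_star < 2:
        raise ValueError("the second-order correction needs at least two positive eigenvalues")
    delta_bar = sum(deltas) / r_star
    # Squared coefficient of variation of the eigenvalues (divisor r* - 1).
    a2 = sum((d - delta_bar) ** 2 for d in deltas) / ((r_star - 1) * delta_bar ** 2)
    q_rs1 = q_chisq / delta_bar
    q_rs2 = q_rs1 / (1.0 + a2)
    nu = r_star / (1.0 + a2)              # Satterthwaite degrees of freedom
    f_rs2 = q_rs2 * (1.0 + a2) / r_star   # equals Q_RS1 / r*
    return {"a2": a2, "Q_RS2": q_rs2, "chisq_df": nu,
            "F_RS2": f_rs2, "F_df": (nu, nu * design_df)}


res = rao_scott_second_order(12.0, [2.0, 4.0 / 3.0], 45)
print(round(res["a2"], 4), round(res["Q_RS2"], 4), round(res["F_RS2"], 4))  # 0.08 6.6667 3.6
print(tuple(round(x, 4) for x in res["F_df"]))                               # (1.8519, 83.3333)
```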

Score Statistics and Tests

To express the general form of the score statistic, let  be the parameter vector you want to estimate and let

be the parameter vector you want to estimate and let  be the vector of first partial derivatives (gradient vector) of the log likelihood with respect to the parameter vector

be the vector of first partial derivatives (gradient vector) of the log likelihood with respect to the parameter vector  .

.

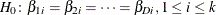

Consider a null hypothesis \(H_0\) that has r restrictions imposed on \(\theta\). Let \(\hat{\theta}\) be the MLE of \(\theta\) under \(H_0\), let \(\mathbf{g}(\hat{\theta})\) be the gradient vector evaluated at \(\hat{\theta}\), and let \(\hat{\mathbf{V}}(\hat{\theta})\) be the estimated covariance matrix for \(\hat{\theta}\), which is described in the section Variance Estimation.

For the Taylor series variance estimation method, by default (or when you specify DF=PARMADJ), PROC SURVEYLOGISTIC computes the score test statistic for the null hypothesis \(H_0\) as

![\[ W_{F}=\left( \frac{f-r+1}{f \, \, r} \right) \mb{g}(\hat{\btheta })’ \left[{\hat{\mb{V}}}(\hat{\btheta }) \right]^{-1} \mb{g}(\hat{\btheta }) \]](images/statug_surveylogistic0298.png)

where f is the design degrees of freedom as described in the section Design Degrees of Freedom f for the Taylor Series Method.

If you specify the DF=DESIGN option or if you use the replication variance estimation method, PROC SURVEYLOGISTIC computes the score test statistic for the null hypothesis \(H_0\) as

![\[ W_{F}= \frac{1}{r} \mb{g}(\hat{\btheta })’ \left[{\hat{\mb{V}}}(\hat{\btheta }) \right]^{-1} \mb{g}(\hat{\btheta }) \]](images/statug_surveylogistic0299.png)

Under \(H_0\), \(W_F\) has an F distribution with \((r, df)\) degrees of freedom, where the denominator degrees of freedom df is described in the section Degrees of Freedom.

As the denominator degrees of freedom grows, an F distribution approaches a chi-square distribution, and similarly a t distribution approaches a normal distribution. If you specify DF=INFINITY in the MODEL statement, the score test statistic for both Taylor series and replication methods for testing the null hypothesis \(H_0\) can be expressed as

![\[ W_{\chi ^{2}}=\mb{g}(\hat{\btheta })’\left[{\hat{\mb{V}}}(\hat{\btheta }) \right]^{-1} \mb{g}(\hat{\btheta }) \]](images/statug_surveylogistic0302.png)

Under the null hypothesis \(H_0\), \(W_{\chi^2}\) has a chi-square distribution with r degrees of freedom.
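The three forms of the score statistic differ only in scaling and reference distribution. The sketch below computes the quadratic form with a small Gaussian-elimination solver. The gradient, covariance matrix, and f are invented, and the mode labels are hypothetical. It assumes df = f − r + 1 under DF=PARMADJ and df = f under DF=DESIGN or replication. The intercept entry of the gradient is zero because the score for an unrestricted parameter vanishes at the restricted MLE.

```python
def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [[float(val) for val in row] + [float(bi)] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(m[i][col]))
        if abs(m[pivot][col]) < 1e-14:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for i in range(col + 1, n):
            factor = m[i][col] / m[col][col]
            for j in range(col, n + 1):
                m[i][j] -= factor * m[col][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def score_test(g, v, r, f, mode="parmadj"):
    """Scale the quadratic form g' V^{-1} g as in the formulas above.

    mode: "parmadj" (Taylor default), "design" (DF=DESIGN or replication),
          or "infinity" (DF=INFINITY); hypothetical labels for this sketch.
    Returns (statistic, reference distribution, degrees of freedom).
    """
    w = sum(gi * xi for gi, xi in zip(g, solve(v, g)))
    if mode == "parmadj":
        return (f - r + 1) / (f * r) * w, "F", (r, f - r + 1)
    if mode == "design":
        return w / r, "F", (r, f)
    if mode == "infinity":
        return w, "chi-square", r
    raise ValueError(f"unknown mode: {mode!r}")


g = [0.0, 1.2, -0.5]          # hypothetical gradient at the restricted MLE
v = [[0.3, 0.0, 0.0],         # hypothetical estimated covariance matrix
     [0.0, 0.5, 0.1],
     [0.0, 0.1, 0.4]]
for mode in ("parmadj", "design", "infinity"):
    stat, dist, df = score_test(g, v, r=2, f=45, mode=mode)
    print(mode, round(stat, 4), dist, df)
```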

Testing the Parallel Lines Assumption

For a model that has an ordinal response, the parallel lines assumption depends on the link function, which you can specify in the LINK= option in the MODEL statement. When the link function is probit or complementary log-log, the parallel lines assumption is the equal slopes assumption; PROC SURVEYLOGISTIC displays the corresponding test in the "Score Test for the Equal Slopes Assumption" table. When the link function is logit, the parallel lines assumption is the proportional odds assumption; PROC SURVEYLOGISTIC displays the corresponding test in the "Score Test for the Proportional Odds Assumption" table. This section describes the computation of the score tests of these assumptions.

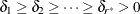

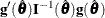

For this test, the number of response levels, \(D+1\), is assumed to be strictly greater than 2. Let Y be the response variable taking values \(1, \ldots, D, D+1\). Suppose there are k explanatory variables. Consider the general cumulative model without making the parallel lines assumption:

![\[ g(\mbox{Pr}(Y\leq d~ |~ \mb{x}))= (1,\mb{x})\btheta _ d, \quad 1 \leq d \leq D \]](images/statug_surveylogistic0305.png)

where \(g(\cdot)\) is the link function, and \(\theta_d = (\alpha_d, \beta_{d1}, \ldots, \beta_{dk})'\) is a vector of unknown parameters consisting of an intercept \(\alpha_d\) and k slope parameters \(\beta_{d1}, \ldots, \beta_{dk}\). The parameter vector for this general cumulative model is

![\[ \btheta =(\btheta ’_1,\ldots ,\btheta ’_ D)’ \]](images/statug_surveylogistic0309.png)

Under the null hypothesis of parallelism \(H_0\colon \beta_{1i} = \beta_{2i} = \cdots = \beta_{Di}\) for \(1 \le i \le k\), there is a single common slope parameter for each of the k explanatory variables. Let \(\beta_1, \ldots, \beta_k\) be the common slope parameters. Let \(\hat{\alpha}_1, \ldots, \hat{\alpha}_D\) and \(\hat{\beta}_1, \ldots, \hat{\beta}_k\) be the MLEs of the intercept parameters and the common slope parameters. Then, under \(H_0\), the MLE of \(\theta\) is

![\[ \hat{\btheta }=(\hat{\btheta }’_1,\ldots ,\hat{\btheta }’_ D)’ \quad \mbox{with} \quad \hat{\btheta }_ d=(\hat{\alpha }_ d,\hat{\beta }_1,\ldots , \hat{\beta }_ k)’, \quad 1 \leq d \leq D \]](images/statug_surveylogistic0314.png)

and the chi-square score statistic \(\mathbf{g}'(\hat{\theta})\mathbf{I}^{-1}(\hat{\theta})\mathbf{g}(\hat{\theta})\), where \(\mathbf{I}(\hat{\theta})\) is the information matrix, has an asymptotic chi-square distribution with \(k(D-1)\) degrees of freedom. This tests the parallel lines assumption by testing the equality of separate slope parameters simultaneously for all explanatory variables.

Note that this test is the same as what PROC LOGISTIC produces. It does not compute a score test that uses the covariance matrix incorporated with the survey design information as described in the section Score Statistics and Tests.
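The \(k(D-1)\) degrees of freedom follow from counting free parameters in the general and the parallel lines cumulative models:

```latex
\begin{aligned}
\text{general model:} \quad & D \text{ intercepts} + Dk \text{ slopes} = D(k+1) \text{ parameters} \\
\text{parallel lines model } (H_0)\text{:} \quad & D \text{ intercepts} + k \text{ common slopes} = D + k \text{ parameters} \\
\text{restrictions:} \quad & D(k+1) - (D + k) = Dk - k = k(D-1)
\end{aligned}
```

For example, a four-level ordinal response (D = 3) with k = 2 explanatory variables gives 2 × (3 − 1) = 4 degrees of freedom.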

Wald Confidence Intervals for Parameters

Wald confidence intervals are sometimes called normal confidence intervals. They are based on the asymptotic normality of the parameter estimators. The \(100(1-\alpha)\%\) Wald confidence interval for \(\theta_j\) is given by

![\[ \hat{\theta }_ j \pm z_{1-\alpha /2}\hat{\sigma }_ j \]](images/statug_surveylogistic0318.png)

where \(z_{1-\alpha/2}\) is the \(100(1-\alpha/2)\)th percentile of the standard normal distribution, \(\hat{\theta}_j\) is the pseudo-estimate of \(\theta_j\), and \(\hat{\sigma}_j\) is the standard error estimate of \(\hat{\theta}_j\) that is described in the section Variance Estimation.
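A minimal Python sketch of the Wald interval, using the standard library's `statistics.NormalDist` for \(z_{1-\alpha/2}\). The estimate and standard error are hypothetical. With finite denominator df, a t percentile would replace the normal one; the standard library has no t quantile function, so this sketch covers only the normal case.

```python
from statistics import NormalDist


def wald_ci(estimate: float, std_err: float, alpha: float = 0.05):
    """100(1 - alpha)% Wald interval: estimate +/- z_{1 - alpha/2} * std_err."""
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    return estimate - z * std_err, estimate + z * std_err


lower, upper = wald_ci(0.7651, 0.25)   # hypothetical estimate and standard error
print(round(lower, 4), round(upper, 4))  # 0.2751 1.2551
```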

Testing Linear Hypotheses about the Regression Coefficients

Linear hypotheses for \(\theta\) can be expressed in matrix form as

![\[ H_0\colon \mb{L}\btheta = \mb{c} \]](images/statug_surveylogistic0323.png)

where \(\mathbf{L}\) is a matrix of coefficients for the linear hypotheses, whose rank is r, and \(\mathbf{c}\) is a vector of constants. The vector of regression coefficients \(\theta\) includes both slope parameters and intercept parameters.

Let \(\hat{\theta}\) be the MLE of \(\theta\), and let \(\hat{\mathbf{V}}(\hat{\theta})\) be the estimated covariance matrix that is described in the section Variance Estimation.

For the Taylor series variance estimation method, PROC SURVEYLOGISTIC computes the test statistic for the null hypothesis \(H_0\) as

![\[ W_{F}=\left( \frac{f-r+1}{f \, \, r} \right) (\mb{L}\hat{\btheta } - \mb{c})’ [{\mb{L}\hat{\bV }(\hat{\btheta })\mb{L}’}]^{-1} (\mb{L}\hat{\btheta } - \mb{c}) \]](images/statug_surveylogistic0324.png)

where f is the design degrees of freedom as described in the section Design Degrees of Freedom f for the Taylor Series Method.

For the replication variance estimation method, PROC SURVEYLOGISTIC computes the test statistic for the null hypothesis \(H_0\) as

![\[ W_{F}= \frac{1}{r} (\mb{L}\hat{\btheta } - \mb{c})’ [{\mb{L}\hat{\bV }(\hat{\btheta })\mb{L}’}]^{-1} (\mb{L}\hat{\btheta } - \mb{c}) \]](images/statug_surveylogistic0325.png)

Under \(H_0\), \(W_F\) has an F distribution with \((r, df)\) degrees of freedom, and the denominator degrees of freedom df is described in the section Degrees of Freedom.

As the denominator degrees of freedom grows, an F distribution approaches a chi-square distribution, and similarly a t distribution approaches a normal distribution. If you specify DF=INFINITY in the MODEL statement, PROC SURVEYLOGISTIC computes the test statistic for both Taylor series and replication methods for testing the null hypothesis \(H_0\) as

![\[ W_{\chi ^{2}} = (\mb{L}\hat{\btheta } - \mb{c})’ [{\mb{L}\hat{\bV }(\hat{\btheta })\mb{L}’}]^{-1} (\mb{L}\hat{\btheta } - \mb{c}) \]](images/statug_surveylogistic0326.png)

Under \(H_0\), \(W_{\chi^2}\) has an asymptotic chi-square distribution with r degrees of freedom.
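The linear hypothesis statistics can be sketched the same way. All inputs are invented, and the helper names are hypothetical. The rows of L are assumed linearly independent, so r equals the number of rows, and the Taylor branch assumes the default df = f − r + 1.

```python
def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [[float(val) for val in row] + [float(bi)] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(m[i][col]))
        if abs(m[pivot][col]) < 1e-14:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for i in range(col + 1, n):
            factor = m[i][col] / m[col][col]
            for j in range(col, n + 1):
                m[i][j] -= factor * m[col][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def linear_hypothesis_test(theta_hat, v, l_mat, c, f, method="taylor", df_infinity=False):
    """Test H0: L theta = c; returns (statistic, reference distribution, df)."""
    p, r = len(theta_hat), len(l_mat)
    d = [sum(lk * tk for lk, tk in zip(row, theta_hat)) - ci for row, ci in zip(l_mat, c)]
    lv = [[sum(row[k] * v[k][j] for k in range(p)) for j in range(p)] for row in l_mat]
    lvl = [[sum(lv[i][k] * l_mat[j][k] for k in range(p)) for j in range(r)] for i in range(r)]
    w = sum(di * xi for di, xi in zip(d, solve(lvl, d)))   # (L theta - c)' [L V L']^{-1} (L theta - c)
    if df_infinity:
        return w, "chi-square", r
    if method == "taylor":
        return (f - r + 1) / (f * r) * w, "F", (r, f - r + 1)
    if method == "replication":
        return w / r, "F", (r, f)
    raise ValueError(f"unknown method: {method!r}")


theta_hat = [-0.5, 0.8, 0.3]        # hypothetical intercept and two slopes
v = [[0.04, 0.0, 0.0],              # hypothetical estimated covariance matrix
     [0.0, 0.09, 0.01],
     [0.0, 0.01, 0.04]]
l_mat = [[0, 1, 0], [0, 0, 1]]      # H0: both slopes are zero
c = [0.0, 0.0]
for method in ("taylor", "replication"):
    print(method, linear_hypothesis_test(theta_hat, v, l_mat, c, f=45, method=method))
print("infinity", linear_hypothesis_test(theta_hat, v, l_mat, c, f=45, df_infinity=True))
```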

Type 3 Tests

For models that use less-than-full-rank parameterization (as specified by the PARAM=GLM option in the CLASS statement), a Type 3 test of an effect of interest (main effect or interaction) is a test of the Type III estimable functions that are defined for that effect. When the model contains no missing cells, the Type 3 test of a main effect corresponds to testing the hypothesis of equal marginal means. For more information about Type III estimable functions, see Chapter 46: The GLM Procedure, and Chapter 15: The Four Types of Estimable Functions. Also see Littell, Freund, and Spector (1991).

For models that use full-rank parameterization, all parameters are estimable when there are no missing cells, so it is unnecessary to define estimable functions. The standard test of an effect of interest in this case is the joint test that the values of the parameters associated with that effect are zero. For a model that uses effects parameterization (as specified by the PARAM=EFFECT option in the CLASS statement), the joint test for a main effect is equivalent to testing the equality of marginal means. For a model that uses reference parameterization (as specified by the PARAM=REF option in the CLASS statement), the joint test is equivalent to testing the equality of cell means at the reference level of the other model effects. For more information about the coding scheme and the associated interpretation of results, see Muller and Fetterman (2002, Chapter 14).

If there is no interaction term, the Type 3 test of an effect for a model that uses GLM parameterization is the same as the joint test of the effect for the model that uses full-rank parameterization. In this situation, the joint test is also called the Type 3 test. For a model that contains an interaction term and no missing cells, the Type 3 test of a component main effect under GLM parameterization is the same as the joint test of the component main effect under effect parameterization. Both test the equality of cell means. But this Type 3 test differs from the joint test under reference parameterization, which tests the equality of cell means at the reference level of the other component main effect. If some cells are missing, you can obtain meaningful tests only by testing a Type III estimable function, so in this case you should use GLM parameterization.

The results of a Type 3 test or a joint test do not depend on the order in which you specify the terms in the MODEL statement.

Odds Ratio Estimation

Consider a dichotomous response variable with outcomes event and nonevent. Let a dichotomous risk factor variable X take the value 1 if the risk factor is present and 0 if the risk factor is absent.

According to the logistic model, the log odds function, \(g(X)\), is given by

![\[ g(X) \equiv \log \biggl (\frac{\Pr (~ \mathit{event} ~ |~ X)}{\Pr (~ \mathit{nonevent} ~ |~ X)} \biggr ) = \beta _0 + \beta _1 X \\ \]](images/statug_surveylogistic0329.png)

The odds ratio \(\psi\) is defined as the ratio of the odds for those with the risk factor (X = 1) to the odds for those without the risk factor (X = 0). The log of the odds ratio is given by

![\[ \log (\psi ) \equiv \log (\psi (X=1,X=0)) = g(X=1) - g(X=0) = \beta _1 \]](images/statug_surveylogistic0331.png)

The parameter,  , associated with X represents the change in the log odds

from X = 0 to X = 1. So the odds ratio is obtained by simply exponentiating the value of the parameter associated with the risk factor. The

odds ratio indicates how the odds of event change as you change X from 0 to 1. For instance,

, associated with X represents the change in the log odds

from X = 0 to X = 1. So the odds ratio is obtained by simply exponentiating the value of the parameter associated with the risk factor. The

odds ratio indicates how the odds of event change as you change X from 0 to 1. For instance,  means that the odds of an event when X = 1 are twice the odds of an event when X = 0.

means that the odds of an event when X = 1 are twice the odds of an event when X = 0.

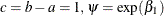

Suppose the values of the dichotomous risk factor are coded as constants a and b instead of 0 and 1. The odds when \(X = a\) become \(\exp(\beta_0 + a\beta_1)\), and the odds when \(X = b\) become \(\exp(\beta_0 + b\beta_1)\). The odds ratio corresponding to an increase in X from a to b is

![\[ \psi = \exp [(b - a) \beta _1] = [\exp (\beta _1)]^{b-a} \equiv [\exp (\beta _1)]^ c \]](images/statug_surveylogistic0338.png)

Note that for any a and b such that \(c = b - a = 1\), \(\psi = \exp(\beta_1)\). So the odds ratio can be interpreted as the change in the odds for any increase of one unit in the corresponding risk factor.

However, the change in odds for some amount other than one unit is often of greater interest. For example, a change of one pound in body weight might be too small to be considered important, while a change of 10 pounds might be more meaningful.

The odds ratio for a change in X from a to b is estimated by raising the odds ratio estimate for a unit change in X to the power of \(c = b - a\), as shown previously.
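Raising the unit odds ratio to the power c is the same as exponentiating \(c\beta_1\). The slope of 0.02 per pound below is hypothetical, chosen to echo the body-weight example.

```python
import math


def odds_ratio_for_change(beta1: float, a: float, b: float) -> float:
    """Odds ratio for a change in X from a to b: exp((b - a) * beta1) = exp(beta1) ** (b - a)."""
    return math.exp((b - a) * beta1)


beta1 = 0.02  # hypothetical log odds ratio per pound of body weight
print(round(odds_ratio_for_change(beta1, 150, 151), 4))  # one-pound change: 1.0202
print(round(odds_ratio_for_change(beta1, 150, 160), 4))  # ten-pound change: 1.2214
```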

For a polytomous risk factor, the computation of odds ratios depends on how the risk factor is parameterized. For illustration, suppose that Race is a risk factor with four categories: White, Black, Hispanic, and Other.

For the effect parameterization scheme (PARAM=EFFECT) with White as the reference group, the design variables for Race are as follows.

|

Design Variables |

|||

|---|---|---|---|

|

Race |

|

|

|

|

Black |

1 |

0 |

0 |

|

Hispanic |

0 |

1 |

0 |

|

Other |

0 |

0 |

1 |

|

White |

–1 |

–1 |

–1 |

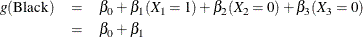

The log odds for Black is

The log odds for White is

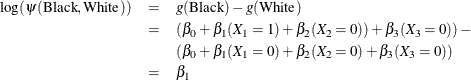

Therefore, the log odds ratio of Black versus White becomes

For the reference cell parameterization scheme (PARAM=REF) with White as the reference cell, the design variables for race are as follows.

|

Design Variables |

|||

|---|---|---|---|

|

Race |

|

|

|

|

Black |

1 |

0 |

0 |

|

Hispanic |

0 |

1 |

0 |

|

Other |

0 |

0 |

1 |

|

White |

0 |

0 |

0 |

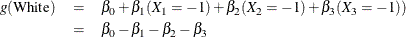

The log odds ratio of Black versus White is given by

For the GLM parameterization scheme (PARAM=GLM), the design variables are as follows.

|

Design Variables |

||||

|---|---|---|---|---|

|

Race |

|

|

|

|

|

Black |

1 |

0 |

0 |

0 |

|

Hispanic |

0 |

1 |

0 |

0 |

|

Other |

0 |

0 |

1 |

0 |

|

White |

0 |

0 |

0 |

1 |

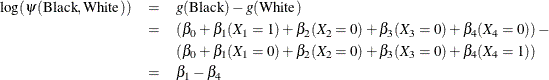

The log odds ratio of Black versus White is

Consider the hypothetical example of heart disease among race in Hosmer and Lemeshow (2000, p. 51). The entries in the following contingency table represent counts.

| Disease Status | White | Black | Hispanic | Other |
|---|---|---|---|---|
| Present | 5 | 20 | 15 | 10 |
| Absent | 20 | 10 | 10 | 10 |

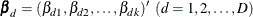

The computation of the odds ratio of Black versus White for the various parameterization schemes is shown in Table 111.9.

Table 111.9: Odds Ratio of Heart Disease Comparing Black to White

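| PARAM= | $\hat\beta_1$ | $\hat\beta_2$ | $\hat\beta_3$ | $\hat\beta_4$ | Odds Ratio Estimate |
|---|---|---|---|---|---|
| EFFECT | 0.7651 | 0.4774 | 0.0719 | | $\exp(2 \times 0.7651 + 0.4774 + 0.0719) \approx 8$ |
| REF | 2.0794 | 1.7918 | 1.3863 | | $\exp(2.0794) \approx 8$ |
| GLM | 2.0794 | 1.7918 | 1.3863 | 0.0000 | $\exp(2.0794 - 0.0000) \approx 8$ |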
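The entries in Table 111.9 can be reproduced from the counts. The following Python sketch, which only illustrates the arithmetic, assumes an unweighted fit with Race as the only effect and Present as the modeled event. Under those assumptions the model is saturated, so the fitted log odds for each race equal the observed log odds, and each parameterization's estimates follow from differences of those log odds.

```python
import math

# Counts from the contingency table: (present, absent) for each race.
counts = {"White": (5, 20), "Black": (20, 10), "Hispanic": (15, 10), "Other": (10, 10)}

# Saturated-model assumption: fitted log odds of disease equal observed log odds.
g = {race: math.log(p / a) for race, (p, a) in counts.items()}
levels = ("Black", "Hispanic", "Other")

# PARAM=REF (White is the reference cell): beta_j = g(level) - g(White).
ref = [g[r] - g["White"] for r in levels]

# PARAM=GLM: the same first three estimates, with beta_4 = 0 for White (aliased).
glm = ref + [0.0]

# PARAM=EFFECT: each design column sums to zero over the four levels,
# so the intercept is the mean log odds and beta_j = g(level) - intercept.
intercept = sum(g.values()) / len(g)
eff = [g[r] - intercept for r in levels]

# Log odds ratio of Black vs. White under each scheme.
or_effect = math.exp(2 * eff[0] + eff[1] + eff[2])
or_ref = math.exp(ref[0])
or_glm = math.exp(glm[0] - glm[3])

print([round(b, 4) for b in eff], or_effect)
print([round(b, 4) for b in ref], or_ref)
print([round(b, 4) for b in glm], or_glm)
```

All three odds ratios evaluate to 8, up to floating-point rounding, which is the ratio of the observed odds 20/10 for Black and 5/20 for White.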

|

Since the log odds ratio ($\log(\psi)$) is a linear function of the parameters, the Wald confidence interval for $\log(\psi)$ can be derived from the parameter estimates and the estimated covariance matrix. Confidence intervals for the odds ratios are obtained by exponentiating the corresponding confidence intervals for the log odds ratios. In the displayed output of PROC SURVEYLOGISTIC, the "Odds Ratio Estimates" table contains the odds ratio estimates and the corresponding t or Wald confidence intervals computed by using the covariance matrix in the section Variance Estimation. For continuous explanatory variables, these odds ratios correspond to a unit increase in the risk factors.
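The following Python sketch illustrates the construction for a linear combination $l'\beta$: the variance is $l' V l$, the limits for $\log(\psi)$ are the estimate plus or minus a critical value times the standard error, and exponentiating gives limits for $\psi$. The EFFECT estimates come from Table 111.9, but the covariance matrix is hypothetical. By default the sketch uses the standard normal quantile (Wald limits); for t limits you would pass a t quantile with the appropriate degrees of freedom through the hypothetical `crit` argument, because the Python standard library has no t quantile function.

```python
import math
from statistics import NormalDist

def odds_ratio_ci(beta, cov, l, alpha=0.05, crit=None):
    """Estimate and confidence limits for the odds ratio exp(l' beta).

    beta: parameter estimates
    cov:  estimated covariance matrix of beta (nested lists)
    l:    contrast coefficients defining the log odds ratio l' beta
    crit: critical value; defaults to the standard normal quantile
    """
    k = len(l)
    log_or = sum(l[i] * beta[i] for i in range(k))
    var = sum(l[i] * cov[i][j] * l[j] for i in range(k) for j in range(k))
    if crit is None:
        crit = NormalDist().inv_cdf(1 - alpha / 2)
    half_width = crit * math.sqrt(var)
    # Exponentiate the limits for log(psi) to obtain limits for psi.
    return (math.exp(log_or),
            math.exp(log_or - half_width),
            math.exp(log_or + half_width))

# EFFECT-coded estimates from Table 111.9; this covariance matrix is hypothetical.
beta_hat = [0.7651, 0.4774, 0.0719]
cov_hat = [[0.04, -0.01, -0.01],
           [-0.01, 0.04, -0.01],
           [-0.01, -0.01, 0.04]]
print(odds_ratio_ci(beta_hat, cov_hat, l=(2, 1, 1)))
```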

To customize odds ratios for specific units of change for a continuous risk factor, you can use the UNITS statement to specify a list of relevant units for each explanatory variable in the model. Estimates of these customized odds ratios are given in a separate table. Let $(L_j, U_j)$ be a confidence interval for $\log(\psi)$. The corresponding lower and upper confidence limits for the customized odds ratio $\exp(c\beta_j)$ are $\exp(cL_j)$ and $\exp(cU_j)$, respectively, for $c > 0$, or $\exp(cU_j)$ and $\exp(cL_j)$, respectively, for $c < 0$. You use the CLODDS option in the MODEL statement to request confidence intervals for the odds ratios.
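The swap for negative $c$ happens because multiplying by a negative number reverses the order of the limits. The following Python sketch shows the rule. It is not SAS code, and the interval (0.01, 0.03) for a per-pound coefficient is hypothetical.

```python
import math

def customized_or_limits(c, lower, upper):
    """Limits for exp(c * beta_j), given a CI (lower, upper) for beta_j.

    c is the unit of change, for example a value listed in a UNITS statement.
    """
    if c > 0:
        return math.exp(c * lower), math.exp(c * upper)
    if c < 0:
        # A negative c reverses the order: c * upper is now the smaller value.
        return math.exp(c * upper), math.exp(c * lower)
    raise ValueError("c must be nonzero")

# Hypothetical confidence interval for a per-pound coefficient.
print(customized_or_limits(10, 0.01, 0.03))   # ten-pound increase
print(customized_or_limits(-10, 0.01, 0.03))  # ten-pound decrease
```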

For a generalized logit model, odds ratios are computed similarly, except that D odds ratios are computed for each effect, corresponding to the D logits in the model.

Rank Correlation of Observed Responses and Predicted Probabilities

The predicted mean score of an observation is the sum of the ordered values (shown in the "Response Profile" table) minus one, weighted by the corresponding predicted probabilities for that observation; that is, the predicted mean score is $\sum_{d=1}^{D+1} (d-1)\hat{\pi}_d$, where D + 1 is the number of response levels and $\hat{\pi}_d$ is the predicted probability of the dth (ordered) response.
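As a small Python illustration of this formula (not SAS code; the probabilities are made up), the score weights ordered value d by d − 1. For a binary response it therefore reduces to the predicted probability of Ordered Value 2.

```python
def predicted_mean_score(probs):
    """Predicted mean score: sum over d = 1..D+1 of (d - 1) * pi_d.

    probs: predicted probabilities of the D + 1 ordered response levels,
           in the order shown in the Response Profile table.
    """
    return sum((d - 1) * p for d, p in enumerate(probs, start=1))

print(predicted_mean_score([0.2, 0.5, 0.3]))  # 0*0.2 + 1*0.5 + 2*0.3
print(predicted_mean_score([0.35, 0.65]))     # binary case: equals pi_2
```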

A pair of observations with different observed responses is said to be concordant if the observation with the lower-ordered response value has a lower predicted mean score than the observation with the higher-ordered response value. If the observation with the lower-ordered response value has a higher predicted mean score than the observation with the higher-ordered response value, then the pair is discordant. If the pair is neither concordant nor discordant, it is a tie. Enumeration of the total numbers of concordant and discordant pairs is carried out by categorizing the predicted mean score into intervals of length $D/500$ and accumulating the corresponding frequencies of observations.
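The following Python sketch illustrates the binned enumeration. It is not the SAS implementation. It assumes that the intervals start at 0, so an observation falls in interval $\lfloor \text{score}/(D/500) \rfloor$; the exact interval boundaries that PROC SURVEYLOGISTIC uses are not stated here. Two observations in the same interval are counted as tied. For each pair of response levels r < s, the sketch sweeps the intervals in increasing order and tallies how much level-r frequency lies below (concordant) or above (discordant) each level-s interval.

```python
def concordance_counts(responses, scores, freqs=None, n_levels=None):
    """Return (n_c, n_d, t) over pairs with different observed responses.

    responses: ordered response values 1, ..., D + 1
    scores:    predicted mean scores, each in [0, D]
    freqs:     optional frequency of each observation (default 1)
    """
    if freqs is None:
        freqs = [1] * len(responses)
    if n_levels is None:
        n_levels = max(responses)
    if n_levels < 2:
        raise ValueError("need at least two response levels")
    width = (n_levels - 1) / 500  # interval length D/500
    n_bins = 501                  # interval indices 0..500 cover [0, D]
    # hist[r][b]: total frequency of level-r observations in interval b
    hist = [[0.0] * n_bins for _ in range(n_levels + 1)]
    for r, s, f in zip(responses, scores, freqs):
        hist[r][min(int(s // width), n_bins - 1)] += f

    n_c = n_d = t = 0.0
    for r in range(1, n_levels + 1):
        total_r = sum(hist[r])
        for s in range(r + 1, n_levels + 1):
            below = 0.0  # level-r frequency in intervals strictly below b
            for b in range(n_bins):
                above = total_r - below - hist[r][b]
                n_c += hist[s][b] * below  # lower response has lower score
                n_d += hist[s][b] * above  # lower response has higher score
                below += hist[r][b]
            t += total_r * sum(hist[s])
    return n_c, n_d, t

print(concordance_counts([1, 1, 2, 2], [0.1, 0.4, 0.3, 0.8]))
```

Binning keeps the cost proportional to the number of intervals rather than to the number of observation pairs.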

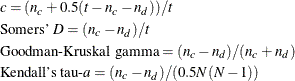

Let N be the sum of observation frequencies in the data. Suppose there are a total of t pairs with different responses: $n_c$ of them are concordant, $n_d$ of them are discordant, and $t - n_c - n_d$ of them are tied. PROC SURVEYLOGISTIC computes the following four indices of rank correlation for assessing the predictive ability of a model:

- $c = (n_c + 0.5(t - n_c - n_d))/t$
- Somers' $D = (n_c - n_d)/t$
- Goodman-Kruskal Gamma $= (n_c - n_d)/(n_c + n_d)$
- Kendall's Tau-a $= (n_c - n_d)/(0.5N(N-1))$
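Given the pair counts, the four indices are simple ratios. The following Python sketch computes them and is not SAS code. The input values in the usage line are made up for illustration. A tied pair contributes one-half to the numerator of c.

```python
def rank_correlation_indices(n_c, n_d, t, N):
    """Four rank correlation indices from pair counts.

    n_c, n_d: numbers of concordant and discordant pairs
    t:        number of pairs with different observed responses
    N:        sum of observation frequencies
    """
    ties = t - n_c - n_d
    return {
        "c": (n_c + 0.5 * ties) / t,
        "Somers' D": (n_c - n_d) / t,
        "Gamma": (n_c - n_d) / (n_c + n_d),
        "Tau-a": (n_c - n_d) / (0.5 * N * (N - 1)),
    }

print(rank_correlation_indices(n_c=3, n_d=1, t=4, N=4))
```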

Note that c also gives an estimate of the area under the receiver operating characteristic (ROC) curve when the response is binary (Hanley and McNeil 1982).

For binary responses, the predicted mean score is equal to the predicted probability for Ordered Value 2. As such, the preceding definition of concordance is consistent with the definition used in previous releases for the binary response model.