The ENTROPY Procedure (Experimental)


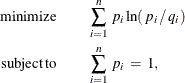

The cross entropy of Kullback and Leibler (1951) measures the "discrepancy" between one distribution and another. Cross entropy is called a measure of discrepancy rather than distance because it does not satisfy some of the properties one would expect of a distance measure; for example, it is not symmetric in its two arguments. (See Kapur and Kesavan (1992) for a discussion of cross entropy as a measure of discrepancy.) Mathematically, cross entropy is written as

$$ \sum_{i} p_i \ln\left( \frac{p_i}{q_i} \right) $$

where $q_i$ is the probability associated with the $i$th point in the distribution from which the discrepancy is measured, and $p_i$ is the corresponding probability in the distribution being evaluated. The $q_i$ (in conjunction with the support) are often referred to as the prior distribution. The measure is nonnegative and is equal to zero when $p_i$ equals $q_i$ for every $i$. The properties of the cross entropy measure are examined by Kapur and Kesavan (1992).
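The properties stated above can be checked numerically with a minimal Python sketch of the formula. The function name `cross_entropy` and the example distributions are illustrative assumptions, not part of PROC ENTROPY; terms with $p_i = 0$ are treated as contributing zero, following the usual convention $0 \ln 0 = 0$.

```python
import math


def cross_entropy(p, q):
    """Return sum_i p_i * ln(p_i / q_i), the cross entropy of p relative to q.

    q is the reference (prior) distribution. Terms with p_i == 0 contribute
    nothing (0 * ln 0 = 0); q_i must be positive wherever p_i is positive.
    """
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    return sum(p_i * math.log(p_i / q_i) for p_i, q_i in zip(p, q) if p_i > 0.0)


prior = [0.25, 0.25, 0.5]
posterior = [0.1, 0.3, 0.6]

print(cross_entropy(posterior, prior))  # positive, because the distributions differ
print(cross_entropy(prior, prior))      # 0.0, because the distributions are identical
print(cross_entropy(prior, posterior))  # a different positive value: the measure is not symmetric
```

The asymmetry between the first and last values is one reason cross entropy is described as a discrepancy rather than a distance.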

The principle of minimum cross entropy (Kullback, 1959; Good, 1963) states that one should choose probabilities that are as close as possible to the prior probabilities. That is, out of all probability distributions that satisfy a given set of constraints that reflect known information about the distribution, choose the distribution that is closest (as measured by cross entropy) to the prior distribution. When the prior distribution is uniform, maximum entropy and minimum cross entropy produce the same results (Kapur and Kesavan, 1992): higher values of entropy correspond exactly to lower values of cross entropy.
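With a uniform prior $q_i = 1/n$, cross entropy simplifies to $\sum_i p_i \ln p_i + \ln n = \ln n - H(p)$, where $H(p) = -\sum_i p_i \ln p_i$ is the entropy. The Python sketch below checks that identity and confirms that ranking candidate distributions by maximum entropy or by minimum cross entropy gives the same order; the candidate distributions are illustrative assumptions.

```python
import math


def entropy(p):
    """Shannon entropy -sum_i p_i ln p_i (natural log; 0 ln 0 = 0)."""
    return -sum(p_i * math.log(p_i) for p_i in p if p_i > 0.0)


def cross_entropy(p, q):
    """Cross entropy sum_i p_i ln(p_i / q_i) of p relative to the prior q."""
    return sum(p_i * math.log(p_i / q_i) for p_i, q_i in zip(p, q) if p_i > 0.0)


n = 4
uniform = [1.0 / n] * n
candidates = [
    [0.25, 0.25, 0.25, 0.25],
    [0.4, 0.3, 0.2, 0.1],
    [0.7, 0.1, 0.1, 0.1],
]

for p in candidates:
    h = entropy(p)
    ce = cross_entropy(p, uniform)
    # ln(n) - H(p) should match CE(p, uniform) up to rounding error.
    print(f"H = {h:.4f}   CE = {ce:.4f}   ln(n) - H = {math.log(n) - h:.4f}")
```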

If the prior distributions are nonuniform, the problem can be stated as a generalized cross entropy (GCE) formulation. The cross entropy terminology specifies prior weights, $q$ and $u$, for the points $Z$ and $V$, respectively. Given informative prior distributions on $Z$ and $V$, the GCE problem is

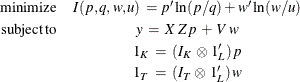

where $y$ denotes the $T$-dimensional column vector of observations of the dependent variable; $X$ denotes the $(T \times K)$ matrix of observations of the independent variables; $q$ and $p$ denote $LK$-dimensional column vectors of prior and posterior weights, respectively, associated with the points in $Z$; $u$ and $w$ denote the $LT$-dimensional column vectors of prior and posterior weights, respectively, associated with the points in $V$; $1_K$, $1_L$, and $1_T$ are $K$-, $L$-, and $T$-dimensional column vectors, respectively, of ones; $I_K$ and $I_T$ are $(K \times K)$ and $(T \times T)$ identity matrices; and $\otimes$ denotes the Kronecker product.
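Assuming the adding-up constraints take the usual form $1_K = (I_K \otimes 1_L')p$ and $1_T = (I_T \otimes 1_L')w$, multiplying a stacked weight vector by $(I_K \otimes 1_L')$ simply sums each block of $L$ weights, so the constraint requires the weights for every parameter (and, for $w$, every observation) to sum to one. The pure-Python sketch below builds these matrices and applies them; the helper names and numbers are hypothetical, and the weights are assumed to be stacked with the support index $l$ varying fastest within each block, matching the layout of $\beta = Zp$.

```python
def kron(a, b):
    """Kronecker product of matrices a and b, each given as a list of rows."""
    return [
        [a_ij * b_kl for a_ij in a_row for b_kl in b_row]
        for a_row in a
        for b_row in b
    ]


def identity(n):
    """(n x n) identity matrix as a list of rows."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def matvec(m, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in m]


K, T, L = 2, 3, 3
ones_L_row = [[1.0] * L]  # 1_L' stored as a (1 x L) matrix

# Stacked as (p_11, ..., p_L1, p_12, ..., p_LK); w is stacked the same way.
p = [0.2, 0.3, 0.5, 0.6, 0.3, 0.1]
w = [0.3, 0.4, 0.3, 0.5, 0.3, 0.2, 0.1, 0.8, 0.1]

A_p = kron(identity(K), ones_L_row)  # (K x LK) matrix I_K (x) 1_L'
A_w = kron(identity(T), ones_L_row)  # (T x LT) matrix I_T (x) 1_L'

print(matvec(A_p, p))  # one sum per parameter; the constraint requires all ones
print(matvec(A_w, w))  # one sum per observation; the constraint requires all ones
```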

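The optimization problem can be rewritten using set notation as follows:

$$
\begin{aligned}
\min_{p,\,w} \quad & \sum_{l=1}^{L} \sum_{k=1}^{K} p_{lk} \ln\left( \frac{p_{lk}}{q_{lk}} \right) + \sum_{l=1}^{L} \sum_{t=1}^{T} w_{lt} \ln\left( \frac{w_{lt}}{u_{lt}} \right) \\
\text{subject to} \quad & y_t = \sum_{k=1}^{K} \sum_{l=1}^{L} X_{tk} \, z_{lk} \, p_{lk} + \sum_{l=1}^{L} v_{lt} \, w_{lt}, \quad t = 1, \ldots, T \\
& \sum_{l=1}^{L} p_{lk} = 1, \quad k = 1, \ldots, K \\
& \sum_{l=1}^{L} w_{lt} = 1, \quad t = 1, \ldots, T
\end{aligned}
$$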

The subscript l denotes the support point (l=1, 2, ..., L), k denotes the parameter (k=1, 2, ..., K), and t denotes the observation (t=1, 2, ..., T).
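The set-notation form translates directly into loops: the objective sums $p_{lk} \ln(p_{lk}/q_{lk})$ over parameters and $w_{lt} \ln(w_{lt}/u_{lt})$ over observations, and each data constraint compares $y_t$ with $\sum_k \sum_l X_{tk} z_{lk} p_{lk} + \sum_l v_{lt} w_{lt}$. The Python sketch below evaluates the objective and the constraint residuals for candidate weights; the function names and data are hypothetical, and arrays are indexed as `p[k][l]` for $p_{lk}$ (likewise for `q`, `z`, `w`, `u`, `v`).

```python
import math


def gce_objective(p, q, w, u):
    """Sum over l,k of p_lk ln(p_lk/q_lk) plus sum over l,t of w_lt ln(w_lt/u_lt).

    p[k][l], q[k][l]: posterior and prior weights on support point l of parameter k.
    w[t][l], u[t][l]: posterior and prior weights on support point l of error t.
    """
    total = 0.0
    for post, pri in list(zip(p, q)) + list(zip(w, u)):
        total += sum(a * math.log(a / b) for a, b in zip(post, pri) if a > 0.0)
    return total


def gce_residuals(y, X, z, v, p, w):
    """Residuals of the data constraints and of the adding-up constraints."""
    K = len(p)
    data = []
    for t, y_t in enumerate(y):
        fitted = sum(X[t][k] * z[k][l] * p[k][l] for k in range(K) for l in range(len(p[k])))
        fitted += sum(v_lt * w_lt for v_lt, w_lt in zip(v[t], w[t]))
        data.append(y_t - fitted)
    adding_up = [1.0 - sum(p_k) for p_k in p] + [1.0 - sum(w_t) for w_t in w]
    return data, adding_up


# Illustrative data: K = 1 parameter, T = 2 observations, L = 3 support points.
z = [[-10.0, 0.0, 10.0]]
v = [[-1.0, 0.0, 1.0], [-1.0, 0.0, 1.0]]
q = [[1 / 3] * 3]
u = [[1 / 3] * 3, [1 / 3] * 3]
X = [[1.0], [2.0]]
p = [[0.2, 0.3, 0.5]]                   # implies beta = 3
w = [[0.3, 0.4, 0.3], [0.5, 0.3, 0.2]]  # implies e = (0, -0.3)
y = [3.0, 5.7]                          # chosen so that y = X beta + e holds

print(gce_objective(p, q, w, u))        # positive: the posteriors differ from the priors
print(gce_residuals(y, X, z, v, p, w))  # residuals are zero up to rounding
```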

The objective function is strictly convex and the constraints are linear; therefore, there is a unique global minimum for the problem (Golan, Judge, and Miller, 1996). The optimal estimated weights, $p$ and $w$, and the prior supports, $Z$ and $V$, can be used to form the point estimates of the unknown parameters, $\beta$, and the unknown errors, $e$, by using

$$
\beta = Z p =
\left[ \begin{array}{cccccccccc}
z_{11} & \cdots & z_{L1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & z_{12} & \cdots & z_{L2} & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & \ddots & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & z_{1K} & \cdots & z_{LK}
\end{array} \right]
\left[ \begin{array}{c}
p_{11} \\ \vdots \\ p_{L1} \\ p_{12} \\ \vdots \\ p_{L2} \\ \vdots \\ p_{1K} \\ \vdots \\ p_{LK}
\end{array} \right]
$$

$$
e = V w =
\left[ \begin{array}{cccccccccc}
v_{11} & \cdots & v_{L1} & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & v_{12} & \cdots & v_{L2} & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & \ddots & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & v_{1T} & \cdots & v_{LT}
\end{array} \right]
\left[ \begin{array}{c}
w_{11} \\ \vdots \\ w_{L1} \\ w_{12} \\ \vdots \\ w_{L2} \\ \vdots \\ w_{1T} \\ \vdots \\ w_{LT}
\end{array} \right]
$$
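Because $Z$ is block diagonal, $\beta = Zp$ reduces to $\beta_k = \sum_l z_{lk} p_{lk}$, a weighted average of the support points for parameter $k$ (the weights sum to one); $e = Vw$ works the same way, with one block per observation. The Python sketch below, with hypothetical helper names and illustrative numbers, builds $Z$ explicitly and checks the matrix product against the direct weighted sums.

```python
def block_support_matrix(supports):
    """Build a block-diagonal support matrix such as Z or V.

    supports[k] lists the L support points (z_1k, ..., z_Lk) of block k. Row k
    of the result holds those points in columns k*L ... k*L + L - 1 and zeros
    elsewhere.
    """
    n_blocks, L = len(supports), len(supports[0])
    matrix = [[0.0] * (n_blocks * L) for _ in range(n_blocks)]
    for k, points in enumerate(supports):
        for l, point in enumerate(points):
            matrix[k][k * L + l] = point
    return matrix


def matvec(m, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in m]


# Illustrative supports and posterior weights: K = 2 parameters, L = 3 points.
z = [[-10.0, 0.0, 10.0], [-5.0, 0.0, 5.0]]
p = [[0.2, 0.3, 0.5], [0.6, 0.3, 0.1]]

Z = block_support_matrix(z)
p_stacked = [weight for block in p for weight in block]  # (p_11, ..., p_L1, p_12, ..., p_LK)

beta = matvec(Z, p_stacked)
# Equivalent without forming Z: beta_k = sum_l z_lk * p_lk
beta_direct = [sum(zl * pl for zl, pl in zip(zk, pk)) for zk, pk in zip(z, p)]
print(beta, beta_direct)
```

Forming $Z$ explicitly is only for illustration; the direct sums avoid storing the zeros.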

This constrained estimation problem can be solved either directly (primal) or by using the dual form. Either way, it is prudent to factor out one probability for each parameter and each observation by writing it as one minus the sum of the other probabilities. This factoring significantly reduces the computational complexity. If the primal formalization is used and two support points are used for the parameters and the errors, the resulting GME problem is $O((K+T)^3)$. For the dual form, the problem is $O(T^3)$. Therefore, for large data sets, GME-M should be used instead of GME.
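The dual approach can be sketched in Python. Introducing one Lagrange multiplier $\lambda_t$ per data constraint and solving the first-order conditions gives closed forms $p_{lk} \propto q_{lk} \exp(z_{lk} (X'\lambda)_k)$ and $w_{lt} \propto u_{lt} \exp(v_{lt} \lambda_t)$, normalized within each parameter and observation; $\lambda$ then maximizes the concave dual $g(\lambda) = y'\lambda - \sum_k \ln \Omega_k(\lambda) - \sum_t \ln \Psi_t(\lambda)$, where $\Omega_k$ and $\Psi_t$ are those normalizing sums. This is a textbook derivation written out for illustration, not necessarily the algorithm PROC ENTROPY uses; the function names, the damped Newton iteration, and the sample data are assumptions. Each Newton step solves a $T \times T$ linear system, so the cost of this sketch is driven by the number of observations.

```python
import math


def tilted_weights(prior, support, s):
    """Return weights prior_l * exp(support_l * s) / norm, and log(norm)."""
    a = [math.log(pr) + pt * s for pr, pt in zip(prior, support)]
    m = max(a)  # shift by the largest exponent for numerical stability
    ex = [math.exp(a_l - m) for a_l in a]
    total = sum(ex)
    return [e_l / total for e_l in ex], m + math.log(total)


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b_i] for row, b_i in zip(A, b)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x


def gce_dual(y, X, z, q, v, u, tol=1e-10, max_iter=100):
    """Maximize g(lam) = y'lam - sum_k ln Omega_k - sum_t ln Psi_t by damped Newton."""
    T, K = len(y), len(z)

    def evaluate(lam):
        g = sum(y_t * l_t for y_t, l_t in zip(y, lam))
        p, beta, var_b = [], [], []
        for k in range(K):
            s_k = sum(X[t][k] * lam[t] for t in range(T))  # (X' lam)_k
            p_k, log_omega = tilted_weights(q[k], z[k], s_k)
            mean = sum(a * b for a, b in zip(p_k, z[k]))
            g -= log_omega
            p.append(p_k)
            beta.append(mean)
            var_b.append(sum(a * b * b for a, b in zip(p_k, z[k])) - mean * mean)
        w, e, var_e = [], [], []
        for t in range(T):
            w_t, log_psi = tilted_weights(u[t], v[t], lam[t])
            mean = sum(a * b for a, b in zip(w_t, v[t]))
            g -= log_psi
            w.append(w_t)
            e.append(mean)
            var_e.append(sum(a * b * b for a, b in zip(w_t, v[t])) - mean * mean)
        return g, p, w, beta, e, var_b, var_e

    lam = [0.0] * T
    g, p, w, beta, e, var_b, var_e = evaluate(lam)
    for _ in range(max_iter):
        # Gradient of g is the data-constraint residual y - X beta - e.
        grad = [y[t] - sum(X[t][k] * beta[k] for k in range(K)) - e[t] for t in range(T)]
        if max(abs(g_t) for g_t in grad) < tol:
            break
        # Negative Hessian X diag(var_b) X' + diag(var_e): a T x T system per step.
        H = [[sum(X[i][k] * var_b[k] * X[j][k] for k in range(K)) + (var_e[i] if i == j else 0.0)
              for j in range(T)] for i in range(T)]
        step = solve(H, grad)
        size = 1.0  # halve the step until the concave dual does not decrease
        while True:
            candidate = [l_t + size * d_t for l_t, d_t in zip(lam, step)]
            result = evaluate(candidate)
            if result[0] >= g or size < 1e-12:
                break
            size *= 0.5
        lam = candidate
        g, p, w, beta, e, var_b, var_e = result
    return beta, e, p, w


# Illustrative data (an assumption): intercept and slope, T = 5, L = 3.
x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [1.1, 2.9, 5.2, 7.1, 8.8]
X = [[1.0, x_t] for x_t in x]
z = [[-20.0, 0.0, 20.0]] * 2
q = [[1 / 3] * 3] * 2
v = [[-3.0, 0.0, 3.0]] * 5
u = [[1 / 3] * 3] * 5

beta, e, p, w = gce_dual(y, X, z, q, v, u)
print(beta)
```

At the optimum the data constraints hold, so each `y[t]` matches the fitted `X β + e` up to the tolerance; the Newton system stays $T \times T$ however many support points are used.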