The QLIM Procedure

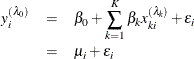

If the variance of the regression disturbance, $\epsilon_i$, is heteroscedastic, the variance can be specified as a function of variables:

$$E(\epsilon_i^2) = \sigma_i^2 = f(\mathbf{z}_i'\gamma)$$

The following table shows various functional forms of heteroscedasticity and the corresponding options to request each model.

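| No. | Model | Options |
|---|---|---|
| 1 | $f(\mathbf{z}_i'\gamma) = \sigma^2 \left(1 + \exp(\mathbf{z}_i'\gamma)\right)$ | LINK=EXP (default) |
| 2 | $f(\mathbf{z}_i'\gamma) = \sigma^2 \exp(\mathbf{z}_i'\gamma)$ | LINK=EXP NOCONST |
| 3 | $f(\mathbf{z}_i'\gamma) = \sigma^2 \left(1 + \sum_{l=1}^{L} \gamma_l z_{li}\right)$ | LINK=LINEAR |
| 4 | $f(\mathbf{z}_i'\gamma) = \sigma^2 \left(1 + \left(\sum_{l=1}^{L} \gamma_l z_{li}\right)^2\right)$ | LINK=LINEAR SQUARE |
| 5 | $f(\mathbf{z}_i'\gamma) = \sigma^2 \sum_{l=1}^{L} \gamma_l z_{li}$ | LINK=LINEAR NOCONST |
| 6 | $f(\mathbf{z}_i'\gamma) = \sigma^2 \left(\sum_{l=1}^{L} \gamma_l z_{li}\right)^2$ | LINK=LINEAR SQUARE NOCONST |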

For discrete choice models, $\sigma^2$ is normalized ($\sigma^2 = 1$) because this parameter is not identified. Note that in models 3 and 5, the variances of some observations can be negative. Although the QLIM procedure assigns a large penalty to move the optimization away from such a region, the optimization might fail to improve the objective function value and become locked in that region. Signs of this outcome include extremely small likelihood values or missing standard errors in the estimates. In models 2 and 6, variances are guaranteed to be greater than or equal to zero, but the variances of some observations can be very close to zero. In these scenarios, standard errors might be missing. Models 1 and 4 do not have such problems. Variances in these models are always positive and never close to zero.
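To see why the forms behave differently, the following sketch evaluates each one for a single observation. It is an illustration only and not part of PROC QLIM: the function `variance` and its arguments are hypothetical names, and the formulas assume variance forms $\sigma^2 (c + g(\mathbf{z}_i'\gamma))$, where $c = 1$ unless NOCONST is specified, $g = \exp$ for LINK=EXP, and $g$ is the linear index (or its square with SQUARE) for LINK=LINEAR.

```python
import math


def variance(link, z, gamma, sigma2=1.0, square=False, noconst=False):
    """Illustrative variance f(z'gamma) for one observation.

    Hypothetical helper: the argument names mirror the LINK=, SQUARE, and
    NOCONST options, but this is not the QLIM implementation.
    """
    index = sum(g * zl for g, zl in zip(gamma, z))  # z'gamma
    if link == "exp":
        core = math.exp(index)  # 'square' is ignored for the exp link here
    elif link == "linear":
        core = index ** 2 if square else index
    else:
        raise ValueError(f"unknown link: {link}")
    const = 0.0 if noconst else 1.0
    return sigma2 * (const + core)


# Made-up values chosen so that z'gamma = -2.0
z, gamma = [1.0, 2.0], [-3.0, 0.5]

forms = {
    1: dict(link="exp"),
    2: dict(link="exp", noconst=True),
    3: dict(link="linear"),
    4: dict(link="linear", square=True),
    5: dict(link="linear", noconst=True),
    6: dict(link="linear", square=True, noconst=True),
}

for number, options in forms.items():
    value = variance(z=z, gamma=gamma, **options)
    note = "negative variance" if value < 0 else "ok"
    print(f"model {number}: variance = {value:.4f} ({note})")
```

With a negative index, the linear forms without SQUARE (models 3 and 5) return negative values, while the forms that add the constant to a nonnegative term (models 1 and 4) stay at or above $\sigma^2$.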

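The heteroscedastic regression model is estimated using the following log-likelihood function:

$$\ell = -\frac{N}{2}\ln(2\pi) - \frac{1}{2}\sum_{i=1}^{N}\ln(\sigma_i^2) - \frac{1}{2}\sum_{i=1}^{N}\left(\frac{e_i}{\sigma_i}\right)^2$$

where $e_i = y_i - \mathbf{x}_i'\beta$.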
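As a rough illustration, the log-likelihood of a linear regression with observation-specific variances can be evaluated as below. The sketch assumes normally distributed disturbances with residuals $e_i = y_i - \mathbf{x}_i'\beta$ and variances $\sigma_i^2$ supplied by the caller; `hetero_loglik` is a hypothetical name, and the code says nothing about how PROC QLIM carries out the optimization.

```python
import math


def hetero_loglik(y, X, beta, sigma2):
    """Gaussian log-likelihood with one variance per observation.

    y      : list of responses
    X      : list of regressor rows (include a 1.0 for the intercept)
    beta   : list of coefficients
    sigma2 : list of variances, one per observation (must be positive)
    """
    n = len(y)
    ll = -0.5 * n * math.log(2.0 * math.pi)
    for yi, xi, s2 in zip(y, X, sigma2):
        if s2 <= 0.0:
            raise ValueError("each variance must be positive")
        e = yi - sum(b * x for b, x in zip(beta, xi))  # residual e_i
        ll -= 0.5 * math.log(s2) + 0.5 * e * e / s2
    return ll


# Made-up data: two observations, intercept plus one regressor
y = [1.0, 2.5]
X = [[1.0, 0.0], [1.0, 1.0]]
print(hetero_loglik(y, X, beta=[1.0, 1.0], sigma2=[1.0, 4.0]))
```

The check for nonpositive variances reflects the point made earlier: functional forms that can return zero or negative variances leave the log-likelihood undefined for the affected observations.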

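The Box-Cox transformation on $x$ is defined as

$$x^{(\lambda)} = \begin{cases} \dfrac{x^{\lambda} - 1}{\lambda} & \text{if } \lambda \neq 0 \\ \ln(x) & \text{if } \lambda = 0 \end{cases}$$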
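The transformation can be sketched in a few lines of Python. This assumes the standard Box-Cox definition, $(x^{\lambda} - 1)/\lambda$ for $\lambda \neq 0$ and $\ln(x)$ for $\lambda = 0$; the function name `box_cox` is an illustrative choice, not a QLIM function.

```python
import math


def box_cox(x, lam):
    """Box-Cox transform of a single positive value x with parameter lam."""
    if x <= 0.0:
        raise ValueError("x must be positive")
    if lam == 0.0:
        return math.log(x)  # limiting case as lam -> 0
    return (x ** lam - 1.0) / lam


# Small lam values approach the logarithm; large lam values inflate the scale
for lam in (0.0, 0.001, 0.5, 1.0, 3.0):
    print(f"lam = {lam}: box_cox(1000.0, lam) = {box_cox(1000.0, lam):.6g}")
```

The last line of the loop shows how quickly the transformed magnitude grows once the parameter exceeds 1 in absolute value, which is one source of numerical trouble in fitting.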

The Box-Cox regression model with heteroscedasticity is written as

$$y_i^{(\lambda_0)} = \beta_0 + \sum_{k=1}^{K}\beta_k x_{ki}^{(\lambda_k)} + \epsilon_i$$

where $\epsilon_i \sim N(0, \sigma_i^2)$ and the variables being transformed must be positive. In practice, too many transformation parameters cause numerical problems in model fitting. It is common to have the same Box-Cox transformation performed on all the variables, that is, $\lambda_0 = \lambda_1 = \cdots = \lambda_K$. The magnitude of the transformed variables must be in a tolerable range if the corresponding transformation parameters are $|\lambda| > 1$.

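The log-likelihood function of the Box-Cox regression model is written as

$$\ell = -\frac{N}{2}\ln(2\pi) - \frac{1}{2}\sum_{i=1}^{N}\ln(\sigma_i^2) - \frac{1}{2}\sum_{i=1}^{N}\left(\frac{e_i}{\sigma_i}\right)^2 + (\lambda_0 - 1)\sum_{i=1}^{N}\ln(y_i)$$

where $e_i = y_i^{(\lambda_0)} - \beta_0 - \sum_{k=1}^{K}\beta_k x_{ki}^{(\lambda_k)}$.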
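The sketch below evaluates a Box-Cox regression log-likelihood of this kind for given parameter values. It assumes normal disturbances, residuals formed from the transformed response and regressors, and the Jacobian term $(\lambda_0 - 1)\sum_i \ln(y_i)$ that arises from transforming $y_i$; the names `box_cox` and `boxcox_loglik` are hypothetical, and the code is not the procedure's own implementation.

```python
import math


def box_cox(v, lam):
    """Box-Cox transform of a positive value v (log when lam is 0)."""
    if v <= 0.0:
        raise ValueError("values to be transformed must be positive")
    return math.log(v) if lam == 0.0 else (v ** lam - 1.0) / lam


def boxcox_loglik(y, X, beta0, beta, lam0, lams, sigma2):
    """Log-likelihood of a Box-Cox regression with per-observation variances.

    y, X    : responses and regressor rows (no intercept column in X)
    beta0   : intercept; beta : slopes, one per regressor
    lam0    : transformation parameter for y
    lams    : transformation parameters, one per regressor
    sigma2  : positive variances, one per observation
    """
    n = len(y)
    ll = -0.5 * n * math.log(2.0 * math.pi)
    for yi, xi, s2 in zip(y, X, sigma2):
        mean = beta0 + sum(b * box_cox(x, l) for b, x, l in zip(beta, xi, lams))
        e = box_cox(yi, lam0) - mean                 # residual on transformed scale
        ll -= 0.5 * math.log(s2) + 0.5 * e * e / s2  # normal density terms
        ll += (lam0 - 1.0) * math.log(yi)            # Jacobian of the y transform
    return ll


# Made-up data with a common transformation parameter for y and x
y = [2.0, 3.5, 5.0]
X = [[1.0], [2.0], [3.0]]
print(boxcox_loglik(y, X, beta0=0.5, beta=[1.0], lam0=0.5, lams=[0.5],
                    sigma2=[1.0, 1.0, 1.0]))
```

Without the Jacobian term, likelihoods computed under different values of $\lambda_0$ would not be comparable, because each value puts the response on a different scale.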

When the dependent variable is discrete, censored, or truncated, the Box-Cox transformation can be applied only to explanatory variables.