The PANEL Procedure


For an example of dynamic panel estimation with the GMM option, see The Cigarette Sales Data: Dynamic Panel Estimation with GMM.

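Consider the case of the following general model:

\[ y_{it} = \sum_{l=1}^{\mathrm{maxlag}} \rho_l\, y_{i(t-l)} + \sum_{k=1}^{K} \beta_k\, x_{itk} + \gamma_i + \alpha_t + \epsilon_{it} \]

The x variables can include ones that are correlated or uncorrelated with the individual effects, predetermined, or strictly exogenous.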

The variable $x_{itk}$ is defined as predetermined in the sense that $E(x_{itk}\,\epsilon_{is})$ can be nonzero for $s < t$ and is zero otherwise. The variable $x_{itk}$ is defined as strictly exogenous if $E(x_{itk}\,\epsilon_{is}) = 0$ for all $s$ and $t$. The $\gamma_i$ and $\alpha_t$ are cross-sectional and time series fixed effects, respectively. Arellano and Bond (1991) show that it is possible to define conditions that should result in a consistent estimator.

Consider the simple case of an autoregression in a panel setting (with only individual effects):

\[ y_{it} = \rho\, y_{i(t-1)} + \gamma_i + \epsilon_{it} \]

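Differencing the preceding relationship removes the individual effect $\gamma_i$ and results in

\[ \Delta y_{it} = \rho\,\Delta y_{i(t-1)} + \Delta\epsilon_{it} \]

where $\Delta y_{it} = y_{it} - y_{i(t-1)}$ and $\Delta\epsilon_{it} = \epsilon_{it} - \epsilon_{i(t-1)}$.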

Obviously, $\Delta y_{i(t-1)}$ is not exogenous: it contains $y_{i(t-1)}$, which depends on $\epsilon_{i(t-1)}$ and is therefore correlated with $\Delta\epsilon_{it}$. However, Arellano and Bond (1991) show that the dependent variable is still useful as an instrument if it is properly lagged. This instrument is specified with the DEPVAR(LEVEL) option.

For $t = 2$ (assuming that the first observation corresponds to time period 1), you have

\[ \Delta y_{i2} = \rho\,\Delta y_{i1} + \Delta\epsilon_{i2} \]

Using $y_{i1}$ as an instrument is not a good idea, because $y_{i1}$ depends on $\epsilon_{i1}$ and therefore $E(y_{i1}\,\Delta\epsilon_{i2}) \neq 0$. Because it is not possible to form a moment restriction, you discard this observation.

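For $t = 3$, you have

\[ \Delta y_{i3} = \rho\,\Delta y_{i2} + \Delta\epsilon_{i3} \]

Clearly, you have every reason to expect that $E(y_{i1}\,\Delta\epsilon_{i3}) = 0$, because $y_{i1}$ is determined before $\epsilon_{i2}$ and $\epsilon_{i3}$ occur. This condition forms one restriction.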

For $t = 4$, both $E(y_{i1}\,\Delta\epsilon_{i4}) = 0$ and $E(y_{i2}\,\Delta\epsilon_{i4}) = 0$ must hold.

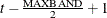

Proceeding in that fashion, you have the following matrix of instruments,

![\[ \mb{Z} _\mi {i} = \left( \begin{array}{*{10}{c}} \mi{y} _\mi {i1} & 0 & 0 & \cdots & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & \mi{y} _\mi {i1} & \mi{y} _\mi {i2} & 0 & \cdots & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & \mi{y} _\mi {i1} & \mi{y} _\mi {i2} & \mi{y} _\mi {i3} & 0 & \cdots & 0 & 0 \\ \vdots & \vdots & \vdots & & & & \vdots & & \vdots & \vdots \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & \mi{y} _\mi {i1} & \cdots & \mi{y} _\mi {i(T-2)} \\ \end{array} \right) \]](images/etsug_panel0428.png)
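The following minimal Python sketch builds $\mathbf{Z}_i$ for one cross section from its levels $y_{i1}, \ldots, y_{iT}$, assuming a balanced series with no missing values. The function name `ab_instrument_matrix` is hypothetical and is not part of PROC PANEL; it only illustrates the block-diagonal layout, in which the row for period $t$ holds $y_{i1}, \ldots, y_{i(t-2)}$ in its own block of columns.

```python
def ab_instrument_matrix(y):
    """Build the Arellano-Bond instrument matrix Z_i for one cross section.

    y holds the levels y_i1, ..., y_iT (Python index 0 is period 1).
    Row r (r = 0, ..., T-3) belongs to the differenced equation for
    period t = r + 3 and holds y_i1, ..., y_i(t-2) in its own column block.
    """
    T = len(y)
    n_cols = (T - 1) * (T - 2) // 2  # total number of instrument columns
    Z = []
    col = 0  # first column of the current row's block
    for t in range(3, T + 1):
        row = [0.0] * n_cols
        lags = list(y[: t - 2])  # y_i1, ..., y_i(t-2)
        row[col : col + len(lags)] = lags
        col += len(lags)
        Z.append(row)
    return Z


if __name__ == "__main__":
    for row in ab_instrument_matrix([1.0, 2.0, 3.0, 4.0, 5.0]):
        print(row)
```

With $T = 5$ there are three usable differenced equations and $1 + 2 + 3 = 6$ instrument columns.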

Using the instrument matrix, you form the weighting matrix ![]() as

as

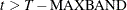

The initial weighting matrix is

![\[ \mb{H} _\mi {i} = \left( \begin{array}{*{10}{r}} 2 & -1 & 0 & \cdots & 0 & 0 & 0 & 0 & 0 & 0 \\ -1 & 2 & -1 & 0 & \cdots & 0 & 0 & 0 & 0 & 0 \\ 0 & -1 & 2 & -1 & 0 & \cdots & 0 & 0 & 0 & 0 \\ \vdots & \vdots & \vdots & & & & \vdots & & \vdots & \vdots \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & -1 & 2 & -1 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & -1 & 2 \\ \end{array} \right) \]](images/etsug_panel0431.png)

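Note that the $\mathbf{H}_i$ matrix has at most $T-2$ rows and columns. The origins of the initial weighting matrix are the expected error covariances. If the $\epsilon_{it}$ are serially uncorrelated with common variance $\sigma^2_\epsilon$, then on the diagonal

\[ E(\Delta\epsilon_{it}\,\Delta\epsilon_{it}) = E\left[(\epsilon_{it} - \epsilon_{i(t-1)})^2\right] = 2\sigma^2_\epsilon \]

and on the first off-diagonals

\[ E(\Delta\epsilon_{it}\,\Delta\epsilon_{i(t-1)}) = E\left[(\epsilon_{it} - \epsilon_{i(t-1)})(\epsilon_{i(t-1)} - \epsilon_{i(t-2)})\right] = -\sigma^2_\epsilon \]

All other covariances are zero, so $E(\Delta\boldsymbol{\epsilon}_i\,\Delta\boldsymbol{\epsilon}_i') = \sigma^2_\epsilon\,\mathbf{H}_i$.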
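As a quick check of where $\mathbf{H}_i$ comes from, the sketch below writes the differenced errors for periods $3, \ldots, T$ as $\mathbf{D}\boldsymbol{\epsilon}_i$ for a differencing matrix $\mathbf{D}$, so that their covariance is $\sigma^2_\epsilon\mathbf{D}\mathbf{D}'$, and confirms that $\mathbf{D}\mathbf{D}'$ is the tridiagonal matrix with 2 on the diagonal and -1 beside it. The helper names are illustrative only, and the check assumes serially uncorrelated, homoscedastic $\epsilon_{it}$.

```python
def differencing_matrix(n):
    """Return the n x (n + 1) matrix D with (D e)_r = e_(r+1) - e_r."""
    D = [[0] * (n + 1) for _ in range(n)]
    for r in range(n):
        D[r][r] = -1
        D[r][r + 1] = 1
    return D


def matmul_transpose(A):
    """Return A A' for a list-of-lists matrix A."""
    return [[sum(a * b for a, b in zip(ri, rj)) for rj in A] for ri in A]


def initial_h(T):
    """Initial H_i of size (T-2) x (T-2): 2 on the diagonal, -1 next to it."""
    n = T - 2
    return [[2 if i == j else -1 if abs(i - j) == 1 else 0 for j in range(n)]
            for i in range(n)]


if __name__ == "__main__":
    T = 6
    # Differenced errors for t = 3..T involve eps_i2, ..., eps_iT.
    print(matmul_transpose(differencing_matrix(T - 2)) == initial_h(T))
```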

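If you denote the vector of lagged differences (in the series $y_{it}$) as $\Delta\mathbf{y}_{i,-1}$ and the differenced dependent variable as $\Delta\mathbf{y}_{i}$, then the optimal GMM estimator is

\[ \hat{\rho} = \left[ \left( \sum_{i} \Delta\mathbf{y}_{i,-1}'\mathbf{Z}_i \right) \mathbf{A}_N \left( \sum_{i} \mathbf{Z}_i'\Delta\mathbf{y}_{i,-1} \right) \right]^{-1} \left( \sum_{i} \Delta\mathbf{y}_{i,-1}'\mathbf{Z}_i \right) \mathbf{A}_N \left( \sum_{i} \mathbf{Z}_i'\Delta\mathbf{y}_{i} \right) \]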
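To make the one-step estimator concrete, here is a self-contained Python sketch that simulates a hypothetical balanced panel from $y_{it} = \rho y_{i(t-1)} + \gamma_i + \epsilon_{it}$ with standard normal $\gamma_i$ and $\epsilon_{it}$, and then computes $\hat{\rho}$ from the formula above using only the standard library. The function names, the 50-period burn-in, and the simulation settings are assumptions made for illustration; this is not the PROC PANEL implementation. Because $\mathbf{A}_N$ is $N$ times the inverse of $\sum_i \mathbf{Z}_i'\mathbf{H}_i\mathbf{Z}_i$, the factor $N$ cancels, so the sketch solves a linear system with that sum instead of forming an inverse.

```python
import random


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def instruments(y):
    """Z_i: the row for period t = 3..T holds y_i1..y_i(t-2) in its own block."""
    T = len(y)
    L = (T - 1) * (T - 2) // 2
    Z, col = [], 0
    for t in range(3, T + 1):
        row = [0.0] * L
        row[col:col + t - 2] = y[:t - 2]
        col += t - 2
        Z.append(row)
    return Z


def one_step_rho(panel):
    """One-step Arellano-Bond estimate of rho in dy_it = rho * dy_i(t-1) + de_it."""
    T = len(panel[0])
    n = T - 2                        # differenced equations per individual
    L = (T - 1) * (T - 2) // 2       # number of instruments
    H = [[2.0 if i == j else -1.0 if abs(i - j) == 1 else 0.0 for j in range(n)]
         for i in range(n)]
    W = [[0.0] * L for _ in range(L)]  # sum_i Z_i' H Z_i
    g = [0.0] * L                      # sum_i Z_i' dy_(i,-1)
    h = [0.0] * L                      # sum_i Z_i' dy_i
    for y in panel:
        Z = instruments(y)
        dy = [y[t] - y[t - 1] for t in range(1, T)]  # dy_i2, ..., dy_iT
        x, d = dy[:-1], dy[1:]                        # regressor, dependent (t = 3..T)
        HZ = [[sum(H[r][s] * Z[s][c] for s in range(n)) for c in range(L)]
              for r in range(n)]
        for a in range(L):
            for b in range(L):
                W[a][b] += sum(Z[r][a] * HZ[r][b] for r in range(n))
            g[a] += sum(Z[r][a] * x[r] for r in range(n))
            h[a] += sum(Z[r][a] * d[r] for r in range(n))
    Wg = solve(W, g)  # W^{-1} g; W is symmetric, so g' W^{-1} h = (W^{-1} g)' h
    return sum(u * v for u, v in zip(Wg, h)) / sum(u * v for u, v in zip(Wg, g))


def simulate_panel(n_ind, T, rho, seed=1):
    """Hypothetical data: y_it = rho*y_i(t-1) + gamma_i + eps_it after a 50-period burn-in."""
    rng = random.Random(seed)
    panel = []
    for _ in range(n_ind):
        gamma = rng.gauss(0.0, 1.0)
        y, series = gamma / (1.0 - rho), []
        for t in range(T + 50):
            y = rho * y + gamma + rng.gauss(0.0, 1.0)
            if t >= 50:
                series.append(y)
        panel.append(series)
    return panel


if __name__ == "__main__":
    print(one_step_rho(simulate_panel(1000, 6, 0.5)))
```

With 1,000 simulated individuals and six periods, the estimate should land near the true value of 0.5; with a much smaller N, expect noticeably more sampling variation.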

Using the estimate $\hat{\rho}$, you can obtain estimates of the errors, $\hat{\epsilon}_{it}$, or of their differences, $\Delta\hat{\epsilon}_{it} = \Delta y_{it} - \hat{\rho}\,\Delta y_{i(t-1)}$. From the errors, the variance is calculated as

where $M$ is the total number of observations. With differenced equations, because you lose the first two observations, $M = N(T-2)$ for a balanced panel.

Furthermore, you can calculate the variance of the parameter as,

Alternatively, you can view the initial estimate of $\rho$ as a first step. That is, by using $\hat{\rho}$, you can improve the estimate of the weighting matrix, $\mathbf{A}_N$.

Instead of imposing the structure of the weighting, you form the $\mathbf{H}_i$ matrix from the outer product of the first-step differenced residuals:

\[ \mathbf{H}_i = \Delta\hat{\boldsymbol{\epsilon}}_i\,\Delta\hat{\boldsymbol{\epsilon}}_i' \]

You then complete the calculation as previously shown. The PROC PANEL option GMM2 specifies this estimation.
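A minimal sketch of the two-step weighting, under the assumption that the first-step differenced residuals $\Delta\hat{\boldsymbol{\epsilon}}_i$ and the instrument matrices $\mathbf{Z}_i$ are already available as Python lists. The function `weight_inverse_from_residuals` (a hypothetical name) returns $\sum_i \mathbf{Z}_i'\mathbf{H}_i\mathbf{Z}_i$ with $\mathbf{H}_i = \Delta\hat{\boldsymbol{\epsilon}}_i\Delta\hat{\boldsymbol{\epsilon}}_i'$; inverting it and multiplying by $N$ gives the updated $\mathbf{A}_N$.

```python
def weight_inverse_from_residuals(Z_list, resid_list):
    """Return sum_i Z_i' H_i Z_i with H_i = e_i e_i' (first-step residual outer product).

    Because Z_i' (e_i e_i') Z_i = (Z_i' e_i)(Z_i' e_i)', each cross section
    adds a rank-one term, so H_i never has to be formed explicitly.
    """
    L = len(Z_list[0][0])
    W = [[0.0] * L for _ in range(L)]
    for Z, e in zip(Z_list, resid_list):
        ze = [sum(Z[r][c] * e[r] for r in range(len(e))) for c in range(L)]  # Z_i' e_i
        for a in range(L):
            for b in range(L):
                W[a][b] += ze[a] * ze[b]
    return W


if __name__ == "__main__":
    Z1 = [[1.0, 0.0, 0.0], [0.0, 1.0, 2.0]]  # hypothetical instrument matrix
    e1 = [0.5, -1.0]                          # hypothetical first-step residuals
    print(weight_inverse_from_residuals([Z1], [e1]))
```

The rank-one update is a design choice for the sketch: it costs one pass over each $\mathbf{Z}_i$ instead of two matrix products with an explicit $\mathbf{H}_i$.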

The case of multiple right-hand-side variables illustrates more clearly the power of the approach of Arellano and Bond (1991) and Arellano and Bover (1995).

In the general case, you have:

It is clear that lags of the dependent variable are not exogenous and are correlated with the fixed effects. However, the independent variables can fall into one of several categories. An independent variable can be correlated (with the individual effects) and exogenous, uncorrelated and exogenous, correlated and predetermined, or uncorrelated and predetermined. The category to which an independent variable belongs determines when, or whether, it becomes a suitable instrument. Note, however, that neither PROC PANEL nor Arellano and Bond require that a regressor be an instrument or that an instrument be a regressor.

First, suppose that the variables are all correlated with the individual effects ![]() . Consider the question of exogenous or predetermined. An exogenous variable is not correlated with the error term

. Consider the question of exogenous or predetermined. An exogenous variable is not correlated with the error term ![]() in the differenced equations. Therefore, all observations (on the exogenous variable) become valid instruments at all time

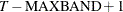

periods. If the model has only one instrument and it happens to be exogenous, then the optimal instrument matrix looks like,

in the differenced equations. Therefore, all observations (on the exogenous variable) become valid instruments at all time

periods. If the model has only one instrument and it happens to be exogenous, then the optimal instrument matrix looks like,

![\[ \mb{Z} _\mi {i} = \left( \begin{array}{*{5}{c}} \mi{x} _\mi {i1} \cdots \mi{x} _\mi {iT} & 0 & 0 & 0 & 0 \\ 0 & \mi{x} _\mi {i1} \cdots \mi{x} _\mi {iT} & 0 & 0 & 0 \\ 0 & 0 & \mi{x} _\mi {i1} \cdots \mi{x} _\mi {iT} & 0 & 0 \\ \vdots & \vdots & \vdots & \vdots & \vdots \\ 0 & 0 & 0 & 0 & \mi{x} _\mi {i1} \cdots \mi{x} _\mi {iT} \\ \end{array} \right) \]](images/etsug_panel0450.png)

The situation for predetermined variables is a little more difficult. A predetermined variable is one whose future realizations can be correlated with current shocks to the dependent variable. With this understanding, all realizations up to period $t-1$ are admissible as instruments for the differenced equation at period $t$, because $x_{is}$ is uncorrelated with both $\epsilon_{it}$ and $\epsilon_{i(t-1)}$ when $s \le t-1$. In other words, you have

![\[ \mb{Z} _\mi {i} = \left( \begin{array}{*{5}{c}} \mi{x} _\mi {i1} & 0 & 0 & 0 & 0 \\ 0 & \mi{x} _\mi {i1} \mi{x} _\mi {i2} & 0 & 0 & 0 \\ 0 & 0 & \mi{x} _\mi {i1} \cdots \mi{x} _\mi {i3} & 0 & 0 \\ \vdots & \vdots & \vdots & \vdots & \vdots \\ 0 & 0 & 0 & 0 & \mi{x} _\mi {i1} \cdots \mi{x} _\mi {i(T-1)} \\ \end{array} \right) \]](images/etsug_panel0451.png)

When the data contain a mix of endogenous, exogenous, and predetermined variables, the instrument matrix is formed by combining the three. For example, the third observation would have one observation on the dependent variable as an instrument, three observations on the predetermined variables as instruments, and all observations on the exogenous variables.

Now consider variables that are not correlated with the individual effects $\gamma_i$. They provide yet another set of moment restrictions. For an uncorrelated variable, the level of the variable is not affected by the individual-specific effect. You write the preceding general model as

\[ y_{it} = \sum_{l=1}^{\mathrm{maxlag}} \rho_l\, y_{i(t-l)} + \sum_{k=1}^{K} \beta_k\, x_{itk} + \alpha_t + \mu_{it} \]

where $\mu_{it} = \gamma_i + \epsilon_{it}$.

Because the variables are uncorrelated with ![]() and thus uncorrelated with the error term

and thus uncorrelated with the error term ![]() in the level equations, you can use the difference and level equations to perform a system estimation. That is, the uncorrelated

variables imply moment restrictions on the level equations. Given the previously used restrictions for the equations in first

differences, there are T extra restrictions. For predetermined variables, Arellano and Bond (1991) use the extra restrictions

in the level equations, you can use the difference and level equations to perform a system estimation. That is, the uncorrelated

variables imply moment restrictions on the level equations. Given the previously used restrictions for the equations in first

differences, there are T extra restrictions. For predetermined variables, Arellano and Bond (1991) use the extra restrictions ![]() and

and ![]() for

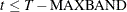

for ![]() . The instrument matrix becomes

. The instrument matrix becomes

![\[ \mb{Z} _\mi {i} ^{*} = \left( \begin{array}{*{9}{c}} \mb{Z} _\mi {i} & 0 & 0 & 0 & \cdots & 0 \\ 0 & x^\mi {p} _\mi {1i1} & x^\mi {p} _\mi {1i2} & 0 & \cdots & 0\\ 0 & 0 & 0& x^\mi {p} _\mi {1i3} & \cdots & 0\\ \vdots & \vdots & \vdots & \vdots & \vdots & \vdots \\ 0 & 0 & 0& 0& \cdots & x^\mi {p} _\mi {1iT} \\ \end{array} \right) \]](images/etsug_panel0459.png)

For exogenous variables $x^{e}_{it}$, Arellano and Bond (1991) use ![](). PROC PANEL uses the same restrictions as for the predetermined variables, that is, $E(x^{e}_{i1}\,\mu_{i2}) = 0$ and $E(x^{e}_{it}\,\mu_{it}) = 0$ for $t = 2, \ldots, T$. If you denote by an asterisk the new instrument matrix that uses the full complement of available instruments, and if both $x^{p}$ and $x^{e}$ are uncorrelated, then you have

![\[ \mb{Z} _\mi {i} ^{*} = \left( \begin{array}{*{9}{c}} \mb{Z} _\mi {i} & 0 & 0 & 0 & 0 & 0 & 0& 0 & 0 \\ 0 & x^\mi {p} _\mi {i1} & x^\mi {e} _\mi {i1} & x^\mi {p} _\mi {i2} & x^\mi {e} _\mi {i2}& 0 & 0 & 0 & 0\\ 0 & 0 & 0 & 0 & 0& x^\mi {p} _\mi {i3} & x^\mi {e} _\mi {i3}& 0 & 0\\ \vdots & \vdots & \vdots & \vdots & \vdots & \vdots & \vdots & \vdots & \vdots \\ 0 & 0 & 0 & 0 & 0 & 0& \cdots & x^\mi {p} _\mi {iT} & x^\mi {e} _\mi {iT} \\ \end{array} \right) \]](images/etsug_panel0466.png)

When the lagged dependent variable is included as an explanatory variable (as in dynamic panel data models), Blundell and Bond (1998) suggest a system GMM estimator that uses $T-2$ extra moment restrictions, which use the lagged differences as instruments for the level equations:

\[ E\left(\Delta y_{i(t-1)}\,\mu_{it}\right) = 0, \qquad t = 3, \ldots, T \]

This additional set of moment conditions is specified by the DEPVAR(DIFF) option. The corresponding instrument matrix is

![\[ \mb{Z} _\mi {li} ^{y} = \left( \begin{array}{*{9}{c}} 0 & 0 & 0 & \cdots & 0\\ 0 & \Delta y_{i2} & 0 & \cdots & 0\\ 0 & 0 & \Delta y_{i3} & \cdots & 0\\ \vdots & \vdots & \vdots & \vdots & \vdots \\ 0 & 0 & 0 & \cdots & \Delta y_{i(T-1)} \\ \end{array} \right) \]](images/etsug_panel0469.png)

Blundell and Bond (1998) argue that the system GMM estimator that uses these extra conditions significantly increases efficiency, especially when the dependent variable is strongly serially correlated.
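The number of moment conditions grows quickly with T. The small sketch below counts only the conditions contributed by the dependent variable: $\sum_{t=3}^{T}(t-2) = (T-1)(T-2)/2$ difference-equation conditions from the Arellano-Bond instruments, plus the $T-2$ level-equation conditions added by Blundell and Bond. The function names are hypothetical, and instruments from other regressors or from the LEVELEQ= and DIFFEQ= lists would add to these counts.

```python
def ab_dependent_moments(T):
    """Count E(y_is * de_it) = 0 for s = 1..t-2 and t = 3..T (differenced equations)."""
    return sum(t - 2 for t in range(3, T + 1))


def bb_extra_moments(T):
    """Count E(dy_i(t-1) * mu_it) = 0 for t = 3..T (level equations, Blundell-Bond)."""
    return len(range(3, T + 1))


if __name__ == "__main__":
    for T in (5, 10, 20):
        print(T, ab_dependent_moments(T), bb_extra_moments(T))
```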

In addition to these GMM-type instruments, PROC PANEL can also handle standard instruments by using the lists that you specify in the LEVELEQ= and DIFFEQ= options. Denote $l_{it}$ and $d_{it}$ as the standard instruments that are specified for the level equation and differenced equation, respectively. The additional moment restrictions are $E(l_{it}\,\mu_{it}) = 0$ for the level equations and $E(d_{it}\,\Delta\epsilon_{it}) = 0$ for the differenced equations, for each period $t$. The instrument matrices for the level and differenced equations are $\mathbf{Z}_{li}$ and $\mathbf{Z}_{di}$, respectively, as follows:

![\[ \mb{Z} _\mi {li} = \left( \begin{array}{*{5}{c}} \mi{l} _\mi {i1}& 0 & 0 & 0 & 0 \\ 0 & \mi{l} _\mi {i2} & 0 & 0 & 0 \\ 0 & 0 & \mi{l} _\mi {i3} & 0 & 0 \\ \vdots & \vdots & \vdots & \vdots & \vdots \\ 0 & 0 & 0 & 0 & \mi{l} _\mi {iT} \\ \end{array} \right) \]](images/etsug_panel0481.png)

![\[ \mb{Z} _\mi {di} = \left( \begin{array}{*{5}{c}} \mi{d} _\mi {i1}& 0 & 0 & 0 & 0 \\ 0 & \mi{d} _\mi {i2} & 0 & 0 & 0 \\ 0 & 0 & \mi{d} _\mi {i3} & 0 & 0 \\ \vdots & \vdots & \vdots & \vdots & \vdots \\ 0 & 0 & 0 & 0 & \mi{d} _\mi {iT} \\ \end{array} \right) \]](images/etsug_panel0482.png)

To put the differenced and level equations together, for the system GMM estimator, the instrument matrix can be constructed as

where ![]() and ![]() correspond to the exogenous and predetermined uncorrelated variables, respectively.

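The formation of the initial weighting matrix becomes somewhat problematic. If you denote the new weighting matrix with an asterisk, then you can write

\[ \mathbf{A}_N^{*} = \left( \frac{1}{N} \sum_{i=1}^{N} \mathbf{Z}_i^{*\prime} \mathbf{H}_i^{*} \mathbf{Z}_i^{*} \right)^{-1} \]

where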

![\[ \mb{H} _\mi {i} ^{*} = \left( \begin{array}{*{5}{c}} \mb{H} _\mi {i} & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & 1 \\ \end{array} \right) \]](images/etsug_panel0487.png)

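To finish, you write out the two equations (or two stages) that are estimated,

\[ \Delta\mathbf{y}_i = \Delta\mathbf{S}_i\boldsymbol{\beta} + \boldsymbol{\nu}_i \qquad \text{and} \qquad \mathbf{y}_i = \mathbf{S}_i\boldsymbol{\beta} + \boldsymbol{\mu}_i \]

where $\mathbf{S}_i$ is the matrix of all explanatory variables (lagged endogenous, exogenous, and predetermined), and $\boldsymbol{\nu}_i$ and $\boldsymbol{\mu}_i$ are the error terms of the differenced and level equations, respectively.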

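Let $\mathbf{S}_i^{*}$, $\mathbf{y}_i^{*}$, and $\mathbf{e}_i^{*}$ be given by

\[ \mathbf{S}_i^{*} = \begin{pmatrix} \Delta\mathbf{S}_i \\ \mathbf{S}_i \end{pmatrix}, \qquad \mathbf{y}_i^{*} = \begin{pmatrix} \Delta\mathbf{y}_i \\ \mathbf{y}_i \end{pmatrix}, \qquad \mathbf{e}_i^{*} = \begin{pmatrix} \boldsymbol{\nu}_i \\ \boldsymbol{\mu}_i \end{pmatrix} \]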

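Using the preceding information, you can get the one-step GMM estimator,

\[ \hat{\boldsymbol{\beta}}_1^{*} = \left[ \left( \sum_{i}\mathbf{S}_i^{*\prime}\mathbf{Z}_i^{*} \right) \mathbf{A}_N^{*} \left( \sum_{i}\mathbf{Z}_i^{*\prime}\mathbf{S}_i^{*} \right) \right]^{-1} \left( \sum_{i}\mathbf{S}_i^{*\prime}\mathbf{Z}_i^{*} \right) \mathbf{A}_N^{*} \left( \sum_{i}\mathbf{Z}_i^{*\prime}\mathbf{y}_i^{*} \right) \]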

If the GMM2 or ITGMM option is not specified in the MODEL statement, estimation terminates here. In that case, you can obtain the following information.

The variance of the error term comes from the second-stage (level) equations, that is,

![\[ \sigma ^{2} = \frac{{\hat\bmu }^{'}{\hat\bmu }}{M - p}=\frac{{\left(\mi{y} _\mi {it} - \hat{{\bbeta }}_{1}^{*}\mb{S} _\mi {it}\right)}^{'}{\left(\mi{y} _\mi {it} - \hat{{\bbeta }}_{1}^{*}\mb{S} _\mi {it}\right)}}{M - p} \]](images/etsug_panel0493.png)

where p is the number of regressors and M is the number of observations as defined before.

The variance covariance matrix can be obtained from

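Alternatively, you can obtain a robust estimate of the variance-covariance matrix by specifying the ROBUST option in the MODEL statement. Without further reestimation of the model, the $\mathbf{H}_i^{*}$ matrix is recalculated from the one-step residuals $\hat{\boldsymbol{\nu}}_{i,1}$ and $\hat{\boldsymbol{\mu}}_{i,1}$ as

\[ \mathbf{H}_i^{*} = \begin{pmatrix} \hat{\boldsymbol{\nu}}_{i,1}\hat{\boldsymbol{\nu}}_{i,1}' & 0 \\ 0 & \hat{\boldsymbol{\mu}}_{i,1}\hat{\boldsymbol{\mu}}_{i,1}' \end{pmatrix} \]

The weighting matrix then becomes

\[ \mathbf{A}_N^{*}\left(\hat{\boldsymbol{\beta}}_1^{*}\right) = \left( \frac{1}{N} \sum_{i} \mathbf{Z}_i^{*\prime}\mathbf{H}_i^{*}\mathbf{Z}_i^{*} \right)^{-1} \]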

Using the preceding information, you construct the robust covariance matrix as follows.

Let ![]() denote a temporary matrix,

The robust covariance estimate of $\hat{\boldsymbol{\beta}}_1^{*}$ is

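Alternatively, you can use the new weighting matrix to form an updated estimate of the regression parameters, as requested by the GMM2 option in the MODEL statement. In short,

\[ \hat{\boldsymbol{\beta}}_2^{*} = \left[ \left( \sum_{i}\mathbf{S}_i^{*\prime}\mathbf{Z}_i^{*} \right) \mathbf{A}_N^{*}\left(\hat{\boldsymbol{\beta}}_1^{*}\right) \left( \sum_{i}\mathbf{Z}_i^{*\prime}\mathbf{S}_i^{*} \right) \right]^{-1} \left( \sum_{i}\mathbf{S}_i^{*\prime}\mathbf{Z}_i^{*} \right) \mathbf{A}_N^{*}\left(\hat{\boldsymbol{\beta}}_1^{*}\right) \left( \sum_{i}\mathbf{Z}_i^{*\prime}\mathbf{y}_i^{*} \right) \]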

The covariance estimate of the two-step $\hat{\boldsymbol{\beta}}_2^{*}$ becomes

Similarly, you construct the robust covariance matrix as follows.

Let ![]() denote a temporary matrix,

The robust covariance estimate of $\hat{\boldsymbol{\beta}}_2^{*}$ is

According to Arellano and Bond (1991), Blundell and Bond (1998), and many others, two-step standard errors are unreliable because they can be severely biased downward in finite samples. Therefore, researchers often base inference on two-step parameter estimates and one-step standard errors. Windmeijer (2005) derives a small-sample bias-corrected variance that uses a first-order Taylor series approximation of the two-step GMM estimator $\hat{\boldsymbol{\beta}}_2^{*}$ around the true value $\boldsymbol{\beta}^{*}$ as a function of the one-step GMM estimator $\hat{\boldsymbol{\beta}}_1^{*}$,

![\[ \begin{array}{*{3}{l}} {\hat{\bbeta }}_{2}^{*}-{\bbeta }^{*}& = & \left[ \left( \sum _{i}\mb{S} _\mi {i} ^{* '}\mb{Z} _\mi {i} ^{*} \right) \mb{A} _\mb {N} ^{*}\left({\hat{\bbeta }}_{1}^{*}\right)\left( \sum _{i} \mb{Z} _\mi {i} ^{* '}\mb{S} _\mi {i} ^{*} \right) \right]^{-1} \left( \sum _{i}\mb{S} _\mi {i} ^{* '}\mb{Z} _\mi {i} ^{*} \right) \mb{A} _\mb {N} ^{*}\left({\hat{\bbeta }}_{1}^{*}\right)\left( \sum _{i} \mb{Z} _\mi {i} ^{* '}\mb{e} _\mi {i} ^{*} \right)\\ & =& \left[ \left( \sum _{i}\mb{S} _\mi {i} ^{* '}\mb{Z} _\mi {i} ^{*} \right) \mb{A} _\mb {N} ^{*}\left({\bbeta }^{*}\right) \left( \sum _{i} \mb{Z} _\mi {i} ^{* '}\mb{S} _\mi {i} ^{*} \right) \right]^{-1} \left( \sum _{i}\mb{S} _\mi {i} ^{* '}\mb{Z} _\mi {i} ^{*} \right) \mb{A} _\mb {N} ^{*}\left({\bbeta }^{*}\right) \left( \sum _{i} \mb{Z} _\mi {i} ^{* '}\mb{e} _\mi {i} ^{*} \right) \\ & & +D_{{\bbeta }^{*},\mb{A} _\mb {N} ^{*}\left({\bbeta }^{*}\right)}\left({\hat{\bbeta }}_{1}^{*}-{\bbeta }^{*}\right) +O_{p}\left(N^{-1}\right)\\ \end{array} \]](images/etsug_panel0509.png)

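where $D_{\boldsymbol{\beta}^{*},\mathbf{A}_N^{*}(\boldsymbol{\beta}^{*})}$ is the first derivative of

\[ \left[ \left( \sum_{i}\mathbf{S}_i^{*\prime}\mathbf{Z}_i^{*} \right) \mathbf{A}_N^{*}(\boldsymbol{\beta}) \left( \sum_{i}\mathbf{Z}_i^{*\prime}\mathbf{S}_i^{*} \right) \right]^{-1} \left( \sum_{i}\mathbf{S}_i^{*\prime}\mathbf{Z}_i^{*} \right) \mathbf{A}_N^{*}(\boldsymbol{\beta}) \left( \sum_{i}\mathbf{Z}_i^{*\prime}\mathbf{e}_i^{*} \right) \]

with respect to $\boldsymbol{\beta}$, evaluated at the true value $\boldsymbol{\beta}^{*}$. The kth column of $D_{\boldsymbol{\beta}^{*},\mathbf{A}_N^{*}(\boldsymbol{\beta}^{*})}$ is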

![\[ \begin{array}{*{1}{l}} \{ D_{{\bbeta }^{*},\mb{A} _\mb {N} ^{*}\left({\bbeta }^{*}\right)}\} _{k}=\\ \left[ \left( \sum _{i}\mb{S} _\mi {i} ^{* '}\mb{Z} _\mi {i} ^{*} \right) \mb{A} _\mb {N} ^{*}\left({\bbeta }^{*}\right)\left( \sum _{i} \mb{Z} _\mi {i} ^{* '}\mb{S} _\mi {i} ^{*} \right) \right]^{-1} \left( \sum _{i}\mb{S} _\mi {i} ^{* '}\mb{Z} _\mi {i} ^{*} \right) \mb{A} _\mb {N} ^{*}\left({\bbeta }^{*}\right)\frac{\partial \mb{A} _\mb {N} ^{* -1}\left(\bbeta \right)}{\partial \beta _{k}}|_{\bbeta ^{*}}\mb{A} _\mb {N} ^{*}\left({\bbeta }^{*}\right)\left( \sum _{i} \mb{Z} _\mi {i} ^{* '}\mb{S} _\mi {i} ^{*} \right)\\ \times \left[ \left( \sum _{i}\mb{S} _\mi {i} ^{* '}\mb{Z} _\mi {i} ^{*} \right) \mb{A} _\mb {N} ^{*}\left({\bbeta }^{*}\right) \left( \sum _{i} \mb{Z} _\mi {i} ^{* '}\mb{S} _\mi {i} ^{*} \right) \right]^{-1} \left( \sum _{i}\mb{S} _\mi {i} ^{* '}\mb{Z} _\mi {i} ^{*} \right) \mb{A} _\mb {N} ^{*}\left({\bbeta }^{*}\right)\left( \sum _{i} \mb{Z} _\mi {i} ^{* '}\mb{e} _\mi {i} ^{*} \right) \\ -\left[ \left( \sum _{i}\mb{S} _\mi {i} ^{* '}\mb{Z} _\mi {i} ^{*} \right) \mb{A} _\mb {N} ^{*}\left({\bbeta }^{*}\right)\left( \sum _{i} \mb{Z} _\mi {i} ^{* '}\mb{S} _\mi {i} ^{*} \right) \right]^{-1} \left( \sum _{i}\mb{S} _\mi {i} ^{* '}\mb{Z} _\mi {i} ^{*} \right) \mb{A} _\mb {N} ^{*}\left({\bbeta }^{*}\right)\frac{\partial \mb{A} _\mb {N} ^{* -1}\left(\bbeta \right)}{\partial \beta _{k}}|_{\bbeta ^{*}}\mb{A} _\mb {N} ^{*}\left({\bbeta }^{*}\right)\left( \sum _{i} \mb{Z} _\mi {i} ^{* '}\mb{e} _\mi {i} ^{*} \right) \end{array} \]](images/etsug_panel0514.png)

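Because $\boldsymbol{\beta}^{*}$, $\mathbf{A}_N^{*}(\boldsymbol{\beta}^{*})$, and $\mathbf{e}_i^{*}$ are not feasible, you can replace them with their estimators, $\hat{\boldsymbol{\beta}}_2^{*}$, $\mathbf{A}_N^{*}(\hat{\boldsymbol{\beta}}_1^{*})$, and $\hat{\mathbf{e}}_{i,2}^{*}$, respectively. Denote the second-stage error term by

\[ \hat{\mathbf{e}}_{i,2}^{*} = \mathbf{y}_i^{*} - \mathbf{S}_i^{*}\hat{\boldsymbol{\beta}}_2^{*} \]

and, with $\mathbf{S}_{i,k}$ denoting the kth column of $\mathbf{S}_i$,

\[ \left. \frac{\partial \mathbf{A}_N^{*-1}(\boldsymbol{\beta})}{\partial \beta_k} \right|_{\hat{\boldsymbol{\beta}}_1^{*}} = -\frac{1}{N} \sum_{i} \mathbf{Z}_i^{*\prime} \begin{pmatrix} \Delta\mathbf{S}_{i,k}\hat{\boldsymbol{\nu}}_{i,1}' + \hat{\boldsymbol{\nu}}_{i,1}\Delta\mathbf{S}_{i,k}' & 0 \\ 0 & \mathbf{S}_{i,k}\hat{\boldsymbol{\mu}}_{i,1}' + \hat{\boldsymbol{\mu}}_{i,1}\mathbf{S}_{i,k}' \end{pmatrix} \mathbf{Z}_i^{*} \]

The first term vanishes, because the first-order conditions of the two-step estimator imply $\left( \sum_{i}\mathbf{S}_i^{*\prime}\mathbf{Z}_i^{*} \right) \mathbf{A}_N^{*}(\hat{\boldsymbol{\beta}}_1^{*}) \left( \sum_{i}\mathbf{Z}_i^{*\prime}\hat{\mathbf{e}}_{i,2}^{*} \right) = \mathbf{0}$. This leaves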

![\[ \begin{array}{*{4}{l}} \{ D_{{\hat{\bbeta }}_{2}^{*},\mb{A} _\mb {N} ^{*}\left({\hat{\bbeta }}_{1}^{*}\right)}\} _{k}& =& \frac{1}{N}\left[ \left( \sum _{i}\mb{S} _\mi {i} ^{* '}\mb{Z} _\mi {i} ^{*} \right) \mb{A} _\mb {N} ^{*}\left({\hat{\bbeta }}_{1}^{*}\right)\left( \sum _{i} \mb{Z} _\mi {i} ^{* '}\mb{S} _\mi {i} ^{*} \right) \right]^{-1} \left( \sum _{i}\mb{S} _\mi {i} ^{* '}\mb{Z} _\mi {i} ^{*} \right) \mb{A} _\mb {N} ^{*}\left({\hat{\bbeta }}_{1}^{*}\right)\\ & & \left(\sum _{i} \mb{Z} _\mi {i} ^{* '}\left( \begin{array}{*{2}{c}} \Delta \mb{S}_\mi {i,k}\hat{\bnu }_\mi {i,1} ^{'}+\hat{\bnu }_\mi {i,1}\Delta \mb{S}_\mi {i,k} ^{'}& 0 \\ 0 & \mb{S}_\mi {i,k}\hat{\bmu }_\mi {i,1}^{'}+\hat{\bmu }_\mi {i,1}\mb{S}_\mi {i,k} ^{'}\\ \end{array} \right)\mb{Z} _\mi {i} ^{*}\right)\mb{A} _\mb {N} ^{*}\left({\hat{\bbeta }}_{1}^{*}\right)\left( \sum _{i} \mb{Z} _\mi {i} ^{* '}\mb{\hat{e}} _\mi {i,2} ^{*} \right)\\ \end{array} \]](images/etsug_panel0522.png)

Plugging these into the Taylor series expansion yields

As a final note, it is possible to iterate more than twice by specifying the ITGMM option. At each iteration, the parameter estimates and their variance-covariance matrix (standard or robust) can be constructed as for the one-step and two-step GMM estimators. Such multiple iterations should result in a more stable estimate of the covariance matrix. PROC PANEL allows two convergence criteria: convergence can occur in the parameter estimates or in the weighting matrices. Let $\mathbf{A}_{N,k}^{*}$ denote the robust covariance matrix from iteration k, which is used as the weighting matrix in iteration $k+1$. Iterate until

![\[ \max _{i,j\leq \mr{dim}\left( \mb{A}^{*}_\mb {N, k} \right) } \frac{\left| \mb{A} _\mb {N, k+1} ^{*}(i,j)- \mb{A} _\mb {N, k} ^{*}(i,j) \right|}{ \left| \mb{A} _\mb {N, k} ^{*}(i,j) \right| } \leq \mr{ATOL} \]](images/etsug_panel0526.png)

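or

\[ \max_{i \le \mathrm{dim}\left(\hat{\boldsymbol{\beta}}_k^{*}\right)} \frac{\left| \hat{\beta}_{k+1}^{*}(i) - \hat{\beta}_{k}^{*}(i) \right|}{\left| \hat{\beta}_{k}^{*}(i) \right|} \le \mathrm{BTOL} \]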

where ATOL is the tolerance for convergence in the weighting matrix and BTOL is the tolerance for convergence in the parameter estimates. The default convergence criterion is BTOL = 1E-8 for PROC PANEL.
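A sketch of the two stopping rules, assuming the weighting matrices and parameter vectors are stored as plain Python lists. The function names and the `atol=None` convention are hypothetical; in particular, the text gives no default for ATOL, and skipping zero entries of the previous iterate (where the relative change is undefined) is an assumption of this sketch, not documented PROC PANEL behavior.

```python
def max_relative_change(new, old):
    """Largest |new - old| / |old| over paired entries, skipping entries where old == 0."""
    changes = [abs(n - o) / abs(o) for n, o in zip(new, old) if o != 0]
    return max(changes, default=0.0)


def flatten(matrix):
    """Flatten a list-of-lists matrix row by row."""
    return [value for row in matrix for value in row]


def gmm_converged(A_new, A_old, beta_new, beta_old, atol=None, btol=1e-8):
    """Stop when the weighting matrix (if atol is given) or the parameters have converged."""
    if atol is not None and max_relative_change(flatten(A_new), flatten(A_old)) <= atol:
        return True
    return max_relative_change(beta_new, beta_old) <= btol


if __name__ == "__main__":
    A = [[2.0, 0.5], [0.5, 1.0]]
    print(gmm_converged(A, A, [0.51], [0.50]))             # only BTOL is checked
    print(gmm_converged(A, A, [0.51], [0.50], atol=1e-6))  # unchanged A meets ATOL
```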

Specification tests for GMM estimation in PROC PANEL generally follow Arellano and Bond (1991). The first test available is a Sargan/Hansen test of overidentification. The test for a one-step estimation is constructed as

where $\hat{\mathbf{e}}_i^{*}$ is the stacked error term (of the differenced equation and level equation).

When the robust weighting matrix is used, the test statistic is computed as

This definition of the Sargan test is used for all iterated estimations. The Sargan statistic is asymptotically distributed as $\chi^2$ with degrees of freedom equal to the number of moment conditions minus the number of parameters.
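To turn a Sargan statistic into a p-value, you need the upper tail of the $\chi^2$ distribution. The standard-library sketch below computes it from the series expansion of the regularized lower incomplete gamma function, $P(X > x) = 1 - P(df/2,\, x/2)$, and applies the degrees-of-freedom rule stated above. The function names, the statistic value, and the counts in the usage line are hypothetical, and the plain series is a simple choice that loses relative accuracy for very small tail probabilities.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for X ~ chi-square(df), via the series
    for the regularized lower incomplete gamma function P(df/2, x/2)."""
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = math.exp(a * math.log(z) - z - math.lgamma(a + 1.0))  # n = 0 term
    total = term
    n = 0
    while term > 1e-16 * total:
        n += 1
        term *= z / (a + n)  # ratio of consecutive series terms
        total += term
    return max(0.0, 1.0 - total)


def sargan_p_value(statistic, n_moments, n_params):
    """p-value of a Sargan statistic with n_moments - n_params degrees of freedom."""
    df = n_moments - n_params
    if df <= 0:
        raise ValueError("the model must have more moment conditions than parameters")
    return chi2_sf(statistic, df)


if __name__ == "__main__":
    # Hypothetical values: 14 moment conditions, 2 parameters, statistic 15.0.
    print(round(sargan_p_value(15.0, 14, 2), 4))
```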

In addition to the Sargan test, PROC PANEL tests for autocorrelation in the residuals. These test statistics are asymptotically standard normal. PROC PANEL tests whether the autocorrelation of the differenced residuals at lag $l$ is significant.

Define $\boldsymbol{\omega}_{l,i}$ as the lth lag of the differenced error, with zero padding for the missing values generated. Symbolically,

![\[ {\bomega }_\mi {l,i} = \left( \begin{array}{*{1}{c}} 0 \\ \vdots \\ 0 \\ \bnu _{i,2} \\ \vdots \\ \bnu _{i,T - 1 - \mi{l}} \end{array} \right) \]](images/etsug_panel0534.png)
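The zero-padded lag $\boldsymbol{\omega}_{l,i}$ is just a shift of the differenced residual vector. A minimal sketch, assuming that the differenced residuals for one cross section are stored in time order in a Python list; the function name is illustrative.

```python
def zero_padded_lag(nu, l):
    """Return nu shifted down by l positions, with the first l entries set to zero."""
    if l < 1:
        raise ValueError("the lag l must be a positive integer")
    n = len(nu)
    return [0.0] * min(l, n) + list(nu[: max(n - l, 0)])


if __name__ == "__main__":
    print(zero_padded_lag([0.3, -0.1, 0.4, 0.2], 2))
```

The result keeps the length of the original vector, so it can be paired element by element with the differenced residuals.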

You define the constant ![]() as

You next define the constant ![]() as

Note that the choice of $\mathbf{H}_i$ depends on the stage of estimation. In first-stage estimation, you use the matrix with twos along the main diagonal and minus ones along the first subdiagonal and superdiagonal. In a robust or multistep estimation, this matrix is formed from the outer product of the residuals from the previous step.

Define the constant ![]() as

The matrix ![]() is defined as

The constant ![]() is defined as

Using these four quantities, the test for autoregressive structure in the differenced residuals is

Under the null hypothesis of no autocorrelation at lag $l$, the m statistic is asymptotically distributed as a normal random variable with mean zero and standard deviation one.

Arellano and Bond’s technique is a very useful method for dealing with autoregressive dynamics in the data. However, there is one caveat to consider. Too many instruments bias the estimator toward the within estimate. Furthermore, with many instruments the technique does not scale well: the weighting matrix becomes very large, so every operation that involves it becomes more computationally intensive. The PANEL procedure enables you to specify a bandwidth for instrument selection. For example, specifying MAXBAND=10 means that at most ten time observations of each variable that enters as an instrument are used. The default is to follow the Arellano-Bond methodology, which uses all available time observations.
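To see how MAXBAND limits the size of the weighting matrix, the sketch below counts the instrument columns that the lagged dependent variable contributes in a balanced panel with T periods. It assumes, as a simplification, that MAXBAND caps the number of lagged levels used in each differenced equation, so that the equation for period $t$ uses $\min(t-2, \mathrm{MAXBAND})$ of them; which lags are kept depends on the leading, trailing, or centered selection. The function name is hypothetical.

```python
def dependent_instrument_columns(T, maxband=None):
    """Instrument columns from y_i1..y_i(t-2) over the differenced equations t = 3..T."""
    total = 0
    for t in range(3, T + 1):
        available = t - 2  # lagged levels valid for period t
        total += available if maxband is None else min(available, maxband)
    return total


if __name__ == "__main__":
    print(dependent_instrument_columns(30), dependent_instrument_columns(30, maxband=10))
```

For T = 30, the unrestricted count is 406, while a cap of 10 gives 235, so the part of the weighting matrix driven by the dependent variable shrinks from 406 x 406 to 235 x 235.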

In specifying a maximum bandwidth, you can also specify the selection of the time observations. There are three possibilities: leading, trailing (default), and centered. The exact consequence of choosing any of those possibilities depends on the variable type (correlated, exogenous, or predetermined) and the time period of the current observation.

If the MAXBAND option is specified, then the following is true under any selection criterion (let t be the time subscript for the current observation). The first observation for the endogenous variable (as instrument) is max![]() and the last instrument is ![](). The first observation for a predetermined variable is max![]() and the last is ![](). The first and last observations for an exogenous variable are given in the following list:

- Trailing: If ![](), then the first instrument is for the first time period and the last observation is ![](). Otherwise, if ![](), then the first observation is ![]() and the last instrument to enter is t.

- Centered: If ![](), then the first observation is the first time period and the last observation is ![](). If ![](), then the first instrument included is ![]() and the last observation is T. If ![](), then the first included instrument is ![]() and the last observation is ![](). If the ![]() value is an odd number, the procedure decrements it by one.

- Leading: If ![](), then the first instrument corresponds to time period ![]() and the last observation is T. Otherwise, if ![](), then the first observation is t and the last observation is ![]().

The PANEL procedure enables you to include dummy variables to deal with time effects that are not captured by including the lagged dependent variable. The dummy variables directly affect the level equations. However, this implies that the difference of the dummy variables for time periods t and $t-1$ enters the differenced equation. The first usable observation occurs at ![](). If the level equation is not used in the estimation, then there is no way to identify the dummy variables. Selecting the TIME option gives the same result as creating dummy variables in the data set and using them in the regression.

The PANEL procedure gives you several options for handling missing values and unbalanced panels. By default, any time period for which there are missing values is skipped. The corresponding rows and columns of the $\mathbf{H}_i$ matrices are zeroed, and the calculation continues. Alternatively, you can elect to replace missing values and missing observations with zeros (ZERO), the overall mean of the series (OAM), the cross-sectional mean (CSM), or the time series mean (TSM).
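A minimal sketch of the default treatment, assuming that the missing periods are given as zero-based row indices into a list-of-lists $\mathbf{H}_i$. The function name is hypothetical, and the sketch covers only the zeroing step, not the ZERO, OAM, CSM, or TSM replacements.

```python
def zero_missing_periods(H, missing):
    """Return a copy of H with the rows and columns listed in `missing` set to zero."""
    return [[0.0 if (r in missing or c in missing) else value
             for c, value in enumerate(row)]
            for r, row in enumerate(H)]


if __name__ == "__main__":
    H = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 2.0]]
    for row in zero_missing_periods(H, {1}):  # the second period is missing
        print(row)
```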