The NLIN Procedure

- Overview

-

Getting Started

-

Syntax

-

Details

Automatic DerivativesMeasures of Nonlinearity, Diagnostics and InferenceMissing ValuesSpecial VariablesTroubleshootingComputational MethodsOutput Data SetsConfidence IntervalsCovariance Matrix of Parameter EstimatesConvergence MeasuresDisplayed OutputIncompatibilities with SAS 6.11 and Earlier Versions of PROC NLINODS Table NamesODS Graphics

Automatic DerivativesMeasures of Nonlinearity, Diagnostics and InferenceMissing ValuesSpecial VariablesTroubleshootingComputational MethodsOutput Data SetsConfidence IntervalsCovariance Matrix of Parameter EstimatesConvergence MeasuresDisplayed OutputIncompatibilities with SAS 6.11 and Earlier Versions of PROC NLINODS Table NamesODS Graphics -

Examples

- References

Example 81.4 Affecting Curvature through Parameterization

The work of Ratkowsky (1983, 1990) has brought into focus the importance of close-to-linear behavior of parameters in nonlinear regression models. The curvature in a nonlinear model consists of two components: the intrinsic curvature and the parameter-effects curvature. See the section Relative Curvature Measures of Nonlinearity for details. Intrinsic curvature expresses the degree to which the nonlinear model bends as values of the parameters change. This is not the same as the curviness of the model as a function of the covariates (the x variables). Intrinsic curvature is a function of the type of model you are fitting and the data. This curvature component cannot be affected by reparameterization of the model. According to Ratkowsky (1983), the intrinsic curvature component is typically smaller than the parameter-effects curvature, which can be affected by altering the parameterization of the model.

In models with low curvature, the nonlinear least squares parameter estimators behave similarly to least squares estimators in linear regression models, which have a number of desirable properties. If the model is correct, they are best linear unbiased estimators and are normally distributed if the model errors are normal (otherwise they are asymptotically normal). As you lower the curvature of a nonlinear model, you can expect that the parameter estimators approach the behavior of the linear regression model estimators: they behave "close to linear."

This example uses a simple data set and a commonly applied model for dose-response relationships to examine how the parameter-effects curvature can be reduced. The statistics by which an estimator’s behavior is judged are Box’s bias (Box 1971) and Hougaard’s measure of skewness (Hougaard 1982, 1985).

The log-logistic model

![\[ \mr{E}[Y|x] = \delta + \frac{\alpha - \delta }{1+\gamma \exp \left\{ \beta \ln (x)\right\} } \]](images/statug_nlin0335.png)

is a popular model to express the response Y as a function of dose x. The response is bounded between the asymptotes  and

and  . The term in the denominator governs the transition between the asymptotes and depends on two parameters,

. The term in the denominator governs the transition between the asymptotes and depends on two parameters,  and

and  . The log-logistic model can be viewed as a member of a broader class of dose-response functions, those relying on switch-on or switch-off mechanisms (see, for example, Schabenberger and Pierce 2002, sec. 5.8.6). A switch function is usually a monotonic function

. The log-logistic model can be viewed as a member of a broader class of dose-response functions, those relying on switch-on or switch-off mechanisms (see, for example, Schabenberger and Pierce 2002, sec. 5.8.6). A switch function is usually a monotonic function  that takes values between 0 and 1. A switch-on function increases in x; a switch-off function decreases in x. In the log-logistic case, the function

that takes values between 0 and 1. A switch-on function increases in x; a switch-off function decreases in x. In the log-logistic case, the function

![\[ S(x,[\beta ,\gamma ]) = \frac{1}{1+\gamma \exp \left\{ \beta \ln (x)\right\} } \]](images/statug_nlin0338.png)

is a switch-off function for  and a switch-on function for

and a switch-on function for  . You can write general dose-response functions with asymptotes simply as

. You can write general dose-response functions with asymptotes simply as

![\[ \mr{E}[Y|x] = \mu _{\min } + (\mu _{\max }-\mu _{\min })S(x,\btheta ) \]](images/statug_nlin0341.png)

The following DATA step creates a small data set from a dose-response experiment with response y:

data logistic; input dose y; logdose = log(dose); datalines; 0.009 106.56 0.035 94.12 0.07 89.76 0.15 60.21 0.20 39.95 0.28 21.88 0.50 7.46 ;

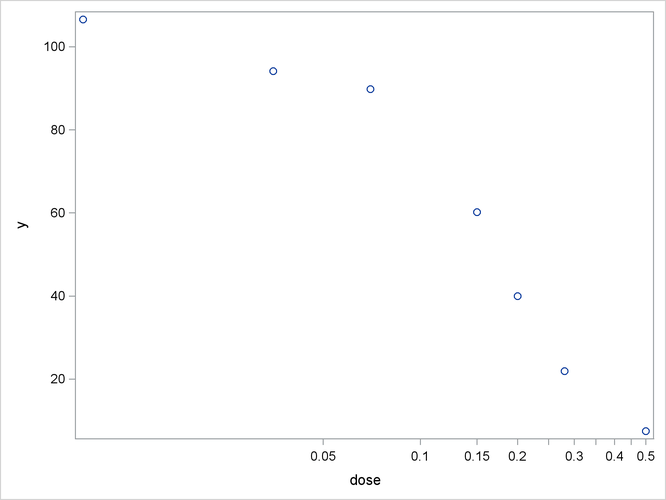

A graph of these data is produced with the following statements:

proc sgplot data=logistic; scatter y=y x=dose; xaxis type=log logstyle=linear; run;

Output 81.4.1: Observed Data in Dose-Response Experiment

When dose is expressed on the log scale, the sigmoidal shape of the dose-response relationship is clearly visible (Output 81.4.1). The log-logistic switching model in the preceding parameterization is fit with the following statements in the NLIN procedure:

proc nlin data=logistic bias hougaard nlinmeasures; parameters alpha=100 beta=3 gamma=300; delta = 0; Switch = 1/(1+gamma*exp(beta*log(dose))); model y = delta + (alpha - delta)*Switch; run;

The lower asymptote  is assumed to be 0 in this case. Since

is assumed to be 0 in this case. Since  is not listed in the PARAMETERS

statement and is assigned a value in the program, it is assumed to be constant. Note that the term

is not listed in the PARAMETERS

statement and is assigned a value in the program, it is assumed to be constant. Note that the term Switch is the switch-off function in the log-logistic model. The BIAS

and HOUGAARD

options in the PROC NLIN

statement request that Box’s bias, percentage bias, and Hougaard’s skewness measure be added to the table of parameter estimates,

and the NLINMEASURES

option requests that the global nonlinearity measures be produced.

The NLIN procedure converges after 10 iterations and achieves a residual mean squared error of 15.1869 (Output 81.4.2). This value is not that important by itself, but it is worth noting since this model fit is compared to the fit with other parameterizations later on.

Output 81.4.2: Iteration History and Analysis of Variance

| Iterative Phase | ||||

|---|---|---|---|---|

| Iter | alpha | beta | gamma | Sum of Squares |

| 0 | 100.0 | 3.0000 | 300.0 | 386.4 |

| 1 | 100.4 | 2.8011 | 162.8 | 129.1 |

| 2 | 100.8 | 2.6184 | 101.4 | 69.2710 |

| 3 | 101.3 | 2.4266 | 69.7579 | 68.2167 |

| 4 | 101.7 | 2.3790 | 69.0358 | 60.8223 |

| 5 | 101.8 | 2.3621 | 67.3709 | 60.7516 |

| 6 | 101.8 | 2.3582 | 67.0044 | 60.7477 |

| 7 | 101.8 | 2.3573 | 66.9150 | 60.7475 |

| 8 | 101.8 | 2.3571 | 66.8948 | 60.7475 |

| 9 | 101.8 | 2.3570 | 66.8902 | 60.7475 |

| 10 | 101.8 | 2.3570 | 66.8892 | 60.7475 |

The table of parameter estimates displays the estimates of the three model parameters, their approximate standard errors,

95% confidence limits, Hougaard’s skewness measure, Box’s bias, and percentage bias (Output 81.4.3). Parameters for which the skewness measure is less than 0.1 in absolute value and with percentage bias less than 1% exhibit

very close-to-linear behavior, and skewness values less than 0.25 in absolute value indicate reasonably close-to-linear behavior

(Ratkowsky 1990). According to these rules, the estimators  and

and  suffer from substantial curvature. The estimator

suffer from substantial curvature. The estimator  is especially "far-from-linear." Inferences that involve

is especially "far-from-linear." Inferences that involve  and rely on the reported standard errors or confidence limits (or both) for this parameter might be questionable.

and rely on the reported standard errors or confidence limits (or both) for this parameter might be questionable.

Output 81.4.3: Parameter Estimates, Hougaard’s Skewness, and Box’s Bias

The related global nonlinearity measures output table (Output 81.4.4) shows that both the maximum and RMS parameter-effects curvature are substantially larger than the critical curvature value recommended by Bates and Watts (1980). In contrast, the intrinsic curvatures of the model are less than the critical value. This implies that most of the nonlinearity can be removed by reparameterization.

Output 81.4.4: Global Nonlinearity Measures

One method of reducing the parameter-effects curvature, and thereby reduce the bias and skewness of the parameter estimators,

is to replace a parameter with its expected-value parameterization. Schabenberger et al. (1999) and Schabenberger and Pierce (2002, sec. 5.7.2) refer to this method as reparameterization through defining relationships. A defining relationship is obtained by equating the mean response at a chosen value of x (say,  ) to the model:

) to the model:

![\[ \mr{E}[Y|x^*] = \delta + \frac{\alpha - \delta }{1+\gamma \exp \left\{ \beta \ln (x^*)\right\} } \]](images/statug_nlin0345.png)

This equation is then solved for a parameter that is subsequently replaced in the original equation. This method is particularly

useful if  has an interesting interpretation. For example, let

has an interesting interpretation. For example, let  denote the value that reduces the response by

denote the value that reduces the response by  %,

%,

![\[ \mr{E}[Y|\lambda _ K] = \delta + \left(\frac{100-K}{100}\right)(\alpha -\delta ) \]](images/statug_nlin0348.png)

Because  exhibits large bias and skewness, it is the target in the first round of reparameterization. Setting the expression for the

conditional mean at

exhibits large bias and skewness, it is the target in the first round of reparameterization. Setting the expression for the

conditional mean at  equal to the mean function when

equal to the mean function when  yields the following expression:

yields the following expression:

![\[ \delta + \left(\frac{100-K}{100}\right)(\alpha -\delta ) = \delta + \frac{\alpha - \delta }{1+\gamma \exp \left\{ \beta \ln (\lambda _ K)\right\} } \]](images/statug_nlin0350.png)

This expression is solved for  , and the result is substituted back into the model equation. This leads to a log-logistic model in which

, and the result is substituted back into the model equation. This leads to a log-logistic model in which  is replaced by the parameter

is replaced by the parameter  , the dose at which the response was reduced by

, the dose at which the response was reduced by  %. The new model equation is

%. The new model equation is

![\[ \mr{E}[Y|x] = \delta + \frac{\alpha -\delta }{1+K/(100-K)\exp \{ \beta \ln (x/\lambda _ K)\} } \]](images/statug_nlin0351.png)

A particularly interesting choice is K = 50, since  is the dose at which the response is halved. In studies of mortality, this concentration is also known as the LD50. For the

special case of

is the dose at which the response is halved. In studies of mortality, this concentration is also known as the LD50. For the

special case of  the model equation becomes

the model equation becomes

![\[ \mr{E}[Y|x] = \delta + \frac{\alpha -\delta }{1+\exp \{ \beta \ln (x/\lambda _{50})\} } \]](images/statug_nlin0353.png)

You can fit the model in the LD50 parameterization with the following statements:

proc nlin data=logistic bias hougaard; parameters alpha=100 beta=3 LD50=0.15; delta = 0; Switch = 1/(1+exp(beta*log(dose/LD50))); model y = delta + (alpha - delta)*Switch; output out=nlinout pred=p lcl=lcl ucl=ucl; run;

Partial results from this NLIN run are shown in Output 81.4.5. The analysis of variance tables in Output 81.4.2 and Output 81.4.5 are identical. Changing the parameterization of a model does not affect the model fit. It does, however, affect the interpretation

of the parameters and the statistical properties (close-to-linear behavior) of the parameter estimators. The skewness and

bias measures of the parameter LD50 is considerably reduced compared to those values for the parameter  in the previous parameterization. Also,

in the previous parameterization. Also,  has been replaced by a parameter with a useful interpretation, the dose that yields a 50% reduction in mean response. Also

notice that the bias and skewness measures of

has been replaced by a parameter with a useful interpretation, the dose that yields a 50% reduction in mean response. Also

notice that the bias and skewness measures of  and

and  are not affected by the

are not affected by the

LD50 reparameterization.

Output 81.4.5: ANOVA Table and Parameter Estimates in LD50 Parameterization

To reduce the parameter-effects curvature of the  parameter, you can use the technique of defining relationships again. This can be done generically, by solving

parameter, you can use the technique of defining relationships again. This can be done generically, by solving

![\[ \mu ^* = \delta + \frac{\alpha -\delta }{1+\exp \left\{ \beta \ln (x/\lambda )\right\} } \]](images/statug_nlin0355.png)

for  , treating

, treating  as the new parameter (in lieu of

as the new parameter (in lieu of  ), and choosing a value for

), and choosing a value for  that leads to low skewness. This results in the expected-value parameterization of

that leads to low skewness. This results in the expected-value parameterization of  . Solving for

. Solving for  yields

yields

![\[ \beta = \frac{\log \left( \frac{\alpha -\mu ^*}{\mu ^*-\delta }\right)}{\log \left( x^* / \lambda \right)} \]](images/statug_nlin0357.png)

The interpretation of the parameter  that replaces

that replaces  in the model equation is simple: it is the mean dose response when the dose is

in the model equation is simple: it is the mean dose response when the dose is  . Fixing

. Fixing  , the following PROC NLIN statements fit this model:

, the following PROC NLIN statements fit this model:

proc nlin data=logistic bias hougaard nlinmeasures; parameters alpha=100 mustar=20 LD50=0.15; delta = 0; xstar = 0.3; beta = log((alpha - mustar)/(mustar - delta)) / log(xstar/LD50); Switch = 1/(1+exp(beta*log(dose/LD50))); model y = delta + (alpha - delta)*Switch; output out=nlinout pred=p lcl=lcl ucl=ucl; run;

Note that the switch-off function continues to be written in terms of  and the LD50. The only difference from the previous model is that

and the LD50. The only difference from the previous model is that  is now expressed as a function of the parameter

is now expressed as a function of the parameter  . Using expected-value parameterizations is a simple mechanism to lower the curvature in a model and to arrive at starting

values. The starting value for

. Using expected-value parameterizations is a simple mechanism to lower the curvature in a model and to arrive at starting

values. The starting value for  can be gleaned from Output 81.4.1 at x = 0.3.

can be gleaned from Output 81.4.1 at x = 0.3.

Output 81.4.6 shows selected results from this NLIN run. The ANOVA table is again unaffected by the change in parameterization. The skewness

for  is significantly reduced in comparison to those of the

is significantly reduced in comparison to those of the  parameter in the previous model (compare Output 81.4.6 and Output 81.4.5), while its bias remains on the same scale from Output 81.4.5 to Output 81.4.6. Also note the substantial reduction in the parameter-effects curvature values. As expected, the intrinsic curvature values

remain intact.

parameter in the previous model (compare Output 81.4.6 and Output 81.4.5), while its bias remains on the same scale from Output 81.4.5 to Output 81.4.6. Also note the substantial reduction in the parameter-effects curvature values. As expected, the intrinsic curvature values

remain intact.

Output 81.4.6: ANOVA Table and Parameter Estimates in Expected-Value Parameterization