The PANEL Procedure

One-Way Random-Effects Model

The specification for the one-way random-effects model is

![\[ u_{it}={\nu }_{i}+{\epsilon }_{it} \]](images/etsug_panel0175.png)

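Let $\mathbf{j}_{T_i}$ (lowercase j) be a vector of ones of dimension $T_i$, and $\mathbf{J}_{T_i}$ (uppercase J) be a square matrix of ones of dimension $T_i$. Define $\mathbf{Z}_0 = \mathrm{diag}(\mathbf{j}_{T_i})$, $\mathbf{P}_0 = \mathrm{diag}(\bar{\mathbf{J}}_{T_i})$, and $\mathbf{Q}_0 = \mathrm{diag}(\mathbf{E}_{T_i})$, with $\bar{\mathbf{J}}_{T_i} = \mathbf{J}_{T_i}/T_i$ and $\mathbf{E}_{T_i} = \mathbf{I}_{T_i} - \bar{\mathbf{J}}_{T_i}$. Define the transformations $\tilde{\mathbf{X}}_s = \mathbf{Q}_0 \mathbf{X}_s$ and $\tilde{\mathbf{y}} = \mathbf{Q}_0 \mathbf{y}$.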
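As a rough illustration of these definitions, the following Python sketch builds the block-diagonal averaging matrix and its complement for a small unbalanced panel and applies them to a data vector. The function names, the panel sizes, and the data values are hypothetical and are not part of PROC PANEL; the sketch assumes that observations are stacked by cross section.

```python
def block_diag(blocks):
    """Assemble square blocks into one block-diagonal matrix (list of lists)."""
    size = sum(len(b) for b in blocks)
    out = [[0.0] * size for _ in range(size)]
    offset = 0
    for b in blocks:
        for r, row in enumerate(b):
            for c, val in enumerate(row):
                out[offset + r][offset + c] = val
        offset += len(b)
    return out


def projection_matrices(T):
    """Return (P0, Q0) for cross sections that have T[i] time observations."""
    # Each diagonal block of P0 is J_{T_i} / T_i: every entry equals 1 / T_i.
    P0 = block_diag([[[1.0 / t] * t for _ in range(t)] for t in T])
    M = sum(T)
    # Q0 = I_M - P0, which is block diagonal with blocks E_{T_i}.
    Q0 = [[(1.0 if r == c else 0.0) - P0[r][c] for c in range(M)]
          for r in range(M)]
    return P0, Q0


def matvec(A, v):
    """Multiply matrix A (list of rows) by vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


T = [2, 3]                      # hypothetical unbalanced panel, M = 5
y = [1.0, 3.0, 2.0, 4.0, 6.0]   # hypothetical data, stacked by cross section
P0, Q0 = projection_matrices(T)
group_means = matvec(P0, y)     # each observation replaced by its group mean
within_dev = matvec(Q0, y)      # deviations from the group means
```

In this toy panel the group means are 2 and 4, so applying the second matrix yields the familiar within (demeaned) data.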

In the one-way model, estimation proceeds in a two-step fashion. First, you obtain estimates of the variance of the $\nu_i$ and $\epsilon_{it}$. There are multiple ways to derive these estimates; PROC PANEL provides four options. All four options are valid for balanced or unbalanced panels. Once these estimates are in hand, they are used to form a weighting factor $\theta$, and estimation proceeds via OLS on partial deviations from group means.

PROC PANEL provides four options for estimating variance components, as described in what follows.

Fuller and Battese Method

The Fuller and Battese method for estimating variance components can be obtained with the option VCOMP = FB and the option RANONE. The variance components are given by the following equations (for the approach in the two-way model, see Baltagi and Chang (1994); Fuller and Battese (1974)). Let

![\[ \mi{R} ({\nu })=\mb{y} ^{'}\mb{Z} _{0}( \mb{Z} ^{'}_{0}\mb{Z} _{0})^{-1} \mb{Z} ^{'}_{0}\mb{y} \]](images/etsug_panel0188.png)

![\[ \mi{R} ({\beta }|{\nu })= \tilde{\mb{y}}^{'} \tilde{\mb{X}}^{'}_ s (\tilde{\mb{X}}^{'}_{s} \tilde{\mb{X} }_{s})^{-1} \tilde{\mb{X} }^{'}_{s}\tilde{\mb{y}} \]](images/etsug_panel0189.png)

![\[ \mi{R} ({\beta })=\mb{y}^{'}\mb{X}^{'} (\mb{X} ^{'}\mb{X} ) ^{-1}\mb{X} ^{'}\mb{y} \]](images/etsug_panel0190.png)

![\[ \mi{R} ({\nu }|{\beta })=\mi{R} ({\beta }|{\nu })+\mi{R} ({\nu })-\mi{R} ({\beta }) \]](images/etsug_panel0191.png)

The estimator of the error variance is given by

![\[ \hat{{\sigma }}_{{\epsilon }}^{2}= \left\{ \mb{y} ^{'}\mb{y}- \mi{R} ({\beta }|{\nu })- \mi{R} ({\nu })\right\} / \{ \mi{M}-\mi{N}-(\mi{K} -1)\} \]](images/etsug_panel0192.png)

If the NOINT option is specified, the estimator is

![\[ \hat{{\sigma }}_{{\epsilon }}^{2}= \left\{ \mb{y} ^{'}\mb{y}- \mi{R} ({\beta }|{\nu })- \mi{R} ({\nu }) \right\} / (\mi{M}-\mi{N}-\mi{K}) \]](images/etsug_panel0193.png)

The estimator of the cross-sectional variance component is given by

![\[ \hat{{\sigma }}_{{\nu }}^{2}= \left\{ \mi{R} ({\nu }|{\beta })- (\mi{N} -1)\hat{{\sigma }}_{{\epsilon }}^{2} \right\} / \left\{ \mi{M}- \mr{tr}( \mb{Z} ^{'}_{0}\mb{X} (\mb{X} ^{'}\mb{X} )^{-1}\mb{X} ^{'}\mb{Z} _{0} )\right\} \]](images/etsug_panel0194.png)

Note that the error variance is the variance of the residual of the within estimator.

According to Baltagi and Chang (1994), the Fuller and Battese method is appropriate to apply to both balanced and unbalanced data. The Fuller and Battese method is the default for estimation of one-way random-effects models with balanced panels. However, the Fuller and Battese method does not always obtain nonnegative estimates for the cross section (or group) variance. In the case of a negative estimate, a warning is printed and the estimate is set to zero.

Wansbeek and Kapteyn Method

The Wansbeek and Kapteyn method for estimating variance components can be obtained by setting VCOMP = WK (together with the option RANONE). The estimation of the one-way unbalanced data model is performed by using a specialization (Baltagi and Chang 1994) of the approach used by Wansbeek and Kapteyn (1989) for unbalanced two-way models. The Wansbeek and Kapteyn method is the default for unbalanced data. If only RANONE is specified for unbalanced data, without the VCOMP= option, PROC PANEL estimates the variance components by the Wansbeek and Kapteyn method.

The estimation of the variance components is performed by using a quadratic unbiased estimation (QUE) method. This involves focusing on quadratic forms of the centered residuals, equating their expected values to the realized quadratic forms, and solving for the variance components.

Let

![\[ q_{1}=\tilde{\mb{u} }^{'}\mb{Q} _{0}\tilde{\mb{u} } \]](images/etsug_panel0195.png)

![\[ q_{2}=\tilde{\mb{u} }^{'}\mb{P} _{0}\tilde{\mb{u} } \]](images/etsug_panel0196.png)

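where the residuals $\tilde{\mathbf{u}}$ are given by $\tilde{\mathbf{u}} = (\mathbf{I}_M - \mathbf{j}_M \mathbf{j}_M^{'}/M)(\mathbf{y} - \mathbf{X}_s(\tilde{\mathbf{X}}_s^{'}\tilde{\mathbf{X}}_s)^{-1}\tilde{\mathbf{X}}_s^{'}\tilde{\mathbf{y}})$ if there is an intercept and by $\tilde{\mathbf{u}} = \mathbf{y} - \mathbf{X}_s(\tilde{\mathbf{X}}_s^{'}\tilde{\mathbf{X}}_s)^{-1}\tilde{\mathbf{X}}_s^{'}\tilde{\mathbf{y}}$ if there is not.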

Consider the expected values

![\[ \mi{E} (q_{1})=(\mi{M}-\mi{N}-(\mi{K} -1)) {\sigma }^{2}_{{\epsilon }} \]](images/etsug_panel0200.png)

![\[ \mi{E} (q_{2})=(\mi{N} -1+ \mr{tr}[( \mb{X} ^{'}_{s}\mb{Q} _{0}\mb{X} _{s})^{-1} \mb{X} ^{'}_{s}\mb{P} _{0}\mb{X} _{s}]- \mr{tr}[( \mb{X} ^{'}_{s}\mb{Q} _{0}\mb{X} _{s})^{-1} \mb{X} ^{'}_{s} {\bar{\mb{J}}}_{M}\mb{X} _{s}] ) {\sigma }^{2}_{{\epsilon }} \]](images/etsug_panel0201.png)

![\[ \; \; \; \; [\mi{M}-(\sum _{i} \mi{T} ^{2}_{i}/\mi{M} )] {\sigma }^{2}_{{\nu }} \]](images/etsug_panel0202.png)

where $\hat{\sigma}^2_{\epsilon}$ and $\hat{\sigma}^2_{\nu}$ are obtained by equating the quadratic forms to their expected values.
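The step of equating the quadratic forms to their expected values can be sketched in Python as follows. This is only an illustration, not PROC PANEL's implementation: the function name and the `trace_term` argument are hypothetical, the trace expression is assumed to have been computed elsewhere, and the sketch assumes that the cross-sectional variance term on the continuation line is added to the error-variance term in the expectation of the second quadratic form.

```python
def solve_wk_components(q1, q2, M, N, K, T, trace_term):
    """Solve the two moment equations for (sigma2_eps, sigma2_nu).

    q1, q2     : realized quadratic forms in the centered residuals
    M, N, K    : observations, cross sections, regressors (as in the text)
    T          : list of T_i, the time observations per cross section
    trace_term : tr[(Xs'Q0 Xs)^-1 Xs'P0 Xs] - tr[(Xs'Q0 Xs)^-1 Xs'Jbar_M Xs],
                 supplied by the caller (hypothetical argument)
    """
    # E(q1) = (M - N - (K - 1)) * sigma2_eps
    sigma2_eps = q1 / (M - N - (K - 1))

    # E(q2) = (N - 1 + trace_term) * sigma2_eps
    #         + (M - sum(T_i^2) / M) * sigma2_nu     (assumed "+" between terms)
    coef_eps = N - 1 + trace_term
    coef_nu = M - sum(t * t for t in T) / M
    sigma2_nu = (q2 - coef_eps * sigma2_eps) / coef_nu

    # The result is returned untruncated, so sigma2_nu can be negative.
    return sigma2_eps, sigma2_nu


# Hypothetical inputs for a panel with T = [2, 3]
s2_eps, s2_nu = solve_wk_components(
    q1=4.0, q2=6.0, M=5, N=2, K=2, T=[2, 3], trace_term=0.5
)
```

With these made-up inputs the error variance is 4 / 2 = 2 and the cross-sectional component is (6 - 1.5 × 2) / 2.4 = 1.25.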

The estimator of the error variance is the residual variance of the within estimate. The Wansbeek and Kapteyn method can also generate negative variance components estimates.

Wallace and Hussain Method

The Wallace and Hussain method for estimating variance components can be obtained by setting VCOMP = WH (together with the option RANONE). Wallace-Hussain estimates start from OLS residuals on data that are assumed to exhibit groupwise heteroscedasticity. As in the Wansbeek and Kapteyn method, you start with

![\[ q_{1}=\tilde{\mb{u}}_{OLS}^{'}\mb{Q} _{0}\tilde{\mb{u}}_{OLS} \]](images/etsug_panel0205.png)

![\[ q_{2}=\tilde{\mb{u}}_{OLS}^{'}\mb{P} _{0}\tilde{\mb{u}}_{OLS} \]](images/etsug_panel0206.png)

However, instead of using the 'true' errors, you substitute the OLS residuals. You solve the system

![\[ \mi{E} (\hat{q}_{1}) = \mi{E} (\hat{u}_{OLS}^{'}\mb{Q} _{0}\hat{u}_{OLS}) = \delta _{11} \hat{{\sigma }}^{2}_{{\nu }} + \delta _{12} \hat{{\sigma }}^{2}_{{\epsilon }} \]](images/etsug_panel0207.png)

![\[ \mi{E} (\hat{q}_{2}) = \mi{E} (\hat{u}_{OLS}^{'}\mb{P} _{0}\hat{u}_{OLS}) = \delta _{21} \hat{{\sigma }}^{2}_{{\nu }} + \delta _{22} \hat{{\sigma }}^{2}_{{\epsilon }} \]](images/etsug_panel0208.png)

The constants $\delta_{11}$, $\delta_{12}$, $\delta_{21}$, and $\delta_{22}$ are given by

![\[ \delta _{11} = \mr{tr}\left(\left(\mb{X} ^{'}\mb{X} \right)^{-1}\mb{X} ^{'}\mb{Z}_0 \mb{Z}_0 ^{'}\mb{X} \right) - \mr{tr}\left(\left(\mb{X} ^{'}\mb{X} \right)^{-1}\mb{X} ^{'}\mb{P}_0 \mb{X} \left(\mb{X} ^{'}\mb{X} \right)^{-1}\mb{X} ^{'}\mb{Z}_0 \mb{Z}_0 ^{'}\mb{X} \right) \]](images/etsug_panel0210.png)

![\[ \delta _{12} = M - N - K + \mr{tr}\left(\left(\mb{X} ^{'}\mb{X} \right)^{-1}\mb{X} ^{'}\mb{P}_0 \mb{X} \right) \]](images/etsug_panel0211.png)

![\[ \delta _{21} = M - 2tr\left(\left(\mb{X} ^{'}\mb{X} \right)^{-1}\mb{X} ^{'}\mb{Z}_0 \mb{Z}_0 ^{'}\mb{X} \right) + \mr{tr}\left(\left(\mb{X} ^{'}\mb{X} \right)^{-1}\mb{X} ^{'}\mb{P}_0 \mb{X} \right) \]](images/etsug_panel0212.png)

![\[ \delta _{22} = N - \mr{tr}\left(\left(\mb{X} ^{'}\mb{X} \right)^{-1}\mb{X} ^{'}\mb{P}_0 \mb{X} \right) \]](images/etsug_panel0213.png)

where tr() is the trace operator on a square matrix.

Solving this system produces the variance components. This method is applicable to balanced and unbalanced panels. However, there is no guarantee of nonnegative variance components. Any negative values are set to zero.

Nerlove Method

The Nerlove method for estimating variance components can be obtained by setting VCOMP = NL. The Nerlove method (see Baltagi 2008, p. 20) is assured to give estimates of the variance components that are always positive. Furthermore, it is simple in contrast to the previous estimators.

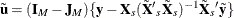

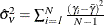

If $\gamma_i$ is the $i$th fixed effect, Nerlove’s method uses the variance of the fixed effects as the estimate of $\hat{\sigma}^2_{\nu}$. You have $\hat{\sigma}^2_{\nu} = \sum_{i=1}^{N} (\gamma_i - \bar{\gamma})^2 / (N-1)$, where $\bar{\gamma}$ is the mean fixed effect. The estimate of $\sigma^2_{\epsilon}$ is simply the residual sum of squares of the one-way fixed-effects regression divided by the number of observations.
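A minimal Python sketch of the Nerlove calculation follows, assuming a model with a single regressor so that the fixed-effects slope has a closed form. The function name and the data are hypothetical, and the divisor N - 1 for the variance of the fixed effects is the usual sample-variance convention, which is an assumption here.

```python
def nerlove_components(groups):
    """Return (sigma2_nu, sigma2_eps) for a one-regressor model.

    groups: list of (x_values, y_values), one pair per cross section.
    """
    # Within (fixed-effects) slope from deviations about the group means
    sxy = sxx = 0.0
    means = []
    for x, y in groups:
        xbar = sum(x) / len(x)
        ybar = sum(y) / len(y)
        means.append((xbar, ybar))
        sxy += sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
        sxx += sum((xi - xbar) ** 2 for xi in x)
    beta = sxy / sxx

    # Fixed effect of each cross section: gamma_i = ybar_i - beta * xbar_i
    gamma = [ybar - beta * xbar for xbar, ybar in means]
    gamma_bar = sum(gamma) / len(gamma)
    sigma2_nu = sum((g - gamma_bar) ** 2 for g in gamma) / (len(gamma) - 1)

    # Residual sum of squares of the fixed-effects regression, divided by
    # the total number of observations M
    rss = 0.0
    M = 0
    for (x, y), g in zip(groups, gamma):
        rss += sum((yi - g - beta * xi) ** 2 for xi, yi in zip(x, y))
        M += len(x)
    sigma2_eps = rss / M
    return sigma2_nu, sigma2_eps


# Hypothetical balanced panel: two cross sections, three periods each
data = [([0.0, 1.0, 2.0], [1.0, 3.0, 4.0]),
        ([0.0, 1.0, 2.0], [5.0, 6.0, 9.0])]
s2_nu, s2_eps = nerlove_components(data)
```

Both results are sums of squares divided by positive counts, so neither can be negative.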

Transformation and Estimation

After you calculate the variance components from any method, the next task is to estimate the regression model of interest.

For each individual, you form a weight ($\theta_i$) as

![\[ \theta _\mi {i} = 1 - \sigma _{\epsilon } / w_\mi {i} \]](images/etsug_panel0220.png)

![\[ w_{i}^{2} = T_{i}{\sigma }^{2}_{{\nu }} + {\sigma }^{2}_{{\epsilon }} \]](images/etsug_panel0221.png)

where $T_i$ is the number of time observations in the $i$th cross section.
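The weight formula can be evaluated directly once the variance components are available. The following Python sketch is illustrative only; the function name and the numeric values are hypothetical.

```python
import math


def re_weights(T, sigma2_nu, sigma2_eps):
    """theta_i = 1 - sigma_eps / w_i, with w_i^2 = T_i * sigma2_nu + sigma2_eps."""
    sigma_eps = math.sqrt(sigma2_eps)
    return [1.0 - sigma_eps / math.sqrt(t * sigma2_nu + sigma2_eps) for t in T]


# Hypothetical unbalanced panel with 2, 3, and 5 time observations
theta = re_weights([2, 3, 5], sigma2_nu=1.0, sigma2_eps=1.0)

# With a zero cross-sectional variance component every weight is zero
theta_pooled = re_weights([2, 3, 5], sigma2_nu=0.0, sigma2_eps=1.0)
```

The weights increase with the number of time observations, so longer cross sections are transformed more like the within estimator. When the cross-sectional variance component is zero, every weight is zero and the transformation leaves the data unchanged, which corresponds to pooled OLS.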

Taking the $\theta_i$, you form the partial deviations,

![\[ \tilde{y}_\mi {it} = y_\mi {it}- \theta _\mi {i} \bar{y}_\mi {i \cdot } \]](images/etsug_panel0223.png)

![\[ \tilde{x}_\mi {it} = x_\mi {it}- \theta _\mi {i} \bar{x}_\mi {i \cdot } \]](images/etsug_panel0224.png)

where $\bar{y}_{i\cdot}$ and $\bar{x}_{i\cdot}$ are cross-sectional means of the dependent variable and independent variables (including the constant if any), respectively.

The random-effects estimate of $\beta$ is then the result of simple OLS on the transformed data.
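The transformation and the final regression can be sketched in Python for the simplest case of a constant and one regressor. This is a hedged illustration and not the procedure's code: the function name and the data are hypothetical, the weights are taken as given, and the constant column is transformed together with the regressor, as the text describes.

```python
def random_effects_fit(groups, theta):
    """OLS on partial deviations; returns (intercept, slope).

    groups: list of (x_values, y_values), one pair per cross section.
    theta : one weight per cross section, each strictly less than 1.
    """
    rows = []  # (transformed constant, transformed x, transformed y)
    for (x, y), th in zip(groups, theta):
        xbar = sum(x) / len(x)
        ybar = sum(y) / len(y)
        for xi, yi in zip(x, y):
            # The constant column has group mean 1, so it becomes 1 - theta_i.
            rows.append((1.0 - th, xi - th * xbar, yi - th * ybar))

    # Normal equations for a two-column regression with no extra intercept
    scc = sum(c * c for c, _, _ in rows)
    scx = sum(c * x for c, x, _ in rows)
    sxx = sum(x * x for _, x, _ in rows)
    scy = sum(c * y for c, _, y in rows)
    sxy = sum(x * y for _, x, y in rows)
    det = scc * sxx - scx * scx
    intercept = (scy * sxx - scx * sxy) / det
    slope = (scc * sxy - scx * scy) / det
    return intercept, slope


# Hypothetical data that satisfy y = 1 + 2x exactly, with made-up weights
data = [([0.0, 1.0, 2.0], [1.0, 3.0, 5.0]),
        ([1.0, 3.0], [3.0, 7.0])]
b0, b1 = random_effects_fit(data, theta=[0.4, 0.3])
```

Because the toy data lie exactly on a line, the transformed regression recovers the same intercept and slope for any admissible weights, which is a convenient check that the constant has been transformed consistently with the regressor.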