The QUANTREG Procedure

Quantile Regression

Quantile regression generalizes the concept of a univariate quantile to a conditional quantile given one or more covariates.

Recall that a student’s score on a test is at the $\tau$ quantile if his or her score is better than that of $100\tau\%$ of the students who took the test. The score is also said to be at the $100\tau$th percentile.

For a random variable Y with probability distribution function

![\[ F(y) = \mbox{ Prob } (Y\leq y) \]](images/statug_qreg0004.png)

the $\tau$ quantile of $Y$ is defined as the inverse function

![\[ Q(\tau ) = \mbox{ inf }\{ y: F(y)\geq \tau \} \]](images/statug_qreg0005.png)

where the quantile level $\tau$ ranges between 0 and 1. In particular, the median is $Q(1/2)$.
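As a small illustration of the inverse-function definition, the following Python sketch evaluates $Q(\tau)$ for the empirical distribution function of a sample. The function names `ecdf` and `quantile` are illustrative only and are not part of SAS or PROC QUANTREG. Because the empirical $F$ is a right-continuous step function that jumps only at the observations, the infimum is attained at an observation, so scanning the sorted data is enough.

```python
from bisect import bisect_right


def ecdf(sample, y):
    """Empirical distribution function F(y) = (number of y_i <= y) / n."""
    s = sorted(sample)
    return bisect_right(s, y) / len(s)


def quantile(sample, tau):
    """Empirical Q(tau) = inf{y : F(y) >= tau} for 0 < tau < 1."""
    if not 0 < tau < 1:
        raise ValueError("tau must lie strictly between 0 and 1")
    s = sorted(sample)
    # F only jumps at observations, so the infimum is the first sorted
    # observation at which F reaches tau.
    for y in s:
        if ecdf(s, y) >= tau:
            return y
    return s[-1]  # not reached for tau < 1, since F(max) = 1


sample = [3, 1, 4, 1, 5, 9, 2, 6]
print(quantile(sample, 0.25), quantile(sample, 0.5), quantile(sample, 0.9))  # 1 3 9
```

With eight observations, the definition gives $Q(0.5) = 3$, the lower of the two middle values, rather than the midpoint 3.5 returned by, for example, Python's `statistics.median`; the infimum in the definition selects the smaller value.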

For a random sample $\{y_1, \ldots, y_n\}$ of $Y$, it is well known that the sample median minimizes the sum of absolute deviations:

![\[ \mbox{median} = {\arg \min }_{\xi \in \mb{R}} \sum _{i=1}^ n |y_ i-\xi | \]](images/statug_qreg0008.png)
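The minimizing property of the median can be checked numerically. The sketch below (the helper name `sad` and the data are illustrative) evaluates the sum of absolute deviations over a grid of candidate values of $\xi$. With an even number of observations the objective is flat between the two middle values, so the minimizer is not unique: any point in that interval, including the conventional midpoint, minimizes the sum.

```python
import statistics


def sad(sample, xi):
    """Sum of absolute deviations: sum_i |y_i - xi|."""
    return sum(abs(y - xi) for y in sample)


sample = [1, 1, 2, 3, 4, 5, 6, 9]
med = statistics.median(sample)           # 3.5, midpoint of the middle values 3 and 4
grid = [k / 100 for k in range(0, 1001)]  # candidate xi values 0.00, 0.01, ..., 10.00

# No grid point does better than the median (the tolerance absorbs rounding).
assert all(sad(sample, xi) >= sad(sample, med) - 1e-9 for xi in grid)

# The objective is constant on [3, 4] and grows outside that interval.
print(sad(sample, 3), sad(sample, med), sad(sample, 4), sad(sample, 2.5))  # 17 17.0 17 18.0
```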

Likewise, the general $\tau$ sample quantile $\xi(\tau)$, which is the analog of $Q(\tau)$, is formulated as the minimizer

![\[ \xi (\tau ) = {\arg \min }_{\xi \in \mb{R}} \sum _{i=1}^ n \rho _\tau (y_ i-\xi ) \]](images/statug_qreg0011.png)

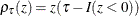

where $\rho_\tau(z) = z(\tau - I(z < 0))$, $0 < \tau < 1$, and where $I(\cdot)$ denotes the indicator function. The loss function $\rho_\tau$ assigns a weight of $\tau$ to positive residuals $y_i - \xi$ and a weight of $1 - \tau$ to negative residuals.
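The check loss and the minimization that defines $\xi(\tau)$ can be sketched directly in Python, assuming the standard form $\rho_\tau(z) = z(\tau - I(z < 0))$. Because the objective $\sum_i \rho_\tau(y_i - \xi)$ is convex and piecewise linear with kinks only at the observations, a minimizer can always be found among the data points, so the sketch searches only those. The function names are illustrative.

```python
def check_loss(z, tau):
    """rho_tau(z) = z * (tau - I(z < 0)): weight tau if z >= 0, 1 - tau if z < 0."""
    indicator = 1 if z < 0 else 0
    return z * (tau - indicator)


def sample_quantile(sample, tau):
    """xi(tau) = argmin over xi of sum_i rho_tau(y_i - xi), searched over the data.

    When the minimizer is not unique, min() keeps the first (smallest) sorted
    candidate, which matches the infimum in Q(tau) = inf{y : F(y) >= tau}.
    For tau values that are not exact binary fractions, floating-point
    rounding could break such exact ties differently.
    """
    def objective(xi):
        return sum(check_loss(y - xi, tau) for y in sample)

    return min(sorted(sample), key=objective)


sample = [3, 1, 4, 1, 5, 9, 2, 6]
for tau in (0.25, 0.5, 0.75):
    print(tau, sample_quantile(sample, tau))
```

The results (1, 3, and 5) agree with the inverse-CDF definition of $Q(\tau)$ applied to the same sample. For $\tau = 0.5$, $\rho_{0.5}(z) = |z|/2$, so the median case reduces to minimizing the sum of absolute deviations.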

Using this loss function, the linear conditional quantile function extends the  sample quantile

sample quantile  to the regression setting in the same way that the linear conditional mean function extends the sample mean. Recall that

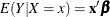

OLS regression estimates the linear conditional mean function

to the regression setting in the same way that the linear conditional mean function extends the sample mean. Recall that

OLS regression estimates the linear conditional mean function  by solving for

by solving for

![\[ \hat\bbeta = {\arg \min }_{\bbeta \in \mb{R}^ p} \sum _{i=1}^ n(y_ i-\mb{x}_ i^{\prime }\bbeta )^2 \]](images/statug_qreg0019.png)

The estimated parameter $\hat{\boldsymbol{\beta}}$ minimizes the sum of squared residuals in the same way that the sample mean $\hat{\mu}$ minimizes the sum of squares:

![\[ \hat\mu = {\arg \min }_{\mu \in \mb{R}} \sum _{i=1}^ n(y_ i-\mu )^2 \]](images/statug_qreg0022.png)
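A short worked derivation shows why the minimizer $\hat{\mu}$ is the sample mean $\bar{y} = n^{-1}\sum_{i=1}^n y_i$ (the notation $\bar{y}$ is introduced here). Adding and subtracting $\bar{y}$ inside the square, the cross term vanishes because deviations from the mean sum to zero:

```latex
\sum_{i=1}^n (y_i-\mu)^2
  = \sum_{i=1}^n \big[(y_i-\bar{y}) + (\bar{y}-\mu)\big]^2
  = \sum_{i=1}^n (y_i-\bar{y})^2 + 2(\bar{y}-\mu)\sum_{i=1}^n (y_i-\bar{y}) + n(\bar{y}-\mu)^2
  = \sum_{i=1}^n (y_i-\bar{y})^2 + n(\bar{y}-\mu)^2
```

The last term is nonnegative and equals zero only at $\mu = \bar{y}$, so $\hat{\mu} = \bar{y}$.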

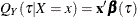

Likewise, quantile regression estimates the linear conditional quantile function, $Q_Y(\tau \mid X = \mathbf{x}) = \mathbf{x}'\boldsymbol{\beta}(\tau)$, by solving the following minimization problem for each $\tau \in (0, 1)$:

![\[ \hat\bbeta (\tau ) = {\arg \min }_{\bbeta \in \mb{R}^ p} \sum _{i=1}^ n\rho _\tau (y_ i- \mb{x}_ i^{\prime } \bbeta ) \]](images/statug_qreg0025.png)

The quantity $\hat{\boldsymbol{\beta}}(\tau)$ is called the $\tau$th regression quantile. The case $\tau = 0.5$ (which minimizes the sum of absolute residuals) corresponds to median regression (which is also known as $L_1$ regression).
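For a single covariate with an intercept ($p = 2$), the minimization can be illustrated without a linear-programming solver. The objective is convex and piecewise linear in $\boldsymbol{\beta}$. When the design has full rank, a minimizer can be found among the fits that pass exactly through $p$ observations. The Python sketch below exploits this by checking every line through two points with distinct $x$ values. It takes $O(n^3)$ time and is meant only to illustrate the definition. It is not how PROC QUANTREG computes its estimates, and the function names and data are hypothetical.

```python
from itertools import combinations


def check_loss(z, tau):
    """rho_tau(z) = z * (tau - I(z < 0))."""
    return z * (tau - (1 if z < 0 else 0))


def objective(b0, b1, xs, ys, tau):
    """sum_i rho_tau(y_i - b0 - b1 * x_i) for the line y = b0 + b1 * x."""
    return sum(check_loss(y - (b0 + b1 * x), tau) for x, y in zip(xs, ys))


def regression_quantile(xs, ys, tau):
    """Exact tau-th regression quantile (b0, b1) by enumerating interpolating lines.

    Assumes at least two distinct x values. Ties keep the first pair found.
    """
    best = None
    for (x1, y1), (x2, y2) in combinations(zip(xs, ys), 2):
        if x1 == x2:
            continue  # no line y = b0 + b1 * x passes through both points
        b1 = (y2 - y1) / (x2 - x1)
        b0 = y1 - b1 * x1
        value = objective(b0, b1, xs, ys, tau)
        if best is None or value < best[0]:
            best = (value, b0, b1)
    if best is None:
        raise ValueError("need at least two distinct x values")
    return best[1], best[2]


# Four points on y = 1 + 2x plus one outlier at x = 4.
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 100]
print(regression_quantile(xs, ys, 0.5))  # (1.0, 2.0)
```

Median regression recovers the line through the four well-behaved points. By contrast, an ordinary least squares fit to the same data has slope 20.2, because the squared loss lets the single outlier dominate.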

The following set of regression quantiles is referred to as the quantile process:

![\[ \{ \bbeta (\tau ): \tau \in (0, 1) \} \]](images/statug_qreg0029.png)

The QUANTREG procedure computes the quantile function $Q_Y(\tau \mid X = \mathbf{x})$ and conducts statistical inference on the estimated parameters $\hat{\boldsymbol{\beta}}(\tau)$.