The NLP Procedure


Example 6.9 Minimize Total Delay in a Network

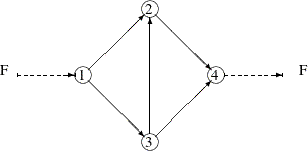

The following example is taken from the user’s guide of GINO (Liebman et al. 1986). A simple network of five roads (arcs) can be illustrated by the path diagram:

Output 6.9.1: Simple Road Network

The five roads connect four intersections illustrated by numbered nodes. Each minute F vehicles enter and leave the network. Arc $(i,j)$ refers to the road from intersection i to intersection j, and the parameter $x_{ij}$ refers to the flow from i to j. The law that traffic flowing into each intersection j must also flow out is described by the linear equality constraint

![\[ \sum _ i x_{ij} = \sum _ i x_{ji} \: , \quad j=1,\ldots ,n \]](images/ormplpug_nlp0517.png)

In general, roads also have an upper capacity, which is the number of vehicles that can be handled per minute. The upper limits $c_{ij}$ can be enforced by boundary constraints

![\[ 0 \le x_{ij} \le c_{ij} \: , \quad i,j=1,\ldots ,n \]](images/ormplpug_nlp0519.png)
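The two constraint families above, flow conservation and capacity bounds, can be checked mechanically for any candidate flow. The following Python sketch is independent of PROC NLP and only illustrates the constraints. The names `CAPACITY`, `net_inflow`, and `is_feasible` are hypothetical, the capacities are the ones used in the BOUNDS statement of this example, and conservation is tested only at the interior intersections 2 and 3, on the assumption that nodes 1 and 4 exchange the external flow F with the outside.

```python
# Arc capacities c_ij, copied from the BOUNDS statement of this example.
CAPACITY = {(1, 2): 10, (1, 3): 30, (3, 2): 10, (2, 4): 30, (3, 4): 10}


def net_inflow(flow, node):
    """Return the flow into `node` minus the flow out of it."""
    inflow = sum(x for (i, j), x in flow.items() if j == node)
    outflow = sum(x for (i, j), x in flow.items() if i == node)
    return inflow - outflow


def is_feasible(flow, source=1, sink=4, tol=1e-9):
    """Check 0 <= x_ij <= c_ij and conservation at the interior nodes."""
    within_bounds = all(
        -tol <= flow[arc] <= CAPACITY[arc] + tol for arc in CAPACITY
    )
    interior = {n for arc in CAPACITY for n in arc} - {source, sink}
    conserved = all(abs(net_inflow(flow, n)) <= tol for n in interior)
    return within_bounds and conserved


# An illustrative flow (not taken from the PROC NLP output).
example = {(1, 2): 2.0, (1, 3): 3.0, (3, 2): 1.0, (2, 4): 3.0, (3, 4): 2.0}
print(is_feasible(example))
```

For a feasible flow, the net inflow at node 4 equals the net outflow at node 1, which is the throughput F.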

Finding the maximum flow through a network is equivalent to solving a simple linear optimization problem, and for large problems, PROC LP or PROC NETFLOW can be used. The objective function is

![\[ \max \quad f = x_{24} + x_{34} \]](images/ormplpug_nlp0520.png)

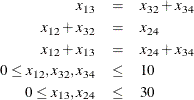

and the constraints are

The three linear equality constraints are linearly dependent. One of them is deleted automatically by the PROC NLP subroutines. Even though the default technique is used for this small example, any optimization subroutine can be used.

```sas
proc nlp all initial=.5;
   max y;
   parms x12 x13 x32 x24 x34;
   bounds x12 <= 10,
          x13 <= 30,
          x32 <= 10,
          x24 <= 30,
          x34 <= 10;
   /* what flows into an intersection must flow out */
   lincon x13 = x32 + x34,
          x12 + x32 = x24,
          x24 + x34 = x12 + x13;
   y = x24 + x34 + 0*x12 + 0*x13 + 0*x32;
run;
```
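The linear dependence of the three equality constraints in the LINCON statement can be confirmed by computing the rank of their coefficient matrix. The following Python sketch uses exact rational arithmetic and plain Gaussian elimination; it is an illustration only and is not how PROC NLP detects the dependency. The names `ROWS` and `rank` are hypothetical, and the columns are assumed to be ordered as x12, x13, x32, x24, x34 with every constraint moved to the form "expression = 0".

```python
from fractions import Fraction

# Coefficients of (x12, x13, x32, x24, x34) in each constraint.
ROWS = [
    [0, 1, -1, 0, -1],   # x13 - x32 - x34 = 0
    [1, 0, 1, -1, 0],    # x12 + x32 - x24 = 0
    [-1, -1, 0, 1, 1],   # x24 + x34 - x12 - x13 = 0
]


def rank(matrix):
    """Rank of a small matrix by exact forward elimination."""
    rows = [[Fraction(v) for v in row] for row in matrix]
    pivots = 0
    for col in range(len(rows[0])):
        # Find a row at or below the current pivot row with a nonzero entry.
        pivot = next(
            (r for r in range(pivots, len(rows)) if rows[r][col] != 0), None
        )
        if pivot is None:
            continue
        rows[pivots], rows[pivot] = rows[pivot], rows[pivots]
        for r in range(pivots + 1, len(rows)):
            factor = rows[r][col] / rows[pivots][col]
            rows[r] = [a - factor * b for a, b in zip(rows[r], rows[pivots])]
        pivots += 1
    return pivots


print(rank(ROWS))
```

The three rows sum to the zero vector, so any one constraint is implied by the other two and only two of them are independent.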

The iteration history is given in Output 6.9.2, and the optimal solution is given in Output 6.9.3.

Output 6.9.2: Iteration History

Newton-Raphson Ridge Optimization

Without Parameter Scaling

| Iteration | | Restarts | Function Calls | Active Constraints | Objective Function | Objective Function Change | Max Abs Gradient Element | Ridge | Ratio Between Actual and Predicted Change |
|---|---|---|---|---|---|---|---|---|---|
| 1 | * | 0 | 2 | 4 | 20.25000 | 19.2500 | 0.5774 | 0.0313 | 0.860 |
| 2 | * | 0 | 3 | 5 | 30.00000 | 9.7500 | 0 | 0.0313 | 1.683 |
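The final objective value of 30 in the iteration history can be cross-checked with a combinatorial maximum-flow computation. The following Python sketch uses a breadth-first augmenting-path method (Edmonds-Karp), which is a different technique from the Newton-Raphson ridge optimization reported above and is shown only as an independent check. The names `CAPACITY` and `max_flow` are hypothetical; the capacities are those of the BOUNDS statement.

```python
from collections import deque

# Arc capacities, copied from the BOUNDS statement of this example.
CAPACITY = {(1, 2): 10, (1, 3): 30, (3, 2): 10, (2, 4): 30, (3, 4): 10}


def max_flow(capacity, source, sink):
    """Maximum flow value by shortest augmenting paths (Edmonds-Karp)."""
    # Residual capacities, including zero-capacity reverse arcs.
    residual = {}
    for (i, j), c in capacity.items():
        residual[(i, j)] = residual.get((i, j), 0) + c
        residual.setdefault((j, i), 0)
    nodes = {n for arc in residual for n in arc}
    total = 0
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v in nodes:
                if v not in parent and residual.get((u, v), 0) > 0:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return total
        # Walk back from the sink, find the bottleneck, and push flow.
        path = []
        v = sink
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        bottleneck = min(residual[arc] for arc in path)
        for (u, v) in path:
            residual[(u, v)] -= bottleneck
            residual[(v, u)] += bottleneck
        total += bottleneck


print(max_flow(CAPACITY, 1, 4))  # prints 30
```

The value agrees with the cut that separates nodes 1 and 3 from nodes 2 and 4: arcs (1,2), (3,2), and (3,4) have a combined capacity of 10 + 10 + 10 = 30.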

Output 6.9.3: Optimization Results

Finding a traffic pattern that minimizes the total delay to move F vehicles per minute from node 1 to node 4 introduces nonlinearities that, in turn, demand nonlinear optimization techniques.

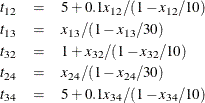

As traffic volume increases, speed decreases. Let  be the travel time on arc

be the travel time on arc  and assume that the following formulas describe the travel time as decreasing functions of the amount of traffic:

and assume that the following formulas describe the travel time as decreasing functions of the amount of traffic:

These formulas use the road capacities (upper bounds), assuming  vehicles per minute have to be moved through the network. The objective function is now

vehicles per minute have to be moved through the network. The objective function is now

![\[ \min \quad f = t_{12} x_{12} + t_{13} x_{13} + t_{32} x_{32} + t_{24} x_{24} + t_{34} x_{34} \]](images/ormplpug_nlp0525.png)

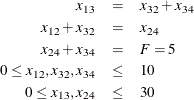

and the constraints are

Again, the default algorithm is used:

```sas
proc nlp all initial=.5;
   min y;
   parms x12 x13 x32 x24 x34;
   bounds x12 x13 x32 x24 x34 >= 0;
   lincon x13 = x32 + x34,  /* flow in = flow out */
          x12 + x32 = x24,
          x24 + x34 = 5;    /* = f = desired flow */

   t12 = 5 + .1 * x12 / (1 - x12 / 10);
   t13 = x13 / (1 - x13 / 30);
   t32 = 1 + x32 / (1 - x32 / 10);
   t24 = x24 / (1 - x24 / 30);
   t34 = 5 + .1 * x34 / (1 - x34 / 10);

   y = t12*x12 + t13*x13 + t32*x32 + t24*x24 + t34*x34;
run;
```
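Because the three equality constraints leave only two free variables, the model is small enough to sanity-check by brute force. The following Python sketch copies the travel-time formulas from the program statements above, eliminates x13, x24, and x34 through the LINCON equalities, and evaluates the objective on a coarse grid over x12 and x32. It is a rough cross-check under these assumptions, not a replacement for the PROC NLP result; the names `total_delay`, `flows_from_free`, and `grid_search` are hypothetical.

```python
def total_delay(x12, x13, x32, x24, x34):
    """Objective y, transcribed from the PROC NLP program statements."""
    t12 = 5 + 0.1 * x12 / (1 - x12 / 10)
    t13 = x13 / (1 - x13 / 30)
    t32 = 1 + x32 / (1 - x32 / 10)
    t24 = x24 / (1 - x24 / 30)
    t34 = 5 + 0.1 * x34 / (1 - x34 / 10)
    return t12 * x12 + t13 * x13 + t32 * x32 + t24 * x24 + t34 * x34


def flows_from_free(x12, x32, demand=5.0):
    """Recover all five flows from x12 and x32 via the equality constraints."""
    x24 = x12 + x32        # x12 + x32 = x24
    x34 = demand - x24     # x24 + x34 = demand
    x13 = x32 + x34        # x13 = x32 + x34
    return x12, x13, x32, x24, x34


def grid_search(steps=100, demand=5.0):
    """Evaluate the objective on a grid of nonnegative feasible flows."""
    best = None
    for i in range(steps + 1):
        # k <= steps - i keeps x34 = demand - x12 - x32 nonnegative
        # (up to floating-point rounding).
        for k in range(steps + 1 - i):
            flows = flows_from_free(demand * i / steps, demand * k / steps, demand)
            value = total_delay(*flows)
            if best is None or value < best[0]:
                best = (value, flows)
    return best


value, flows = grid_search()
print(round(value, 4), flows)
```

A grid search only locates the best grid point; a finer grid or a local optimizer would be needed to match the precision of the PROC NLP output.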

The iteration history is given in Output 6.9.4, and the optimal solution is given in Output 6.9.5.

Output 6.9.4: Iteration History

Output 6.9.5: Optimization Results

The active constraints and corresponding Lagrange multiplier estimates (costs) are given in Output 6.9.6 and Output 6.9.7, respectively.

Output 6.9.6: Linear Constraints at Solution

Output 6.9.7: Lagrange Multipliers at Solution

Output 6.9.8 shows that the projected gradient is very small, satisfying the first-order optimality criterion.

Output 6.9.8: Projected Gradient at Solution

The projected Hessian matrix (shown in Output 6.9.9) is positive definite, satisfying the second-order optimality criterion.

Output 6.9.9: Projected Hessian at Solution
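The first-order and second-order criteria mentioned above can be illustrated numerically with finite differences. The following Python sketch makes two assumptions that are not read from the output tables: that the solution is x12 = x13 = x24 = x34 = 2.5 with x32 = 0 (a point suggested by the symmetry of the model), and that the active set consists of the three equality constraints plus the bound x32 >= 0, which leaves a single feasible direction `Z`. The names `total_delay`, `POINT`, `Z`, and `directional_derivatives` are hypothetical.

```python
def total_delay(x):
    """Objective y for x = [x12, x13, x32, x24, x34], as in the SAS program."""
    x12, x13, x32, x24, x34 = x
    t12 = 5 + 0.1 * x12 / (1 - x12 / 10)
    t13 = x13 / (1 - x13 / 30)
    t32 = 1 + x32 / (1 - x32 / 10)
    t24 = x24 / (1 - x24 / 30)
    t34 = 5 + 0.1 * x34 / (1 - x34 / 10)
    return t12 * x12 + t13 * x13 + t32 * x32 + t24 * x24 + t34 * x34


# Assumed solution point (see the lead-in; not taken from the output tables).
POINT = [2.5, 2.5, 0.0, 2.5, 2.5]

# Unit vector that keeps all three equalities satisfied and leaves x32 at 0.
Z = [0.5, -0.5, 0.0, 0.5, -0.5]


def directional_derivatives(f, x, z, h=1e-4):
    """Central-difference first and second derivatives of f along z."""
    plus = f([xi + h * zi for xi, zi in zip(x, z)])
    minus = f([xi - h * zi for xi, zi in zip(x, z)])
    center = f(x)
    first = (plus - minus) / (2 * h)
    second = (plus - 2 * center + minus) / (h * h)
    return first, second


slope, curvature = directional_derivatives(total_delay, POINT, Z)
print(abs(slope) < 1e-6, curvature > 0)
```

Under these assumptions, a near-zero slope along `Z` corresponds to a vanishing projected gradient, and positive curvature along `Z` corresponds to a positive definite (here one-by-one) projected Hessian.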