The HPLMIXED Procedure

Statistical Properties

If $\mathbf{G}$ and $\mathbf{R}$ are known, $\widehat{\boldsymbol{\beta}}$ is the best linear unbiased estimator (BLUE) of $\boldsymbol{\beta}$, and $\widehat{\boldsymbol{\gamma}}$ is the best linear unbiased predictor (BLUP) of $\boldsymbol{\gamma}$ (Searle 1971; Harville 1988, 1990; Robinson 1991; McLean, Sanders, and Stroup 1991). Here, "best" means minimum mean squared error. The covariance matrix of $(\widehat{\boldsymbol{\beta}} - \boldsymbol{\beta}, \widehat{\boldsymbol{\gamma}} - \boldsymbol{\gamma})$ is

![\[ \mb{C} = \left[\begin{array}{cc} \mb{X}’\mb{R}^{-1}\mb{X} & \mb{X}’\mb{R}^{-1}\mb{Z} \\*\mb{Z}’\mb{R}^{-1}\mb{X} & \mb{Z}’\mb{R}^{-1}\mb{Z} + \mb{G}^{-1} \end{array}\right]^{-} \]](images/stathpug_hplmixed0204.png)

where the superscript $^{-}$ denotes a generalized inverse (Searle 1971).

However, $\mathbf{G}$ and $\mathbf{R}$ are usually unknown and are estimated by using one of the aforementioned methods. These estimates, $\widehat{\mathbf{G}}$ and $\widehat{\mathbf{R}}$, are therefore simply substituted into the preceding expression to obtain

![\[ \widehat{\mb{C}} = \left[\begin{array}{cc} \bX ’\widehat{\bR }^{-1}\bX & \bX ’\widehat{\bR }^{-1}\bZ \\*\bZ ’\widehat{\bR }^{-1}\bX & \bZ ’\widehat{\bR }^{-1}\bZ + \widehat{\bG }^{-1} \end{array}\right]^{-} \]](images/stathpug_hplmixed0206.png)

as the approximate variance-covariance matrix of $(\widehat{\boldsymbol{\beta}} - \boldsymbol{\beta}, \widehat{\boldsymbol{\gamma}} - \boldsymbol{\gamma})$. In this case, the BLUE and BLUP acronyms no longer apply, but the word *empirical* is often added to indicate such an approximation. The appropriate acronyms thus become EBLUE and EBLUP.

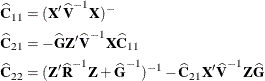

McLean and Sanders (1988) show that $\widehat{\mathbf{C}}$ can also be written as

![\[ \widehat{\bC } = \left[\begin{array}{cc} \widehat{\bC }_{11} & \widehat{\bC }_{21}’ \\ \widehat{\bC }_{21} & \widehat{\bC }_{22} \end{array}\right] \]](images/stathpug_hplmixed0209.png)

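where

\[ \begin{aligned} \widehat{\mathbf{C}}_{11} &= (\mathbf{X}'\widehat{\mathbf{V}}^{-1}\mathbf{X})^{-} \\ \widehat{\mathbf{C}}_{21} &= -\widehat{\mathbf{G}}\mathbf{Z}'\widehat{\mathbf{V}}^{-1}\mathbf{X}\widehat{\mathbf{C}}_{11} \\ \widehat{\mathbf{C}}_{22} &= (\mathbf{Z}'\widehat{\mathbf{R}}^{-1}\mathbf{Z} + \widehat{\mathbf{G}}^{-1})^{-1} - \widehat{\mathbf{C}}_{21}\mathbf{X}'\widehat{\mathbf{V}}^{-1}\mathbf{Z}\widehat{\mathbf{G}} \end{aligned} \]

and $\widehat{\mathbf{V}} = \mathbf{Z}\widehat{\mathbf{G}}\mathbf{Z}' + \widehat{\mathbf{R}}$ is the estimated marginal covariance matrix of the response.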

Note that $\widehat{\mathbf{C}}_{11}$ is the familiar estimated generalized least squares formula for the variance-covariance matrix of $\widehat{\boldsymbol{\beta}}$.
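The equivalence between the inverted mixed model coefficient matrix and the partitioned form can be checked numerically. The following NumPy sketch is illustrative only and is not how PROC HPLMIXED computes these quantities. The design matrices, the values of `G_hat` and `R_hat`, and all variable names are made-up assumptions. It also assumes that $\mathbf{X}$ has full column rank, so that the generalized inverse reduces to the ordinary inverse, and it takes the partition blocks to be $\widehat{\mathbf{C}}_{11} = (\mathbf{X}'\widehat{\mathbf{V}}^{-1}\mathbf{X})^{-}$, $\widehat{\mathbf{C}}_{21} = -\widehat{\mathbf{G}}\mathbf{Z}'\widehat{\mathbf{V}}^{-1}\mathbf{X}\widehat{\mathbf{C}}_{11}$, and $\widehat{\mathbf{C}}_{22} = (\mathbf{Z}'\widehat{\mathbf{R}}^{-1}\mathbf{Z} + \widehat{\mathbf{G}}^{-1})^{-1} - \widehat{\mathbf{C}}_{21}\mathbf{X}'\widehat{\mathbf{V}}^{-1}\mathbf{Z}\widehat{\mathbf{G}}$, with $\widehat{\mathbf{V}} = \mathbf{Z}\widehat{\mathbf{G}}\mathbf{Z}' + \widehat{\mathbf{R}}$.

```python
import numpy as np

# Hypothetical toy problem: n observations, p fixed effects, q random effects.
rng = np.random.default_rng(0)
n, p, q = 12, 2, 3

# Fixed-effects design: intercept plus one covariate (full column rank).
X = np.column_stack([np.ones(n), rng.normal(size=n)])

# Random-effects design: one random intercept per group, groups assigned cyclically.
Z = np.zeros((n, q))
Z[np.arange(n), np.arange(n) % q] = 1.0

# Assumed covariance estimates (illustrative values, not fitted to data).
G_hat = 0.8 * np.eye(q)
R_hat = 1.5 * np.eye(n)

R_inv = np.linalg.inv(R_hat)
G_inv = np.linalg.inv(G_hat)

# Coefficient matrix of the mixed model equations and its (generalized) inverse.
M = np.block([
    [X.T @ R_inv @ X, X.T @ R_inv @ Z],
    [Z.T @ R_inv @ X, Z.T @ R_inv @ Z + G_inv],
])
C_hat = np.linalg.pinv(M)  # Moore-Penrose inverse; equals the ordinary inverse here

# Partitioned form built from V_hat = Z G_hat Z' + R_hat.
V_hat = Z @ G_hat @ Z.T + R_hat
V_inv = np.linalg.inv(V_hat)
C11 = np.linalg.pinv(X.T @ V_inv @ X)
C21 = -G_hat @ Z.T @ V_inv @ X @ C11
C22 = np.linalg.inv(Z.T @ R_inv @ Z + G_inv) - C21 @ X.T @ V_inv @ Z @ G_hat
C_partitioned = np.block([[C11, C21.T], [C21, C22]])

# Largest elementwise discrepancy between the two constructions.
max_abs_diff = float(np.max(np.abs(C_hat - C_partitioned)))
print(f"max |C_hat - C_partitioned| = {max_abs_diff:.2e}")
```

The upper-left $p \times p$ block of `C_hat` matches `C11`, the estimated generalized least squares covariance matrix of the fixed-effects estimates. When $\mathbf{X}$ is rank deficient, different generalized inverses can give different matrices, so this simple elementwise comparison should not be expected to hold without further care.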