The SURVEYLOGISTIC Procedure


Iterative Algorithms for Model Fitting

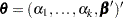

Two iterative maximum likelihood algorithms are available in PROC SURVEYLOGISTIC to obtain the pseudo-estimate $\hat{\boldsymbol{\theta}}$ of the model parameter $\boldsymbol{\theta}$. The default is the Fisher scoring method, which is equivalent to fitting by iteratively reweighted least squares. The alternative algorithm is the Newton-Raphson method. Both algorithms give the same parameter estimates; the covariance matrix of $\hat{\boldsymbol{\theta}}$ is estimated as described in the section Variance Estimation. For a generalized logit model, only the Newton-Raphson technique is available. You can use the TECHNIQUE= option in the MODEL statement to select a fitting algorithm.

Iteratively Reweighted Least Squares Algorithm (Fisher Scoring)

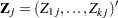

Let  be the response variable that takes values

be the response variable that takes values

. Let

. Let  index all observations and

index all observations and  be the value of response for the

be the value of response for the  th observation. Consider the multinomial variable

th observation. Consider the multinomial variable  such that

such that

|

|

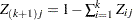

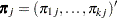

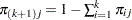

and  . With

. With  denoting the probability that the jth observation has response value i, the expected value of

denoting the probability that the jth observation has response value i, the expected value of  is

is  , and

, and  . The covariance matrix of

. The covariance matrix of  is

is  , which is the covariance matrix of a multinomial random variable for one trial with parameter vector

, which is the covariance matrix of a multinomial random variable for one trial with parameter vector  . Let

. Let  be the vector of regression parameters—for example,

be the vector of regression parameters—for example,  for cumulative logit model. Let

for cumulative logit model. Let  be the matrix of partial derivatives of

be the matrix of partial derivatives of  with respect to

with respect to  . The estimating equation for the regression parameters is

. The estimating equation for the regression parameters is

|

where  , and

, and  and

and  are the WEIGHT and FREQ values of the

are the WEIGHT and FREQ values of the  th observation.

th observation.
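As a small illustration of the notation above, the following Python sketch builds the indicator vector for one observation and the one-trial multinomial covariance matrix, which has the standard form $\mathrm{diag}(\boldsymbol{\pi}_j) - \boldsymbol{\pi}_j \boldsymbol{\pi}_j'$. The function names `indicator_vector` and `multinomial_cov` and the probabilities used are hypothetical and chosen only for illustration; they are not part of PROC SURVEYLOGISTIC.

```python
def indicator_vector(y, k):
    """Return (Z_1j, ..., Z_kj) for a response y in {1, ..., k+1}.

    The last level k+1 is the reference level and maps to the zero vector.
    """
    return [1 if y == i else 0 for i in range(1, k + 1)]


def multinomial_cov(pi):
    """Return diag(pi) - pi pi' for the first k category probabilities."""
    k = len(pi)
    return [
        [(pi[r] if r == c else 0.0) - pi[r] * pi[c] for c in range(k)]
        for r in range(k)
    ]


# Illustrative values (assumed): k = 2, so the response has three levels.
z_first = indicator_vector(1, 2)   # response equals level 1
z_last = indicator_vector(3, 2)    # response equals the reference level 3
v = multinomial_cov([0.2, 0.5])    # pi_3j = 1 - 0.2 - 0.5 = 0.3 is implied
```

The diagonal entries of `v` are the binomial variances $\pi_{ij}(1-\pi_{ij})$, and the off-diagonal entries are $-\pi_{ij}\pi_{i'j}$.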

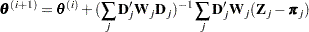

With a starting value of $\boldsymbol{\theta}^{(0)}$, the pseudo-estimate of $\boldsymbol{\theta}$ is obtained iteratively as

$$
\boldsymbol{\theta}^{(m+1)} = \boldsymbol{\theta}^{(m)} + \Big( \sum_j \mathbf{D}_j' \mathbf{W}_j \mathbf{D}_j \Big)^{-1} \sum_j \mathbf{D}_j' \mathbf{W}_j (\mathbf{Z}_j - \boldsymbol{\pi}_j)
$$

where $\mathbf{D}_j$, $\mathbf{W}_j$, and $\boldsymbol{\pi}_j$ are evaluated at the $m$th iteration $\boldsymbol{\theta}^{(m)}$. The expression after the plus sign is the step size. If the log likelihood evaluated at $\boldsymbol{\theta}^{(m+1)}$ is less than that evaluated at $\boldsymbol{\theta}^{(m)}$, then $\boldsymbol{\theta}^{(m+1)}$ is recomputed by step-halving or ridging. The iterative scheme continues until convergence is obtained, that is, until $\boldsymbol{\theta}^{(m+1)}$ is sufficiently close to $\boldsymbol{\theta}^{(m)}$. Then the maximum likelihood estimate of $\boldsymbol{\theta}$ is $\hat{\boldsymbol{\theta}} = \boldsymbol{\theta}^{(m+1)}$.

By default, the starting values are zero for the slope parameters and the observed cumulative logits (that is, the logits of the observed cumulative proportions of response) for the intercept parameters. Alternatively, the starting values can be specified with the INEST= option in the PROC SURVEYLOGISTIC statement.
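The scoring iteration can be sketched in Python for the simplest case: a binary response ($k = 1$) with a logit link, one intercept, and one slope. In that case, writing $\tilde{x}_j = (1, x_j)'$, the derivative matrix is $\pi_j(1-\pi_j)\tilde{x}_j'$ and the covariance is $\pi_j(1-\pi_j)$, so the two sums in the update reduce to $\sum_j w_j f_j \pi_j (1-\pi_j) \tilde{x}_j \tilde{x}_j'$ and $\sum_j w_j f_j \tilde{x}_j (z_j - \pi_j)$. This is a minimal sketch under those assumptions, not the procedure's implementation: the data, the function names, the convergence tolerance, the cap of 20 halvings, and the use of the weighted proportion for the starting intercept are all illustrative choices, and ridging is omitted.

```python
import math


def expit(t):
    return 1.0 / (1.0 + math.exp(-t))


def log_lik(theta, x, z, w, f):
    """Weighted log likelihood: sum_j w_j f_j [z log(pi) + (1 - z) log(1 - pi)]."""
    a, b = theta
    total = 0.0
    for xj, zj, wj, fj in zip(x, z, w, f):
        p = expit(a + b * xj)
        total += wj * fj * (zj * math.log(p) + (1 - zj) * math.log(1.0 - p))
    return total


def fisher_scoring(x, z, w, f, tol=1e-10, max_iter=50):
    wf = [wj * fj for wj, fj in zip(w, f)]
    # Starting values: logit of the (weighted) observed proportion, slope zero.
    p0 = sum(c * zj for c, zj in zip(wf, z)) / sum(wf)
    theta = (math.log(p0 / (1.0 - p0)), 0.0)
    for _ in range(max_iter):
        a, b = theta
        s0 = s1 = i00 = i01 = i11 = 0.0
        for xj, zj, c in zip(x, z, wf):
            p = expit(a + b * xj)
            r = c * (zj - p)          # contribution to sum D' W (Z - pi)
            v = c * p * (1.0 - p)     # contribution to sum D' W D
            s0 += r
            s1 += r * xj
            i00 += v
            i01 += v * xj
            i11 += v * xj * xj
        det = i00 * i11 - i01 * i01
        step = ((i11 * s0 - i01 * s1) / det, (i00 * s1 - i01 * s0) / det)
        new = (a + step[0], b + step[1])
        # Step-halving: shrink the step while the log likelihood decreases.
        old_ll = log_lik(theta, x, z, w, f)
        halvings = 0
        while log_lik(new, x, z, w, f) < old_ll and halvings < 20:
            step = (step[0] / 2.0, step[1] / 2.0)
            new = (a + step[0], b + step[1])
            halvings += 1
        converged = max(abs(step[0]), abs(step[1])) < tol
        theta = new
        if converged:
            break
    return theta


# Illustrative data (assumed): z is the indicator of the first response level.
x = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
z = [0, 1, 0, 1, 0, 1]
w = [1.0, 2.0, 1.0, 1.5, 1.0, 2.0]   # WEIGHT values
f = [1, 1, 2, 1, 1, 1]               # FREQ values
theta_hat = fisher_scoring(x, z, w, f)
```

At convergence the weighted score $\sum_j w_j f_j \tilde{x}_j (z_j - \pi_j)$ is numerically zero, which is a convenient check that the estimating equation has been solved.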

Newton-Raphson Algorithm

Let

|

|

|

|||

|

|

|

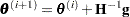

be the gradient vector and the Hessian matrix, where  is the log likelihood for the

is the log likelihood for the  th observation. With a starting value of

th observation. With a starting value of  , the pseudo-estimate

, the pseudo-estimate  of

of  is obtained iteratively until convergence is obtained:

is obtained iteratively until convergence is obtained:

|

where  and

and  are evaluated at the

are evaluated at the  th iteration

th iteration  . If the log likelihood evaluated at

. If the log likelihood evaluated at  is less than that evaluated at

is less than that evaluated at  , then

, then  is recomputed by step-halving or ridging. The iterative scheme continues until convergence is obtained—that is, until

is recomputed by step-halving or ridging. The iterative scheme continues until convergence is obtained—that is, until  is sufficiently close to

is sufficiently close to  . Then the maximum likelihood estimate of

. Then the maximum likelihood estimate of  is

is  .

.
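A Newton-Raphson iteration of this kind can be sketched in Python for an intercept-only binary logit model, where the answer is known in closed form: the pseudo-estimate is the logit of the weighted proportion $\sum_j w_j f_j z_j / \sum_j w_j f_j$. Here $l_j = z_j\theta - \log(1 + e^{\theta})$, the gradient is $\sum_j w_j f_j (z_j - \pi)$, and the negative second derivative is $\sum_j w_j f_j \pi(1-\pi)$. The data, the function name `newton_raphson_intercept`, the tolerance, and the cap of 20 halvings are assumptions made for illustration; ridging is omitted. For a logit link the observed and expected information coincide, so this particular example takes the same steps as Fisher scoring.

```python
import math


def newton_raphson_intercept(z, w, f, theta0=0.0, tol=1e-12, max_iter=50):
    wf = [wj * fj for wj, fj in zip(w, f)]

    def log_lik(theta):
        # sum_j w_j f_j l_j with l_j = z_j * theta - log(1 + exp(theta))
        return sum(
            c * (zj * theta - math.log1p(math.exp(theta)))
            for c, zj in zip(wf, z)
        )

    theta = theta0
    for _ in range(max_iter):
        p = 1.0 / (1.0 + math.exp(-theta))
        g = sum(c * (zj - p) for c, zj in zip(wf, z))  # gradient
        h = sum(wf) * p * (1.0 - p)                    # minus the second derivative
        step = g / h
        new = theta + step
        # Step-halving: shrink the step while the log likelihood decreases.
        halvings = 0
        while log_lik(new) < log_lik(theta) and halvings < 20:
            step /= 2.0
            new = theta + step
            halvings += 1
        done = abs(new - theta) < tol
        theta = new
        if done:
            break
    return theta


# Illustrative data (assumed).
z = [1, 0, 1, 1, 0]
w = [2.0, 1.0, 1.5, 1.0, 2.5]   # WEIGHT values
f = [1, 2, 1, 3, 1]             # FREQ values
theta_hat = newton_raphson_intercept(z, w, f)

# Closed-form check: logit of the weighted proportion of z = 1.
num = sum(wj * fj * zj for wj, fj, zj in zip(w, f, z))
den = sum(wj * fj for wj, fj in zip(w, f))
closed_form = math.log(num / (den - num))
```

Comparing `theta_hat` with `closed_form` shows that the iteration solves the weighted score equation; with more than one parameter, `g` becomes a vector and `h` a matrix, and the division becomes a linear solve.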