Working with the Data Management Job Node

Overview of the Data Management Job Node

You can add a Data Management Job node to a Flow tab in an orchestration job to run DataFlux Data Management Studio jobs on DataFlux Data Management Servers. The available jobs are automatically populated into the Data management job field in the Data Management Job tab of the node. The following types of batch jobs are available:

- DataFlux Data Management Studio data jobs
- DataFlux Data Management Studio process jobs
- architect jobs from dfPower Studio

For the most seamless integration possible, these jobs should be located on a DataFlux Data Management Server 2.5.

Inputs and Outputs to the Data Management Job Node

The Data Management Job node can take the inputs and outputs listed in the following table:

| Name | Description |
|---|---|
| Inputs: | |
| DWB_DELETE_SERVER_LOGS | (TRUE, FALSE) Determines whether the server log and status files are deleted at completion. Defaults to null, which deletes the log and status files. |
| DWB_JOBNAME | The name of the batch job to run on the server. |
| DWB_PROXY_HOST | The proxy server host name. |
| DWB_PROXY_PORT | The proxy server port. |
| DWB_SAVE_LOG | (TRUE, FALSE) Determines whether the log file is stored locally or placed in the temp directory. |
| DWB_SAVE_STATUS | (TRUE, FALSE) Determines whether the status file is stored locally or placed in the temp directory. Only works with DWB_WAIT = true. |
| DWB_SERVER_NAME | The name of the DataFlux Data Management Server to connect to. |
| DWB_TIMEOUT | The time-out length for each call to the server. This value is the time allowed for the process to actually start running. Values > 0 are in seconds, values < 0 are in microseconds (10^-6 seconds), and 0 means no time-out. |
| DWB_WAIT | (TRUE, FALSE) Determines whether this node waits (stays running) until a finished state is returned. |
| Outputs: | |
| DWB_AUTHTYPE | The resolved authentication type. |
| DWB_JOBID | The job request ID for this run of the job. |
| DWB_JOBSTATUS | The status message from the server. |
| DWB_LOGFILE | The path to the log file, if requested. |
| DWB_RESOLVED_DOMAIN | The resolved authentication domain. |
| DWB_RESOLVED_HOST | The DataFlux Data Management Server resolved host name, including the URI scheme (http:// or https://). |
| DWB_RESOLVED_PORT | The DataFlux Data Management Server listen port. |
| DWB_RESOLVED_PROXY_HOST | The resolved proxy host name. |
| DWB_RESOLVED_PROXY_PORT | The resolved proxy port. |
| DWB_STATUSFILE | The path to the status file, if requested. |
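Two of the input rules in the table are easy to misread: the sign convention for DWB_TIMEOUT and the dependency of DWB_SAVE_STATUS on DWB_WAIT. The following Python sketch restates them as code. It is an illustration only and is not part of the product. The function names `timeout_in_seconds` and `check_inputs` are hypothetical, and it assumes that a negative DWB_TIMEOUT is read by its magnitude and that the TRUE/FALSE inputs arrive as text.

```python
from typing import Optional

MICROSECONDS_PER_SECOND = 1_000_000


def timeout_in_seconds(dwb_timeout: int) -> Optional[float]:
    """Interpret a DWB_TIMEOUT value using the rules in the table.

    A value > 0 is a length in seconds, a value < 0 is a length in
    microseconds, and 0 means no time-out (returned here as None).
    Assumption: the magnitude of a negative value is the microsecond count.
    """
    if dwb_timeout == 0:
        return None
    if dwb_timeout > 0:
        return float(dwb_timeout)
    return abs(dwb_timeout) / MICROSECONDS_PER_SECOND


def check_inputs(inputs: dict) -> list:
    """Return warnings for input combinations the table says have no effect.

    Assumption: TRUE/FALSE inputs are passed as text in any letter case.
    """
    warnings = []
    save_status = str(inputs.get("DWB_SAVE_STATUS", "")).upper() == "TRUE"
    wait = str(inputs.get("DWB_WAIT", "")).upper() == "TRUE"
    if save_status and not wait:
        warnings.append("DWB_SAVE_STATUS only works with DWB_WAIT = true")
    return warnings
```

For example, under these assumptions `timeout_in_seconds(-500000)` corresponds to half a second, and `check_inputs({"DWB_SAVE_STATUS": "TRUE", "DWB_WAIT": "FALSE"})` returns one warning.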

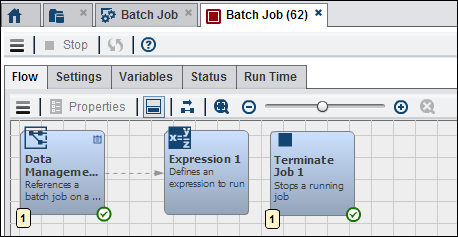

Using the Data Management Job Node

You can create an orchestration job that uses a Data Management Job node in the Flow tab to add a batch job to the overall job. For example, you could create a sample orchestration job that contains a Data Management Job node, an Expression node, and a Terminate Job node.

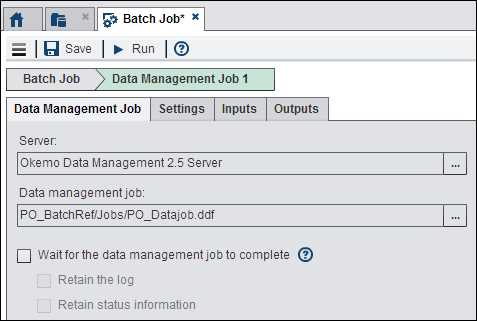

The settings for the batch job are entered in the Batch Job tab in the Data Management Job node, as shown in the following display:

Data Management Job Settings

Select the DataFlux Data Management Server that contains the job that you need to run from the list of registered servers. These servers must be registered in SAS Management Console. Then navigate to the job on the selected server. The batch job for this particular example is a DataFlux Data Management Studio data job named PO_Datajob.

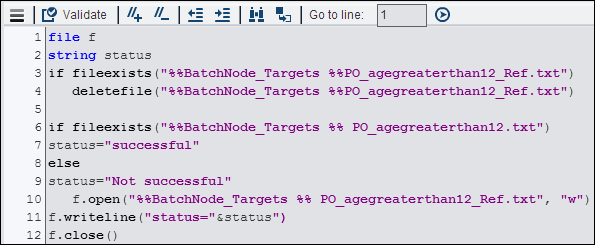

The expression validates the success of the job. For more information about Expression nodes, see Working with the Expression Node.
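The kind of check that such an expression performs can be sketched as a comparison against the DWB_JOBSTATUS output of the Data Management Job node. The Python below only illustrates the logic; an actual Expression node is written in its own expression language, not Python. The function name `job_succeeded` is hypothetical, and because the status messages that the server returns are not listed here, the expected value is passed in by the caller instead of being hard-coded.

```python
def job_succeeded(outputs: dict, expected_status: str) -> bool:
    """Return True when DWB_JOBSTATUS matches the status the caller expects.

    Assumption: the comparison ignores surrounding whitespace and letter
    case. A missing DWB_JOBSTATUS output is treated as a failure.
    """
    status = str(outputs.get("DWB_JOBSTATUS", "")).strip()
    return status.casefold() == expected_status.strip().casefold()
```

A downstream step could then branch on the Boolean result, for example to decide whether processing should continue.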

The Terminate Job node is used to stop processing in the job after all of the nodes have successfully run. For information about Terminate Job nodes, see Working with the Terminate Job Node.

Copyright © SAS Institute Inc. All rights reserved.