The NLP Procedure

Introductory Examples

The following introductory examples illustrate how to get started using the NLP procedure.

An Unconstrained Problem

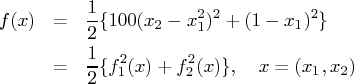

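Consider the simple example of minimizing the Rosenbrock function (Rosenbrock 1960):

$$f(x) = \frac{1}{2}\left\{100\,(x_2 - x_1^2)^2 + (1 - x_1)^2\right\} = \frac{1}{2}\left\{f_1^2(x) + f_2^2(x)\right\}, \quad x = (x_1, x_2)$$

The minimum function value is $f(x^*) = 0$ at $x^* = (1, 1)$.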

The following statements can be used to solve this problem:

proc nlp;

min f;

decvar x1 x2;

f1 = 10 * (x2 - x1 * x1);

f2 = 1 - x1;

f = .5 * (f1 * f1 + f2 * f2);

run;

The MIN statement identifies the symbol f that characterizes the objective function in terms of f1 and f2, and the DECVAR statement names the decision variables x1 and x2. Because there is no explicit optimizing algorithm option specified (TECH=), PROC NLP uses the Newton-Raphson method with ridging, the default algorithm when there are no constraints.
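The default choice described above can also be written out explicitly. The following is a minimal sketch, assuming that NRRIDG is the TECH= keyword for Newton-Raphson with ridging and that initial values can be attached to the decision variables in the same way as in a PARMS statement; the starting point (-1.2, 1) is only an illustrative choice and does not come from the text.

```sas
/* Sketch: same model as above, with algorithm and starting point made explicit. */
proc nlp tech=nrridg;          /* assumed keyword for Newton-Raphson with ridging */
   min f;
   decvar x1 = -1.2, x2 = 1;   /* illustrative starting point, not from the text */
   f1 = 10 * (x2 - x1 * x1);
   f2 = 1 - x1;
   f  = .5 * (f1 * f1 + f2 * f2);
run;
```

Stating the technique explicitly can make a program easier to compare against runs that use a different TECH= value.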

A better way to solve this problem is to take advantage of the fact that $f$ is a sum of squares of $f_1$ and $f_2$ and to treat it as a least-squares problem. Using the LSQ statement instead of the MIN statement tells the procedure that this is a least-squares problem, which results in the use of one of the specialized algorithms for solving least-squares problems (for example, Levenberg-Marquardt).

proc nlp;

lsq f1 f2;

decvar x1 x2;

f1 = 10 * (x2 - x1 * x1);

f2 = 1 - x1;

run;

The LSQ statement results in the minimization of a function that is the sum of squares of functions that appear in the LSQ statement. The least-squares specification is preferred because it enables the procedure to exploit the structure in the problem for numerical stability and performance.
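To make the exploited structure concrete, the following hand-derived sketch writes out the Jacobian of the two residual functions $f_1$ and $f_2$ used in the program. The symbols $r$ and $J$ are notation introduced here for illustration; the sketch shows only the general least-squares structure and is not a description of how PROC NLP implements its algorithms.

```latex
\[
\begin{aligned}
r(x) &= \begin{pmatrix} f_1(x) \\ f_2(x) \end{pmatrix}
      = \begin{pmatrix} 10\,(x_2 - x_1^2) \\ 1 - x_1 \end{pmatrix},
\qquad
J(x) = \begin{pmatrix} -20\,x_1 & 10 \\ -1 & 0 \end{pmatrix}, \\[4pt]
\nabla f(x) &= J(x)^{T} r(x)
      = \begin{pmatrix} -20\,x_1 f_1(x) - f_2(x) \\ 10\,f_1(x) \end{pmatrix},
\qquad
\nabla^2 f(x) \approx J(x)^{T} J(x).
\end{aligned}
\]
```

Because both the gradient and an approximation of the Hessian can be assembled from first derivatives of the residuals alone, least-squares methods such as Levenberg-Marquardt do not need second derivatives of $f$. At $x = (1, 1)$ both residuals are zero, so the gradient vanishes there.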

PROC NLP displays the iteration history and the solution to this least-squares problem as shown in Figure 4.1. It shows that the solution has $x_1 = 1$ and $x_2 = 1$. As expected in an unconstrained problem, the gradient at the solution is very close to 0.

Figure 4.1: Least-Squares Minimization

Boundary Constraints on the Decision Variables

Bounds can be placed on the decision variables. Suppose, for example, that it is necessary to constrain the decision variables in the previous example to be no greater than 0.5. That can be done by adding a BOUNDS statement:

proc nlp;

lsq f1 f2;

decvar x1 x2;

bounds x1-x2 <= .5;

f1 = 10 * (x2 - x1 * x1);

f2 = 1 - x1;

run;

The solution in Figure 4.2 shows that the decision variables meet the constraint bounds.

Figure 4.2: Least-Squares with Bounds Solution
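The effect of the bounds can be worked out by hand for this small problem. The following derivation is a sketch computed from the formulas for $f_1$ and $f_2$ in the program, not a transcription of the procedure output.

```latex
\[
\begin{aligned}
f(x) &= \tfrac{1}{2}\left\{ f_1^2(x) + f_2^2(x) \right\}
      \;\ge\; \tfrac{1}{2}\,(1 - x_1)^2
      \;\ge\; \tfrac{1}{2}\,(1 - 0.5)^2 = 0.125
      \qquad \text{whenever } x_1 \le 0.5, \\[4pt]
f(0.5,\, 0.25) &= \tfrac{1}{2}\left\{ \bigl(10\,(0.25 - 0.5^2)\bigr)^2 + (1 - 0.5)^2 \right\}
      = \tfrac{1}{2}\,\{\, 0 + 0.25 \,\} = 0.125 .
\end{aligned}
\]
```

The lower bound is attained at $x_1 = 0.5$, $x_2 = 0.25$, which satisfies both bounds, so under this reasoning the bound on $x_1$ is active at the optimum while the bound on $x_2$ is not.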

Linear Constraints on the Decision Variables

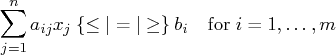

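More general linear equality or inequality constraints of the form

$$\sum_{j=1}^{n} a_{ij} x_j \;\{\le \mid = \mid \ge\}\; b_i \quad \text{for } i = 1, \ldots, m$$

can be specified in a LINCON statement. For example, suppose that in addition to the bounds on the decision variables it is necessary to guarantee that the sum $x_1 + x_2$ is less than or equal to 0.6. That can be achieved by adding a LINCON statement: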

proc nlp;

lsq f1 f2;

decvar x1 x2;

bounds x1-x2 <= .5;

lincon x1 + x2 <= .6;

f1 = 10 * (x2 - x1 * x1);

f2 = 1 - x1;

run;

The output in Figure 4.3 displays the iteration history and the convergence criterion.

PROC NLP: Least Squares Minimization

Levenberg-Marquardt Optimization

Scaling Update of More (1978)

Figure 4.3: Least-Squares with Bounds and Linear Constraints Iteration History

Figure 4.4 shows that the solution satisfies the linear constraint. Note that the procedure displays the active constraints (the constraints that are tight) at optimality.
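One way to see why the linear constraint can be expected to be active is to evaluate a candidate point in a DATA step. The sketch below checks the point x1 = 0.5, x2 = 0.25, which minimizes the objective when only the bounds are imposed (a hand-derived observation, not a value quoted from the procedure output). The data set name CHECK and the variables LIN and FEASIBLE are hypothetical names introduced for this illustration.

```sas
/* Sketch: evaluate the objective and the constraints at a candidate point. */
data check;
   x1 = 0.5;
   x2 = 0.25;
   f1 = 10 * (x2 - x1 * x1);
   f2 = 1 - x1;
   f  = .5 * (f1 * f1 + f2 * f2);       /* objective value at the candidate */
   lin = x1 + x2;                       /* left-hand side of the LINCON constraint */
   /* 1 if the bounds and the linear constraint all hold, 0 otherwise */
   feasible = (x1 <= .5) and (x2 <= .5) and (lin <= .6);
   put f= lin= feasible=;
run;
```

At this point the objective is 0.125 but x1 + x2 = 0.75 exceeds 0.6, so the point is infeasible once the LINCON statement is added. The higher objective value reported in Figure 4.4 is consistent with the linear constraint pushing the solution away from that point.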

PROC NLP: Least Squares Minimization

Value of Objective Function = 0.1665792899

Figure 4.4: Least-Squares with Bounds and Linear Constraints Solution

Nonlinear Constraints on the Decision Variables

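More general nonlinear equality or inequality constraints can be specified using an NLINCON statement. Consider the least-squares problem with the additional constraint

$$x_1^2 - 2 x_2 \ge 0$$

This constraint is expressed through a new function c1, which is constrained in the NLINCON statement and defined in the programming statements: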

proc nlp tech=QUANEW;

min f;

decvar x1 x2;

bounds x1-x2 <= .5;

lincon x1 + x2 <= .6;

nlincon c1 >= 0;

c1 = x1 * x1 - 2 * x2;

f1 = 10 * (x2 - x1 * x1);

f2 = 1 - x1;

f = .5 * (f1 * f1 + f2 * f2);

run;

Figure 4.5 shows the iteration history, and Figure 4.6 shows the solution to this problem.

PROC NLP: Nonlinear Minimization

Dual Quasi-Newton Optimization

Modified VMCWD Algorithm of Powell (1978, 1982)

Dual Broyden - Fletcher - Goldfarb - Shanno Update (DBFGS)

Lagrange Multiplier Update of Powell(1982)

Figure 4.5: Least-Squares with Bounds, Linear and Nonlinear Constraints, Iteration History

PROC NLP: Nonlinear Minimization

Value of Objective Function = 0.3300307303

Value of Lagrange Function = 0.3300307155

Figure 4.6: Least-Squares with Bounds, Linear and Nonlinear Constraints, Solution
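The two values reported with the solution can be related to each other. The following sketch assumes the common convention in which the Lagrange function subtracts the multiplier-weighted constraint functions from the objective; the symbols $\lambda_i$ and $c_i$ are generic notation introduced here, and the exact convention used by the procedure should be checked against its documentation.

```latex
\[
\begin{aligned}
L(x, \lambda) &= f(x) - \sum_{i} \lambda_i\, c_i(x), \\[4pt]
f(x) - L(x, \lambda) &= 0.3300307303 - 0.3300307155 = 1.48 \times 10^{-8}.
\end{aligned}
\]
```

Under that assumption, the difference is the sum of the terms $\lambda_i c_i(x)$ at the reported point. A value this close to zero is what one would expect near a constrained optimum, where each such product is approximately zero: either the constraint is active or its multiplier vanishes.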

Not all of the optimization methods support nonlinear constraints. In particular, the Levenberg-Marquardt method, the default for LSQ, does not support nonlinear constraints. (For more information about the particular algorithms, see the section "Optimization Algorithms".) The quasi-Newton method is the prime choice for solving nonlinear programs with nonlinear constraints. The option TECH=QUANEW in the PROC NLP statement causes the quasi-Newton method to be used.

A Simple Maximum Likelihood Example

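The following is a very simple example of a maximum likelihood estimation problem with the log likelihood function:

$$l(\mu, \sigma) = -\log(\sigma) - \frac{1}{2}\left(\frac{x - \mu}{\sigma}\right)^2$$

The maximum likelihood estimates of the parameters $\mu$ and $\sigma$ form the solution to

$$\max_{\mu,\ \sigma > 0} \sum_i l_i(\mu, \sigma), \qquad l_i(\mu, \sigma) = -\log(\sigma) - \frac{1}{2}\left(\frac{x_i - \mu}{\sigma}\right)^2$$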

In the following DATA step, values for $x$ are input into SAS data set X; this data set provides the values of $x_i$.

data x;

input x @@;

datalines;

1 3 4 5 7

;
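Before setting up the optimization, the data can be summarized directly. The following sketch uses PROC MEANS with VARDEF=N so that the standard deviation is computed with divisor n; for a normal model, the sample mean and the divisor-n standard deviation are the closed-form maximum likelihood estimates. This step is an optional cross-check added for illustration and is not part of the original example.

```sas
/* Sketch: descriptive statistics for data set X with divisor n. */
proc means data=x n mean std vardef=n;
   var x;
run;
```

For the five values 1, 3, 4, 5, and 7, the mean is 4 and the divisor-n standard deviation is 2, which gives reference values against which an optimizer's estimates of MEAN and SIGMA can be compared.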

In the following statements, the DATA=X specification drives the building of the objective function. When each observation in the DATA=X data set is read, a new term $l_i(\mu, \sigma)$ using the value of $x_i$ is added to the objective function LOGLIK specified in the MAX statement.

proc nlp data=x vardef=n covariance=h pcov phes;

profile mean sigma / alpha=.5 .1 .05 .01;

max loglik;

parms mean=0, sigma=1;

bounds sigma > 1e-12;

loglik=-0.5*((x-mean)/sigma)**2-log(sigma);

run;

After a few iterations of the default Newton-Raphson optimization algorithm, PROC NLP produces the results shown in Figure 4.7.

PROC NLP: Nonlinear Maximization

Value of Objective Function = -5.965735903

Figure 4.7: Maximum Likelihood Estimates
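The reported objective value can be reproduced by hand for this data set. The derivation below is a sketch based on the LOGLIK expression in the program, with $\hat{\mu}$ and $\hat{\sigma}$ denoting the stationary point of the summed log likelihood; these symbols are notation introduced here.

```latex
\[
\begin{aligned}
\frac{\partial}{\partial \mu} \sum_{i=1}^{n} l_i &= \sum_{i=1}^{n} \frac{x_i - \mu}{\sigma^2} = 0
   \;\Rightarrow\; \hat{\mu} = \frac{1 + 3 + 4 + 5 + 7}{5} = 4, \\[4pt]
\frac{\partial}{\partial \sigma} \sum_{i=1}^{n} l_i &= -\frac{n}{\sigma} + \sum_{i=1}^{n} \frac{(x_i - \mu)^2}{\sigma^3} = 0
   \;\Rightarrow\; \hat{\sigma}^2 = \frac{9 + 1 + 0 + 1 + 9}{5} = 4, \quad \hat{\sigma} = 2, \\[4pt]
\sum_{i=1}^{n} l_i(\hat{\mu}, \hat{\sigma}) &= -5 \log 2 - \frac{1}{2} \cdot \frac{20}{4}
   \approx -3.465736 - 2.5 = -5.965736 .
\end{aligned}
\]
```

This agrees with the value of the objective function, -5.965735903, shown in the output.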

In unconstrained maximization, the gradient (that is, the vector of first derivatives) at the solution must be very close to zero and the Hessian matrix at the solution (that is, the matrix of second derivatives) must have nonpositive eigenvalues. The Hessian matrix is displayed in Figure 4.8.

Figure 4.8: Hessian Matrix
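The eigenvalue condition can be checked analytically for this example. The following sketch differentiates the summed log likelihood twice and evaluates the result at $\mu = 4$, $\sigma = 2$ with $n = 5$ and $\sum_i (x_i - \mu)^2 = 20$; it is a hand computation from the LOGLIK expression, not a copy of the displayed matrix.

```latex
\[
\begin{aligned}
\frac{\partial^2}{\partial \mu^2} \sum_i l_i &= -\frac{n}{\sigma^2} = -\frac{5}{4} = -1.25, \\[4pt]
\frac{\partial^2}{\partial \mu\, \partial \sigma} \sum_i l_i &= -\frac{2}{\sigma^3} \sum_i (x_i - \mu) = 0, \\[4pt]
\frac{\partial^2}{\partial \sigma^2} \sum_i l_i &= \frac{n}{\sigma^2} - \frac{3}{\sigma^4} \sum_i (x_i - \mu)^2
   = \frac{5}{4} - \frac{3 \cdot 20}{16} = -2.5 .
\end{aligned}
\]
```

The resulting matrix is diagonal with entries $-1.25$ and $-2.5$, so both eigenvalues are negative and the stationary point is a maximum.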

Under reasonable assumptions, the approximate standard errors of the estimates are the square roots of the diagonal elements of the covariance matrix of the parameter estimates, which (because of the COV=H specification) is the same as the inverse of the Hessian matrix. The covariance matrix is shown in Figure 4.9.

Figure 4.9: Covariance Matrix

The PROFILE statement computes the values of the profile likelihood confidence limits on SIGMA and MEAN, as shown in Figure 4.10.

Figure 4.10: Confidence Limits

Copyright © 2008 by SAS Institute Inc., Cary, NC, USA. All rights reserved.