The NLPC Nonlinear Optimization Solver

Example 10.1: Least-Squares Problem

Although the current release of the NLPC solver does not implement techniques

specialized for least-squares problems, this example illustrates how the NLPC solver can

solve least-squares problems by using general nonlinear optimization techniques. The

following Bard function (see Moré, Garbow, and Hillstrom 1981) is a least-squares problem with

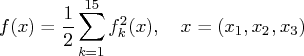

3 parameters and 15 residual functions ![]() :

:
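Because the objective is half a sum of squares, its derivatives have a special structure. The following standard derivation is a sketch; the names $F(x)$ for the residual vector $(f_1(x), \ldots, f_{15}(x))^T$ and $J(x)$ for its $15 \times 3$ Jacobian (whose $k$th row is $\nabla f_k(x)^T$) are introduced only for this illustration. With $f(x) = \tfrac{1}{2} \sum_{k=1}^{15} f_k(x)^2$:

$$
\nabla f(x) = J(x)^T F(x), \qquad
\nabla^2 f(x) = J(x)^T J(x) + \sum_{k=1}^{15} f_k(x)\, \nabla^2 f_k(x)
$$

For the Bard residuals $f_k(x) = y_k - \left( x_1 + u_k / (v_k x_2 + w_k x_3) \right)$, the rows of $J(x)$ are

$$
\nabla f_k(x)^T = \left( -1,\; \frac{u_k v_k}{(v_k x_2 + w_k x_3)^2},\; \frac{u_k w_k}{(v_k x_2 + w_k x_3)^2} \right)
$$

Techniques specialized for least squares, such as Gauss-Newton, typically exploit this structure by approximating $\nabla^2 f(x)$ with $J(x)^T J(x)$. The general techniques used in this example treat $f$ as an ordinary smooth function and do not rely on that structure.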

The minimum function value $f(x^*)$, which is of order $10^{-3}$, is attained at a point $x^*$.

The starting point $x^0 = (1, 1, 1)$ is used.
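As a sanity check of the formulation, the objective can be evaluated by hand at the starting point. This worked arithmetic assumes the definitions $u_k = k$, $v_k = 16 - k$, $w_k = \min(u_k, v_k)$ used in the SAS code and $x^0 = (1, 1, 1)$. At $x^0$ the denominator $v_k x_2 + w_k x_3$ reduces to $v_k + w_k$, which equals $16$ for $k \le 8$ and $2(16 - k)$ for $k \ge 8$. For example:

$$
f_1(x^0) = 0.14 - \left( 1 + \tfrac{1}{16} \right) = -0.9225, \qquad
f_{15}(x^0) = 4.39 - \left( 1 + \tfrac{15}{2} \right) = -4.11
$$

Summing all 15 squared residuals gives

$$
\sum_{k=1}^{15} f_k(x^0)^2 \approx 41.6817, \qquad f(x^0) \approx 20.8408
$$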

You can use the following SAS code to formulate and solve this least-squares problem:

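proc optmodel;
   /* index set of the 15 residual functions */
   set S = 1..15;
   /* problem data: u_k = k, v_k = 16 - k, w_k = min(u_k, v_k), observations y_k */
   number u{k in S} = k;
   number v{k in S} = 16 - k;
   number w{k in S} = min(u[k], v[k]);
   number y{S} = [ .14 .18 .22 .25 .29 .32 .35 .39 .37 .58
                   .73 .96 1.34 2.10 4.39 ];
   /* starting point x0 = (1, 1, 1) */
   var x{1..3} init 1;
   /* objective: half the sum of the 15 squared residuals */
   min f = 0.5*sum{k in S} ( y[k] -
              ( x[1] + u[k]/(v[k]*x[2] + w[k]*x[3]) )
           )^2;
   /* no TECH= option, so the default technique is used */
   solve with nlpc / printfreq=1;
   print x;
quit;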

Output 10.1.1 displays the problem summary. Because no optimization technique is specified explicitly (by using the TECH= option), the default technique, the trust-region method, is used. Output 10.1.2 displays the iteration log, and Output 10.1.3 shows the solution summary and the solution.
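To indicate what the default technique does at each step, here is the textbook trust-region iteration. It is a generic sketch, not a description of NLPC's implementation details (for example, how the radius $\Delta_k$ is updated or how the subproblem is solved). At the current iterate $x^k$, a step $d^k$ approximately solves

$$
\min_{d}\; \nabla f(x^k)^T d + \tfrac{1}{2}\, d^T \nabla^2 f(x^k)\, d
\quad \text{subject to} \quad \|d\| \le \Delta_k
$$

and the ratio of actual to predicted reduction

$$
\rho_k = \frac{f(x^k) - f(x^k + d^k)}{-\left( \nabla f(x^k)^T d^k + \tfrac{1}{2}\, {d^k}^T \nabla^2 f(x^k)\, d^k \right)}
$$

decides whether the step is accepted ($x^{k+1} = x^k + d^k$) and whether the radius $\Delta_k$ is enlarged or shrunk for the next iteration.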

Output 10.1.1: Least-Squares Problem Solved with TRUREG: Problem Summary

Output 10.1.2: Least-Squares Problem Solved with TRUREG: Iteration Log

Output 10.1.3: Least-Squares Problem Solved with TRUREG: Solution

Alternatively, you can specify the Newton-type method with line search by using the following statement:

solve with nlpc / tech=newtyp printfreq=1;

Output 10.1.4 and Output 10.1.5 show the iteration log and the solution for the NEWTYP method.
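In its generic form, a Newton-type method with line search computes a search direction from the Newton equations and then chooses a step length along that direction. The following is the textbook scheme, given as a sketch; the safeguards NLPC applies (for example, when $\nabla^2 f(x^k)$ is not positive definite) and its line-search rule are not described in this example:

$$
\nabla^2 f(x^k)\, d^k = -\nabla f(x^k), \qquad
x^{k+1} = x^k + \alpha_k d^k, \quad \alpha_k > 0
$$

where $\alpha_k$ is chosen so that $f(x^{k+1})$ is sufficiently smaller than $f(x^k)$. Unlike the trust-region method, which bounds the step length first and then picks a direction, a line-search method fixes the direction first and then searches along it.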

Output 10.1.4: Least-Squares Problem Solved with NEWTYP: Iteration Log


Output 10.1.5: Least-Squares Problem Solved with NEWTYP: Solution

You can also select the conjugate gradient method as follows:

solve with nlpc / tech=congra printfreq=2;

Here PRINTFREQ=2 reduces the number of rows in the iteration log: as Output 10.1.6 shows, only every other iteration is displayed. Output 10.1.7 shows the solution summary and the solution.
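Nonlinear conjugate gradient methods use only first derivatives, so they often need more iterations than methods that use second derivatives. The following sketch shows the generic scheme; the formula for $\beta_k$ that NLPC uses is not stated in this example, and the Fletcher-Reeves choice shown is just one common option:

$$
d^0 = -\nabla f(x^0), \qquad
x^{k+1} = x^k + \alpha_k d^k, \qquad
d^{k+1} = -\nabla f(x^{k+1}) + \beta_k d^k
$$

$$
\beta_k^{\mathrm{FR}} = \frac{\|\nabla f(x^{k+1})\|^2}{\|\nabla f(x^k)\|^2}
$$

where the step length $\alpha_k$ comes from a line search along $d^k$.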

Output 10.1.6: Least-Squares Problem Solved with CONGRA: Iteration Log


Output 10.1.7: Least-Squares Problem Solved with CONGRA: Solution


Although the number of iterations required to converge varies by technique, all three techniques produce the same solution from the same starting point.
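To reproduce this comparison in a single PROC OPTMODEL session, you can solve the same model several times and restore the starting point before each new SOLVE statement. The following is a sketch that reuses the declarations from this example; it assumes that assigning values to x in a FOR loop is an acceptable way to reset $x^0 = (1, 1, 1)$ between solves, and that printing the objective name f reports its value at the current solution.

```sas
proc optmodel;
   set S = 1..15;
   number u{k in S} = k;
   number v{k in S} = 16 - k;
   number w{k in S} = min(u[k], v[k]);
   number y{S} = [ .14 .18 .22 .25 .29 .32 .35 .39 .37 .58
                   .73 .96 1.34 2.10 4.39 ];
   var x{1..3} init 1;
   min f = 0.5*sum{k in S} ( y[k] -
              ( x[1] + u[k]/(v[k]*x[2] + w[k]*x[3]) )
           )^2;

   /* default technique (trust region) from x0 = (1, 1, 1) */
   solve with nlpc;
   print x;
   print f;

   /* reset to the starting point before the Newton-type solve */
   for {i in 1..3} x[i] = 1;
   solve with nlpc / tech=newtyp;
   print x;
   print f;

   /* reset again before the conjugate gradient solve */
   for {i in 1..3} x[i] = 1;
   solve with nlpc / tech=congra;
   print x;
   print f;
quit;
```

Resetting x matters because each SOLVE statement starts from the current variable values, which after a solve are the previous solution rather than $x^0$.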

Copyright © 2008 by SAS Institute Inc., Cary, NC, USA. All rights reserved.