The AUTOREG Procedure

- Overview

-

Getting Started

-

Syntax

-

Details

Missing ValuesAutoregressive Error ModelAlternative Autocorrelation Correction MethodsGARCH ModelsHeteroscedasticity- and Autocorrelation-Consistent Covariance Matrix EstimatorGoodness-of-Fit Measures and Information CriteriaTestingPredicted ValuesOUT= Data SetOUTEST= Data SetPrinted OutputODS Table NamesODS Graphics

Missing ValuesAutoregressive Error ModelAlternative Autocorrelation Correction MethodsGARCH ModelsHeteroscedasticity- and Autocorrelation-Consistent Covariance Matrix EstimatorGoodness-of-Fit Measures and Information CriteriaTestingPredicted ValuesOUT= Data SetOUTEST= Data SetPrinted OutputODS Table NamesODS Graphics -

Examples

- References

Testing

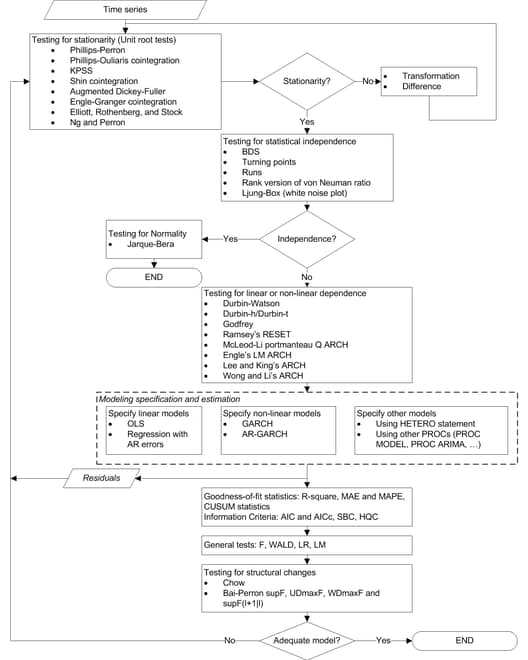

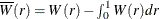

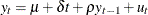

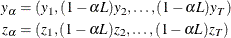

The modeling process consists of four stages: identification, specification, estimation, and diagnostic checking (Cromwell, Labys, and Terraza 1994). The AUTOREG procedure supports tens of statistical tests for identification and diagnostic checking. Figure 9.15 illustrates how to incorporate these statistical tests into the modeling process.

Figure 9.15: Statistical Tests in the AUTOREG Procedure

Testing for Stationarity

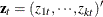

Most of the theories of time series require stationarity; therefore, it is critical to determine whether a time series is stationary. Two nonstationary time series are fractionally integrated time series and autoregressive series with random coefficients. However, more often some time series are nonstationary due to an upward trend over time. The trend can be captured by either of the following two models.

-

The difference stationary process

![\[ (1- L)y_ t =\delta +\psi (L) \epsilon _ t \]](images/etsug_autoreg0399.png)

where L is the lag operator,

, and

, and  is a white noise sequence with mean zero and variance

is a white noise sequence with mean zero and variance  . Hamilton (1994) also refers to this model the unit root process.

. Hamilton (1994) also refers to this model the unit root process.

-

The trend stationary process

![\[ y_ t =\alpha +\delta t +\psi (L) \epsilon _ t \]](images/etsug_autoreg0402.png)

When a process has a unit root, it is said to be integrated of order one or I(1). An I(1) process is stationary after differencing once. The trend stationary process and difference stationary process require different treatment to transform the process into stationary one for analysis. Therefore, it is important to distinguish the two processes. Bhargava (1986) nested the two processes into the following general model

However, a difficulty is that the right-hand side is nonlinear in the parameters. Therefore, it is convenient to use a different parametrization

The test of null hypothesis that  against the one-sided alternative of

against the one-sided alternative of  is called a unit root test.

is called a unit root test.

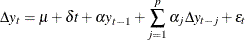

Dickey-Fuller unit root tests are based on regression models similar to the previous model

where  is assumed to be white noise. The t statistic of the coefficient

is assumed to be white noise. The t statistic of the coefficient  does not follow the normal distribution asymptotically. Instead, its distribution can be derived using the functional central

limit theorem. Three types of regression models including the preceding one are considered by the Dickey-Fuller test. The

deterministic terms that are included in the other two types of regressions are either null or constant only.

does not follow the normal distribution asymptotically. Instead, its distribution can be derived using the functional central

limit theorem. Three types of regression models including the preceding one are considered by the Dickey-Fuller test. The

deterministic terms that are included in the other two types of regressions are either null or constant only.

An assumption in the Dickey-Fuller unit root test is that it requires the errors in the autoregressive model to be white noise, which is often not true. There are two popular ways to account for general serial correlation between the errors. One is the augmented Dickey-Fuller (ADF) test, which uses the lagged difference in the regression model. This was originally proposed by Dickey and Fuller (1979) and later studied by Said and Dickey (1984) and Phillips and Perron (1988). Another method is proposed by Phillips and Perron (1988); it is called Phillips-Perron (PP) test. The tests adopt the original Dickey-Fuller regression with intercept, but modify the test statistics to take account of the serial correlation and heteroscedasticity. It is called nonparametric because no specific form of the serial correlation of the errors is assumed.

A problem of the augmented Dickey-Fuller and Phillips-Perron unit root tests is that they are subject to size distortion and low power. It is reported in Schwert (1989) that the size distortion is significant when the series contains a large moving average (MA) parameter. DeJong et al. (1992) find that the ADF has power around one third and PP test has power less than 0.1 against the trend stationary alternative, in some common settings. Among some more recent unit root tests that improve upon the size distortion and the low power are the tests described by Elliott, Rothenberg, and Stock (1996) and Ng and Perron (2001). These tests involve a step of detrending before constructing the test statistics and are demonstrated to perform better than the traditional ADF and PP tests.

Most testing procedures specify the unit root processes as the null hypothesis. Tests of the null hypothesis of stationarity have also been studied, among which Kwiatkowski et al. (1992) is very popular.

Economic theories often dictate that a group of economic time series are linked together by some long-run equilibrium relationship.

Statistically, this phenomenon can be modeled by cointegration. When several nonstationary processes  are cointegrated, there exists a

are cointegrated, there exists a  cointegrating vector

cointegrating vector  such that

such that  is stationary and

is stationary and  is a nonzero vector. One way to test the relationship of cointegration is the residual based cointegration test, which assumes the regression model

is a nonzero vector. One way to test the relationship of cointegration is the residual based cointegration test, which assumes the regression model

![\[ y_{t} = {\beta }_{1} + {\mb{x} _{t}’}{\beta } + u_{t} \]](images/etsug_autoreg0413.png)

where  ,

,  , and

, and  = (

= (

,

, ,

,

. The OLS residuals from the regression model are used to test for the null hypothesis of no cointegration. Engle and Granger

(1987) suggest using ADF on the residuals while Phillips and Ouliaris (1990) study the tests using PP and other related test statistics.

. The OLS residuals from the regression model are used to test for the null hypothesis of no cointegration. Engle and Granger

(1987) suggest using ADF on the residuals while Phillips and Ouliaris (1990) study the tests using PP and other related test statistics.

Augmented Dickey-Fuller Unit Root and Engle-Granger Cointegration Testing

Common unit root tests have the null hypothesis that there is an autoregressive unit root  , and the alternative is

, and the alternative is  , where

, where  is the autoregressive coefficient of the time series

is the autoregressive coefficient of the time series

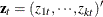

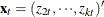

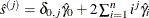

This is referred to as the zero mean model. The standard Dickey-Fuller (DF) test assumes that errors  are white noise. There are two other types of regression models that include a constant or a time trend as follows:

are white noise. There are two other types of regression models that include a constant or a time trend as follows:

These two models are referred to as the constant mean model and the trend model, respectively. The constant mean model includes

a constant mean  of the time series. However, the interpretation of

of the time series. However, the interpretation of  depends on the stationarity in the following sense: the mean in the stationary case when

depends on the stationarity in the following sense: the mean in the stationary case when  is the trend in the integrated case when

is the trend in the integrated case when  . Therefore, the null hypothesis should be the joint hypothesis that

. Therefore, the null hypothesis should be the joint hypothesis that  and

and  . However for the unit root tests, the test statistics are concerned with the null hypothesis of

. However for the unit root tests, the test statistics are concerned with the null hypothesis of  . The joint null hypothesis is not commonly used. This issue is address in Bhargava (1986) with a different nesting model.

. The joint null hypothesis is not commonly used. This issue is address in Bhargava (1986) with a different nesting model.

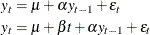

There are two types of test statistics. The conventional t ratio is

![\[ DF_\tau = \frac{\hat{\alpha }-1}{sd(\hat{\alpha })} \]](images/etsug_autoreg0425.png)

and the second test statistic, called  -test, is

-test, is

![\[ T(\hat{\alpha }-1) \]](images/etsug_autoreg0426.png)

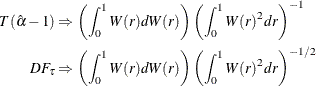

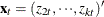

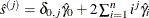

For the zero mean model, the asymptotic distributions of the Dickey-Fuller test statistics are

For the constant mean model, the asymptotic distributions are

![\begin{align*} T(\hat{\alpha }-1) & \Rightarrow \left( [W(1)^2-1]/2 -W(1)\int _0^1 W(r)dr \right)\left( \int _0^1 W(r)^2 dr - \left(\int _0^1 W(r)dr \right)^2\right)^{-1}\\ DF_\tau & \Rightarrow \left([W(1)^2-1]/2 -W(1)\int _0^1 W(r)dr \right)\left( \int _0^1 W(r)^2 dr - \left(\int _0^1 W(r)dr \right)^2\right)^{-1/2} \end{align*}](images/etsug_autoreg0428.png)

For the trend model, the asymptotic distributions are

![\begin{equation*} \begin{split} T(\hat{\alpha }-1) & \Rightarrow \left[ W(r)dW +12\left(\int _0^1 rW(r)dr -\frac{1}{2}\int _0^1 W(r)dr \right) \left( \int _0^1 W(r)dr - \frac12 W(1)\right) \right. \\ & \quad \left. -W(1) \int _0^1 W(r)dr \right] D^{-1} \\ DF_\tau & \Rightarrow \left[ W(r)dW +12\left(\int _0^1 rW(r)dr -\frac{1}{2}\int _0^1 W(r)dr \right) \left( \int _0^1 W(r)dr - \frac12 W(1)\right) \right. \\ & \quad \left. -W(1) \int _0^1 W(r)dr \right] D^{1/2} \end{split}\end{equation*}](images/etsug_autoreg0429.png)

where

![\[ D= \int _0^1 W(r)^2 dr - 12\left( \int _0^1 r(W(r)dr \right)^2 +12 \int _0^1 W(r)dr \int _0^1 rW(r)dr -4\left( \int _0^1 W(r) dr \right) ^2 \]](images/etsug_autoreg0430.png)

One problem of the Dickey-Fuller and similar tests that employ three types of regressions is the difficulty in the specification of the deterministic trends. Campbell and Perron (1991) claimed that "the proper handling of deterministic trends is a vital prerequisite for dealing with unit roots". However the "proper handling" is not obvious since the distribution theory of the relevant statistics about the deterministic trends is not available. Hayashi (2000) suggests to using the constant mean model when you think there is no trend, and using the trend model when you think otherwise. However no formal procedure is provided.

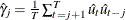

The null hypothesis of the Dickey-Fuller test is a random walk, possibly with drift. The differenced process is not serially correlated under the null of I(1). There is a great need for the generalization of this specification. The augmented Dickey-Fuller (ADF) test, originally proposed in Dickey and Fuller (1979), adjusts for the serial correlation in the time series by adding lagged first differences to the autoregressive model,

where the deterministic terms  and

and  can be absent for the models without drift or linear trend. As previously, there are two types of test statistics. One is

the OLS t value

can be absent for the models without drift or linear trend. As previously, there are two types of test statistics. One is

the OLS t value

and the other is given by

![\[ \frac{T(\hat{\alpha } -1)}{1- \hat{\alpha }_1 -\ldots -\hat{\alpha }_ p} \]](images/etsug_autoreg0434.png)

The asymptotic distributions of the test statistics are the same as those of the standard Dickey-Fuller test statistics.

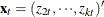

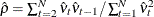

Nonstationary multivariate time series can be tested for cointegration, which means that a linear combination of these time

series is stationary. Formally, denote the series by  . The null hypothesis of cointegration is that there exists a vector

. The null hypothesis of cointegration is that there exists a vector  such that

such that  is stationary. Residual-based cointegration tests were studied in Engle and Granger (1987) and Phillips and Ouliaris (1990). The latter are described in the next subsection. The first step regression is

is stationary. Residual-based cointegration tests were studied in Engle and Granger (1987) and Phillips and Ouliaris (1990). The latter are described in the next subsection. The first step regression is

where  ,

,  , and

, and  = (

= (

,

, ,

,

. This regression can also include an intercept or an intercept with a linear trend. The residuals are used to test for the

existence of an autoregressive unit root. Engle and Granger (1987) proposed augmented Dickey-Fuller type regression without an intercept on the residuals to test the unit root. When the first

step OLS does not include an intercept, the asymptotic distribution of the ADF test statistic

. This regression can also include an intercept or an intercept with a linear trend. The residuals are used to test for the

existence of an autoregressive unit root. Engle and Granger (1987) proposed augmented Dickey-Fuller type regression without an intercept on the residuals to test the unit root. When the first

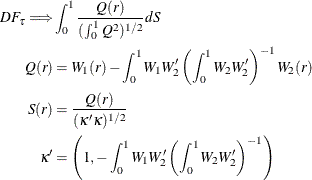

step OLS does not include an intercept, the asymptotic distribution of the ADF test statistic  is given by

is given by

where  is a k vector standard Brownian motion and

is a k vector standard Brownian motion and

![\[ W(r)=\Bigl (W_1(r), W_2(r) \Bigr ) \]](images/etsug_autoreg0440.png)

is a partition such that  is a scalar and

is a scalar and  is

is  dimensional. The asymptotic distributions of the test statistics in the other two cases have the same form as the preceding

formula. If the first step regression includes an intercept, then

dimensional. The asymptotic distributions of the test statistics in the other two cases have the same form as the preceding

formula. If the first step regression includes an intercept, then  is replaced by the demeaned Brownian motion

is replaced by the demeaned Brownian motion  . If the first step regression includes a time trend, then

. If the first step regression includes a time trend, then  is replaced by the detrended Brownian motion. The critical values of the asymptotic distributions are tabulated in Phillips

and Ouliaris (1990) and MacKinnon (1991).

is replaced by the detrended Brownian motion. The critical values of the asymptotic distributions are tabulated in Phillips

and Ouliaris (1990) and MacKinnon (1991).

The residual based cointegration tests have a major shortcoming. Different choices of the dependent variable in the first

step OLS might produce contradictory results. This can be explained theoretically. If the dependent variable is in the cointegration

relationship, then the test is consistent against the alternative that there is cointegration. On the other hand, if the dependent

variable is not in the cointegration system, the OLS residual  do not converge to a stationary process. Changing the dependent variable is more likely to produce conflicting results in

finite samples.

do not converge to a stationary process. Changing the dependent variable is more likely to produce conflicting results in

finite samples.

Phillips-Perron Unit Root and Cointegration Testing

Besides the ADF test, there is another popular unit root test that is valid under general serial correlation and heteroscedasticity,

developed by Phillips (1987) and Phillips and Perron (1988). The tests are constructed using the AR(1) type regressions, unlike ADF tests, with corrected estimation of the long run

variance of  . In the case without intercept, consider the driftless random walk process

. In the case without intercept, consider the driftless random walk process

![\[ y_{t} = y_{t-1} + u_{t} \]](images/etsug_autoreg0447.png)

where the disturbances might be serially correlated with possible heteroscedasticity. Phillips and Perron (1988) proposed the unit root test of the OLS regression model,

![\[ y_{t} = {\rho }y_{t-1} + u_{t} \]](images/etsug_autoreg0109.png)

Denote the OLS residual by  . The asymptotic variance of

. The asymptotic variance of  can be estimated by using the truncation lag l.

can be estimated by using the truncation lag l.

![\[ \hat{{\lambda }} = \sum _{j=0}^{l}{{\kappa }_{j}[1- j/(l+1)]\hat{{\gamma }}_{j}} \]](images/etsug_autoreg0450.png)

where  ,

,  for

for  , and

, and  . This is a consistent estimator suggested by Newey and West (1987).

. This is a consistent estimator suggested by Newey and West (1987).

The variance of  can be estimated by

can be estimated by  . Let

. Let  be the variance estimate of the OLS estimator

be the variance estimate of the OLS estimator  . Then the Phillips-Perron

. Then the Phillips-Perron  test (zero mean case) is written

test (zero mean case) is written

![\[ \hat{\mr{Z}}_{\rho } = \mi{T} (\hat{{\rho }}-1) - \frac{1}{2} \mi{T} ^{2}\hat{{\sigma }}^{2}(\hat{{\lambda }}-\hat{{\gamma }}_{0}) / {s^{2}} \]](images/etsug_autoreg0460.png)

The  statistic is just the ordinary Dickey-Fuller

statistic is just the ordinary Dickey-Fuller  statistic with a correction term that accounts for the serial correlation. The correction term goes to zero asymptotically

if there is no serial correlation.

statistic with a correction term that accounts for the serial correlation. The correction term goes to zero asymptotically

if there is no serial correlation.

Note that  as

as  , which shows that the limiting distribution is skewed to the left.

, which shows that the limiting distribution is skewed to the left.

Let  be the

be the  statistic for

statistic for  . The Phillips-Perron

. The Phillips-Perron  (defined here as

(defined here as  ) test is written

) test is written

![\[ \hat{\mr{Z}}_\tau = ({\hat{{\gamma }}_{0}}/{\hat{{\lambda }}})^{1/2}t_{\hat{{\rho }}} - \frac{1}{2} {\mi{T} \hat{{\sigma }}(\hat{{\lambda }}-\hat{{\gamma }}_{0})} /{(s \hat{{\lambda }}^{1/2})} \]](images/etsug_autoreg0466.png)

To incorporate a constant intercept, the regression model  is used (single mean case) and null hypothesis the series is a driftless random walk with nonzero unconditional mean. To

incorporate a time trend, we used the regression model

is used (single mean case) and null hypothesis the series is a driftless random walk with nonzero unconditional mean. To

incorporate a time trend, we used the regression model  and under the null the series is a random walk with drift.

and under the null the series is a random walk with drift.

The limiting distributions of the test statistics for the zero mean case are

![\begin{align*} \hat{\mr{Z}}_{\rho } & \Rightarrow \frac{\frac{1}{2}\{ {\mi{B} (1)} ^{2}-1\} }{\int _{0}^{1}{{[\mi{B} (s)} ]^{2}ds}} \\ \hat{\mr{Z}}_\tau & \Rightarrow \frac{\frac{1}{2}\{ {[\mi{B} (1)} ]^{2}-1\} }{\{ \int _{0}^{1}{{[\mi{B} (x)} ]^{2}dx}\} ^{1/2} } \end{align*}](images/etsug_autoreg0469.png)

where B( ) is a standard Brownian motion.

) is a standard Brownian motion.

The limiting distributions of the test statistics for the intercept case are

![\begin{align*} \hat{Z}_{\rho } & \Rightarrow \frac{\frac{1}{2}\{ {[\mi{B} (1)} ]^{2}-1\} -\mi{B} (1)\int _{0}^{1}{\mi{B} (x)dx}}{\int _{0}^{1}{{[\mi{B} (x)} ]^{2}dx} -\left[{\int _{0}^{1}{\mi{B} (x)dx}}\right]^{2}} \\ {\hat{\mr{Z}}_\tau } & \Rightarrow \frac{\frac{1}{2}\{ {[\mi{B} (1)} ]^{2}-1\} -\mi{B} (1)\int _{0}^{1}{\mi{B} (x)dx}}{{\{ \int _{0}^{1}{{[\mi{B} (x)}]^{2}dx} -\left[{\int _{0}^{1}{\mi{B} (x)dx}}\right]^{2} }\} ^{1/2} } \end{align*}](images/etsug_autoreg0471.png)

Finally, The limiting distributions of the test statistics for the trend case are can be derived as

![\[ \begin{bmatrix} 0 & c & 0 \end{bmatrix} V^{-1} \begin{bmatrix} \mi{B} (1) \\ \left({\mi{B} (1)}^{2}-1 \right)/2 \\ \mi{B} (1)-\int _{0}^{1}{\mi{B} (x)dx} \end{bmatrix} \]](images/etsug_autoreg0472.png)

where  for

for  and

and  for

for  ,

,

![\[ V = \left[\begin{matrix} 1 & \int _{0}^{1}{\mi{B} (x)dx} & 1/2 \\ \int _{0}^{1}{\mi{B} (x)dx} & \int _{0}^{1}{{\mi{B} (x)} ^{2}dx} & \int _{0}^{1}{x\mi{B} (x)dx} \\ 1/2 & \int _{0}^{1}{x\mi{B} (x)dx} & 1/3 \end{matrix}\right] \]](images/etsug_autoreg0475.png)

![\[ Q = \left[\begin{matrix} 0 & c & 0 \end{matrix}\right] V^{-1} \left[\begin{matrix} 0 & c & 0 \end{matrix}\right]^ T \]](images/etsug_autoreg0476.png)

The finite sample performance of the PP test is not satisfactory ( see Hayashi (2000) ).

When several variables  are cointegrated, there exists a

are cointegrated, there exists a  cointegrating vector

cointegrating vector  such that

such that  is stationary and

is stationary and  is a nonzero vector. The residual based cointegration test assumes the following regression model:

is a nonzero vector. The residual based cointegration test assumes the following regression model:

![\[ y_{t} = {\beta }_{1} + {\mb{x} _{t}’}{\beta } + u_{t} \]](images/etsug_autoreg0413.png)

where  ,

,  , and

, and  = (

= (

,

, ,

,

. You can estimate the consistent cointegrating vector by using OLS if all variables are difference stationary — that is,

I(1). The estimated cointegrating vector is

. You can estimate the consistent cointegrating vector by using OLS if all variables are difference stationary — that is,

I(1). The estimated cointegrating vector is  . The Phillips-Ouliaris test is computed using the OLS residuals from the preceding regression model, and it uses the PP unit

root tests

. The Phillips-Ouliaris test is computed using the OLS residuals from the preceding regression model, and it uses the PP unit

root tests  and

and  developed in Phillips (1987), although in Phillips and Ouliaris (1990) the asymptotic distributions of some other leading unit root tests are also derived. The null hypothesis is no cointegration.

developed in Phillips (1987), although in Phillips and Ouliaris (1990) the asymptotic distributions of some other leading unit root tests are also derived. The null hypothesis is no cointegration.

You need to refer to the tables by Phillips and Ouliaris (1990) to obtain the p-value of the cointegration test. Before you apply the cointegration test, you may want to perform the unit root test for each variable (see the option STATIONARITY= ).

As in the Engle-Granger cointegration tests, the Phillips-Ouliaris test can give conflicting results for different choices of the regressand. There are other cointegration tests that are invariant to the order of the variables, including Johansen (1988), Johansen (1991), Stock and Watson (1988).

ERS and Ng-Perron Unit Root Tests

As mentioned earlier, ADF and PP both suffer severe size distortion and low power. There is a class of newer tests that improve both size and power. These are sometimes called efficient unit root tests, and among them tests by Elliott, Rothenberg, and Stock (1996) and Ng and Perron (2001) are prominent.

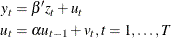

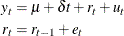

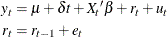

Elliott, Rothenberg, and Stock (1996) consider the data generating process

where  is either

is either  or

or  and

and  is an unobserved stationary zero-mean process with positive spectral density at zero frequency. The null hypothesis is

is an unobserved stationary zero-mean process with positive spectral density at zero frequency. The null hypothesis is  , and the alternative is

, and the alternative is  . The key idea of Elliott, Rothenberg, and Stock (1996) is to study the asymptotic power and asymptotic power envelope of some new tests. Asymptotic power is defined with a sequence

of local alternatives. For a fixed alternative hypothesis, the power of a test usually goes to one when sample size goes to

infinity; however, this says nothing about the finite sample performance. On the other hand, when the data generating process

under the alternative moves closer to the null hypothesis as the sample size increases, the power does not necessarily converge

to one. The local-to-unity alternatives in ERS are

. The key idea of Elliott, Rothenberg, and Stock (1996) is to study the asymptotic power and asymptotic power envelope of some new tests. Asymptotic power is defined with a sequence

of local alternatives. For a fixed alternative hypothesis, the power of a test usually goes to one when sample size goes to

infinity; however, this says nothing about the finite sample performance. On the other hand, when the data generating process

under the alternative moves closer to the null hypothesis as the sample size increases, the power does not necessarily converge

to one. The local-to-unity alternatives in ERS are

![\[ \alpha = 1+ \frac{c}{T} \]](images/etsug_autoreg0485.png)

and the power against the local alternatives has a limit as T goes to infinity, which is called asymptotic power. This value is strictly between 0 and 1. Asymptotic power indicates the adequacy of a test to distinguish small deviations from the null hypothesis.

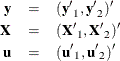

Define

Let  be the sum of squared residuals from a least squares regression of

be the sum of squared residuals from a least squares regression of  on

on  . Then the point optimal test against the local alternative

. Then the point optimal test against the local alternative  has the form

has the form

![\[ P_ T^{GLS} =\frac{S(\bar{\alpha }) -\bar{\alpha }S(1)}{\hat{\omega }^2} \]](images/etsug_autoreg0491.png)

where  is an estimator for

is an estimator for  . The autoregressive (AR) estimator is used for

. The autoregressive (AR) estimator is used for  (Elliott, Rothenberg, and Stock 1996, equations (13) and (14)):

(Elliott, Rothenberg, and Stock 1996, equations (13) and (14)):

![\[ \hat{\omega }^2 = \frac{\hat{\sigma }^2_{\eta }}{(1-\sum _{i=1}^{p}{\hat{a}_ i})^2} \]](images/etsug_autoreg0494.png)

where  and

and  are OLS estimates from the regression

are OLS estimates from the regression

![\[ \Delta y_ t = a_0 y_{t-1} + \sum _{i=1}^ p a_ i \Delta y_{t-i} + a_{p+1} + \eta _{t} \]](images/etsug_autoreg0497.png)

where p is selected according to the Schwarz Bayesian information criterion. The test rejects the null when  is small. The asymptotic power function for the point optimal test that is constructed with

is small. The asymptotic power function for the point optimal test that is constructed with  under local alternatives with c is denoted by

under local alternatives with c is denoted by  . Then the power envelope is

. Then the power envelope is  because the test formed with

because the test formed with  is the most powerful against the alternative

is the most powerful against the alternative  . In other words, the asymptotic function

. In other words, the asymptotic function  is always below the power envelope

is always below the power envelope  except that at one point,

except that at one point,  , they are tangent. Elliott, Rothenberg, and Stock (1996) show that choosing some specific values for

, they are tangent. Elliott, Rothenberg, and Stock (1996) show that choosing some specific values for  can cause the asymptotic power function

can cause the asymptotic power function  of the point optimal test to be very close to the power envelope. The optimal

of the point optimal test to be very close to the power envelope. The optimal  is

is  when

when  , and

, and  when

when  . This choice of

. This choice of  corresponds to the tangent point where

corresponds to the tangent point where  . This is also true of the DF-GLS test.

. This is also true of the DF-GLS test.

Elliott, Rothenberg, and Stock (1996) also propose the DF-GLS test, given by the t statistic for testing  in the regression

in the regression

where  is obtained in a first step detrending

is obtained in a first step detrending

![\[ y_ t^ d =y_ t -\hat{\beta }_{\bar{\alpha }} ’ z_ t \]](images/etsug_autoreg0512.png)

and  is least squares regression coefficient of

is least squares regression coefficient of  on

on  . Regarding the lag length selection, Elliott, Rothenberg, and Stock (1996) favor the Schwarz Bayesian information criterion. The optimal selection of the lag length p and the estimation of

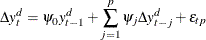

. Regarding the lag length selection, Elliott, Rothenberg, and Stock (1996) favor the Schwarz Bayesian information criterion. The optimal selection of the lag length p and the estimation of  is further discussed in Ng and Perron (2001). The lag length is selected from the interval

is further discussed in Ng and Perron (2001). The lag length is selected from the interval ![$[0, p_{max}]$](images/etsug_autoreg0515.png) for some fixed

for some fixed  by using the modified Akaike’s information criterion,

by using the modified Akaike’s information criterion,

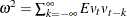

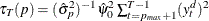

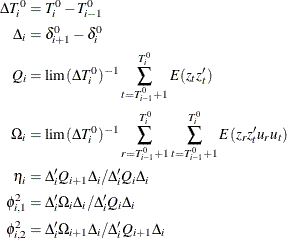

where  and

and  . For fixed lag length p, an estimate of

. For fixed lag length p, an estimate of  is given by

is given by

![\[ \hat{\omega }^2= \frac{(T-1-p)^{-1} \sum _{t=p+2}^{T} \hat{\epsilon }_{tp}^2 }{ \left(1- \sum _{j=1}^ p \hat{\psi }_ j \right)^2} \]](images/etsug_autoreg0520.png)

DF-GLS is indeed a superior unit root test, according to Stock (1994), Schwert (1989), and Elliott, Rothenberg, and Stock (1996). In terms of the size of the test, DF-GLS is almost as good as the ADF t test  and better than the PP

and better than the PP  and

and  test. In addition, the power of the DF-GLS test is greater than that of both the ADF t test and the

test. In addition, the power of the DF-GLS test is greater than that of both the ADF t test and the  -test.

-test.

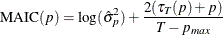

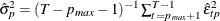

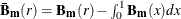

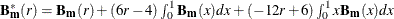

Ng and Perron (2001) also apply GLS detrending to obtain the following M-tests:

The first one is a modified version of the Phillips-Perron  test,

test,

![\[ MZ_\rho = \mr{Z}_\rho +\frac T2 (\hat{\alpha }-1)^2 \]](images/etsug_autoreg0526.png)

where the detrended data  is used. The second is a modified Bhargava (1986)

is used. The second is a modified Bhargava (1986)  test statistic. The third can be perceived as a modified Phillips-Perron

test statistic. The third can be perceived as a modified Phillips-Perron  statistic because of the relationship

statistic because of the relationship  .

.

The modified point optimal tests that use the GLS detrended data are

![\[ \begin{array}{ll} MP_ T^{GLS}=\frac{ \bar{c}^2(T-1)^{-2} \sum _{t=1}^{T-1} (y_{t}^ d)^2 -\bar{c} (T-1)^{-1} (y_{T}^ d)^2}{\hat{\omega }^2} \quad & \text {for} \; z_ t =1 \\ MP_ T^{GLS}=\frac{ \bar{c}^2(T-1)^{-2} \sum _{t=1}^{T-1} (y_{t}^ d)^2 +(1-\bar{c}) (T-1)^{-1} (y_{T}^ d)^2}{\hat{\omega }^2}\quad & \text {for}\; z_ t =(1,t) \\ \end{array} \]](images/etsug_autoreg0531.png)

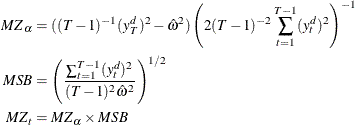

The DF-GLS test and the  test have the same limiting distribution:

test have the same limiting distribution:

![\[ \begin{array}{ll} \text {DF-GLS} \approx MZ_ t \Rightarrow 0.5\frac{(J_ c(1)^2 -1)}{ \left(\int _0^1 J_ c(r)^2dr \right)^{1/2}} \quad & \text {for} \; z_ t =1\\ \text {DF-GLS} \approx MZ_ t \Rightarrow 0.5\frac{(V_{c,\bar{c}}(1)^2 -1)}{\left( \int _0^1 V_{c,\bar{c}}(r)^2dr \right)^{1/2}} \quad & \text {for}\; z_ t =(1,t)\\ \end{array} \]](images/etsug_autoreg0533.png)

The point optimal test and the modified point optimal test have the same limiting distribution:

![\[ \begin{array}{ll} P_ T^{GLS} \approx MP_ T^{GLS} \Rightarrow \bar{c}^2 \int _0^1 J_ c(r)^2 dr -\bar{c} J_ c(1)^2 \quad & \text {for} \; z_ t =1\\ P_ T^{GLS} \approx MP_ T^{GLS} \Rightarrow \bar{c}^2 \int _0^1 V_{c,\bar{c}}(r)^2 dr +(1-\bar{c})V_{c,\bar{c}}(1)^2 \quad & \text {for}\; z_ t =(1,t) \end{array} \]](images/etsug_autoreg0534.png)

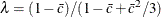

where  is a standard Brownian motion and

is a standard Brownian motion and  is an Ornstein-Uhlenbeck process defined by

is an Ornstein-Uhlenbeck process defined by  with

with  ,

, ![$ V_{c,\bar{c}}(r) = J_ c(r) -r\left[ \lambda J_ c(1) +3(1-\lambda ) \int _0^1 s J_ c(s) ds \right]$](images/etsug_autoreg0538.png) , and

, and  .

.

Overall, the M-tests have the smallest size distortion, with the ADF t test having the next smallest. The ADF  -test,

-test,  , and

, and  have the largest size distortion. In addition, the power of the DF-GLS and M-tests is greater than that of the ADF t test and

have the largest size distortion. In addition, the power of the DF-GLS and M-tests is greater than that of the ADF t test and  -test. The ADF

-test. The ADF  has more severe size distortion than the ADF

has more severe size distortion than the ADF  , but it has more power for a fixed lag length.

, but it has more power for a fixed lag length.

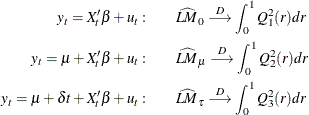

Kwiatkowski, Phillips, Schmidt, and Shin (KPSS) Unit Root Test and Shin Cointegration Test

There are fewer tests available for the null hypothesis of trend stationarity I(0). The main reason is the difficulty of theoretical

development. The KPSS test was introduced in Kwiatkowski et al. (1992) to test the null hypothesis that an observable series is stationary around a deterministic trend. For consistency, the notation

used here differs from the notation in the original paper. The setup of the problem is as follows: it is assumed that the

series is expressed as the sum of the deterministic trend, random walk  , and stationary error

, and stationary error  ; that is,

; that is,

where  iid

iid  , and an intercept

, and an intercept  (in the original paper, the authors use

(in the original paper, the authors use  instead of

instead of  ; here we assume

; here we assume  .) The null hypothesis of trend stationarity is specified by

.) The null hypothesis of trend stationarity is specified by  , while the null of level stationarity is the same as above with the model restriction

, while the null of level stationarity is the same as above with the model restriction  . Under the alternative that

. Under the alternative that  , there is a random walk component in the observed series

, there is a random walk component in the observed series  .

.

Under stronger assumptions of normality and iid of  and

and  , a one-sided LM test of the null that there is no random walk (

, a one-sided LM test of the null that there is no random walk ( ) can be constructed as follows:

) can be constructed as follows:

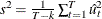

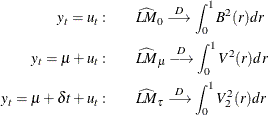

Under the null hypothesis,  can be estimated by ordinary least squares regression of

can be estimated by ordinary least squares regression of  on an intercept and the time trend. Following the original work of Kwiatkowski et al. (1992), under the null (

on an intercept and the time trend. Following the original work of Kwiatkowski et al. (1992), under the null ( ), the

), the  statistic converges asymptotically to three different distributions depending on whether the model is trend-stationary, level-stationary

(

statistic converges asymptotically to three different distributions depending on whether the model is trend-stationary, level-stationary

( ), or zero-mean stationary (

), or zero-mean stationary ( ,

,  ). The trend-stationary model is denoted by subscript

). The trend-stationary model is denoted by subscript  and the level-stationary model is denoted by subscript

and the level-stationary model is denoted by subscript  . The case when there is no trend and zero intercept is denoted as 0. The last case, although rarely used in practice, is

considered in Hobijn, Franses, and Ooms (2004):

. The case when there is no trend and zero intercept is denoted as 0. The last case, although rarely used in practice, is

considered in Hobijn, Franses, and Ooms (2004):

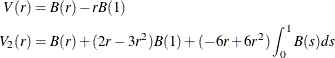

with

where  is a Brownian motion (Wiener process) and

is a Brownian motion (Wiener process) and  is convergence in distribution.

is convergence in distribution.  is a standard Brownian bridge, and

is a standard Brownian bridge, and  is a second-level Brownian bridge.

is a second-level Brownian bridge.

Using the notation of Kwiatkowski et al. (1992), the  statistic is named as

statistic is named as  . This test depends on the computational method used to compute the long-run variance

. This test depends on the computational method used to compute the long-run variance  ; that is, the window width l and the kernel type

; that is, the window width l and the kernel type  . You can specify the kernel used in the test by using the KERNEL option:

. You can specify the kernel used in the test by using the KERNEL option:

-

Newey-West/Bartlett (KERNEL=NW

BART) (this is the default)

BART) (this is the default)

![\[ w(s,l)=1-\frac{s}{l+1} \]](images/etsug_autoreg0102.png)

-

quadratic spectral (KERNEL=QS)

![\[ w(s,l) = \tilde{w}\left(\frac sl\right)=\tilde{w}(x)=\frac{25}{12\pi ^2 x^2}\left(\frac{\sin \left(6\pi x/5\right)}{6\pi x/5}-\cos \left( \frac65\pi x\right)\right) \]](images/etsug_autoreg0567.png)

You can specify the number of lags, l, in three different ways:

-

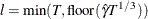

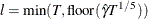

Schwert (SCHW = c) (default for NW, c=12)

![\[ l=\max \left\{ 1,\text {floor}\left[c\left(\frac{T}{100}\right)^{1/4}\right]\right\} \]](images/etsug_autoreg0104.png)

-

manual (LAG = l)

-

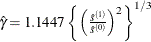

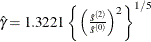

automatic selection (AUTO) (default for QS), from Hobijn, Franses, and Ooms (2004). The number of lags, l, is calculated as in the following table:

KERNEL=NW

KERNEL=QS

where T is the number of observations,

if

if  and 0 otherwise, and

and 0 otherwise, and  .

.

Simulation evidence shows that the KPSS has size distortion in finite samples. For an example, see Caner and Kilian (2001). The power is reduced when the sample size is large; this can be derived theoretically (see Breitung (1995)). Another problem of the KPSS test is that the power depends on the truncation lag used in the Newey-West estimator of the

long-run variance  .

.

Shin (1994) extends the KPSS test to incorporate the regressors to be a cointegration test. The cointegrating regression becomes

where  and

and  are scalar and m-vector

are scalar and m-vector  variables. There are still three cases of cointegrating regressions: without intercept and trend, with intercept only, and

with intercept and trend. The null hypothesis of the cointegration test is the same as that for the KPSS test,

variables. There are still three cases of cointegrating regressions: without intercept and trend, with intercept only, and

with intercept and trend. The null hypothesis of the cointegration test is the same as that for the KPSS test,  . The test statistics for cointegration in the three cases of cointegrating regressions are exactly the same as those in the

KPSS test; these test statistics are then ignored here. Under the null hypothesis, the statistics converge asymptotically

to three different distributions:

. The test statistics for cointegration in the three cases of cointegrating regressions are exactly the same as those in the

KPSS test; these test statistics are then ignored here. Under the null hypothesis, the statistics converge asymptotically

to three different distributions:

with

where  and

and  are independent scalar and m-vector standard Brownian motion, and

are independent scalar and m-vector standard Brownian motion, and  is convergence in distribution.

is convergence in distribution.  is a standard Brownian bridge,

is a standard Brownian bridge,  is a Brownian bridge of a second-level,

is a Brownian bridge of a second-level,  is an m-vector standard demeaned Brownian motion, and

is an m-vector standard demeaned Brownian motion, and  is an m-vector standard demeaned and detrended Brownian motion.

is an m-vector standard demeaned and detrended Brownian motion.

The p-values that are reported for the KPSS test and Shin test are calculated via a Monte Carlo simulation of the limiting distributions, using a sample size of 2,000 and 1,000,000 replications.

Testing for Statistical Independence

Independence tests are widely used in model selection, residual analysis, and model diagnostics because models are usually based on the assumption of independently distributed errors. If a given time series (for example, a series of residuals) is independent, then no deterministic model is necessary for this completely random process; otherwise, there must exist some relationship in the series to be addressed. In the following section, four independence tests are introduced: the BDS test, the runs test, the turning point test, and the rank version of von Neumann ratio test.

BDS Test

Brock, Dechert, and Scheinkman (1987) propose a test (BDS test) of independence based on the correlation dimension. Brock et al. (1996) show that the first-order asymptotic distribution of the test statistic is independent of the estimation error provided

that the parameters of the model under test can be estimated  -consistently. Hence, the BDS test can be used as a model selection tool and as a specification test.

-consistently. Hence, the BDS test can be used as a model selection tool and as a specification test.

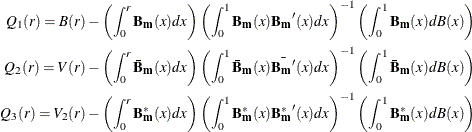

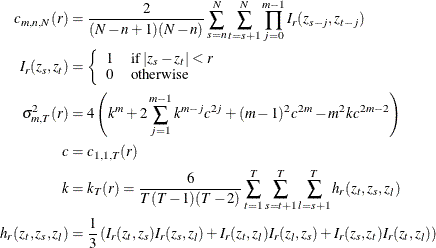

Given the sample size T, the embedding dimension m, and the value of the radius r, the BDS statistic is

![\[ S_{\mr{BDS}}(T,m,r)=\sqrt {T-m+1}\frac{c_{m,m,T}(r)-c_{1,m,T}^{m}(r)}{\sigma _{m,T}(r)} \]](images/etsug_autoreg0589.png)

where

The statistic has a standard normal distribution if the sample size is large enough. For small sample size, the distribution can be approximately obtained through simulation. Kanzler (1999) has a comprehensive discussion on the implementation and empirical performance of BDS test.

Runs Test and Turning Point Test

The runs test and turning point test are two widely used tests for independence (Cromwell, Labys, and Terraza 1994).

The runs test needs several steps. First, convert the original time series into the sequence of signs,  , that is, map

, that is, map  into

into  where

where  is the sample mean of

is the sample mean of  and

and  is "

is " " if x is nonnegative and "

" if x is nonnegative and " " if x is negative. Second, count the number of runs, R, in the sequence. A run of a sequence is a maximal non-empty segment of the sequence that consists of adjacent equal elements.

For example, the following sequence contains

" if x is negative. Second, count the number of runs, R, in the sequence. A run of a sequence is a maximal non-empty segment of the sequence that consists of adjacent equal elements.

For example, the following sequence contains  runs:

runs:

![\[ \underbrace{+++}_{1}\underbrace{---}_{1}\underbrace{++}_{1}\underbrace{--}_{1} \underbrace{+}_{1}\underbrace{-}_{1}\underbrace{+++++}_{1}\underbrace{--}_{1} \]](images/etsug_autoreg0599.png)

Third, count the number of pluses and minuses in the sequence and denote them as  and

and  , respectively. In the preceding example sequence,

, respectively. In the preceding example sequence,  and

and  . Note that the sample size

. Note that the sample size  . Finally, compute the statistic of runs test,

. Finally, compute the statistic of runs test,

![\[ S_{\mr{runs}}=\frac{R-\mu }{\sigma } \]](images/etsug_autoreg0605.png)

where

![\[ \mu =\frac{2 N_{+} N_{-}}{T}+1 \]](images/etsug_autoreg0606.png)

![\[ \sigma ^2=\frac{(\mu -1)(\mu -2)}{T-1} \]](images/etsug_autoreg0607.png)

The statistic of the turning point test is defined as follows:

![\[ S_{\mr{TP}}=\frac{\sum _{t=2}^{T-1}{TP_ t}-2(T-2)/3}{\sqrt {(16T-29)/90}} \]](images/etsug_autoreg0608.png)

where the indicator function of the turning point  is 1 if

is 1 if  or

or  (that is, both the previous and next values are greater or less than the current value); otherwise, 0.

(that is, both the previous and next values are greater or less than the current value); otherwise, 0.

The statistics of both the runs test and the turning point test have the standard normal distribution under the null hypothesis of independence.

Rank Version of the von Neumann Ratio Test

Because the runs test completely ignores the magnitudes of the observations, Bartels (1982) proposes a rank version of the von Neumann Ratio test for independence,

![\[ S_{\mr{RVN}}=\frac{\sqrt {T}}{2}\left(\frac{\sum _{t=1}^{T-1}{(R_{t+1}-R_{t})^2}}{(T(T^2-1)/12)}-2\right) \]](images/etsug_autoreg0612.png)

where  is the rank of tth observation in the sequence of T observations. For large samples, the statistic follows the standard normal distribution under the null hypothesis of independence.

For small samples of size between 11 and 100, the critical values that have been simulated would be more precise. For samples

of size less than or equal to 10, the exact CDF of the statistic is available. Hence, the VNRRANK=(PVALUE=SIM) option is recommended

for small samples whose size is no more than 100, although it might take longer to obtain the p-value than if you use the VNRRANK=(PVALUE=DIST) option.

is the rank of tth observation in the sequence of T observations. For large samples, the statistic follows the standard normal distribution under the null hypothesis of independence.

For small samples of size between 11 and 100, the critical values that have been simulated would be more precise. For samples

of size less than or equal to 10, the exact CDF of the statistic is available. Hence, the VNRRANK=(PVALUE=SIM) option is recommended

for small samples whose size is no more than 100, although it might take longer to obtain the p-value than if you use the VNRRANK=(PVALUE=DIST) option.

Testing for Normality

Based on skewness and kurtosis, Jarque and Bera (1980) calculated the test statistic

![\[ T_{N} = \left[\frac{N}{6} b^{2}_{1}+ \frac{N}{24} ( b_{2}-3)^{2}\right] \]](images/etsug_autoreg0614.png)

where

![\[ b_{1} = \frac{\sqrt {N}\sum _{t=1}^{N}{\hat{u}^{3}_{t}}}{{\left(\sum _{t=1}^ N \hat{u}^{2}_{t}\right)}^{\frac{3}{2}}} \]](images/etsug_autoreg0615.png)

![\[ b_{2} = \frac{N\sum _{t=1}^{N}{\hat{u}^{4}_{t}}}{\left(\sum _{t=1}^{N}{\hat{u}^{2}_{t}}\right)^{2}} \]](images/etsug_autoreg0616.png)

The

(2) distribution gives an approximation to the normality test

(2) distribution gives an approximation to the normality test  .

.

When the GARCH model is estimated, the normality test is obtained using the standardized residuals  . The normality test can be used to detect misspecification of the family of ARCH models.

. The normality test can be used to detect misspecification of the family of ARCH models.

Testing for Linear Dependence

Generalized Durbin-Watson Tests

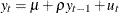

Consider the following linear regression model:

![\[ \mb{Y} =\mb{X}{\bbeta }+{\bnu } \]](images/etsug_autoreg0620.png)

where  is an

is an  data matrix,

data matrix,  is a

is a  coefficient vector, and

coefficient vector, and  is a

is a  disturbance vector. The error term

disturbance vector. The error term  is assumed to be generated by the jth-order autoregressive process

is assumed to be generated by the jth-order autoregressive process  where

where  ,

,  is a sequence of independent normal error terms with mean 0 and variance

is a sequence of independent normal error terms with mean 0 and variance  . Usually, the Durbin-Watson statistic is used to test the null hypothesis

. Usually, the Durbin-Watson statistic is used to test the null hypothesis  against

against  . Vinod (1973) generalized the Durbin-Watson statistic:

. Vinod (1973) generalized the Durbin-Watson statistic:

![\[ d_{j}=\frac{\sum _{t=j+1}^{N}{(\hat{{\nu }}_{t}-\hat{{\nu }}_{t-j})^{2} }}{\sum _{t=1}^{N}{\hat{{\nu }}_{t}^{2}} } \]](images/etsug_autoreg0630.png)

where  are OLS residuals. Using the matrix notation,

are OLS residuals. Using the matrix notation,

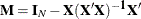

![\[ d_{j}=\frac{\mb{{Y}} '\mb{M} \mb{A} _{j}' \mb{A} _{j}\mb{M} \mb{{Y}} }{\mb{{Y}} '\mb{M} \mb{{Y}} } \]](images/etsug_autoreg0632.png)

where  and

and  is a

is a  matrix:

matrix:

![\[ \mb{A} _{j} = \begin{bmatrix} -1 & 0 & {\cdots } & 0 & 1 & 0 & {\cdots } & 0 \\ 0 & -1 & 0 & {\cdots } & 0 & 1 & 0 & {\cdots } \\ {\vdots } & {\vdots } & {\vdots } & {\vdots } & {\vdots } & {\vdots } & {\vdots } & {\vdots } \\ 0 & {\cdots } & 0 & -1 & 0 & {\cdots } & 0 & 1 \end{bmatrix} \]](images/etsug_autoreg0636.png)

and there are  zeros between

zeros between  and 1 in each row of matrix

and 1 in each row of matrix  .

.

The QR factorization of the design matrix  yields a

yields a  orthogonal matrix

orthogonal matrix  :

:

![\[ \mb{X} = \mb{QR} \]](images/etsug_autoreg0642.png)

where R is an  upper triangular matrix. There exists an

upper triangular matrix. There exists an  submatrix of

submatrix of  such that

such that  and

and  . Consequently, the generalized Durbin-Watson statistic is stated as a ratio of two quadratic forms:

. Consequently, the generalized Durbin-Watson statistic is stated as a ratio of two quadratic forms:

![\[ d_{j}=\frac{\sum _{l=1}^{n}{{\lambda }_{jl} {\xi }_{l}}^{2}}{\sum _{l=1}^{n}{{\xi }_{l}^{2}}} \]](images/etsug_autoreg0646.png)

where  are upper n eigenvalues of

are upper n eigenvalues of  and

and  is a standard normal variate, and

is a standard normal variate, and  . These eigenvalues are obtained by a singular value decomposition of

. These eigenvalues are obtained by a singular value decomposition of  (Golub and Van Loan 1989; Savin and White 1978).

(Golub and Van Loan 1989; Savin and White 1978).

The marginal probability (or p-value) for  given

given  is

is

![\[ \mr{Prob}(\frac{\sum _{l=1}^{n}{{\lambda }_{jl} {\xi }_{l}^{2}}}{\sum _{l=1}^{n}{{\xi }_{l}^{2}}} < c_{0})=\mr{Prob}(q_{j} < 0) \]](images/etsug_autoreg0654.png)

where

![\[ q_{j}=\sum _{l=1}^{n}{( {\lambda }_{jl}-c_{0}) {\xi }_{l}^{2}} \]](images/etsug_autoreg0655.png)

When the null hypothesis  holds, the quadratic form

holds, the quadratic form  has the characteristic function

has the characteristic function

![\[ {\phi }_{j}(t)={\prod _{l=1}^ n (1-2( {\lambda }_{jl}-c_{0})it)^{-1/2}} \]](images/etsug_autoreg0658.png)

The distribution function is uniquely determined by this characteristic function:

![\[ F(x) = \frac{1}{2} + \frac{1}{2{\pi }}\int _{0}^{{\infty }}{\frac{e^{itx}{\phi }_{j}(-t)- e^{-itx}{\phi }_{j}(t)}{it}dt} \]](images/etsug_autoreg0659.png)

For example, to test  given

given  against

against  , the marginal probability (p-value) can be used:

, the marginal probability (p-value) can be used:

![\[ F(0) = \frac{1}{2} + \frac{1}{2{\pi }}\int _{0}^{{\infty }}{\frac{({\phi }_{4}(-t)-{\phi }_{4}(t))}{it}dt} \]](images/etsug_autoreg0663.png)

where

![\[ {\phi }_{4}(t) = {\prod _{l=1}^ n (1-2({\lambda }_{4\mi{l} }-\hat{d}_{4})it )^{-1/2}} \]](images/etsug_autoreg0664.png)

and  is the calculated value of the fourth-order Durbin-Watson statistic.

is the calculated value of the fourth-order Durbin-Watson statistic.

In the Durbin-Watson test, the marginal probability indicates positive autocorrelation ( ) if it is less than the level of significance (

) if it is less than the level of significance ( ), while you can conclude that a negative autocorrelation (

), while you can conclude that a negative autocorrelation ( ) exists if the marginal probability based on the computed Durbin-Watson statistic is greater than

) exists if the marginal probability based on the computed Durbin-Watson statistic is greater than  . Wallis (1972) presented tables for bounds tests of fourth-order autocorrelation, and Vinod (1973) has given tables for a 5% significance level for orders two to four. Using the AUTOREG procedure, you can calculate the

exact p-values for the general order of Durbin-Watson test statistics. Tests for the absence of autocorrelation of order p can be performed sequentially; at the jth step, test

. Wallis (1972) presented tables for bounds tests of fourth-order autocorrelation, and Vinod (1973) has given tables for a 5% significance level for orders two to four. Using the AUTOREG procedure, you can calculate the

exact p-values for the general order of Durbin-Watson test statistics. Tests for the absence of autocorrelation of order p can be performed sequentially; at the jth step, test  given

given  against

against  . However, the size of the sequential test is not known.

. However, the size of the sequential test is not known.

The Durbin-Watson statistic is computed from the OLS residuals, while that of the autoregressive error model uses residuals that are the difference between the predicted values and the actual values. When you use the Durbin-Watson test from the residuals of the autoregressive error model, you must be aware that this test is only an approximation. See Autoregressive Error Model earlier in this chapter. If there are missing values, the Durbin-Watson statistic is computed using all the nonmissing values and ignoring the gaps caused by missing residuals. This does not affect the significance level of the resulting test, although the power of the test against certain alternatives may be adversely affected. Savin and White (1978) have examined the use of the Durbin-Watson statistic with missing values.

The Durbin-Watson probability calculations have been enhanced to compute the p-value of the generalized Durbin-Watson statistic for large sample sizes. Previously, the Durbin-Watson probabilities were only calculated for small sample sizes.

Consider the following linear regression model:

![\[ \mb{Y} = \mb{X} \beta + \mb{u} \]](images/etsug_autoreg0672.png)

![\[ u_ t + \varphi _ j u_{t-j} = \epsilon _ t, \qquad t = 1, \dots , N \]](images/etsug_autoreg0673.png)

where  is an

is an  data matrix,

data matrix,  is a

is a  coefficient vector,

coefficient vector,  is a

is a  disturbance vector, and

disturbance vector, and  is a sequence of independent normal error terms with mean 0 and variance

is a sequence of independent normal error terms with mean 0 and variance  .

.

The generalized Durbin-Watson statistic is written as

![\[ \mr{DW}_ j = \frac{\hat{\mb{u}}^\prime \mb{A}_ j^\prime \mb{A}_ j \hat{\mb{u}} }{\hat{\mb{u}}^\prime \hat{\mb{u}} } \]](images/etsug_autoreg0678.png)

where  is a vector of OLS residuals and

is a vector of OLS residuals and  is a

is a  matrix. The generalized Durbin-Watson statistic DW

matrix. The generalized Durbin-Watson statistic DW can be rewritten as

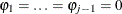

can be rewritten as

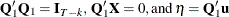

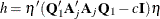

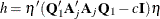

![\[ \mr{DW} _ j = \frac{\mb{Y} ^\prime \mb{M} \mb{A} _ j^\prime \mb{A} _ j \mb{M} \mb{Y} }{\mb{Y} ^\prime \mb{M} \mb{Y} } = \frac{ \eta ^\prime ( \mb{Q} _1^\prime \mb{A} _ j^\prime \mb{A} _ j \mb{Q} _1 ) \eta }{\eta ^\prime \eta } \]](images/etsug_autoreg0683.png)

where  .

.

The marginal probability for the Durbin-Watson statistic is

![\[ \Pr (\mr{DW} _ j < c) = \Pr (h < 0) \]](images/etsug_autoreg0685.png)

where  .

.

The p-value or the marginal probability for the generalized Durbin-Watson statistic is computed by numerical inversion of the characteristic

function  of the quadratic form

of the quadratic form  . The trapezoidal rule approximation to the marginal probability

. The trapezoidal rule approximation to the marginal probability  is

is

![\[ \Pr (h < 0) = \frac{1}{2} - \sum _{k=0}^ K \frac{\mr{Im} \left[ \phi ((k + \frac{1}{2})\Delta ) \right]}{\pi (k + \frac{1}{2}) } + \mr{E} _ I(\Delta ) + \mr{E} _ T(K) \]](images/etsug_autoreg0689.png)

where ![$\mr{Im} \left[ \phi (\cdot ) \right]$](images/etsug_autoreg0690.png) is the imaginary part of the characteristic function,

is the imaginary part of the characteristic function,  and

and  are integration and truncation errors, respectively. Refer to Davies (1973) for numerical inversion of the characteristic function.

are integration and truncation errors, respectively. Refer to Davies (1973) for numerical inversion of the characteristic function.

Ansley, Kohn, and Shively (1992) proposed a numerically efficient algorithm that requires O(N) operations for evaluation of the characteristic function  . The characteristic function is denoted as

. The characteristic function is denoted as

where  and

and  . By applying the Cholesky decomposition to the complex matrix

. By applying the Cholesky decomposition to the complex matrix  , you can obtain the lower triangular matrix

, you can obtain the lower triangular matrix  that satisfies

that satisfies  . Therefore, the characteristic function can be evaluated in O(N) operations by using the following formula:

. Therefore, the characteristic function can be evaluated in O(N) operations by using the following formula:

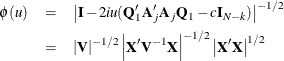

![\[ \phi (u) = \left| \mb{G} \right|^{-1} \left| \mb{X} ^{\ast \prime } \mb{X} ^\ast \right|^{-1/2} \left| \mb{X} ^\prime \mb{X} \right|^{1/2} \]](images/etsug_autoreg0698.png)

where  . Refer to Ansley, Kohn, and Shively (1992) for more information on evaluation of the characteristic function.

. Refer to Ansley, Kohn, and Shively (1992) for more information on evaluation of the characteristic function.

Tests for Serial Correlation with Lagged Dependent Variables

When regressors contain lagged dependent variables, the Durbin-Watson statistic ( ) for the first-order autocorrelation is biased toward 2 and has reduced power. Wallis (1972) shows that the bias in the Durbin-Watson statistic (

) for the first-order autocorrelation is biased toward 2 and has reduced power. Wallis (1972) shows that the bias in the Durbin-Watson statistic ( ) for the fourth-order autocorrelation is smaller than the bias in

) for the fourth-order autocorrelation is smaller than the bias in  in the presence of a first-order lagged dependent variable. Durbin (1970) proposes two alternative statistics (Durbin h and t ) that are asymptotically equivalent. The h statistic is written as

in the presence of a first-order lagged dependent variable. Durbin (1970) proposes two alternative statistics (Durbin h and t ) that are asymptotically equivalent. The h statistic is written as

![\[ h = \hat{{\rho }}\sqrt {N / (1-N\hat{V})} \]](images/etsug_autoreg0702.png)

where  and

and  is the least squares variance estimate for the coefficient of the lagged dependent variable. Durbin’s t test consists of regressing the OLS residuals

is the least squares variance estimate for the coefficient of the lagged dependent variable. Durbin’s t test consists of regressing the OLS residuals  on explanatory variables and

on explanatory variables and  and testing the significance of the estimate for coefficient of

and testing the significance of the estimate for coefficient of  .

.

Inder (1984) shows that the Durbin-Watson test for the absence of first-order autocorrelation is generally more powerful than the h test in finite samples. Refer to Inder (1986) and King and Wu (1991) for the Durbin-Watson test in the presence of lagged dependent variables.

Godfrey LM test

The GODFREY= option in the MODEL statement produces the Godfrey Lagrange multiplier test for serially correlated residuals for each equation (Godfrey 1978b, 1978a). r is the maximum autoregressive order, and specifies that Godfrey’s tests be computed for lags 1 through r. The default number of lags is four.

Testing for Nonlinear Dependence: Ramsey’s Reset Test

Ramsey’s reset test is a misspecification test associated with the functional form of models to check whether power transforms need to be added to a model. The original linear model, henceforth called the restricted model, is

![\[ y_ t={\mb{x_ t}} \beta + u_ t \]](images/etsug_autoreg0707.png)

To test for misspecification in the functional form, the unrestricted model is

![\[ y_ t={\mb{x_ t}} \beta + \sum _{j=2}^{p}{\phi _ j \hat{y}_ t^ j + u_ t} \]](images/etsug_autoreg0708.png)

where  is the predicted value from the linear model and p is the power of

is the predicted value from the linear model and p is the power of  in the unrestricted model equation starting from 2. The number of higher-ordered terms to be chosen depends on the discretion

of the analyst. The RESET option produces test results for

in the unrestricted model equation starting from 2. The number of higher-ordered terms to be chosen depends on the discretion

of the analyst. The RESET option produces test results for  2, 3, and 4.

2, 3, and 4.

The reset test is an F statistic for testing  , for all

, for all  , against

, against  for at least one

for at least one  in the unrestricted model and is computed as follows:

in the unrestricted model and is computed as follows:

![\[ F_{(p-1,n-k-p+1)}= \frac{(SSE_{R}-SSE_{U})/(p-1)}{SSE_ U/(n-k-p+1)} \]](images/etsug_autoreg0714.png)

where  is the sum of squared errors due to the restricted model,

is the sum of squared errors due to the restricted model,  is the sum of squared errors due to the unrestricted model, n is the total number of observations, and k is the number of parameters in the original linear model.

is the sum of squared errors due to the unrestricted model, n is the total number of observations, and k is the number of parameters in the original linear model.

Ramsey’s test can be viewed as a linearity test that checks whether any nonlinear transformation of the specified independent variables has been omitted, but it need not help in identifying a new relevant variable other than those already specified in the current model.

Testing for Nonlinear Dependence: Heteroscedasticity Tests

Portmanteau Q Test

For nonlinear time series models, the portmanteau test statistic based on squared residuals is used to test for independence of the series (McLeod and Li 1983):

![\[ Q(q) = N(N+2)\sum _{i=1}^{q}{\frac{r(i; \hat{{\nu }}^{2}_{t})}{(N-i)}} \]](images/etsug_autoreg0717.png)

where

![\[ r(i; \hat{{\nu }}^{2}_{t}) = \frac{\sum _{t=i+1}^{N}{( \hat{{\nu }}^{2}_{t}-\hat{{\sigma }}^{2}) ( \hat{{\nu }}^{2}_{t-i}-\hat{{\sigma }}^{2})}}{\sum _{t=1}^{N}{( \hat{{\nu }}^{2}_{t}- \hat{{\sigma }}^{2})^{2}}} \]](images/etsug_autoreg0718.png)

![\[ \hat{{\sigma }}^{2} = \frac{1}{N} \sum _{t=1}^{N}{\hat{{\nu }}^{2}_{t}} \]](images/etsug_autoreg0719.png)

This Q statistic is used to test the nonlinear effects (for example, GARCH effects) present in the residuals. The GARCH process can be considered as an ARMA

process can be considered as an ARMA process. See the section Predicting the Conditional Variance. Therefore, the Q statistic calculated from the squared residuals can be used to identify the order of the GARCH process.

process. See the section Predicting the Conditional Variance. Therefore, the Q statistic calculated from the squared residuals can be used to identify the order of the GARCH process.

Engle’s Lagrange Multiplier Test for ARCH Disturbances

Engle (1982) proposed a Lagrange multiplier test for ARCH disturbances. The test statistic is asymptotically equivalent to the test used by Breusch and Pagan (1979). Engle’s Lagrange multiplier test for the qth order ARCH process is written

![\[ LM(q) = \frac{N\mb{W} '\mb{Z} (\mb{Z} '\mb{Z} )^{-1}\mb{Z} '\mb{W} }{\mb{W} '\mb{W} } \]](images/etsug_autoreg0721.png)

where

![\[ \mb{W} = \left( \frac{\hat{{\nu }}^{2}_{1}}{\hat{{\sigma }}^{2}}-1, {\ldots }, \frac{\hat{{\nu }}^{2}_{N}}{\hat{{\sigma }}^{2}}-1\right)’ \]](images/etsug_autoreg0722.png)

and

![\[ \mb{Z} = \begin{bmatrix} 1 & \hat{{\nu }}_{0}^{2} & {\cdots } & \hat{{\nu }}_{-q+1}^{2} \\ {\vdots } & {\vdots } & {\vdots } & {\vdots } \\ {\vdots } & {\vdots } & {\vdots } & {\vdots } \\ 1 & \hat{{\nu }}_{N-1}^{2} & {\cdots } & \hat{{\nu }}_{N-q}^{2} \end{bmatrix} \]](images/etsug_autoreg0723.png)

The presample values (  ,

, ,

,  ) have been set to 0. Note that the LM

) have been set to 0. Note that the LM tests might have different finite-sample properties depending on the presample values, though they are asymptotically equivalent

regardless of the presample values.

tests might have different finite-sample properties depending on the presample values, though they are asymptotically equivalent

regardless of the presample values.

Lee and King’s Test for ARCH Disturbances

Engle’s Lagrange multiplier test for ARCH disturbances is a two-sided test; that is, it ignores the inequality constraints

for the coefficients in ARCH models. Lee and King (1993) propose a one-sided test and prove that the test is locally most mean powerful. Let  , denote the residuals to be tested. Lee and King’s test checks

, denote the residuals to be tested. Lee and King’s test checks

![\[ H_0: \alpha _ i=0, i=1,...,q \]](images/etsug_autoreg0727.png)

![\[ H_1: \alpha _ i>0, i=1,...,q \]](images/etsug_autoreg0728.png)

where  are in the following ARCH(q) model:

are in the following ARCH(q) model:

![\[ \varepsilon _ t=\sqrt {h_ t}e_ t, e_ t~ iid(0,1) \]](images/etsug_autoreg0730.png)

![\[ h_ t=\alpha _0+\sum _{i=1}^{q}{\alpha _ i \varepsilon _{t-i}^2} \]](images/etsug_autoreg0731.png)

The statistic is written as

![\[ S=\frac{\sum _{t=q+1}^{T}{(\frac{\varepsilon _ t^2}{h_0}-1)\sum _{i=1}^{q}{\varepsilon _{t-i}^2}}}{\left[2\sum _{t=q+1}^{T}{(\sum _{i=1}^{q}{\varepsilon _{t-i}^2})^2}-\frac{2(\sum _{t=q+1}^{T} {\sum _{i=1}^{q}{\varepsilon _{t-i}^2}})^2}{T-q}\right]^{1/2}} \]](images/etsug_autoreg0732.png)

Wong and Li’s Test for ARCH Disturbances

Wong and Li (1995) propose a rank portmanteau statistic to minimize the effect of the existence of outliers in the test for ARCH disturbances.

They first rank the squared residuals; that is,  . Then they calculate the rank portmanteau statistic

. Then they calculate the rank portmanteau statistic

![\[ Q_ R=\sum _{i=1}^{q}{\frac{(r_ i-\mu _ i)^2}{\sigma _ i^2}} \]](images/etsug_autoreg0734.png)

where  ,

,  , and

, and  are defined as follows:

are defined as follows:

![\[ r_ i=\frac{\sum _{t=i+1}^{T}{(R_{t}-(T+1)/2)(R_{t-i}-(T+1)/2)}}{T(T^2-1)/12} \]](images/etsug_autoreg0738.png)

![\[ \mu _ i=-\frac{T-i}{T(T-1)} \]](images/etsug_autoreg0739.png)

![\[ \sigma _ i^2=\frac{5T^4-(5i+9)T^3+9(i-2)T^2+2i(5i+8)T+16i^2}{5(T-1)^2T^2(T+1)} \]](images/etsug_autoreg0740.png)

The Q, Engle’s LM, Lee and King’s, and Wong and Li’s statistics are computed from the OLS residuals, or residuals if the NLAG=

option is specified, assuming that disturbances are white noise. The Q, Engle’s LM, and Wong and Li’s statistics have an approximate

distribution under the white-noise null hypothesis, while the Lee and King’s statistic has a standard normal distribution

under the white-noise null hypothesis.

distribution under the white-noise null hypothesis, while the Lee and King’s statistic has a standard normal distribution

under the white-noise null hypothesis.

Testing for Structural Change

Chow Test

Consider the linear regression model

![\[ \mb{y} = \mb{X}{\beta } + \mb{u} \]](images/etsug_autoreg0742.png)

where the parameter vector  contains k elements.

contains k elements.

Split the observations for this model into two subsets at the break point specified by the CHOW= option, so that

Now consider the two linear regressions for the two subsets of the data modeled separately,

![\[ \mb{y} _{1} = \mb{X}_{1}{\beta }_{1} + \mb{u} _{1} \]](images/etsug_autoreg0744.png)

![\[ \mb{y} _{2} = \mb{X}_{2}{\beta }_{2} + \mb{u} _{2} \]](images/etsug_autoreg0745.png)

where the number of observations from the first set is  and the number of observations from the second set is

and the number of observations from the second set is  .

.

The Chow test statistic is used to test the null hypothesis  conditional on the same error variance

conditional on the same error variance  . The Chow test is computed using three sums of square errors:

. The Chow test is computed using three sums of square errors:

![\[ \mr{F}_\mi {chow} = \frac{({\mb{\hat{u}} ’}\mb{\hat{u}} - {\mb{\hat{u}}’}_{1}\mb{\hat{u}} _{1} - {\mb{\hat{u}}’}_{2}\mb{\hat{u}} _{2}) / {k}}{( {\mb{\hat{u}}’}_{1} \mb{\hat{u}} _{1} + {\mb{\hat{u}}’}_{2} \mb{\hat{u}} _{2}) / (n_{1}+n_{2}-2k)} \]](images/etsug_autoreg0750.png)

where  is the regression residual vector from the full set model,

is the regression residual vector from the full set model,  is the regression residual vector from the first set model, and

is the regression residual vector from the first set model, and  is the regression residual vector from the second set model. Under the null hypothesis, the Chow test statistic has an F distribution with

is the regression residual vector from the second set model. Under the null hypothesis, the Chow test statistic has an F distribution with  and

and  degrees of freedom, where

degrees of freedom, where  is the number of elements in

is the number of elements in  .

.

Chow (1960) suggested another test statistic that tests the hypothesis that the mean of prediction errors is 0. The predictive Chow

test can also be used when  .

.

The PCHOW= option computes the predictive Chow test statistic

![\[ \mr{F}_\mi {pchow} = \frac{({\mb{\hat{u}} ’}\mb{\hat{u}} - {\mb{\hat{u}}’}_{1}\mb{\hat{u}} _{1}) / {n_2}}{ {\mb{\hat{u}}’}_{1} \mb{\hat{u}} _{1} / (n_{1}-k)} \]](images/etsug_autoreg0756.png)

The predictive Chow test has an F distribution with  and

and  degrees of freedom.

degrees of freedom.

Bai and Perron’s Multiple Structural Change Tests

Bai and Perron (1998) propose several kinds of multiple structural change tests: (1) the test of no break versus a fixed number of breaks ( test), (2) the equal and unequal weighted versions of double maximum tests of no break versus an unknown number of breaks

given some upper bound (

test), (2) the equal and unequal weighted versions of double maximum tests of no break versus an unknown number of breaks

given some upper bound ( test and

test and  test), and (3) the test of l versus

test), and (3) the test of l versus  breaks (

breaks ( test). Bai and Perron (2003a, 2003b, 2006) also show how to implement these tests, the commonly used critical values, and the simulation analysis on these tests.

test). Bai and Perron (2003a, 2003b, 2006) also show how to implement these tests, the commonly used critical values, and the simulation analysis on these tests.

Consider the following partial structural change model with m breaks ( regimes):

regimes):

![\[ y_ t = x_ t’\beta + z_ t’\delta _ j+u_ t,\quad \quad t=T_{j-1}+1,...,T_ j,j=1,...,m \]](images/etsug_autoreg0760.png)

Here,  is the dependent variable observed at time t,

is the dependent variable observed at time t,  is a vector of covariates with coefficients

is a vector of covariates with coefficients  unchanged over time, and

unchanged over time, and  is a vector of covariates with coefficients

is a vector of covariates with coefficients  at regime j,

at regime j,  . If

. If  (that is, there are no x regressors), the regression model becomes the pure structural change model. The indices

(that is, there are no x regressors), the regression model becomes the pure structural change model. The indices  (that is, the break dates or break points) are unknown, and the convenient notation

(that is, the break dates or break points) are unknown, and the convenient notation  and

and  applies. For any given m-partition

applies. For any given m-partition  , the associated least squares estimates of

, the associated least squares estimates of  and

and  are obtained by minimizing the sum of squared residuals (SSR),

are obtained by minimizing the sum of squared residuals (SSR),

![\[ S_ T(T_1,...,T_ m) = \sum _{i=1}^{m+1}{\sum _{t=T_{i-1}+1}^{T_ i}{(y_ t - x_ t’\beta - z_ t’\delta _ i)^2 }} \]](images/etsug_autoreg0769.png)

Let  denote the minimized SSR for a given

denote the minimized SSR for a given  . The estimated break dates

. The estimated break dates  are such that

are such that

![\[ (\hat{T}_1,...,\hat{T}_ m) = \arg \min _{T_1,...,T_ m}{\hat{S}_ T(T_1,...,T_ m)} \]](images/etsug_autoreg0772.png)

where the minimization is taken over all partitions  such that

such that  . Bai and Perron (2003a) propose an efficient algorithm, based on the principle of dynamic programming, to estimate the preceding model.

. Bai and Perron (2003a) propose an efficient algorithm, based on the principle of dynamic programming, to estimate the preceding model.

In the case that the data are nontrending, as stated in Bai and Perron (1998), the limiting distribution of the break dates is as follows:

![\[ \frac{(\Delta _ i'Q_ i\Delta _ i)^2}{(\Delta _ i'\Omega _ i\Delta _ i)}(\hat{T}_ i-T_ i^0)\Rightarrow \arg \max _ s{V^{(i)}(s)},\quad \quad i=1,...,m \]](images/etsug_autoreg0774.png)

where

![\[ V^{(i)}(s)= \left\{ \begin{array}{ l l } W_1^{(i)}(-s)-|s|/2 & \text {if}\ s \leq 0 \\ \sqrt {\eta _ i}(\phi _{i,2}/\phi _{i,1})W_2^{(i)}(s)-\eta _ i|s|/2 & \text {if}\ s > 0 \end{array} \right. \]](images/etsug_autoreg0775.png)

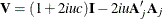

and

Also,  and

and  are independent standard Weiner processes that are defined on

are independent standard Weiner processes that are defined on  , starting at the origin when

, starting at the origin when  ; these processes are also independent across i. The cumulative distribution function of

; these processes are also independent across i. The cumulative distribution function of  is shown in Bai (1997). Hence, with the estimates of

is shown in Bai (1997). Hence, with the estimates of  ,

,  , and

, and  , the relevant critical values for confidence interval of break dates

, the relevant critical values for confidence interval of break dates  can be calculated. The estimate of

can be calculated. The estimate of  is

is  . The estimate of

. The estimate of  is either

is either

![\[ \hat{Q}_ i = (\Delta \hat{T}_ i)^{-1} \sum _{t=\hat{T}_{i-1}^0+1}^{\hat{T}_ i^0}{z_ tz_ t’} \]](images/etsug_autoreg0785.png)

if the regressors are assumed to have heterogeneous distributions across regimes (that is, the HQ option is specified), or

![\[ \hat{Q}_ i = \hat{Q} = (T)^{-1} \sum _{t=1}^{T}{z_ tz_ t’} \]](images/etsug_autoreg0786.png)

if the regressors are assumed to have identical distributions across regimes (that is, the HQ option is not specified). The

estimate of  can also be constructed with data over regime i only or the whole sample, depending on whether the vectors

can also be constructed with data over regime i only or the whole sample, depending on whether the vectors  are heterogeneously distributed across regimes (that is, the HO option is specified). If the HAC option is specified,

are heterogeneously distributed across regimes (that is, the HO option is specified). If the HAC option is specified,  is estimated through the heteroscedasticity- and autocorrelation-consistent (HAC) covariance matrix estimator applied to

vectors

is estimated through the heteroscedasticity- and autocorrelation-consistent (HAC) covariance matrix estimator applied to

vectors  .

.

The  test of no structural break

test of no structural break  versus the alternative hypothesis that there are a fixed number,

versus the alternative hypothesis that there are a fixed number,  , of breaks is defined as follows:

, of breaks is defined as follows:

![\[ supF(k) = \frac{1}{T}\left( \frac{T-(k+1)q-p}{kq} \right) (R\hat{\theta })’ (R\hat{V}(\hat{\theta })R’)^{-1} (R\hat{\theta }) \]](images/etsug_autoreg0791.png)

where

![\[ R_{(kq)\times (p+(k+1)q)} = \left( \begin{array}{ ccccccc } 0_{q \times p} & I_ q & -I_ q & 0 & 0 & \cdots & 0 \\ 0_{q \times p} & 0 & I_ q & -I_ q & 0 & \cdots & 0 \\ \vdots & \cdots & \ddots & \ddots & \ddots & \ddots & \cdots \\ 0_{q \times p} & 0 & \cdots & \cdots & 0 & I_ q & -I_ q \end{array} \right) \]](images/etsug_autoreg0792.png)

and  is the

is the  identity matrix;

identity matrix;  is the coefficient vector

is the coefficient vector  , which together with the break dates

, which together with the break dates  minimizes the global sum of squared residuals; and

minimizes the global sum of squared residuals; and  is an estimate of the variance-covariance matrix of

is an estimate of the variance-covariance matrix of  , which could be estimated by using the HAC estimator or another way, depending on how the HAC, HR, and HE options are specified.

The output

, which could be estimated by using the HAC estimator or another way, depending on how the HAC, HR, and HE options are specified.