The VARMAX Procedure

- Overview

-

Getting Started

-

Syntax

-

Details

Missing ValuesVARMAX ModelDynamic Simultaneous Equations ModelingImpulse Response FunctionForecastingTentative Order SelectionVAR and VARX ModelingBayesian VAR and VARX ModelingVARMA and VARMAX ModelingModel Diagnostic ChecksCointegrationVector Error Correction ModelingI(2) ModelMultivariate GARCH ModelingOutput Data SetsOUT= Data SetOUTEST= Data SetOUTHT= Data SetOUTSTAT= Data SetPrinted OutputODS Table NamesODS GraphicsComputational Issues

Missing ValuesVARMAX ModelDynamic Simultaneous Equations ModelingImpulse Response FunctionForecastingTentative Order SelectionVAR and VARX ModelingBayesian VAR and VARX ModelingVARMA and VARMAX ModelingModel Diagnostic ChecksCointegrationVector Error Correction ModelingI(2) ModelMultivariate GARCH ModelingOutput Data SetsOUT= Data SetOUTEST= Data SetOUTHT= Data SetOUTSTAT= Data SetPrinted OutputODS Table NamesODS GraphicsComputational Issues -

Examples

- References

The optimal (minimum MSE) ![]() -step-ahead forecast of

-step-ahead forecast of ![]() is

is

with ![]() and

and ![]() for

for ![]() . For the forecasts

. For the forecasts ![]() , see the section State-Space Representation.

, see the section State-Space Representation.

Under the stationarity assumption, the optimal (minimum MSE) ![]() -step-ahead forecast of

-step-ahead forecast of ![]() has an infinite moving-average form,

has an infinite moving-average form, ![]() . The prediction error of the optimal

. The prediction error of the optimal ![]() -step-ahead forecast is

-step-ahead forecast is ![]() , with zero mean and covariance matrix,

, with zero mean and covariance matrix,

where ![]() with a lower triangular matrix

with a lower triangular matrix ![]() such that

such that ![]() . Under the assumption of normality of the

. Under the assumption of normality of the ![]() , the

, the ![]() -step-ahead prediction error

-step-ahead prediction error ![]() is also normally distributed as multivariate

is also normally distributed as multivariate ![]() . Hence, it follows that the diagonal elements

. Hence, it follows that the diagonal elements ![]() of

of ![]() can be used, together with the point forecasts

can be used, together with the point forecasts ![]() , to construct

, to construct ![]() -step-ahead prediction intervals of the future values of the component series,

-step-ahead prediction intervals of the future values of the component series, ![]() .

.

The following statements use the COVPE option to compute the covariance matrices of the prediction errors for a VAR(1) model. The parts of the VARMAX procedure output are shown in Figure 35.36 and Figure 35.37.

proc varmax data=simul1;

model y1 y2 / p=1 noint lagmax=5

printform=both

print=(decompose(5) impulse=(all) covpe(5));

run;

Figure 35.36 is the output in a matrix format associated with the COVPE option for the prediction error covariance matrices.

Figure 35.36: Covariances of Prediction Errors (COVPE Option)

| Prediction Error Covariances | |||

|---|---|---|---|

| Lead | Variable | y1 | y2 |

| 1 | y1 | 1.28875 | 0.39751 |

| y2 | 0.39751 | 1.41839 | |

| 2 | y1 | 2.92119 | 1.00189 |

| y2 | 1.00189 | 2.18051 | |

| 3 | y1 | 4.59984 | 1.98771 |

| y2 | 1.98771 | 3.03498 | |

| 4 | y1 | 5.91299 | 3.04856 |

| y2 | 3.04856 | 4.07738 | |

| 5 | y1 | 6.69463 | 3.85346 |

| y2 | 3.85346 | 5.07010 | |

Figure 35.37 is the output in a univariate format associated with the COVPE option for the prediction error covariances. This printing format more easily explains the prediction error covariances of each variable.

Figure 35.37: Covariances of Prediction Errors

| Prediction Error Covariances by Variable | |||

|---|---|---|---|

| Variable | Lead | y1 | y2 |

| y1 | 1 | 1.28875 | 0.39751 |

| 2 | 2.92119 | 1.00189 | |

| 3 | 4.59984 | 1.98771 | |

| 4 | 5.91299 | 3.04856 | |

| 5 | 6.69463 | 3.85346 | |

| y2 | 1 | 0.39751 | 1.41839 |

| 2 | 1.00189 | 2.18051 | |

| 3 | 1.98771 | 3.03498 | |

| 4 | 3.04856 | 4.07738 | |

| 5 | 3.85346 | 5.07010 | |

Exogenous variables can be both stochastic and nonstochastic (deterministic) variables. Considering the forecasts in the

VARMAX(![]() ,

,![]() ,

,![]() ) model, there are two cases.

) model, there are two cases.

When exogenous (independent) variables are stochastic (future values not specified):

As defined in the section State-Space Representation, ![]() has the representation

has the representation

and hence

Therefore, the covariance matrix of the ![]() -step-ahead prediction error is given as

-step-ahead prediction error is given as

where ![]() is the covariance of the white noise series

is the covariance of the white noise series ![]() , and

, and ![]() is the white noise series for the VARMA(

is the white noise series for the VARMA(![]() ,

,![]() ) model of exogenous (independent) variables, which is assumed not to be correlated with

) model of exogenous (independent) variables, which is assumed not to be correlated with ![]() or its lags.

or its lags.

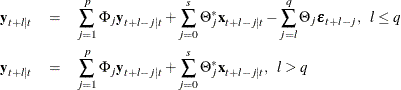

When future exogenous (independent) variables are specified:

The optimal forecast ![]() of

of ![]() conditioned on the past information and also on known future values

conditioned on the past information and also on known future values ![]() can be represented as

can be represented as

and the forecast error is

Thus, the covariance matrix of the ![]() -step-ahead prediction error is given as

-step-ahead prediction error is given as

In the relation ![]() , the diagonal elements can be interpreted as providing a decomposition of the

, the diagonal elements can be interpreted as providing a decomposition of the ![]() -step-ahead prediction error covariance

-step-ahead prediction error covariance ![]() for each component series

for each component series ![]() into contributions from the components of the standardized innovations

into contributions from the components of the standardized innovations ![]() .

.

If you denote the (![]() )th element of

)th element of ![]() by

by ![]() , the MSE of

, the MSE of ![]() is

is

Note that ![]() is interpreted as the contribution of innovations in variable

is interpreted as the contribution of innovations in variable ![]() to the prediction error covariance of the

to the prediction error covariance of the ![]() -step-ahead forecast of variable

-step-ahead forecast of variable ![]() .

.

The proportion, ![]() , of the

, of the ![]() -step-ahead forecast error covariance of variable

-step-ahead forecast error covariance of variable ![]() accounting for the innovations in variable

accounting for the innovations in variable ![]() is

is

The following statements use the DECOMPOSE option to compute the decomposition of prediction error covariances and their proportions for a VAR(1) model:

proc varmax data=simul1;

model y1 y2 / p=1 noint print=(decompose(15))

printform=univariate;

run;

The proportions of decomposition of prediction error covariances of two variables are given in Figure 35.38. The output explains that about 91.356% of the one-step-ahead prediction error covariances of the variable ![]() is accounted for by its own innovations and about 8.644% is accounted for by

is accounted for by its own innovations and about 8.644% is accounted for by ![]() innovations.

innovations.

Figure 35.38: Decomposition of Prediction Error Covariances (DECOMPOSE Option)

| Proportions of Prediction Error Covariances by Variable |

|||

|---|---|---|---|

| Variable | Lead | y1 | y2 |

| y1 | 1 | 1.00000 | 0.00000 |

| 2 | 0.88436 | 0.11564 | |

| 3 | 0.75132 | 0.24868 | |

| 4 | 0.64897 | 0.35103 | |

| 5 | 0.58460 | 0.41540 | |

| y2 | 1 | 0.08644 | 0.91356 |

| 2 | 0.31767 | 0.68233 | |

| 3 | 0.50247 | 0.49753 | |

| 4 | 0.55607 | 0.44393 | |

| 5 | 0.53549 | 0.46451 | |

If the CENTER option is specified, the sample mean vector is added to the forecast.

If dependent (endogenous) variables are differenced, the final forecasts and their prediction error covariances are produced by integrating those of the differenced series. However, if the PRIOR option is specified, the forecasts and their prediction error variances of the differenced series are produced.

Let ![]() be the original series with some appended zero values that correspond to the unobserved past observations. Let

be the original series with some appended zero values that correspond to the unobserved past observations. Let ![]() be the

be the ![]() matrix polynomial in the backshift operator that corresponds to the differencing specified by the MODEL statement. The off-diagonal

elements of

matrix polynomial in the backshift operator that corresponds to the differencing specified by the MODEL statement. The off-diagonal

elements of ![]() are zero, and the diagonal elements can be different. Then

are zero, and the diagonal elements can be different. Then ![]() .

.

This gives the relationship

where ![]() and

and ![]() .

.

The ![]() -step-ahead prediction of

-step-ahead prediction of ![]() is

is

The ![]() -step-ahead prediction error of

-step-ahead prediction error of ![]() is

is

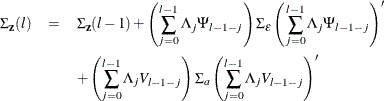

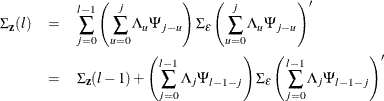

Letting ![]() , the covariance matrix of the l-step-ahead prediction error of

, the covariance matrix of the l-step-ahead prediction error of ![]() ,

, ![]() , is

, is

If there are stochastic exogenous (independent) variables, the covariance matrix of the l-step-ahead prediction error of ![]() ,

, ![]() , is

, is