The HPSEVERITY Procedure


The HPSEVERITY procedure enables you to estimate the influence of regression (exogenous) effects while fitting a distribution if the distribution has a scale parameter or a log-transformed scale parameter.

Let $x_j$, $j = 1, \dots, k$, denote the k regression effects. Let $\beta_j$ denote the regression parameter that corresponds to the effect $x_j$. If you do not specify regression effects, then the model for the response variable Y is of the form

\[ Y \sim \mathcal{F}(\Theta) \]

where $\mathcal{F}$ is the distribution of Y with parameters $\Theta$. This model is usually referred to as the error model. The regression effects are modeled by extending the error model to the following form:

\[ Y \sim \exp \left(\sum _{j=1}^{k} \beta _ j x_ j\right) \cdot \mathcal{F}(\Theta) \]

Under this model, the distribution of Y is valid and belongs to the same parametric family as $\mathcal{F}$ if and only if $\mathcal{F}$ has a scale parameter. Let $\theta$ denote the scale parameter and $\Omega$ denote the set of nonscale distribution parameters of $\mathcal{F}$. Then the model can be rewritten as

\[ Y \sim \mathcal{F}(\theta , \Omega ) \]

such that $\theta$ is modeled by the regression effects as

\[ \theta = \theta _0 \cdot \exp \left(\sum _{j=1}^{k} \beta _ j x_ j\right) \]

where $\theta_0$ is the base value of the scale parameter. Thus, the scale regression model consists of the following parameters: $\theta_0$, $\Omega$, and $\beta_j$ ($j = 1, \dots, k$).
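
The "if" direction of the preceding statement follows directly from the standard definition of a scale parameter: $Y \sim \mathcal{F}(\theta, \Omega)$ means $Y = \theta W$ with $W \sim \mathcal{F}(1, \Omega)$. Multiplying Y by the positive constant $\exp(\sum_{j=1}^{k} \beta_j x_j)$ therefore only rescales the scale parameter, and the result stays in the same family:

```latex
Y = \theta_0 W, \quad W \sim \mathcal{F}(1, \Omega)
\;\Longrightarrow\;
\exp\Big(\sum_{j=1}^{k} \beta_j x_j\Big) \cdot Y
  = \underbrace{\theta_0 \exp\Big(\sum_{j=1}^{k} \beta_j x_j\Big)}_{\theta} \cdot W
  \sim \mathcal{F}(\theta, \Omega)
```

The underbraced factor is the scale regression model for $\theta$ with base value $\theta_0$.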

Given this form of the model, distributions without a scale parameter cannot be considered when regression effects are to be modeled. If a distribution does not have a direct scale parameter, then PROC HPSEVERITY accepts it only if it has a log-transformed scale parameter, that is, a parameter $p = \log(\theta)$.

You can specify that an offset variable be included in the scale regression model by specifying it in the OFFSET= option of the SCALEMODEL statement. The offset variable is a regressor whose regression coefficient is known to be 1. If $x_o$ denotes the offset variable, then the scale regression model becomes

\[ \theta = \theta _0 \cdot \exp \left(x_ o + \sum _{j=1}^{k} \beta _ j x_ j\right) \]

The regression coefficient of the offset variable is fixed at 1 and not estimated, so it is not reported in the ParameterEstimates ODS table. However, if you specify the OUTEST= data set, then the regression coefficient is added as a variable to that data set. The value of the offset variable in the OUTEST= data set is equal to 1 for the estimates row (_TYPE_='EST') and is equal to a special missing value (.F) for the standard error (_TYPE_='STDERR') and covariance (_TYPE_='COV') rows.

An offset variable is useful for modeling the scale parameter per unit of some measure of exposure. For example, in the automobile insurance context, the measure of exposure can be the number of car-years insured or the total number of miles driven by a fleet of cars at a rental car company. For worker’s compensation insurance, if you want to model the expected loss per enterprise, then you can use the number of employees or the total employee salary as the measure of exposure. For epidemiological data, the measure of exposure can be the number of people who are exposed to a certain pathogen when you are modeling the loss associated with an epidemic. In general, if e denotes the value of the exposure measure and you specify $\log(e)$ as the offset variable, then $\theta = e \cdot \theta_0 \exp(\sum_{j=1}^{k} \beta_j x_j)$. You are therefore modeling the influence of the other regression effects ($x_j$) on the size of the scale of the distribution per unit of exposure.
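
The following Python sketch evaluates the scale regression model for a single observation, with and without an offset. It illustrates the formulas only and is not PROC HPSEVERITY code. The function `regression_scale` and the numeric values are hypothetical. The sketch also checks the exposure argument: when $\log(e)$ is the offset, the scale is proportional to e.

```python
import math
from typing import Sequence


def regression_scale(theta0: float, betas: Sequence[float],
                     x: Sequence[float], offset: float = 0.0) -> float:
    """Scale of one observation: theta0 * exp(offset + sum_j beta_j * x_j).

    Hypothetical helper that only illustrates the formula; the offset enters
    with a coefficient fixed at 1 and is not estimated.
    """
    if theta0 <= 0:
        raise ValueError("theta0 must be positive")
    if len(betas) != len(x):
        raise ValueError("betas and x must have the same length")
    linear_part = sum(b * xj for b, xj in zip(betas, x))
    return theta0 * math.exp(offset + linear_part)


# Illustrative (made-up) values: base scale, two coefficients, one observation.
theta0, betas, x = 500.0, [0.3, -0.1], [1.0, 2.0]

no_offset = regression_scale(theta0, betas, x)
# Exposure e enters as offset = log(e), so the scale is proportional to e.
one_unit = regression_scale(theta0, betas, x, offset=math.log(1.0))
four_units = regression_scale(theta0, betas, x, offset=math.log(4.0))

print(no_offset)
print(four_units / one_unit)  # ratio of scales equals the ratio of exposures
```

Because the offset's coefficient is fixed at 1, `offset` is passed as data rather than being part of `betas`.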

Another use for an offset variable is when you have a priori knowledge of the influence of some exogenous variables that cannot be included in the SCALEMODEL statement. You can model the combined influence of such variables as an offset variable in order to correct for the omitted variable bias.

The regression parameters are initialized either by using the values that you specify or by the default method.

- If you provide initial values for the regression parameters, then you must provide valid, nonmissing initial values for the $\theta_0$ and $\beta_j$ parameters for all j.

  You can specify the initial value for $\theta_0$ by using the INEST= data set, the INSTORE= item store, or the INIT= option in the DIST statement. If the distribution has a direct scale parameter (no transformation), then the initial value for the first parameter of the distribution is used as an initial value for $\theta_0$. If the distribution has a log-transformed scale parameter, then the initial value for the first parameter of the distribution is used as an initial value for $\log(\theta_0)$.

  You can use only the INEST= data set or the INSTORE= item store, but not both, to specify the initial values for $\beta_j$. The requirements for each option are as follows:

  - If you use the INEST= data set, then it must contain nonmissing initial values for all the regressors that you specify in the SCALEMODEL statement. The only missing value that is allowed is the special missing value .R, which indicates that the regressor is linearly dependent on other regressors. If you specify .R for a regressor for one distribution in a BY group, you must specify it the same way for all the distributions in that BY group.

    Note that you cannot specify the INEST= data set if the regression model contains effects that have CLASS variables or interaction effects.

  - The parameter estimates in the INSTORE= item store are used to initialize the parameters of a model if the item store contains a model specification that matches the model specification in the current PROC HPSEVERITY step according to the following rules:

    - The distribution name and the number and names of the distribution parameters must match.

    - The model in the item store must include a scale regression model whose regression parameters match as follows:

      - If the regression model in the item store does not contain any redundant parameters, then at least one regression parameter must match. Initial values of the parameters that match are set equal to the estimates that are read from the item store, and initial values of the other regression parameters are set equal to the default value of 0.001.

      - If the regression model in the item store contains any redundant parameters, then all the regression parameters must match, and the initial values of all parameters are set equal to the estimates that are read from the item store.

      Note that a regression parameter is defined by the variables that form the underlying regression effect and by the levels of the CLASS variables if the effect contains any CLASS variables.

- If you do not specify valid initial values for the $\theta_0$ or $\beta_j$ parameters for all j, then PROC HPSEVERITY initializes those parameters by using the following method:

  Let a random variable Y be distributed as $\mathcal{F}(\theta, \Omega)$, where $\theta$ is the scale parameter. By the definition of the scale parameter, a random variable $W = Y/\theta$ is distributed as $\mathcal{G}(\Omega)$ such that $\mathcal{G}(\Omega) = \mathcal{F}(1, \Omega)$. Given a random error term e that is generated from a distribution $\mathcal{G}(\Omega)$, a value y from the distribution of Y can be generated as

  \[ y = \theta \cdot e \]

  Taking the logarithm of both sides and using the relationship of $\theta$ with the regression effects yields:

  \[ \log (y) = \log (\theta _0) + \sum _{j=1}^{k} \beta _ j x_ j + \log (e) \]

  PROC HPSEVERITY makes use of the preceding relationship to initialize parameters of a regression model with distribution dist as follows:

  - The following linear regression problem is solved to obtain initial estimates of $\beta_0$ and $\beta_j$:

    \[ \log (y) = \beta _0 + \sum _{j=1}^{k} \beta _ j x_ j \]

    The estimates of $\beta_j$ ($j = 1, \dots, k$) in the solution of this regression problem are used to initialize the respective regression parameters of the model. The estimate of $\beta_0$ is later used to initialize the value of $\theta_0$.

    The results of this regression are also used to detect whether any regression parameters are linearly dependent on the other regression parameters. If any such parameters are found, then a warning is written to the SAS log and the corresponding parameter is eliminated from further analysis. The estimates for linearly dependent regression parameters are denoted by a special missing value of .R in the OUTEST= data set and in any displayed output.

  - Let $s_0$ denote the initial value of the scale parameter.

    If the distribution model of dist does not contain the dist_PARMINIT subroutine, then $s_0$ and all the nonscale distribution parameters are initialized to the default value of 0.001.

    However, it is strongly recommended that each distribution’s model contain the dist_PARMINIT subroutine. For more information, see the section Defining a Severity Distribution Model with the FCMP Procedure. If that subroutine is defined, then $s_0$ is initialized as follows:

    Each input value $y_i$ of the response variable is transformed to its scale-normalized version $w_i$ as

    \[ w_ i = \frac{y_ i}{\exp (\beta _0 + \sum _{j=1}^{k} \beta _ j x_{ij})} \]

    where $x_{ij}$ denotes the value of the jth regression effect in the ith input observation. These $w_i$ values are used to compute the input arguments for the dist_PARMINIT subroutine. The values that are computed by the subroutine for nonscale parameters are used as their respective initial values. If the distribution has an untransformed scale parameter, then $s_0$ is set to the value of the scale parameter that is computed by the subroutine. If the distribution has a log-transformed scale parameter P, then $s_0$ is computed as $s_0 = \exp(l_0)$, where $l_0$ is the value of P computed by the subroutine.

  - The value of $\theta_0$ is initialized as

    \[ \theta _0 = s_0 \cdot \exp (\beta _0) \]
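
The three default initialization steps can be sketched in Python for a distribution that has an untransformed scale parameter. This is an illustration under stated assumptions, not the procedure's implementation. It solves the least-squares problem for $\log(y)$ through the normal equations with plain Gaussian elimination. It has no rank handling, so it does not reproduce the .R treatment of linearly dependent regressors. The function `exponential_parminit` is a hypothetical stand-in for dist_PARMINIT: it initializes an exponential scale as the mean of the $w_i$ values.

```python
import math
from typing import List, Sequence, Tuple


def ols(X: Sequence[Sequence[float]], z: Sequence[float]) -> List[float]:
    """Least-squares fit of z on [1, x_1, ..., x_k] via the normal equations.

    Returns [beta_0, beta_1, ..., beta_k]. Assumes full column rank; unlike
    PROC HPSEVERITY, it does not flag linearly dependent regressors with .R.
    """
    rows = [[1.0, *xi] for xi in X]
    p = len(rows[0])
    # Augmented normal-equations matrix [A^T A | A^T z].
    M = [[sum(r[a] * r[b] for r in rows) for b in range(p)]
         + [sum(r[a] * zi for r, zi in zip(rows, z))]
         for a in range(p)]
    # Gaussian elimination with partial pivoting.
    for col in range(p):
        piv = max(range(col, p), key=lambda i: abs(M[i][col]))
        if abs(M[piv][col]) < 1e-12:
            raise ValueError("regressors are linearly dependent")
        M[col], M[piv] = M[piv], M[col]
        for i in range(col + 1, p):
            f = M[i][col] / M[col][col]
            for c in range(col, p + 1):
                M[i][c] -= f * M[col][c]
    # Back substitution.
    beta = [0.0] * p
    for i in range(p - 1, -1, -1):
        s = M[i][p] - sum(M[i][c] * beta[c] for c in range(i + 1, p))
        beta[i] = s / M[i][i]
    return beta


def exponential_parminit(w: Sequence[float]) -> float:
    """Hypothetical stand-in for dist_PARMINIT: exponential scale = mean of w."""
    return sum(w) / len(w)


def init_scale_regression(X: Sequence[Sequence[float]],
                          y: Sequence[float]) -> Tuple[float, List[float]]:
    """Return (initial theta_0, initial [beta_1, ..., beta_k])."""
    if any(v <= 0 for v in y):
        raise ValueError("log(y) requires positive responses")
    # Step 1: regress log(y) on the regression effects.
    beta = ols(X, [math.log(v) for v in y])
    beta0, slopes = beta[0], beta[1:]
    # Step 2: scale-normalize each response, then let the initializer set s_0.
    w = [yi / math.exp(beta0 + sum(b * xj for b, xj in zip(slopes, xi)))
         for xi, yi in zip(X, y)]
    s0 = exponential_parminit(w)
    # Step 3: theta_0 = s_0 * exp(beta_0).
    return s0 * math.exp(beta0), slopes


# Noise-free toy data generated with theta_0 = 10, beta = (0.5, -0.2).
X = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
y = [10.0 * math.exp(0.5 * a - 0.2 * b) for a, b in X]
theta0_init, beta_init = init_scale_regression(X, y)
print(theta0_init, beta_init)  # close to 10.0 and [0.5, -0.2]
```

With noise-free data the regression recovers the generating coefficients, every $w_i$ is 1, and so $s_0 = 1$ and $\theta_0 = \exp(\beta_0)$. With real data, $s_0$ absorbs the typical size of the error term.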

When you request estimates to be written to the output (either the ODS displayed output or the OUTEST= data set), the estimate of the base value of the first distribution parameter is reported. If the first parameter is the log-transformed scale parameter, then the estimate of $\log(\theta_0)$ is reported; otherwise, the estimate of $\theta_0$ is reported. The transform of the first parameter of a distribution dist is controlled by the dist_SCALETRANSFORM function that is defined for it.

When regression effects are estimated, the estimate of the scale parameter depends on the values of the regressors and the estimates of the regression parameters. This dependency results in a potentially different distribution for each observation. To make estimates of the cumulative distribution function (CDF) and probability density function (PDF) comparable across distributions and comparable to the empirical distribution function (EDF), PROC HPSEVERITY computes and reports the CDF and PDF estimates from a representative distribution. The representative distribution is a mixture of a certain number of distributions, where each distribution differs only in the value of the scale parameter. You can specify the number of distributions in the mixture and how their scale values are chosen by using the DFMIXTURE= option in the SCALEMODEL statement.

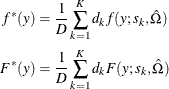

Let N denote the number of observations that are used for estimation, K denote the number of components in the mixture distribution, $s_k$ denote the scale parameter of the kth mixture component, and $d_k$ denote the weight associated with the kth mixture component.

Let $f(y; s_k, \hat{\Omega})$ and $F(y; s_k, \hat{\Omega})$ denote the PDF and CDF, respectively, of the kth component distribution, where $\hat{\Omega}$ denotes the set of estimates of all parameters of the distribution other than the scale parameter. Then, the PDF and CDF estimates, $f^*(y)$ and $F^*(y)$, respectively, of the mixture distribution at y are computed as

\[ f^*(y) = \frac{1}{D} \sum _{k=1}^{K} d_ k f(y; s_ k, \hat{\Omega }) \]

\[ F^*(y) = \frac{1}{D} \sum _{k=1}^{K} d_ k F(y; s_ k, \hat{\Omega }) \]

where D is the normalization factor ($D = \sum_{k=1}^{K} d_k$).
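
As a sketch of the mixture formulas, the following Python code evaluates $F^*(y)$ and $f^*(y)$ for a mixture whose components differ only in scale. The exponential distribution is an assumed example component: its only parameter is the scale, so $\hat{\Omega}$ is empty. The function names are hypothetical.

```python
import math
from typing import Callable, Sequence


def exp_cdf(y: float, scale: float) -> float:
    """CDF of an exponential distribution with the given scale (example component)."""
    return 1.0 - math.exp(-y / scale) if y > 0 else 0.0


def exp_pdf(y: float, scale: float) -> float:
    """PDF of an exponential distribution with the given scale (example component)."""
    return math.exp(-y / scale) / scale if y >= 0 else 0.0


def mixture_value(y: float, scales: Sequence[float], weights: Sequence[float],
                  component: Callable[[float, float], float]) -> float:
    """Return (1/D) * sum_k d_k * component(y, s_k), where D = sum_k d_k."""
    if not scales or len(scales) != len(weights):
        raise ValueError("scales and weights must be non-empty and of equal length")
    D = sum(weights)
    return sum(d * component(y, s) for s, d in zip(scales, weights)) / D


# Three components that differ only in scale; the third counts twice as much.
scales, weights = [1.0, 2.0, 4.0], [1.0, 1.0, 2.0]
F_star = mixture_value(3.0, scales, weights, exp_cdf)  # F*(3)
f_star = mixture_value(3.0, scales, weights, exp_pdf)  # f*(3)
print(F_star, f_star)
```

Each call to `mixture_value` costs K component evaluations. That per-observation cost drives the complexity of the DFMIXTURE= methods.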

PROC HPSEVERITY uses the $F^*(y)$ values to compute the EDF-based statistics of fit and to create the OUTCDF= data set and the CDF plots. The PDF estimates that it plots in the PDF plots are the $f^*(y)$ values.

The scale values $s_k$ for the K mixture components are derived from the set $\{\hat{\lambda}_i\}$ ($i = 1, \dots, N$) of N linear predictor values, where $\hat{\lambda}_i$ denotes the estimate of the linear predictor due to observation i. It is computed as

\[ \hat{\lambda }_ i = \log (\hat{\theta }_0) + \sum _{j=1}^{k} \hat{\beta }_ j x_{ij} \]

where $\hat{\theta}_0$ is an estimate of the base value of the scale parameter, $\hat{\beta}_j$ are the estimates of regression coefficients, and $x_{ij}$ is the value of the jth regression effect in observation i.

Let $w_i$ denote the weight of observation i. If you specify the WEIGHT statement, then the weight is equal to the value of the specified weight variable for the corresponding observation in the DATA= data set; otherwise, the weight is set to 1.

You can specify one of the following method-names in the DFMIXTURE= option in the SCALEMODEL statement to specify the method of choosing K and the corresponding $s_k$ and $d_k$ values:

- FULL

-

In this method, there are as many mixture components as the number of observations that are used for estimation. In other words, K = N,

, and

, and  (

( ). This is the slowest method, because it requires

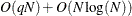

). This is the slowest method, because it requires  computations to compute the mixture CDF

computations to compute the mixture CDF  or the mixture PDF

or the mixture PDF  of one observation. For N observations, the computational complexity in terms of number of CDF or PDF evaluations is

of one observation. For N observations, the computational complexity in terms of number of CDF or PDF evaluations is  . Even for moderately large values of N, the time that is taken to compute the mixture CDF and PDF can significantly exceed the time that is taken to estimate the

model parameters. So it is recommended that you use the FULL method only for small data sets.

. Even for moderately large values of N, the time that is taken to compute the mixture CDF and PDF can significantly exceed the time that is taken to estimate the

model parameters. So it is recommended that you use the FULL method only for small data sets.

- MEAN

  In this method, the mixture contains only one distribution, whose scale value is determined by the mean of the linear predictor values that are implied by all the observations. In other words, $s_1$ is computed as

  \[ s_1 = \exp \left(\frac{1}{N} \sum _{i=1}^{N} \hat{\lambda }_ i \right) \]

  The component’s weight $d_1$ is set to 1.

  This method is the fastest because it requires only one CDF or PDF evaluation per observation. The computational complexity is $O(N)$ for N observations.

  If you do not specify the DFMIXTURE= option in the SCALEMODEL statement, then this is the default method.

- QUANTILE

  In this method, a certain number of quantiles are chosen from the set of all linear predictor values. If you specify a value of $q$ for the K= option when specifying this method, then $K = q - 1$ and $s_k$ ($k = 1, \dots, K$) is computed as $s_k = \exp(\tilde{\lambda}_k)$, where $\tilde{\lambda}_k$ is the kth $q$-quantile from the set $\{\hat{\lambda}_i\}$ ($i = 1, \dots, N$). The weight of each of the components ($d_k$) is assumed to be 1 for this method.

  The default value of $q$ is 2, which implies a one-point mixture that has a distribution whose scale value is equal to the median scale value.

  For this method, PROC HPSEVERITY needs to sort the N linear predictor values in the set $\{\hat{\lambda}_i\}$; the sorting requires $O(N \log N)$ computations. Then, computing the mixture estimate of one observation requires $O(q-1)$ CDF or PDF evaluations. Hence, the computational complexity of this method is $O(qN) + O(N \log N)$ for computing a mixture CDF or PDF of N observations. For $q \ll N$, the QUANTILE method is significantly faster than the FULL method.

- RANDOM

  In this method, a uniform random sample of observations is chosen, and the mixture contains the distributions that are implied by those observations. If you specify a value of $r$ for the K= option when specifying this method, then the size of the sample is $r$. Hence, $K = r$. If $l_j$ denotes the index of the jth observation in the sample ($j = 1, \dots, r$), such that $1 \le l_j \le N$, then the scale of the kth component distribution in the mixture is $s_k = \exp(\hat{\lambda}_{l_k})$. The weight of each of the components ($d_k$) is assumed to be 1 for this method.

  You can also specify the seed to be used for generating the random sample by using the SEED= option for this method. The same sample of observations is used for all models.

  Computing a mixture estimate of one observation requires $O(r)$ CDF or PDF evaluations. Hence, the computational complexity of this method is $O(rN)$ for computing a mixture CDF or PDF of N observations. For $r \ll N$, the RANDOM method is significantly faster than the FULL method.
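
The four DFMIXTURE= methods differ only in how they pick the component scales $s_k$ and weights $d_k$ from the linear predictors $\hat{\lambda}_i$. The Python sketch below mirrors the descriptions above under several assumptions:

- The QUANTILE method uses `statistics.quantiles` with the `'inclusive'` interpolation rule. This section does not state the procedure's exact quantile definition.
- The RANDOM method draws r distinct observations with `random.Random(seed).sample`, so sampling without replacement is assumed.
- The function names `linear_predictors` and `mixture_components` are hypothetical.

```python
import math
import random
import statistics
from typing import List, Optional, Sequence, Tuple


def linear_predictors(theta0_hat: float, betas_hat: Sequence[float],
                      X: Sequence[Sequence[float]]) -> List[float]:
    """lambda_i = log(theta0_hat) + sum_j beta_j * x_ij for every observation."""
    return [math.log(theta0_hat) + sum(b * x for b, x in zip(betas_hat, xi))
            for xi in X]


def mixture_components(lam: Sequence[float], method: str = "MEAN",
                       k: Optional[int] = None,
                       obs_weights: Optional[Sequence[float]] = None,
                       seed: int = 0) -> Tuple[List[float], List[float]]:
    """Return (scales s_k, weights d_k) for one DFMIXTURE= method (a sketch)."""
    n = len(lam)
    if method == "FULL":
        # K = N, s_k = exp(lambda_k), d_k = w_k (w_k = 1 without a WEIGHT statement).
        d = list(obs_weights) if obs_weights is not None else [1.0] * n
        return [math.exp(v) for v in lam], d
    if method == "MEAN":
        # One component whose scale is exp(mean of the linear predictors).
        return [math.exp(sum(lam) / n)], [1.0]
    if method == "QUANTILE":
        q = 2 if k is None else k  # default q = 2: one component at the median
        if q < 2:
            raise ValueError("QUANTILE needs q >= 2")
        # q - 1 cut points; the 'inclusive' interpolation rule is an assumption.
        cuts = statistics.quantiles(lam, n=q, method="inclusive")
        return [math.exp(v) for v in cuts], [1.0] * len(cuts)
    if method == "RANDOM":
        if k is None:
            raise ValueError("this sketch requires an explicit sample size r")
        # r distinct observations; not expected to match the sample SEED= gives.
        idx = random.Random(seed).sample(range(n), k)
        return [math.exp(lam[i]) for i in idx], [1.0] * k
    raise ValueError(f"unknown method: {method}")


# Illustrative values: theta0_hat = 100, one regressor with coefficient 0.25.
lam = linear_predictors(100.0, [0.25], [[0.0], [1.0], [2.0], [3.0], [4.0]])
for name, kk in [("FULL", None), ("MEAN", None), ("QUANTILE", 4), ("RANDOM", 2)]:
    s, d = mixture_components(lam, name, k=kk, seed=42)
    print(name, len(s), [round(v, 2) for v in s], d)
```

The printed component counts (5, 1, 3, 2) show why FULL costs $O(N)$ evaluations per observation, whereas the other methods cost 1, $q-1$, or $r$.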