Cleanse Data

Introduction

Use the Cleanse Data

directive to create jobs that improve the quality of your Hadoop data.

Your jobs can combine any of the data quality transformations in any

order. When you run your job, the transformations will be executed

in the order in which you defined them.

About Locales, Definitions, and the Quality Knowledge Base

Most of the data quality

transformations ask you to select a source column, a locale, and a

definition. A locale represents a distinct

alphabetical language, combined with a specified regional usage of

that language. For example, the English, United States locale applies

only to that region. The locale English, England addresses different

usage or data content for the same alphabetic language.

A locale consists of

a collection of definitions. Definitions

tell SAS how to cleanse data. For example, the Street Address definition

for the English, United States locale describes the structure of the

first part of an American mailing address. In the locale Spanish,

Mexico, the Street Address definition accommodates differences in

mailing address structure as well as the differences in language and

alphabet.

Locales and definitions

make up a SAS Quality Knowledge Base. A

Quality Knowledge Base is deployed on your Hadoop cluster. When you

run a data cleansing job in Hadoop, the SAS software on your cluster

accesses the Quality Knowledge Base to transform your data.

In SAS Data Loader you

specify a default locale, which should match the typical locale of

your source data. The default locale is selected in the QKB panel

of the Configuration window, as described in QKB Panel. You can override the default locale in any of the data

quality transformations. The override applies only to the current

transformation.

To learn more about

the Quality Knowledge Base, refer to the related document titles in Recommended Reading.

To learn about the output

that is generated by a given definition, refer to the online Help

for the SAS Quality Knowledge Base, in the topic Global Definitions.

Enable the Spark Runtime Target

Support for the Apache

Spark runtime target is enabled in the Hadoop Configuration panel

of the Configuration window. When Spark is

enabled, new instances of the following directives use Spark by default:

The default runtime target can be overridden using

the Settings menu. To learn more about runtime

targets, see Enable Support for Impala and Spark.

-

Cleanse Data

-

Transform Data

-

Cluster-Survive Data

Note: Changing the default runtime

target does not change the runtime target for saved directives. Saved

directives continue to run with their existing runtime target unless

they are opened, reconfigured, and saved.

Note: Enabling Spark changes the

truncation of character columns, as described in the Usage Notes for Spark.

Select a Source Table

When you use a data

cleansing directive to create and run a job, you begin by selecting

a source table.

Follow these steps to

select a source table:

-

Scroll through the list or grid of data sources or schemas, and then click the data source (also known as a schema) that contains your source table. Or you can click Select a Recent Table and quickly choose from that list.

-

If you opened a data source, click the source table and then click Next.Note: To explore the contents of source tables, click a table and click Data Sample

, Table Viewer

, Table Viewer , or (if available) View Profile

, or (if available) View Profile  .

Note: To override the default maximum length of SAS character columns, click a source table and click Edit Advanced Options

.

Note: To override the default maximum length of SAS character columns, click a source table and click Edit Advanced Options . If your directive uses the Spark runtime target

(click Settings to check), then see String Truncation in Spark-Enabled Directives.

. If your directive uses the Spark runtime target

(click Settings to check), then see String Truncation in Spark-Enabled Directives. -

In the Transformation task, click a data cleansing transformation to begin building your job.

Select a Data Cleansing Transformation

In a new job, after

you select a source table, you click a data cleansing transformation.

Click below to find usage information for your selected transformation:

Filter Data Transformation

Use the Filter Data

transformation at the beginning of a job to decrease the number of

rows that will be processed in subsequent transformations. The filter

is specified as a user-written expression. The expression uses SAS

DS2 functions and the MapReduce runtime environment, or DataFlux Expression

Engine Language functions (EEL functions) and the Spark runtime environment.

For a given source row, if the filter evaluates to true, then the

row is included in the target table.

Follow these steps to

use the Filter Data transformation:

-

If this is the first transformation in a new job, select a source table.

-

In the Transformation task, click Filter Data.

-

In the Filter Data transformation, choose one of the following:

-

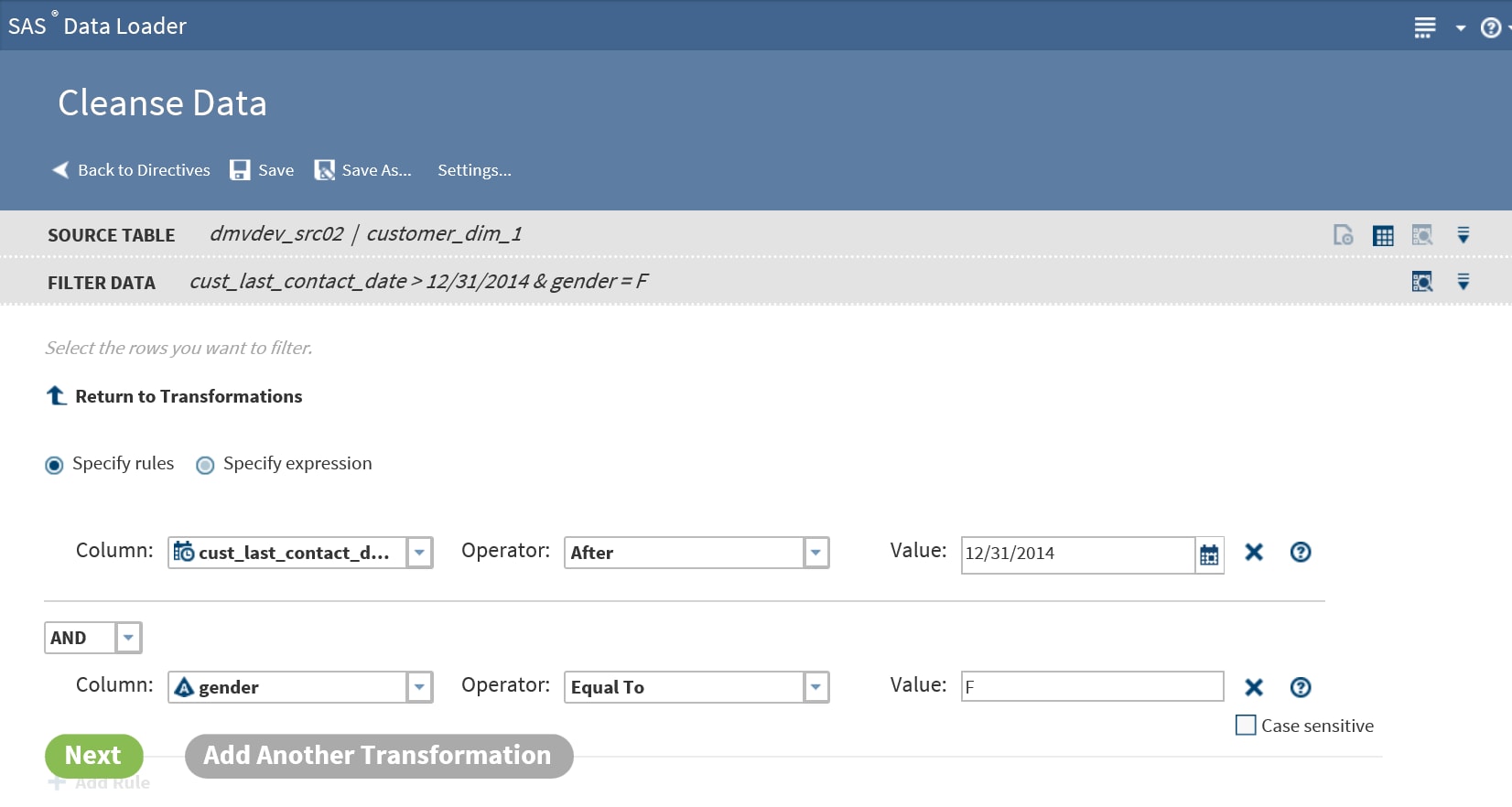

To filter rows using one or more rules, click Specify rules and proceed to the next step. You can specify multiple rules and apply them using logical AND and OR operators.

-

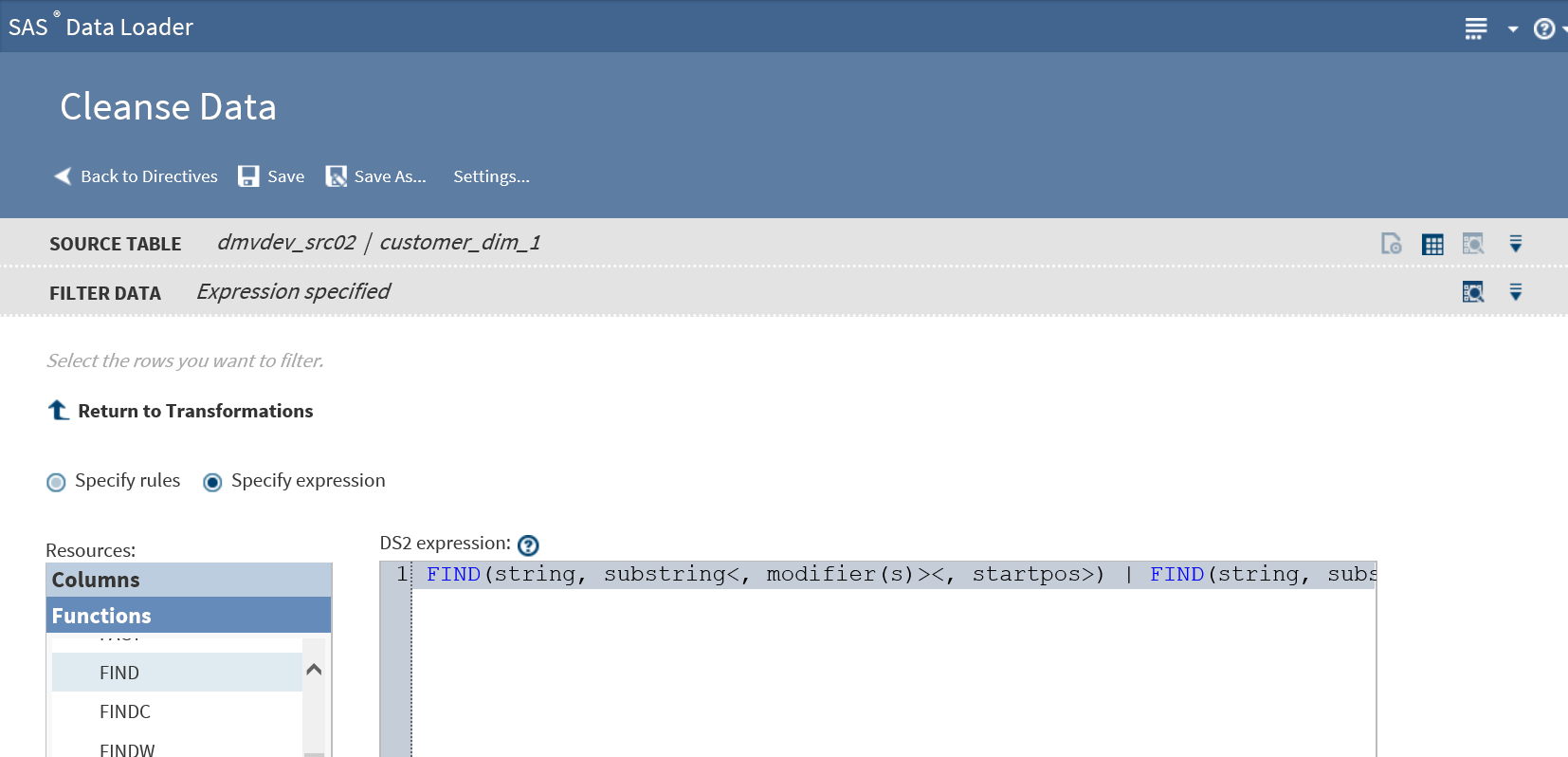

To filter rows with a user-written expression, click Specify expression and go to Step 5.

TipIf the table that you selected has been profiled, an ellipsis button ( ) appears next to the filter value selection. Click

that button to view profile results while building your filters. For more information

about generating profile reports for tables, see Profile Data.

) appears next to the filter value selection. Click

that button to view profile results while building your filters. For more information

about generating profile reports for tables, see Profile Data. -

-

To filter rows by specifying one or more rules, follow these steps:

-

Click Select a column and choose the source column that forms the basis of your rule.

-

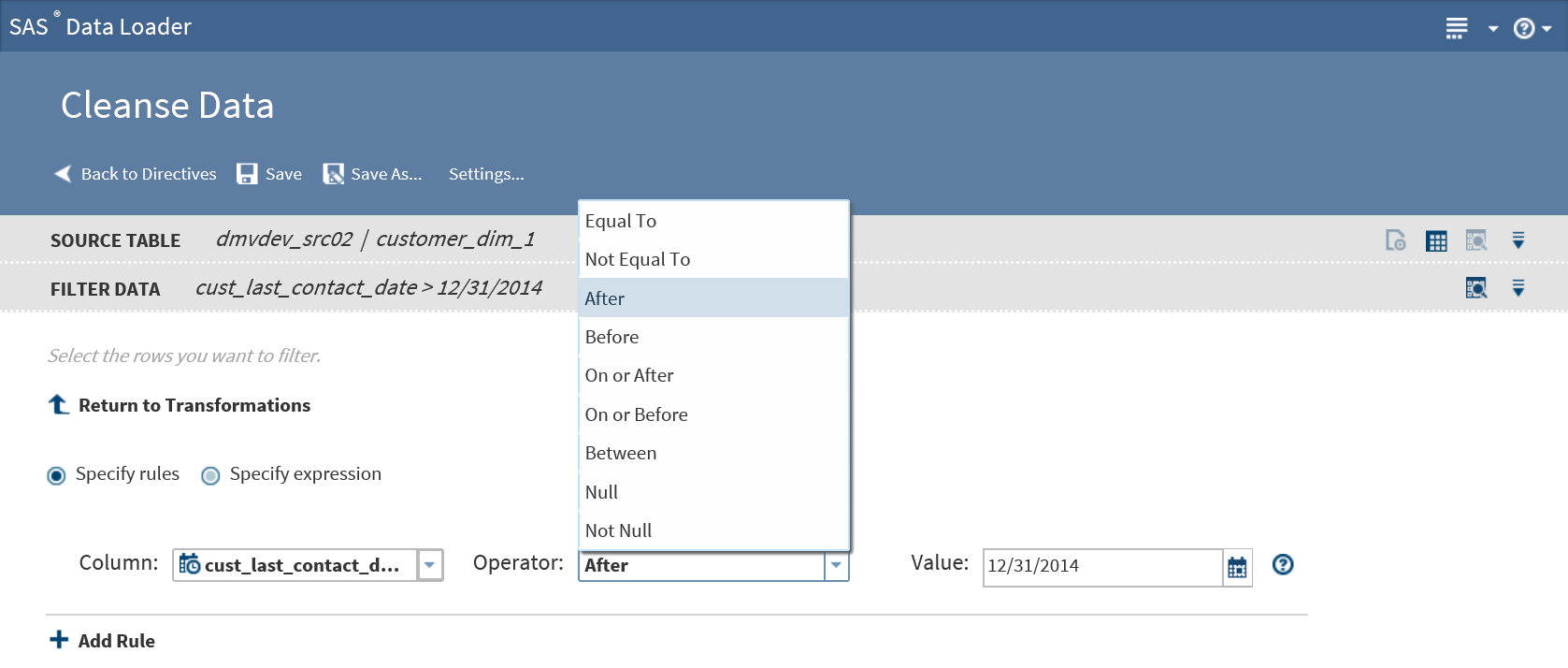

Click and select a logical Operator. The operators that are available depend on the type of the data in the source column. For example, the following image shows the operators that are available for the date/time data type:

-

In the Value field, add the source column value that completes the expression. In the preceding example, the rule can be read as “Filter from the target all source rows with a last contact date after December 31, 2014.”

-

-

To filter rows when either the new rule or the preceding rule are true, change the AND condition to OR.

-

When your rules are complete, go to Step 6.

-

-

To filter rows using an expression, follow these steps:

-

In the expression text box, enter or paste your expression.Your expression can use either SAS DS2 functions (with the MapReduce runtime target,) or DataFlux EEL functions (with the Spark runtime target). Click Settings to display the selected runtime target. To learn more about runtime targets, see Enable Support for Impala and Spark.To learn the requirements for expressions, see Develop Expressions for Directives .

-

To add a function to your expression, click Functions in the Resources box, expand a category, select a function, and click

.

.

To add column names to your expression, position the cursor in the expression text box, click Columns in the Resources box, click a source column, and then click

To add column names to your expression, position the cursor in the expression text box, click Columns in the Resources box, click a source column, and then click .

.

-

-

When your rules or expression are complete, click Next to select a target table and run your job.To add another data cleansing transformation, click Add Another Transformation and see Select a Data Cleansing Transformation.

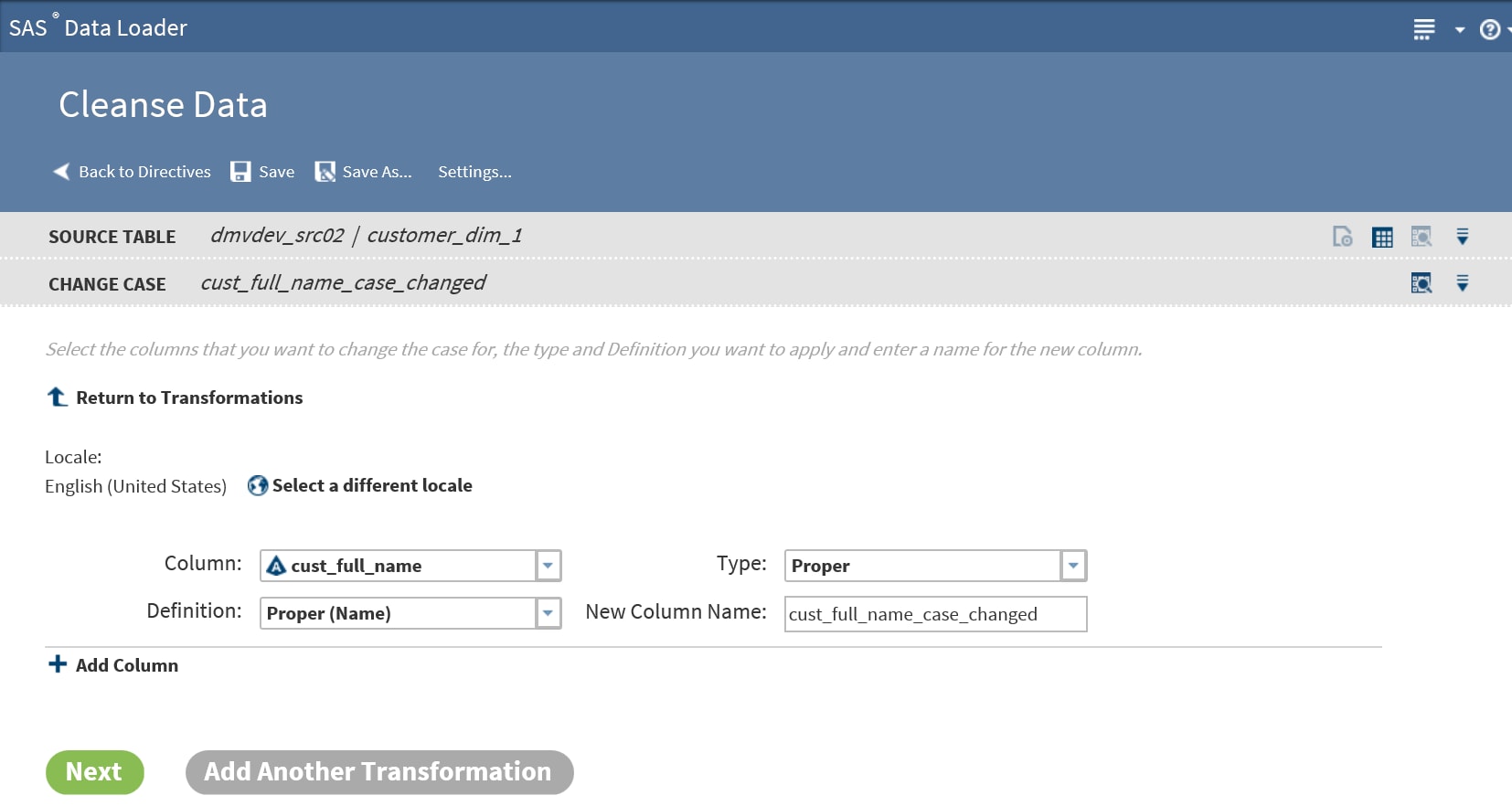

Change Case Transformation

Use the Change Case

transformation to standardize the casing of selected character columns.

You can convert to ALL UPPERCASE, all lowercase, or Initial Capital

Letters (or Proper Case).

Follow these steps to

use the Change Case transformation:

-

If this is the first transformation in a new job, select a source table.

-

In the Transformation task, click Change Case.

-

In the Change Case transformation, accept or change the default Locale. The selected locale needs to reflect the language and region that applies to the content in the source table.

-

Select a Type of casing for the selected column.

-

Select the case Definition that best fits the content of your column. For the source column cust_full_name, and for Proper casing, you would select the case definition Proper (Name).The case definition is part of the SAS Quality Knowledge Base that is installed on your Hadoop cluster. The case definition determines how case changes are applied to your data, based on your data content and selected locale.

-

Accept or change the default value in the field New Column Name.

-

Click Add Column

to define another case change.

to define another case change.

-

Click Next to select a target table and run your job.To add another data cleansing transformation, click Add Another Transformation and see Select a Data Cleansing Transformation.

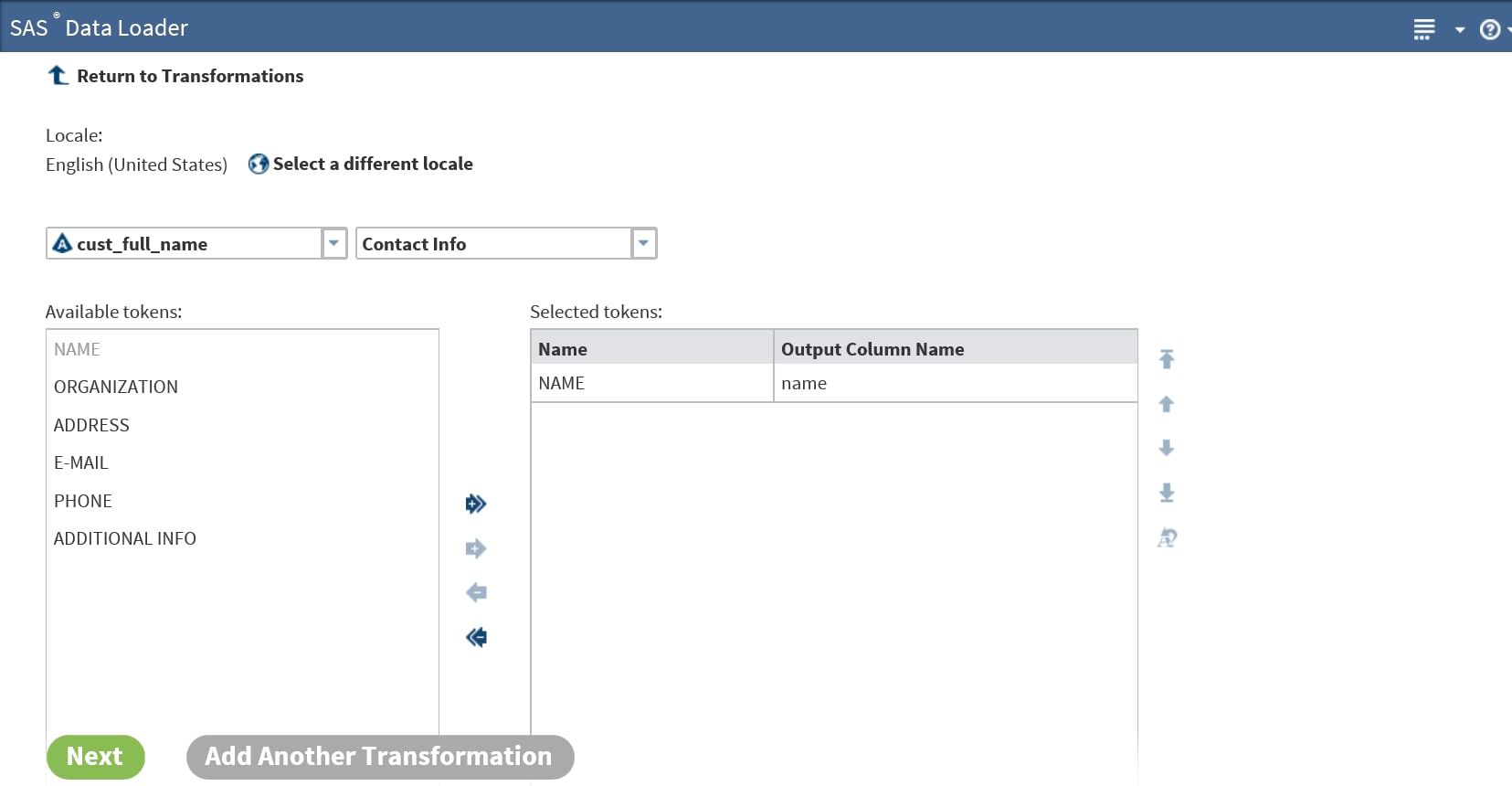

Field Extraction Transformation

Use the Field Extraction

transformation to copy tokens from a source column to new columns

in the target. Tokens represent types of content that can be extracted

using an extraction definition. The available extraction definitions

provide locale-specific information that enables extraction.

Follow these steps to

use the Field Extraction transformation:

-

If this is the first transformation in a new job, select a source table.

-

In the Transformation task, click Field Extraction.

-

In the Field Extraction transformation, accept or change the default Locale.

-

Click Column and select a column from which you want to copy data to the target.

-

Click Definition and select the set of Field Extraction definitions that best fit your source data. Typical available selections include Contact Info and Product Data. The list of tokens that appear after you make your selection will show if you selected the appropriate definition.The tokens that you select are used to parse each source row and extract values of the specified type.

-

Click one or more tokens that you want to extract from the selected column and click

. The tokens and default new column names appear in Selected

Tokens.

To select all tokens, click

. The tokens and default new column names appear in Selected

Tokens.

To select all tokens, click .

.

-

To change the default column name, click on the name in Output Column Name.

-

To reorder the columns in the target, click a row in Selected tokens and then click the up and down icons to the right of Selected tokens. The top row in Selected tokens specifies the first row in the target.

-

Click Next to select a target table and run your job.To add another data cleansing transformation, click Add Another Transformation and see Select a Data Cleansing Transformation.

Parse Data Transformation

Use the Parse Data transformation

to extract tokens from a source column and add the token to a new

column. A token is a meaningful subset of a data value that provides

a basis for analysis. For example, for a column that contains phone

numbers, you could extract the area code token and insert that value

in a new column. You could then analyze the source table by grouping

rows by area code.

Follow these steps to

learn how to use the Parse Data transformation:

-

If this is the first transformation in a new job, select a source table.

-

In the Transformation task, click Parse Data.

-

In the Parse Data transformation, click Select a column and select a source column from the list.

-

Click the Definition field and click the definition that you will apply to the selected column.

-

In the Available tokens list, click the token that you will copy out to a new target column.

-

Click the right plus arrow

to apply the token to a new column. You can change

the suggested Output Column Name.

to apply the token to a new column. You can change

the suggested Output Column Name.

-

At this point you can choose other tokens to add to other new columns in the target table.

-

If you have multiple tokens, you can arrange the target columns using the up and down arrow icons.

-

To remove a token column, select it and click the minus arrow icon

-

Click Next to select a target table and run your job.To add another data cleansing transformation, click Add Another Transformation and see Select a Data Cleansing Transformation.

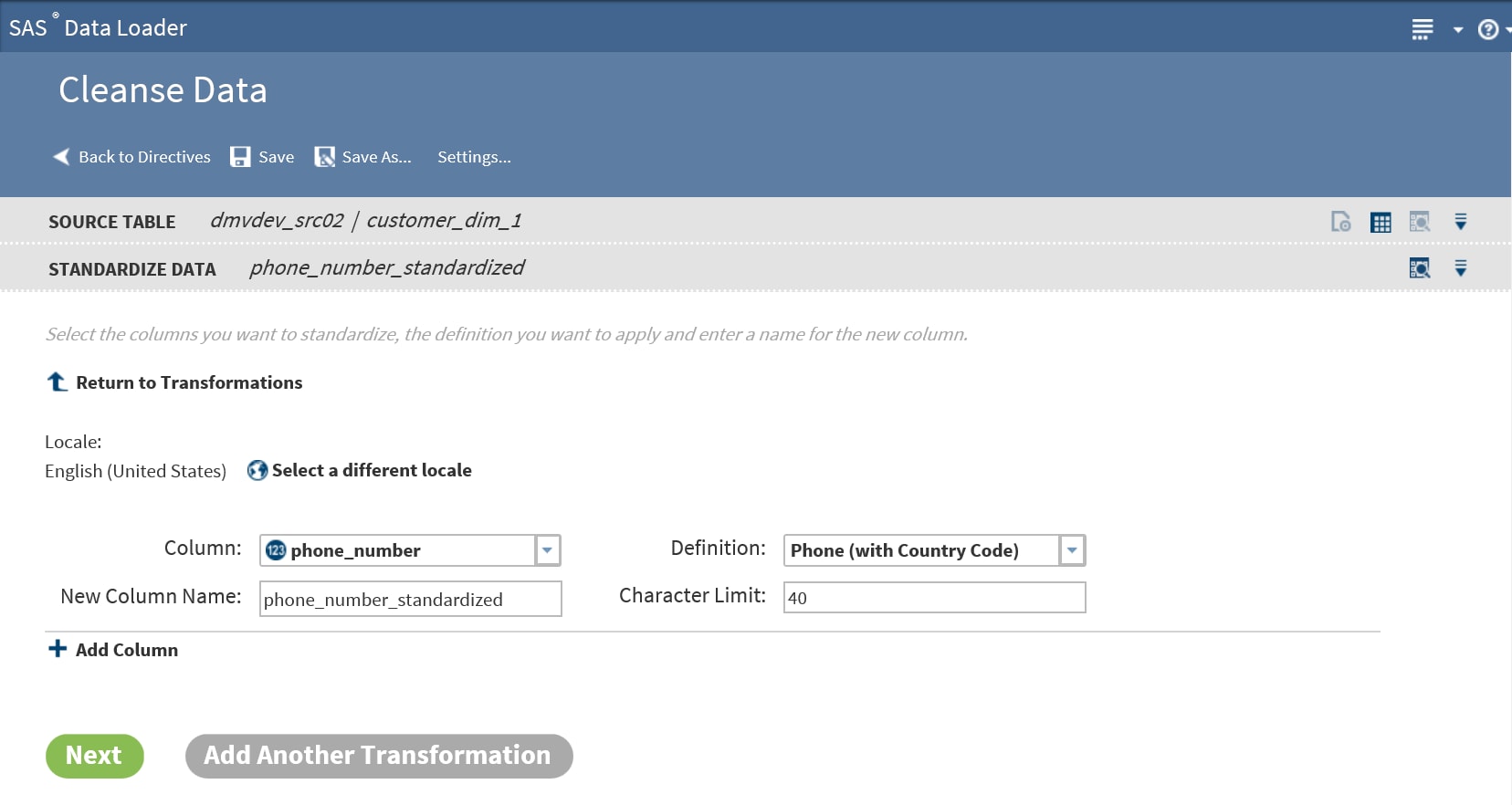

Standardization Transformation

Follow these steps with

your own data to learn how to use the Standardization transformation.

This example creates a job that standardizes a column of state names

in a table of customer data.

-

If this is the first transformation in your job, select a source table.

-

In the Transformation task, click Standardize Data.

-

In the Standardize Data transformation, click Select a Column and select the column from the list.

-

Click Select a Definition and select the standardization definition to be applied to the selected column. Standardization definitions are available for certain character strings and numeric values. Also, standardization definitions are available for generic actions that are independent of content, such as Space Removal and Multiple Space Collapse. To learn about the standardization definitions, Standardization Definitions in the online Help for the SAS Quality Knowledge Base.

-

Standardized values are applied to a new column in the target. You can change the default name of the new column by clicking New column name.

-

To save space or truncate long values, you can change the Character limit from its default value of 256.

-

The standardization transformation is now completely defined. By default, the target table contains both the original source column and the new standardized column. If you would prefer to remove the source column in the target, or make other changes to target columns, add a Manage Columns transformation toward the end of your job.Click Next to select a target table and run your job.To add another data cleansing transformation, click Add Another Transformation and see Select a Data Cleansing Transformation.

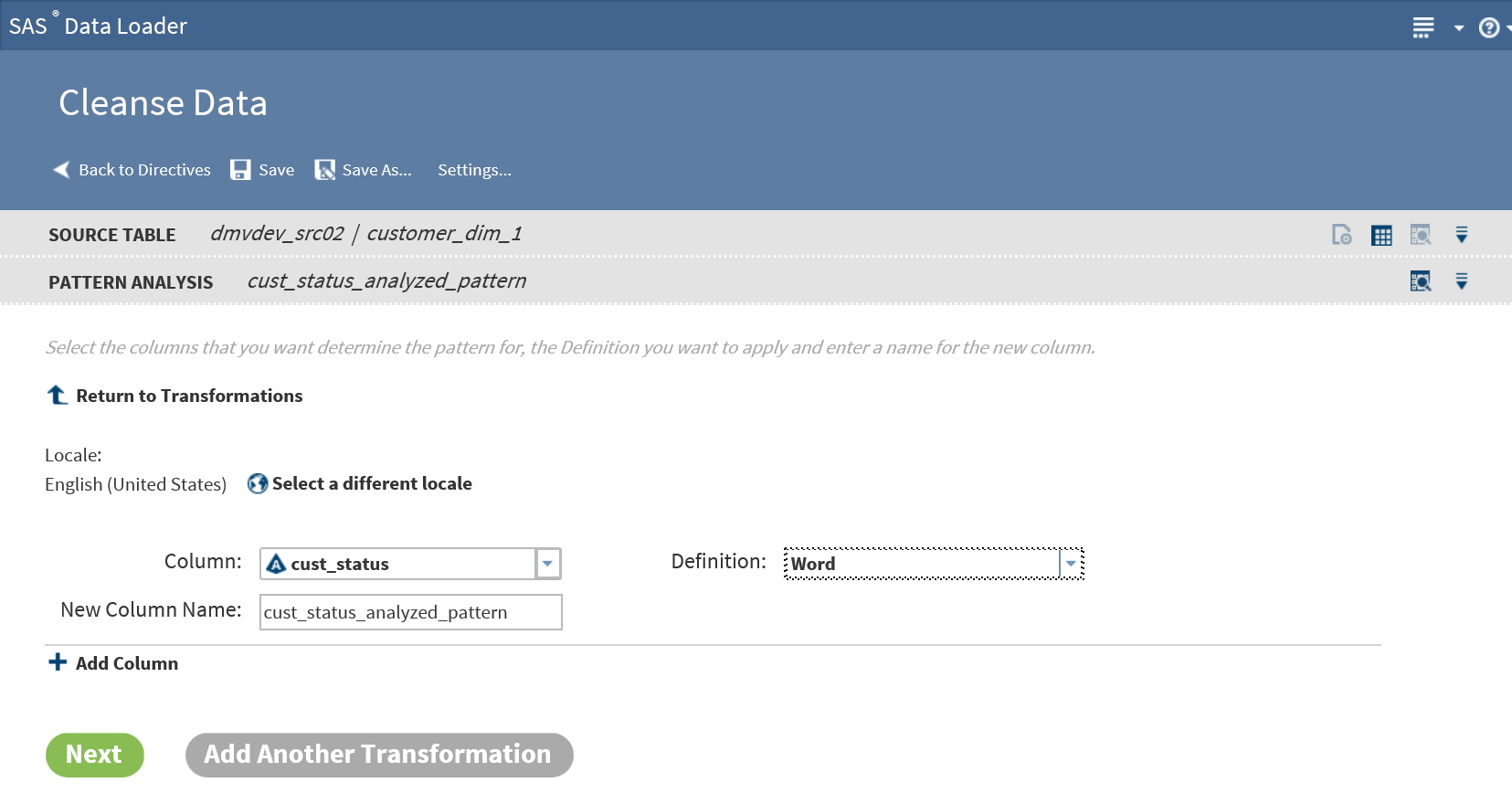

Pattern Analysis Transformation

The Pattern Analysis

transformation reads a source row and generates a corresponding pattern

value in the target. The content of the pattern value describes the

content of the data. For example, character pattern analysis generate

patterns that show if each character is uppercase, lowercase, or numeric.

The patterns form the

basis for structural analysis. For example, you can apply a Filter

transformation to the output of a pattern analysis. The filter can

exclude the expected pattern and write to the target the rows that

are structurally invalid.

Follow these steps to

use the Pattern Analysis transformation:

-

If this is the first transformation in your job, select a source table.

-

In the Transformation task, click Pattern Analysis.

-

In the Pattern Analysis task, accept or change the default Locale. The selected locale needs to reflect the language and region that applies to the content in the source table.

-

Click Select a column and click the column that you want to analyze.

-

Click Definition and select a pattern analysis definition.CharacterGenerates patterns that represent the types of each character in the source. A indicates uppercase, a lowercase, 9 numbers, and * other (punctuation, and so on). Blanks in the source are replicated as blanks in the pattern. Example: the source value 1 877-846-Flux generates the pattern 9 999*999*Aaaa.Character (Script Identification)Generates patterns that identify the Unicode character set of each character in the source. Eleven or more character sets can be detected, including Latin, Arabic, Kanji/Han, Katakana, Cyrillic, and Numeric. Uppercase and lowercase are detected for at least three character sets. Example: (7F, SAS Institute)スズキイチロウ generates *9L* LLL Lllllllll*アアアアアアア.Note: The full mapping of pattern characters to Unicode character sets is provided in the Pattern Analysis Definitions in the online Help for the Contact Information Quality Knowledge Base.WordGenerates patterns that represent the types of words in the source. A represents alphabetic words, 9 numeric, M mixed, and * other. Example: 216 E 116th St generates 9 A M A.Word (Script Identification)Generates patterns that represent the Unicode character set of each word in the source. Eleven or more character sets can be detected, including Latin, Arabic, Kanji/Han, Katakana, Cyrillic, and Numeric. W indicates a potentially invalid word that contains multiple character sets. Example: (7F, SAS Institute)スズキイチロウ generates *9L* L L*ア.

-

Review and update the default New Column Name.

-

Review and update as needed the default New Column Name.

-

To generate patterns for other columns, click

.

.

-

Click Next to select a target table and run your job.To add another data cleansing transformation, click Add Another Transformation and see Select a Data Cleansing Transformation.

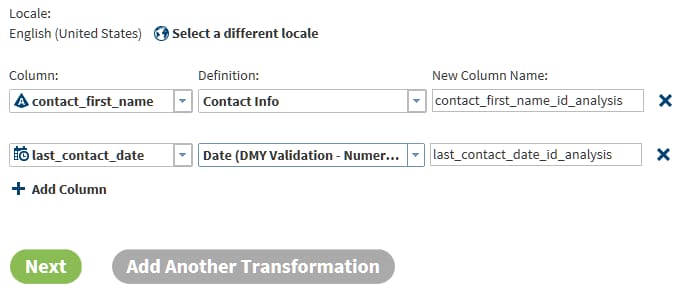

Identification Analysis Transformation

Use the Identification

Analysis transformation to report on the type of the content in a

given column. The content types that can be detected include contact

information, dates, email, field names, offensive content, and phone

numbers. The result of the analysis is added to a new column in the

target table. You can analyze one column for multiple content types,

and you can analyze multiple columns in the source table.

Follow these steps to

use the Identification Analysis transformation:

-

If this is the first transformation in your job, select a source table.

-

In the Transformation task, click Identification Analysis.

-

In the Identification Analysis transformation, click Select a Column, and then select a column for analysis.

-

Click Select a Definition and choose the content type that you want to apply to the source column.

-

In the New Column Name field, a name is suggested for the column that will be added to the target. The new column will contain the results of the identification analysis. Click in the text field for New Column Name to change the suggested column name.

-

To analyze another column, or to analyze the same column with a different definition, click Add Column.

-

Click Next to select a target table and run your job.To add another data cleansing transformation, click Add Another Transformation and see Select a Data Cleansing Transformation.

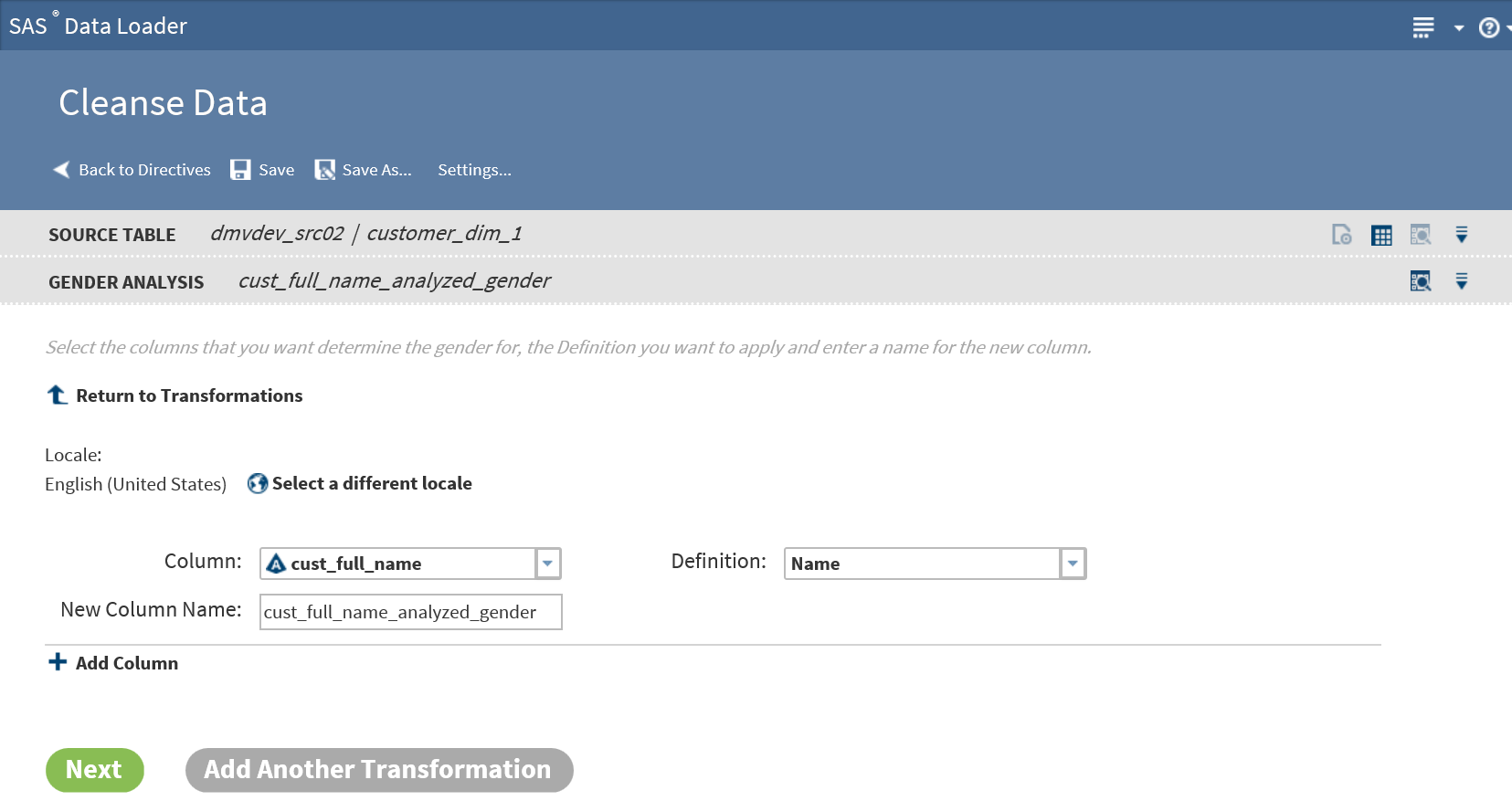

Gender Analysis Transformation

The Gender Analysis

transformation analyzes columns of names and generates columns that

indicate the probable gender of the names.

Follow these steps to

use the Gender Analysis transformation:

-

If this is the first transformation in your job, select a source table.

-

In the Transformation task, click Gender Analysis.

-

In the Gender Analysis transformation, review and update the default Locale as needed to ensure that the locale matches the content of your source data.

-

Click Select a Column and click the column of name data in your source table.

-

Click Definition and click Name.

-

To analyze a second column of name data, click

.

.

-

Review and update as needed the default New Column Name.

-

Click Next to select a target table and run your job.To add another data cleansing transformation, click Add Another Transformation and see Select a Data Cleansing Transformation.

Generate Match Codes Transformation

The Generate Match Codes

transformation generates match codes for specified columns. The generated

match codes are then added to new columns in the target table. The

match codes are generated based on a definition and a sensitivity.

The definition specifies the type of the content in the column. The

sensitivity determines the degree of exactitude that is required in

order for two data values to be declared a match. Higher sensitivity

values specify that data values must be more similar to be declared

a match. Lower sensitivity values enable matching with less similarity.

The level of sensitivity is reflected in the length and complexity

of the match codes.

Match codes can be used

to find columns that contain similar data. For example, you can generate

match codes for name and address columns, and then compare the match

codes to detect duplicates.

Follow these steps to

use the Generate Match Codes transformation:

-

If this is the first transformation in your job, select a source table.

-

In the Transformation task, click Generate Match Codes.

-

In the Generate Match Codes transformation, click Select a Column and then click the column for which you want to generate match codes.

-

Click Select a Definition and then click the definition that you want to use to generate match codes.

-

To change the default sensitivity value, click the Sensitivity field and select a new value. Lower sensitivity numbers give you more matches (identical match codes) and perhaps more matching errors. Higher sensitivity numbers produce the same match code only when data values are nearly identical.

-

Click Next to select a target table and run your job.To add another data cleansing transformation, click Add Another Transformation and see Select a Data Cleansing Transformation.

Manage Columns Transformation

Use the Manage Columns

transformation to remove, reorder, rename, change type, and change

the length of the columns in the target table. You can also use Manage

Columns to add generated data to new columns or to modify or replace

data in existing columns. The new or changed data is generated by

user-written expressions.

Follow these steps to

learn how to use the Manage Columns transformation:

-

If this is the first transformation in your job, select a source table.

-

In the Transformation task, click Manage Columns.

-

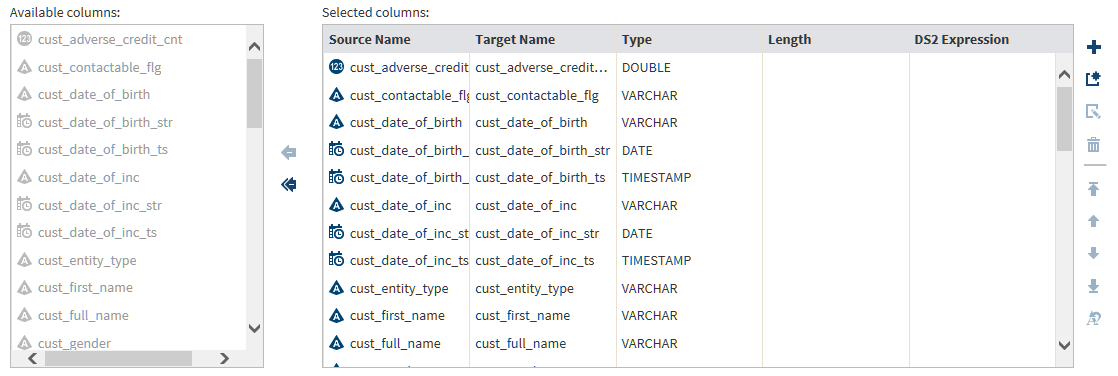

In the Manage Columns transformation, columns are listed in order of appearance. The top column is the first or leftmost column.

Note the arrow icons between Available columns and Selected columns. To remove a column from the target, click the column name on the right and click the top arrow. To move all columns out of the target, click the double-arrow icon. After you remove a column, arrows will appear so that you can move columns from Available to Selected.Initially, all columns are selected for the target table, including all of the new columns that you added in prior transformations.

Note the arrow icons between Available columns and Selected columns. To remove a column from the target, click the column name on the right and click the top arrow. To move all columns out of the target, click the double-arrow icon. After you remove a column, arrows will appear so that you can move columns from Available to Selected.Initially, all columns are selected for the target table, including all of the new columns that you added in prior transformations. -

Locate the icons on the right side of Selected columns. These icons provide the following functions:

-

Add a new column and enter or paste an expression

into the Expression column.

Your expression can use either SAS DS2 functions (with the MapReduce runtime target,) or DataFlux EEL functions (with the Spark runtime target.) Click Settings to display the selected runtime target. To learn more about runtime targets, see Enable Support for Impala and Spark.To learn the requirements for expressions, see Develop Expressions for Directives.

Add a new column and enter or paste an expression

into the Expression column.

Your expression can use either SAS DS2 functions (with the MapReduce runtime target,) or DataFlux EEL functions (with the Spark runtime target.) Click Settings to display the selected runtime target. To learn more about runtime targets, see Enable Support for Impala and Spark.To learn the requirements for expressions, see Develop Expressions for Directives. -

Add a new column and specify an expression using

the Advanced Editor window. To learn how

to use the Advanced Editor, see About Expressions and the Advanced Editor.

Add a new column and specify an expression using

the Advanced Editor window. To learn how

to use the Advanced Editor, see About Expressions and the Advanced Editor. -

Develop an expression using the Advanced Editor.

Develop an expression using the Advanced Editor.

-

Remove the selected column from the target table.

Removed columns appear in Available columns.

Remove the selected column from the target table.

Removed columns appear in Available columns.

-

Move the selected column to the first column position

in the target.

Move the selected column to the first column position

in the target.

-

Move the selected column one position to the left

in the target.

Move the selected column one position to the left

in the target.

-

Move the selected column one position to the right.

Move the selected column one position to the right.

-

Move the selected column to the last column position

in the target (rightmost.)

Move the selected column to the last column position

in the target (rightmost.)

-

Change the name of the selected target column.

Change the name of the selected target column.

-

-

Click Next to select a target table and run your job.To add another data cleansing transformation, click Add Another Transformation and see Select a Data Cleansing Transformation.

Summarize Rows Transformation

Use the Summarize Rows

transformation to add summarized numeric values to your target table.

To generate summaries, you first group rows by one or more columns.

Then you select the columns that you want to summarize for each group

and subgroup. The method of summarization is known as an aggregation.

The number of aggregations depends on the column data type. Numeric

columns have 13 available aggregations.

Follow these steps to

learn how to use the Summarize Rows transformation:

-

If this is the first transformation in your job, select a source table.

-

In the Transformation task, click Summarize Rows.

-

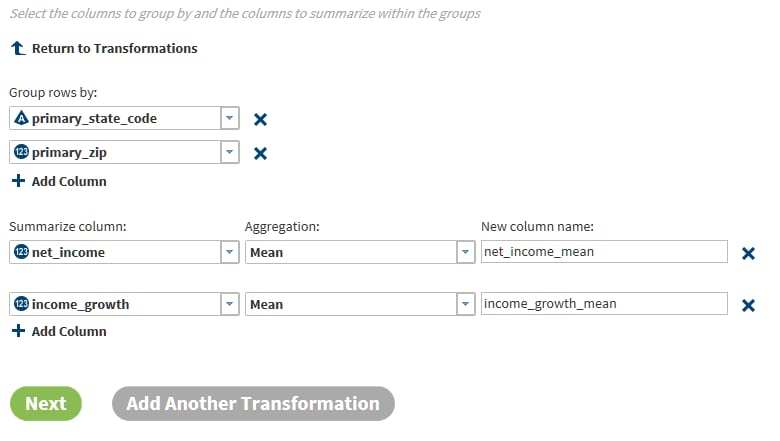

In the Summarize Rows transformation, click the Group rows by field and choose the first column that you want to use to group rows for summarization. In the target table, rows with the same values in the selected column appear together, along with their summary values in new columns.

-

To further subset the initial set of groups, and to generate a second set of summary values, click Add Column. Select a second column. Add additional groups as needed.

-

Click Summarize column and select the first numeric column that you want to summarize.

-

Click Aggregation and select the aggregation that you would like to provide for the selected column.

-

To change the suggested name for the new column that will contain the aggregation values for each group, click New Column Name.

-

To add a second aggregation, click Add Column.

-

Click Next to select a target table and run your job.To add another data cleansing transformation, click Add Another Transformation and see Select a Data Cleansing Transformation.

Select a Target Table and Run Your Job

After you click Next,

follow these steps to select a target table and complete your data

cleansing job:

-

In the Target Table task:To select an existing target table (and completely overwrite any existing content), click the data source, click an existing target table, and then click Next. Or, you can click

and choose from a list of your recent targets.

To create a new target table, click a data source, click New Table

and choose from a list of your recent targets.

To create a new target table, click a data source, click New Table , and specify the table name in the New

Table window. A new table of that name appears in the

grid or list.

To explore the contents of target tables, click a table and click Data Sample

, and specify the table name in the New

Table window. A new table of that name appears in the

grid or list.

To explore the contents of target tables, click a table and click Data Sample , Table Viewer

, Table Viewer  , or (if available) View Profile

, or (if available) View Profile  .

To view or change the target table format or the Hive storage location, click

.

To view or change the target table format or the Hive storage location, click .

.

-

With a target table highlighted in the list or grid, click Next.

-

In the Result task, click Save

or Save As

or Save As  to save your job, and then click Start

Transforming Data.

to save your job, and then click Start

Transforming Data.

-

When the job is complete, you can view the results, the log file, and the code that ran in Hadoop.

About Expressions and the Advanced Editor

The Advanced

Editor window enables you to develop expressions. The

editor is available in the Filter and Manage Columns transformations.

In those transformations, user-written expressions can filter source

rows from the target, modify or replace existing column data, and

generate new data for new target columns.

Expressions are written

using SAS DS2 functions in the MapReduce runtime environment, or DataFlux

EEL functions in the Spark runtime environment. You can determine

or specify the runtime environment using the Settings menu

at the top of the directive. For more information about runtime environments, see Enable Support for Impala and Spark.

In the Advanced Editor,

you can click Save and New to save your expression

and apply its return value to a new target column.

In the Advanced

Editor, the Resources list box

displays the categories of the available DS2 or EEL functions. Within

the categories, each function displays a short description and syntax

help. To add a function to your expression, click the listing and

click  .

.

.

.

The Resources list

box also lists column names. To add a column name to your expression,

click the cursor in the expression text box, click the column name

in Resources, and then click  .

.

.

.

To learn the requirements

of expressions, see Develop Expressions for Directives .

Copyright © SAS Institute Inc. All Rights Reserved.