Introduction to Statistical Modeling with SAS/STAT Software

Sweep Operator

The sweep operator (Goodnight 1979) is closely related to Gauss-Jordan elimination and the Forward Doolittle procedure. The fact that a sweep operation can produce a generalized inverse by in-place mapping with minimal storage and that its application invariably leads to some form of matrix inversion is important, but this observation does not do justice to the pervasive relevance of sweeping to statistical computing. In this section the sweep operator is discussed as a conceptual tool for further insight into linear model operations. Consider the nonnegative definite, symmetric, partitioned matrix

![\[ \bA = \left[ \begin{array}{cc} \bA _{11} & \bA _{12} \cr \bA ^\prime _{12} & \bA _{22} \end{array}\right] \]](images/statug_intromod0491.png)

Sweeping a matrix consists of performing a series of row operations

akin to Gauss-Jordan elimination. Basic row operations are the multiplication of a row by a constant and the addition of a

multiple of one row to another. The sweep operator restricts row operations to

pivots on the diagonal elements of a matrix; further details about the

elementary operations can be found in Goodnight (1979). The process of sweeping the matrix  on its leading

partition is denoted as

on its leading

partition is denoted as  and leads to

and leads to

![\[ \mr{Sweep}(\bA ,\bA _{11}) = \left[\begin{array}{cc} \bA _{11}^{-} & \bA _{11}^{-}\bA _{12} \cr -\bA _{12}’\bA _{11}^{-} & \bA _{22}-\bA _{12}^\prime \bA _{11}^{-}\bA _{12} \end{array}\right] \]](images/statug_intromod0493.png)

If the kth row and column are set to zero when the pivot is zero (or in practice, less than some singularity tolerance), the generalized

inverse in the leading position of the swept matrix is a

reflexive,  -inverse. Suppose that the crossproduct matrix of the linear model is augmented with a "Y-border" as follows:

-inverse. Suppose that the crossproduct matrix of the linear model is augmented with a "Y-border" as follows:

![\[ \bC = \left[\begin{array}{cc} \bX ’\bX & \bX ’\bY \cr \bY ’\bX & \bY ’\bY \end{array}\right] \]](images/statug_intromod0494.png)

Then the result of sweeping on the rows of  is

is

![\begin{align*} \mr{Sweep}(\bC ,\bX ) =& \left[\begin{array}{cc} \left(\bX ’\bX \right)^{-} & \left(\bX ’\bX \right)^{-}\bX ’\bY \cr -\bY ’\bX \left(\bX ’\bX \right)^{-} & \bY ’\bY - \bY ’\bX \left(\bX ’\bX \right)^{-}\bX ’\bY \end{array}\right] \\[0.1in] =& \left[\begin{array}{cc} \left(\bX ’\bX \right)^{-} & \widehat{\bbeta } \cr -\widehat{\bbeta } & \bY ’\bM \bY \end{array}\right] = \left[\begin{array}{cc} \left(\bX ’\bX \right)^{-} & \widehat{\bbeta } \cr -\widehat{\bbeta } & \mr{SSR} \end{array}\right] \end{align*}](images/statug_intromod0495.png)

The "Y-border" has been transformed into the least squares solution and the residual sum of squares.

Partial sweeps are common in model selection. Suppose that the  matrix is partitioned as

matrix is partitioned as ![$[\bX _1 \, \, \bX _2]$](images/statug_intromod0496.png) , and consider the

augmented crossproduct matrix

, and consider the

augmented crossproduct matrix

![\[ \bC = \left[ \begin{array}{ccc} \bX _1’\bX _1 & \bX _1’\bX _2 & \bX _1’\bY \cr \bX _2’\bX _1 & \bX _2’\bX _2 & \bX _2’\bY \cr \bY ’ \bX _1 & \bY ’ \bX _2 & \bY ’\bY \end{array}\right] \]](images/statug_intromod0497.png)

Sweeping on the  partition yields

partition yields

![\[ \mr{Sweep}(\bC ,\bX _1) = \left[ \begin{array}{ccc} \left(\bX _1’\bX _1\right)^{-} & \left(\bX _1’\bX _1\right)^{-}\bX _1’\bX _2 & \left(\bX _1’\bX _1\right)^{-}\bX _1’\bY \cr -\bX _2’\bX _1\left(\bX _1’\bX _1\right)^{-} & \bX _2’\bM _1\bX _2 & \bX _2’\bM _1\bY \cr -\bY ’\bX _1 \left(\bX _1’\bX _1\right)^{-} & -\bY ’\bM _1\bX _2 & \bY ’\bM _1\bY \end{array}\right] \]](images/statug_intromod0498.png)

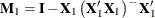

where  . The entries in the first row of this partition are the

generalized inverse of

. The entries in the first row of this partition are the

generalized inverse of  , the coefficients for regressing

, the coefficients for regressing  on

on  , and the coefficients for regressing

, and the coefficients for regressing  on

on  . The diagonal entries

. The diagonal entries  and

and  are the sum of squares and crossproduct matrices for regressing

are the sum of squares and crossproduct matrices for regressing  on

on  and for regressing

and for regressing  on

on  , respectively. As you continue to sweep the matrix, the last cell in the partition contains the residual sum of square of

a model in which

, respectively. As you continue to sweep the matrix, the last cell in the partition contains the residual sum of square of

a model in which  is regressed on all columns swept up to that point.

is regressed on all columns swept up to that point.

The sweep operator is not only useful to conceptualize the computation of least squares solutions, Type I and Type II sums of squares, and generalized inverses. It can also be used to obtain other statistical information. For example, adding the logarithms of the pivots of the rows that are swept yields the log determinant of the matrix.