Introduction to Regression Procedures

Multivariate Tests

Multivariate hypotheses involve several dependent variables in the form

![\[ H \colon \bL \bbeta \bM = \mb{d} \]](images/statug_introreg0111.png)

where  is a linear function on the regressor side,

is a linear function on the regressor side,  is a matrix of parameters,

is a matrix of parameters,  is a linear function on the dependent side, and

is a linear function on the dependent side, and  is a matrix of constants. The special case (handled by PROC REG) in which the constants are the same for each dependent variable

is expressed as

is a matrix of constants. The special case (handled by PROC REG) in which the constants are the same for each dependent variable

is expressed as

![\[ (\bL \bbeta - \mb{cj})\bM = \mb{0} \]](images/statug_introreg0113.png)

where  is a column vector of constants and

is a column vector of constants and  is a row vector of 1s. The special case in which the constants are 0 is then

is a row vector of 1s. The special case in which the constants are 0 is then

![\[ \bL \bbeta \bM = \mb{0} \]](images/statug_introreg0116.png)

These multivariate tests are covered in detail in Morrison (2004); Timm (2002); Mardia, Kent, and Bibby (1979); Bock (1975); and other works cited in Chapter 9: Introduction to Multivariate Procedures.

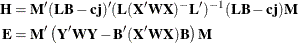

Notice that in contrast to the tests discussed in the preceding section,  here is a matrix of parameter estimates. Suppose that the matrix of estimates is denoted as

here is a matrix of parameter estimates. Suppose that the matrix of estimates is denoted as  . To test the multivariate hypothesis, construct two matrices,

. To test the multivariate hypothesis, construct two matrices,  and

and  , that correspond to the numerator and denominator of a univariate F test:

, that correspond to the numerator and denominator of a univariate F test:

Four test statistics, based on the eigenvalues of  or

or  , are formed. Let

, are formed. Let  be the ordered eigenvalues of

be the ordered eigenvalues of  (if the inverse exists), and let

(if the inverse exists), and let  be the ordered eigenvalues of

be the ordered eigenvalues of  . It happens that

. It happens that  and

and  , and it turns out that

, and it turns out that  is the ith canonical correlation.

is the ith canonical correlation.

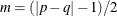

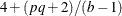

Let p be the rank of  , which is less than or equal to the number of columns of

, which is less than or equal to the number of columns of  . Let q be the rank of

. Let q be the rank of  . Let v be the error degrees of freedom, and let

. Let v be the error degrees of freedom, and let  . Let

. Let  , and let

, and let  . Then the following statistics test the multivariate hypothesis in various ways, and their p-values can be approximated by F distributions. Note that in the special case that the rank of

. Then the following statistics test the multivariate hypothesis in various ways, and their p-values can be approximated by F distributions. Note that in the special case that the rank of  is 1, all these F statistics are the same and the corresponding p-values are exact, because in this case the hypothesis is really univariate.

is 1, all these F statistics are the same and the corresponding p-values are exact, because in this case the hypothesis is really univariate.

Wilks’ Lambda

![\[ \Lambda = \frac{\mr{det}(\bE )}{\mr{det}(\bH +\bE )} = \prod _{i=1}^ n \frac{1}{1+ \lambda _ i} = \prod _{i=1}^ n (1 - \xi _ i) \]](images/statug_introreg0135.png)

then

![\[ F = \frac{1- \Lambda ^{1/t}}{\Lambda ^{1/t}} \cdot \frac{rt-2u}{pq} \]](images/statug_introreg0136.png)

is approximately F distributed, where

![\begin{align*} r =& v - \frac{p -q+1}{2} \\ u =& \frac{pq - 2}{4} \\ t =& \left\{ \begin{array}{ccl} \sqrt {\frac{p^2 q^2 - 4}{p^2 + q^2 - 5}} & & \mr{if } p^2 + q^2 - 5 > 0 \\[0.05in] 1 & & \mr{otherwise} \end{array} \right. \end{align*}](images/statug_introreg0137.png)

The degrees of freedom are  and

and  . The distribution is exact if

. The distribution is exact if  . (See Rao 1973, p. 556.)

. (See Rao 1973, p. 556.)

Hotelling-Lawley Trace

![\[ U = \mr{trace} \left( \bE ^{-1} \bH \right) = \sum _{i=1}^ n \lambda _ i = \sum _{i=1}^ n \frac{\xi _ i}{1 - \xi _ i} \]](images/statug_introreg0145.png)

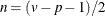

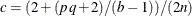

then for

![\[ F = (U/c)((4 + (pq+2)/(b-1))/(pq)) \]](images/statug_introreg0147.png)

is approximately F distributed with  and

and  degrees of freedom, where

degrees of freedom, where  and

and  ; while for

; while for

![\[ F = \frac{2(sn+1)U}{s^2 (2m+s+1)} \]](images/statug_introreg0152.png)

is approximately F with  and

and  degrees of freedom.

degrees of freedom.

Roy’s Maximum Root

![\[ F = \Theta \frac{v-r+q}{r} \]](images/statug_introreg0155.png)

where  is an upper bound on F that yields a lower bound on the significance level. Degrees of freedom are r for the numerator and

is an upper bound on F that yields a lower bound on the significance level. Degrees of freedom are r for the numerator and  for the denominator.

for the denominator.

Tables of critical values for these statistics are found in Pillai (1960).

Exact Multivariate Tests

If you specify the MSTAT=EXACT option in the appropriate statement, p-values for three of the four tests (Wilks’ lambda, the Hotelling-Lawley trace, and Roy’s greatest root) are computed exactly, and the p-values for the fourth test (Pillai’s trace) are based on an F approximation that is more accurate (but occasionally slightly more liberal) than the default. The exact p-values for Roy’s greatest root benefit the most, because in this case the F approximation provides only a lower bound for the p-value. If you use the F-based p-value for this test in the usual way, declaring a test significant if p < 0.05, then your decisions might be very liberal. For example, instead of the nominal 5% Type I error rate, such a procedure can easily have an actual Type I error rate in excess of 30%. By contrast, basing such a procedure on the exact p-values results in the appropriate 5% Type I error rate, under the usual regression assumptions.

The MSTAT=EXACT option is supported in the ANOVA, CANCORR, CANDISC, GLM, and REG procedures.

The exact p-values are based on the following sources:

Note that, although the MSTAT=EXACT p-value for Pillai’s trace is still approximate, it has "substantially greater accuracy" than the default approximation (Muller 1998).

Because most of the MSTAT=EXACT p-values are not based on the F distribution, the columns in the multivariate tests table that correspond to this approximation—in particular, the F value and the numerator and denominator degrees of freedom—are no longer displayed, and the column that contains the p-values is labeled "P Value" instead of "Pr > F." Suppose, for example, that you use the following PROC ANOVA statements to perform a multivariate analysis of an archaeological data set:

data Skulls; input Loc $20. Basal Occ Max; datalines; Minas Graes, Brazil 2.068 2.070 1.580 Minas Graes, Brazil 2.068 2.074 1.602 Minas Graes, Brazil 2.090 2.090 1.613 Minas Graes, Brazil 2.097 2.093 1.613 Minas Graes, Brazil 2.117 2.125 1.663 Minas Graes, Brazil 2.140 2.146 1.681 Matto Grosso, Brazil 2.045 2.054 1.580 Matto Grosso, Brazil 2.076 2.088 1.602 Matto Grosso, Brazil 2.090 2.093 1.643 Matto Grosso, Brazil 2.111 2.114 1.643 Santa Cruz, Bolivia 2.093 2.098 1.653 Santa Cruz, Bolivia 2.100 2.106 1.623 Santa Cruz, Bolivia 2.104 2.101 1.653 ;

proc anova data=Skulls; class Loc; model Basal Occ Max = Loc / nouni; manova h=Loc; ods select MultStat; run;

The default multivariate tests, based on the F approximations, are shown in Figure 4.5

Figure 4.5: Default Multivariate Tests

| MANOVA Test Criteria and F Approximations for the Hypothesis of No Overall Loc Effect H = Anova SSCP Matrix for Loc E = Error SSCP Matrix S=2 M=0 N=3 |

|||||

|---|---|---|---|---|---|

| Statistic | Value | F Value | Num DF | Den DF | Pr > F |

| Wilks' Lambda | 0.60143661 | 0.77 | 6 | 16 | 0.6032 |

| Pillai's Trace | 0.44702843 | 0.86 | 6 | 18 | 0.5397 |

| Hotelling-Lawley Trace | 0.58210348 | 0.75 | 6 | 9.0909 | 0.6272 |

| Roy's Greatest Root | 0.35530890 | 1.07 | 3 | 9 | 0.4109 |

| NOTE: F Statistic for Roy's Greatest Root is an upper bound. | |||||

| NOTE: F Statistic for Wilks' Lambda is exact. | |||||

If you specify MSTAT=EXACT in the MANOVA statement, as in the following statements, then the displayed output is the much simpler table shown in Figure 4.6:

proc anova data=Skulls; class Loc; model Basal Occ Max = Loc / nouni; manova h=Loc / mstat=exact; ods select MultStat; run;

Figure 4.6: Multivariate Tests with MSTAT=EXACT

| MANOVA Tests for the Hypothesis of No Overall Loc Effect H = Anova SSCP Matrix for Loc E = Error SSCP Matrix S=2 M=0 N=3 |

||

|---|---|---|

| Statistic | Value | P-Value |

| Wilks' Lambda | 0.60143661 | 0.6032 |

| Pillai's Trace | 0.44702843 | 0.5521 |

| Hotelling-Lawley Trace | 0.58210348 | 0.6337 |

| Roy's Greatest Root | 0.35530890 | 0.7641 |

Notice that the p-value for Roy’s greatest root is substantially larger in the new table and correspondingly more in line with the p-values for the other tests.

If you reference the underlying ODS output object for the table of multivariate statistics, it is important to note that its structure does not depend on the value of the MSTAT= option. In particular, it always contains columns that correspond to both the default MSTAT=FAPPROX and the MSTAT=EXACT tests. Moreover, because the MSTAT=FAPPROX tests are relatively cheap to compute, the columns that correspond to them are always filled in, even though they are not displayed when you specify MSTAT=EXACT. On the other hand, for MSTAT=FAPPROX (which is the default), the column of exact p-values contains missing values and is not displayed.

![\[ V = \mr{trace} \left( \bH (\bH +\bE )^{-1} \right) = \sum _{i=1}^ n \frac{\lambda _ i}{1+ \lambda _ i} = \sum _{i=1}^ n \xi _ i \]](images/statug_introreg0141.png)

![\[ F = \frac{2n+s+1}{2m+s+1} \cdot \frac{V}{s-V} \]](images/statug_introreg0142.png)