The CANCORR Procedure

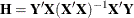

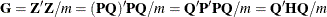

Assume without loss of generality that the two sets of variables, $\mathbf{X}$ with $p$ variables and $\mathbf{Y}$ with $q$ variables, have means of zero. Let $n$ be the number of observations, and let $m$ be $n - 1$.

Note that the scales of eigenvectors and canonical coefficients are arbitrary. PROC CANCORR follows the usual procedure of rescaling the canonical coefficients so that each canonical variable has a variance of one.
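The rescaling step can be illustrated with a short NumPy sketch. The helper name `rescale_to_unit_variance` is hypothetical and not part of SAS. It assumes centered data and divides each unscaled coefficient vector $\mathbf{a}_j$ by $\sqrt{\mathbf{a}_j'\mathbf{S}\mathbf{a}_j}$, where $\mathbf{S}$ is the covariance matrix of the corresponding variables, because that quadratic form is the variance of the canonical variable.

```python
import numpy as np

def rescale_to_unit_variance(coef, cov):
    """Hypothetical helper: scale each column a_j of `coef` so that the
    canonical variable X @ a_j has variance a_j' S a_j = 1, where S = `cov`
    is the covariance matrix of the (centered) X variables."""
    coef = np.asarray(coef, dtype=float)
    # Variance of each canonical variable: the quadratic form a_j' S a_j
    variances = np.einsum("ij,ik,kj->j", coef, cov, coef)
    return coef / np.sqrt(variances)

# Example with simulated, centered data (an assumption for illustration)
rng = np.random.default_rng(0)
X = rng.standard_normal((50, 3))
X -= X.mean(axis=0)
m = X.shape[0] - 1
S_xx = X.T @ X / m
raw = rng.standard_normal((3, 2))          # arbitrary unscaled coefficients
scaled = rescale_to_unit_variance(raw, S_xx)
print((X @ scaled).var(axis=0, ddof=1))    # each entry is 1 up to rounding
```

Rescaling fixes only the length of each coefficient vector; its sign remains arbitrary.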

There are several different sets of formulas that can be used to compute the canonical correlations, $\rho_i$, $i = 1, \ldots, \min(p, q)$, and unscaled canonical coefficients:

- Let $\mathbf{S}_{XX} = \mathbf{X}'\mathbf{X}/m$ be the covariance matrix of $\mathbf{X}$, $\mathbf{S}_{YY} = \mathbf{Y}'\mathbf{Y}/m$ be the covariance matrix of $\mathbf{Y}$, and $\mathbf{S}_{XY} = \mathbf{X}'\mathbf{Y}/m$ be the covariance matrix between $\mathbf{X}$ and $\mathbf{Y}$. Then the eigenvalues of $\mathbf{S}_{YY}^{-1}\mathbf{S}_{XY}'\mathbf{S}_{XX}^{-1}\mathbf{S}_{XY}$ are the squared canonical correlations, and the right eigenvectors are raw canonical coefficients for the $\mathbf{Y}$ variables. The eigenvalues of $\mathbf{S}_{XX}^{-1}\mathbf{S}_{XY}\mathbf{S}_{YY}^{-1}\mathbf{S}_{XY}'$ are the squared canonical correlations, and the right eigenvectors are raw canonical coefficients for the $\mathbf{X}$ variables.

-

Let

and

and  . The eigenvalues

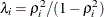

. The eigenvalues  of

of  are the squared canonical correlations,

are the squared canonical correlations,  , and the right eigenvectors are raw canonical coefficients for the

, and the right eigenvectors are raw canonical coefficients for the  variables. Interchange

variables. Interchange  and

and  in the preceding formulas, and the eigenvalues remain the same, but the right eigenvectors are raw canonical coefficients

for the

in the preceding formulas, and the eigenvalues remain the same, but the right eigenvectors are raw canonical coefficients

for the  variables.

variables.

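- Let $\mathbf{E} = \mathbf{T} - \mathbf{H}$. The eigenvalues of $\mathbf{E}^{-1}\mathbf{H}$ are $\lambda_i = \rho_i^2 / (1 - \rho_i^2)$. The right eigenvectors of $\mathbf{E}^{-1}\mathbf{H}$ are the same as the right eigenvectors of $\mathbf{T}^{-1}\mathbf{H}$: if $\mathbf{E}^{-1}\mathbf{H}\mathbf{v} = \lambda\mathbf{v}$, then $\mathbf{H}\mathbf{v} = \lambda(\mathbf{T} - \mathbf{H})\mathbf{v}$, so $\mathbf{T}^{-1}\mathbf{H}\mathbf{v} = \frac{\lambda}{1 + \lambda}\mathbf{v} = \rho^2\mathbf{v}$.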

-

Canonical correlation can be viewed as a principal component analysis of the predicted values of one set of variables from a regression on the other set of variables, in the metric of the error covariance matrix. For example, regress the

variables on the

variables on the  variables. Call the predicted values

variables. Call the predicted values  and the residuals

and the residuals  . The error covariance matrix is

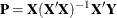

. The error covariance matrix is  . Choose a transformation

. Choose a transformation  that converts the error covariance matrix to an identity—that is,

that converts the error covariance matrix to an identity—that is,  . Apply the same transformation to the predicted values to yield, say,

. Apply the same transformation to the predicted values to yield, say,  . Now do a principal component analysis on the covariance matrix of

. Now do a principal component analysis on the covariance matrix of  , and you get the eigenvalues of

, and you get the eigenvalues of  . Repeat with

. Repeat with  and

and  variables interchanged, and you get the same eigenvalues.

variables interchanged, and you get the same eigenvalues.

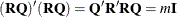

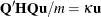

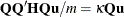

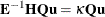

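  To show this relationship between canonical correlation and principal components, note that $\mathbf{P}'\mathbf{P} = \mathbf{H}$, $\mathbf{R}'\mathbf{R} = \mathbf{E}$, and $\mathbf{Q}\mathbf{Q}' = m\mathbf{E}^{-1}$ (the last identity follows from $\mathbf{Q}'\mathbf{E}\mathbf{Q} = m\mathbf{I}$ with $\mathbf{Q}$ square and nonsingular). Let the covariance matrix of $\mathbf{Z}$ be $\mathbf{G}$. Then $\mathbf{G} = \mathbf{Z}'\mathbf{Z}/m = (\mathbf{P}\mathbf{Q})'\mathbf{P}\mathbf{Q}/m = \mathbf{Q}'\mathbf{P}'\mathbf{P}\mathbf{Q}/m = \mathbf{Q}'\mathbf{H}\mathbf{Q}/m$. Let $\mathbf{u}$ be an eigenvector of $\mathbf{G}$ and $\kappa$ be the corresponding eigenvalue. Then by definition, $\mathbf{G}\mathbf{u} = \kappa\mathbf{u}$; hence $\mathbf{Q}'\mathbf{H}\mathbf{Q}\mathbf{u}/m = \kappa\mathbf{u}$. Premultiplying both sides by $\mathbf{Q}$ yields $\mathbf{Q}\mathbf{Q}'\mathbf{H}\mathbf{Q}\mathbf{u}/m = \kappa\mathbf{Q}\mathbf{u}$ and thus $\mathbf{E}^{-1}\mathbf{H}\mathbf{Q}\mathbf{u} = \kappa\mathbf{Q}\mathbf{u}$. Hence $\mathbf{Q}\mathbf{u}$ is an eigenvector of $\mathbf{E}^{-1}\mathbf{H}$ and $\kappa$ is also an eigenvalue of $\mathbf{E}^{-1}\mathbf{H}$.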

- If the covariance matrices are replaced by correlation matrices, the preceding formulas yield standardized canonical coefficients instead of raw canonical coefficients.
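The formula sets listed above can be checked numerically. The following is a minimal NumPy sketch, not PROC CANCORR's actual implementation: it simulates centered data (the sample sizes, the coefficient matrix, and the helper `sorted_eigvals` are arbitrary assumptions for illustration), computes the squared canonical correlations along each route, and keeps the intermediate results so that they can be compared. The Cholesky-based choice of $\mathbf{Q}$ is only one convenient transformation; any nonsingular $\mathbf{Q}$ with $\mathbf{Q}'\mathbf{R}'\mathbf{R}\mathbf{Q} = m\mathbf{I}$ would do.

```python
import numpy as np

def sorted_eigvals(M, k):
    """Return the k largest eigenvalues of M (real parts) in descending order."""
    return np.sort(np.linalg.eigvals(M).real)[::-1][:k]

# Simulated data: the sizes and coefficients are arbitrary assumptions
rng = np.random.default_rng(1)
n, p, q = 200, 3, 2
X = rng.standard_normal((n, p))
Y = X[:, :2] @ np.array([[1.0, 0.5], [0.2, -0.7]]) + rng.standard_normal((n, q))
X -= X.mean(axis=0)                      # enforce the zero-mean assumption
Y -= Y.mean(axis=0)
m = n - 1
k = min(p, q)

# Set 1: eigenvalues from the covariance matrices, Y side and X side
Sxx, Syy, Sxy = X.T @ X / m, Y.T @ Y / m, X.T @ Y / m
rho2 = sorted_eigvals(np.linalg.solve(Syy, Sxy.T @ np.linalg.solve(Sxx, Sxy)), k)
rho2_x = sorted_eigvals(np.linalg.solve(Sxx, Sxy @ np.linalg.solve(Syy, Sxy.T)), k)

# Set 2: T^{-1} H
T = Y.T @ Y
H = Y.T @ X @ np.linalg.solve(X.T @ X, X.T @ Y)
xi = sorted_eigvals(np.linalg.solve(T, H), k)

# Set 3: E^{-1} H, with eigenvectors sorted by eigenvalue
E = T - H
lam, V = np.linalg.eig(np.linalg.solve(E, H))
order = np.argsort(lam.real)[::-1]
lam, V = lam.real[order], V.real[:, order]
rho2_from_lam = lam / (1 + lam)          # inverts lambda = rho^2 / (1 - rho^2)
TinvH_V = np.linalg.solve(T, H) @ V      # should equal V with columns scaled by rho^2

# Set 4: principal components of transformed predicted values
P = X @ np.linalg.solve(X.T @ X, X.T @ Y)  # predicted values of Y from X
R = Y - P                                  # residuals
C = np.linalg.cholesky(R.T @ R)            # R'R = C C' (C lower triangular)
Q = np.sqrt(m) * np.linalg.inv(C.T)        # then Q' R'R Q = m I
Z = P @ Q
G = Z.T @ Z / m
kappa = np.sort(np.linalg.eigvalsh(G))[::-1]

# Correlation matrices in place of covariance matrices: same eigenvalues
sx, sy = np.sqrt(np.diag(Sxx)), np.sqrt(np.diag(Syy))
Rxx, Ryy = Sxx / np.outer(sx, sx), Syy / np.outer(sy, sy)
Rxy = Sxy / np.outer(sx, sy)
rho2_corr = sorted_eigvals(np.linalg.solve(Ryy, Rxy.T @ np.linalg.solve(Rxx, Rxy)), k)

print("canonical correlations:", np.sqrt(rho2))
```

The eigenvectors returned by `np.linalg.eig` are normalized to unit length rather than to unit canonical-variable variance, so they differ from rescaled raw coefficients by a per-column factor (and possibly a sign).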

The formulas for multivariate test statistics are shown in the section Multivariate Tests in Chapter 4: Introduction to Regression Procedures. Formulas for linear regression are provided in other sections of that chapter.