
Parameter Estimates for Linear Models

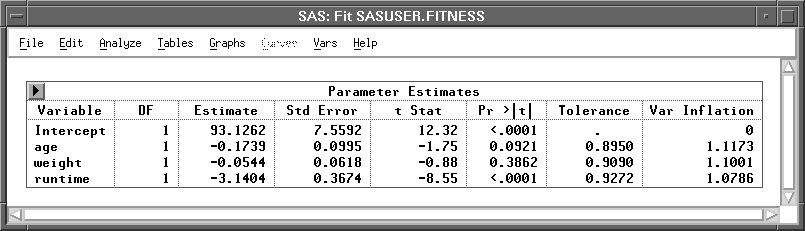

The Parameter Estimates table for linear models, as illustrated by Figure 39.17, includes the following:

- Variable: the name of the variable associated with the estimated parameter. The name INTERCEPT denotes the estimate of the intercept parameter.

- DF: the degrees of freedom associated with each parameter estimate. Each estimate has one degree of freedom unless the model is not of full rank. In that case, any parameter whose definition is confounded with previous parameters in the model has its degrees of freedom set to 0.

- Estimate: the parameter estimate.

- Std Error: the standard error, that is, the estimate of the standard deviation of the parameter estimate.

- t Stat: the t statistic for testing the hypothesis that the parameter is 0, computed as the parameter estimate divided by its standard error.

- Pr > |t|: the probability of obtaining, by chance alone, a t statistic greater in absolute value than the one observed, given that the true parameter is 0. This is a two-sided p-value. A small p-value is evidence that the parameter is not 0.

- Tolerance: the tolerance of the explanatory variable with respect to the other explanatory variables in the model.

- Var Inflation: the variance inflation factor of the explanatory variable.
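The t Stat and Pr > |t| columns can be reproduced from the Estimate and Std Error columns. The following Python sketch is illustrative only and is not SAS code; the function names `t_stat`, `t_pdf`, and `two_sided_p` are hypothetical. It assumes the error degrees of freedom `df` are known and approximates the t-distribution tail area with Simpson's rule instead of a library routine.

```python
import math


def t_stat(estimate: float, std_error: float) -> float:
    """t statistic for H0: parameter = 0 (estimate divided by its standard error)."""
    return estimate / std_error


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    # lgamma avoids overflow of math.gamma for large df.
    coef = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t: float, df: int, steps: int = 2000) -> float:
    """Pr > |t|: chance that |T| exceeds |t| when the true parameter is 0.

    Integrates the density from 0 to |t| with Simpson's rule (steps must be
    even) and uses symmetry: p = 1 - 2 * integral_0^|t| f(x) dx.
    """
    b = abs(t)
    if b == 0.0:
        return 1.0
    h = b / steps
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2  # Simpson weights 4, 2, 4, ..., 4
        total += weight * t_pdf(i * h, df)
    central_area = total * h / 3
    return max(0.0, 1.0 - 2.0 * central_area)


print(round(two_sided_p(1.0, 1), 4))  # 0.5
```

For Student's t with 1 degree of freedom (the Cauchy distribution), exactly half the probability lies beyond ±1, which is why the call above returns 0.5. Because the sketch subtracts the central area from 1, it loses relative precision for very small p-values; a production routine would evaluate the tail through the regularized incomplete beta function instead.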

Figure 39.17: Parameter Estimates Table for Linear Models

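The standard error of the jth parameter estimate $b_j$ is computed using the equation

$$\mathrm{STDERR}(b_j) = \sqrt{(X'X)^{-1}_{jj}\, s^2}$$

where $(X'X)^{-1}_{jj}$ is the jth diagonal element of $(X'X)^{-1}$ and $s^2$ is the mean square error. Under the hypothesis that $\beta_j$ is 0, the ratio

$$t = \frac{b_j}{\mathrm{STDERR}(b_j)}$$

is distributed as Student's t with degrees of freedom equal to the degrees of freedom for the mean square error.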
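As an illustration of these formulas, the following Python sketch fits a small least-squares model using plain lists, computes $s^2$ as the error sum of squares divided by $n - p$, and derives each standard error and t statistic. The helper names (`fit_ols`, `invert`, `matmul`, `transpose`) are hypothetical, and the Gauss-Jordan inversion assumes $X'X$ is nonsingular; this is a sketch of the arithmetic, not how SAS computes the table.

```python
import math


def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def invert(m):
    """Gauss-Jordan inversion with partial pivoting (assumes m is nonsingular)."""
    n = len(m)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def fit_ols(X, y):
    """Return estimates b, standard errors, and t statistics for y = X b + e.

    X is a list of rows; include a leading column of 1s for INTERCEPT.
    """
    n, p = len(X), len(X[0])
    Xt = transpose(X)
    xtx_inv = invert(matmul(Xt, X))
    xty = matmul(Xt, [[v] for v in y])
    b = [row[0] for row in matmul(xtx_inv, xty)]
    residuals = [yi - sum(bj * xij for bj, xij in zip(b, row)) for row, yi in zip(X, y)]
    s2 = sum(r * r for r in residuals) / (n - p)  # mean square error
    se = [math.sqrt(xtx_inv[j][j] * s2) for j in range(p)]  # STDERR(b_j)
    t = [bj / sj for bj, sj in zip(b, se)]  # b_j / STDERR(b_j)
    return b, se, t


X = [[1, 0], [1, 1], [1, 2], [1, 3]]
y = [1, 3, 2, 4]
b, se, t = fit_ols(X, y)
```

For this data, $(X'X)^{-1}$ has diagonal elements 0.7 and 0.2, the estimates are 1.3 (intercept) and 0.8 (slope), and $s^2 = 1.8/2 = 0.9$, so the slope's standard error is $\sqrt{0.2 \times 0.9} = \sqrt{0.18}$.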

When an explanatory variable is nearly a linear combination of other explanatory variables in the model, the affected estimates are unstable and have high standard errors. This problem is called collinearity or multicollinearity. A fit analysis provides several methods for detecting collinearity.

Tolerances (TOL) and variance inflation factors (VIF) measure the strength of interrelationships among the explanatory variables in the model. Tolerance is $1 - R^2$, where $R^2$ is obtained from the regression of the explanatory variable on the other explanatory variables in the model. Variance inflation factors are the diagonal elements of $(X'X)^{-1}$ after $X'X$ is scaled to correlation form. The variance inflation factor measures the inflation in the variance of the parameter estimate due to collinearity between the explanatory variable and the other variables. The two measures are related by $\mathrm{VIF} = 1/\mathrm{TOL}$.

If all variables are orthogonal to each other, both tolerance and variance inflation are 1. If a variable is closely related to other variables, the tolerance goes to 0 and the variance inflation becomes large.
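The two definitions of these collinearity diagnostics can be checked against each other numerically. This Python sketch uses hypothetical helper names and assumes at least two explanatory variables (the intercept is not counted as one). It computes VIF from the inverse of the correlation matrix and TOL from an auxiliary regression on centered data, which is equivalent to fitting that regression with an intercept.

```python
import math


def invert(m):
    """Gauss-Jordan inversion with partial pivoting (assumes m is nonsingular)."""
    n = len(m)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def centered(c):
    mean = sum(c) / len(c)
    return [v - mean for v in c]


def correlation_matrix(columns):
    """X'X scaled to correlation form (columns = list of explanatory variables)."""
    cs = [centered(c) for c in columns]
    norms = [math.sqrt(sum(v * v for v in c)) for c in cs]
    return [[sum(a * b for a, b in zip(ci, cj)) / (ni * nj) for cj, nj in zip(cs, norms)]
            for ci, ni in zip(cs, norms)]


def vif_from_correlation(columns):
    """VIF_j = jth diagonal element of the inverse correlation matrix."""
    inv = invert(correlation_matrix(columns))
    return [inv[j][j] for j in range(len(columns))]


def tolerance_by_regression(columns, j):
    """TOL_j = 1 - R^2 = SSE / SST from regressing variable j on the others."""
    cs = [centered(c) for c in columns]
    target = cs[j]
    others = [c for k, c in enumerate(cs) if k != j]
    # Normal equations on centered data (equivalent to including an intercept).
    ztz = [[sum(a * b for a, b in zip(ci, ck)) for ck in others] for ci in others]
    zty = [sum(a * b for a, b in zip(ci, target)) for ci in others]
    inv = invert(ztz)
    coef = [sum(inv[r][c] * zty[c] for c in range(len(zty))) for r in range(len(zty))]
    fitted = [sum(b * c[i] for b, c in zip(coef, others)) for i in range(len(target))]
    sse = sum((t - f) ** 2 for t, f in zip(target, fitted))
    sst = sum(t * t for t in target)
    return sse / sst


columns = [[1, 2, 3, 4, 5], [2, 1, 4, 3, 6], [0, 0, 1, 1, 1]]
vif = vif_from_correlation(columns)
tol = [tolerance_by_regression(columns, j) for j in range(len(columns))]
```

For linearly independent columns, each `vif[j] * tol[j]` equals 1 up to rounding. For mutually orthogonal centered columns such as `[1, -1, 1, -1]` and `[1, 1, -1, -1]`, both measures equal 1, matching the orthogonal case described above.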

When the $X'X$ matrix is singular, least-squares solutions for the parameters are not unique. An estimate is 0 if the variable is a linear combination of previous explanatory variables, and the degrees of freedom for such zeroed estimates are reported as 0. The t tests for hypotheses that are not testable are displayed as missing.
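The following Python sketch imitates this reporting convention under stated assumptions. It flags a column as aliased (DF 0) when modified Gram-Schmidt finds it to be a linear combination of earlier columns, sets its estimate to 0, and reports its t statistic as `None` (missing); the remaining columns are fitted by ordinary least squares. This is a stand-in technique, not the algorithm SAS uses, and the tolerance `tol` and all function names are hypothetical.

```python
import math


def invert(m):
    """Gauss-Jordan inversion with partial pivoting (assumes m is nonsingular)."""
    n = len(m)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def column_df(X, tol=1e-10):
    """DF per column: 1 if independent of earlier kept columns, else 0."""
    basis, df = [], []
    for c in zip(*X):
        r = list(c)
        for q in basis:  # remove the projection on each earlier orthonormal vector
            d = sum(a * b for a, b in zip(r, q))
            r = [a - d * b for a, b in zip(r, q)]
        norm = math.sqrt(sum(a * a for a in r))
        scale = math.sqrt(sum(a * a for a in c)) or 1.0
        if norm <= tol * scale:
            df.append(0)  # negligible residual: linear combination of earlier columns
        else:
            df.append(1)
            basis.append([a / norm for a in r])
    return df


def fit_with_aliasing(X, y):
    """Return (df, estimates, t statistics); aliased columns get 0.0 and None."""
    df = column_df(X)
    keep = [j for j, d in enumerate(df) if d == 1]
    Xk = [[row[j] for j in keep] for row in X]
    n, p = len(Xk), len(keep)
    xtx = [[sum(Xk[i][a] * Xk[i][b] for i in range(n)) for b in range(p)] for a in range(p)]
    xty = [sum(Xk[i][a] * y[i] for i in range(n)) for a in range(p)]
    inv = invert(xtx)
    bk = [sum(inv[a][b] * xty[b] for b in range(p)) for a in range(p)]
    resid = [y[i] - sum(bk[a] * Xk[i][a] for a in range(p)) for i in range(n)]
    s2 = sum(r * r for r in resid) / (n - p)
    est, tstat = [0.0] * len(df), [None] * len(df)
    for a, j in enumerate(keep):
        est[j] = bk[a]
        tstat[j] = bk[a] / math.sqrt(inv[a][a] * s2)
    return df, est, tstat


# Third column is exactly 2 * second column, so X'X is singular.
X = [[1, 0, 0], [1, 1, 2], [1, 2, 4], [1, 3, 6]]
y = [1, 3, 2, 4]
df, est, tstat = fit_with_aliasing(X, y)
```

Because the third column is twice the second, it receives DF 0, an estimate of 0, and a missing t statistic, while the intercept and slope match an ordinary fit on the first two columns. Which column is zeroed depends on column order, consistent with the rule that a variable is aliased when it is a combination of *previous* variables.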

Copyright © 2007 by SAS Institute Inc., Cary, NC, USA. All rights reserved.