Working with the Data Management Service Node

Overview of the Data Management Service Node

You can add a Data Management Service node to a Flow tab in an orchestration job to execute a real-time service. The job inputs can be passed in either one at a time as strings or as a table (DWR_INPUTS) of names and values. Discovery returns the job-specific inputs as well as the inputs listed in the table below.
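The two input styles carry the same information. The following Python sketch models them as plain data to show the correspondence: a mapping of individually named string inputs on one side and a list of (name, value) rows, like the DWR_INPUTS table, on the other. The helper names and the input names used with them are hypothetical illustrations, not part of any SAS API, and the rule for repeated names is an assumption of this sketch.

```python
from typing import Dict, List, Tuple

# Hypothetical helpers for illustration only: they model the two input
# styles as plain Python data and are not part of any SAS API.


def to_dwr_inputs(inputs: Dict[str, str]) -> List[Tuple[str, str]]:
    """Turn individually named string inputs into (name, value) rows,
    the shape of a DWR_INPUTS-style table. Row order follows the
    insertion order of the mapping."""
    return [(name, value) for name, value in inputs.items()]


def from_dwr_inputs(rows: List[Tuple[str, str]]) -> Dict[str, str]:
    """Turn (name, value) rows back into individually named inputs.
    Assumption of this sketch: if a name repeats, the last row wins."""
    return {name: value for name, value in rows}
```

For example, `to_dwr_inputs({"REGION": "EAST"})` returns `[("REGION", "EAST")]`, and `from_dwr_inputs` converts that list back into the original mapping.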

Real-time services are intended to run quickly. Currently, only real-time services that run out of process can be canceled. Canceling a service that runs out of process kills the process and allows the server call to end. The available batch jobs are automatically populated into the Data management service field on the Data Management Service tab of the node.
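The out-of-process cancellation described above can be illustrated with a generic Python sketch. This is not how the Data Management Server implements cancellation; it only shows the general idea. Work that runs in a separate process can be stopped by killing that process, after which the waiting caller is released. For simplicity, the sketch triggers the cancel with a time-out rather than a user action, and the function name `run_cancellable` is hypothetical.

```python
import subprocess
import sys
from typing import Tuple


def run_cancellable(code: str, timeout_s: float) -> Tuple[bool, str]:
    """Run Python `code` in a child process (out of process).

    Returns (True, stdout) if the child finishes within timeout_s.
    Otherwise the child is killed, which stops it regardless of what it
    is doing, and (False, "") is returned so the caller stops waiting.
    """
    proc = subprocess.Popen(
        [sys.executable, "-c", code],
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        out, _ = proc.communicate(timeout=timeout_s)
        return True, out
    except subprocess.TimeoutExpired:
        proc.kill()         # cancel: terminate the whole child process
        proc.communicate()  # reap the killed child and close its pipe
        return False, ""
```

Work that runs inside the caller's own process has no separate process that can be killed this way, which suggests why cancellation is limited to services that run out of process.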

Inputs and Outputs to the Data Management Service Node

The Data Management Service node uses the inputs and produces the outputs listed in the following table:

| Name | Description |
|---|---|
| Inputs: | |
| DWR_JOBNAME | The name of the real-time service to run on the server |
| DWR_PROXY_HOST | The proxy server host name |
| DWR_PROXY_PORT | The proxy server port |
| DWR_SERVER_NAME | The name of the DataFlux Data Management Server to connect to |
| DWR_TIMEOUT | The time-out length for each call to the server. This value applies to the time that it takes to actually start the process running. Values greater than 0 are in seconds, values less than 0 are in microseconds (10^-6 seconds), and 0 means no time-out. |
| Other inputs | Any service-specific inputs are also displayed on the node. Discovery displays a list of these inputs as well. |
| Outputs: | |
| DWR_AUTHTYPE | The resolved authentication type |
| DWR_RESOLVED_DOMAIN | The resolved authentication domain |
| DWR_RESOLVED_HOST | The resolved host name, including the URI scheme (http:// or https://) |
| DWR_RESOLVED_PORT | The resolved listen port |
| DWR_RESOLVED_PROXY_HOST | The resolved proxy host name |
| DWR_RESOLVED_PROXY_PORT | The resolved proxy port |
| Other outputs | One output for each real-time service output that can be retrieved through discovery |
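The sign convention for DWR_TIMEOUT is easy to misread, so the following Python sketch converts a DWR_TIMEOUT value into a `datetime.timedelta`. The function name is hypothetical. The sketch also assumes that for a negative value, the magnitude is the number of microseconds, and it represents "no time-out" as `None`.

```python
from datetime import timedelta
from typing import Optional


def dwr_timeout_to_timedelta(value: int) -> Optional[timedelta]:
    """Interpret a DWR_TIMEOUT value using the documented convention.

    value > 0  -> that many seconds
    value < 0  -> microseconds; this sketch assumes the magnitude is the count
    value == 0 -> no time-out, represented here as None
    """
    if value > 0:
        return timedelta(seconds=value)
    if value < 0:
        return timedelta(microseconds=-value)
    return None
```

For example, `dwr_timeout_to_timedelta(30)` yields a 30-second time-out, and `dwr_timeout_to_timedelta(-250000)` yields 250,000 microseconds (0.25 seconds).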

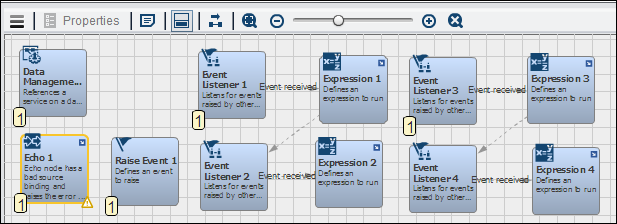

Using the Data Management Service Node

You can create an orchestration job that uses a Data Management Service node in the Flow tab to execute a real-time service in the context of the job. For example, a job could include a Data Management Service node that executes a real-time service whose events are detected by Event Listener nodes.

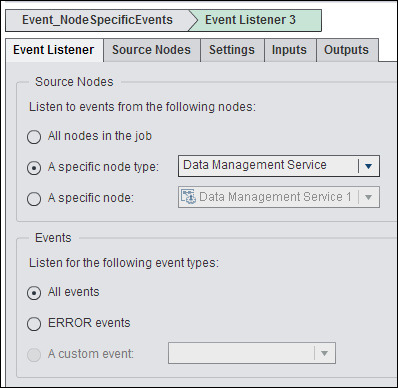

This particular job is designed to demonstrate how Event Listener nodes can interact with other nodes. The sample orchestration job is shown in the following display:

Event Node Job with Specific Events

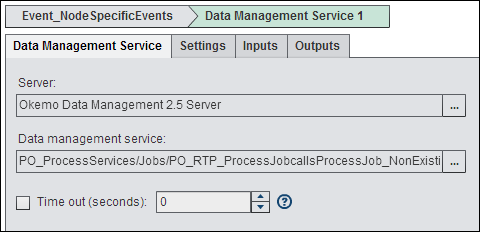

The settings for the Data Management Service node are shown in the following display:

Data Management Service Settings

Note that the node specifies the Okemo Data Management 2.5 Server in the Server field and PO_ProcessServices/Jobs/PO_RTP_ProcessJobcallsProcessJob_NonExisting.djf in the Data management service field. These settings are designed to generate an error that is detected by the Event Listener 3 and Event Listener 4 nodes.

Event Listener 4 uses the same settings as Event Listener 3. For information about Event Listener nodes, see Working with the Event Listener Node.

Copyright © SAS Institute Inc. All rights reserved.