| The Interior Point Nonlinear Programming Solver -- Experimental |

Overview

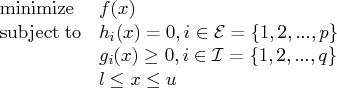

The interior point nonlinear programming (IPNLP) solver is a component of the OPTMODEL procedure. It can solve nonlinear programming (NLP) problems that contain both nonlinear equality and inequality constraints. The general NLP problem can be defined as follows:

It is assumed that the functions ![]() , and

, and ![]() are twice continuously differentiable. Any point satisfying the

constraints of the NLP problem is called a feasible point , and the set of all those points forms the feasible region

of the NLP problem, i.e.,

are twice continuously differentiable. Any point satisfying the

constraints of the NLP problem is called a feasible point , and the set of all those points forms the feasible region

of the NLP problem, i.e., ![]() .

.

The NLP problem can have a unique minimum or many different minima, depending on the type of functions involved in it. If the objective function is convex, the equality constraint functions are linear, and the inequality constraint functions are concave, then the NLP problem is called a convex program and has a unique minimum. All other types of NLP problems are called nonconvex and can contain more than one minimum, usually called local minima. The least of all those local minima is called the global minimum or global solution of the NLP problem. The IPNLP solver can find the unique solution of convex programs as well as a local minimum of a general NLP problem.

The IPNLP solver implements a primal-dual interior point algorithm that shares several powerful features from recent

state-of-the-art algorithms (Yamashita 1998; Armand, Gilbert, and Jan-Jégou 2002; Wächter and Biegler 2006; Vanderbei and Shanno 1999; Akrotirianakis and Rustem 2000).

It uses a line-search procedure and a merit function to ensure

convergence of the iterates to a local minimum. The term "primal-dual" means that the algorithm

iteratively generates better approximations of the decision variables ![]() (usually called "primal" variables)

as well as the dual variables (also referred to as Lagrange multipliers). At every iteration, the algorithm

solves a system of nonlinear equations by using Newton's method. The solution of that system provides the direction

and the steps along which the next approximation of the local minimum will be searched. The algorithm also

ensures that the primal iterates always remain strictly within their bounds -

i.e.,

(usually called "primal" variables)

as well as the dual variables (also referred to as Lagrange multipliers). At every iteration, the algorithm

solves a system of nonlinear equations by using Newton's method. The solution of that system provides the direction

and the steps along which the next approximation of the local minimum will be searched. The algorithm also

ensures that the primal iterates always remain strictly within their bounds -

i.e., ![]() , for

every iteration

, for

every iteration ![]() .

.

The current version of the solver is particularly suited for problems that contain many dense nonlinear inequality constraints, and it is expected to perform better than other nonlinear programming solvers in the SAS/OR suite.

Copyright © 2008 by SAS Institute Inc., Cary, NC, USA. All rights reserved.