Publish Scoring Functions

What Is a Scoring Function?

The Publish Scoring Function of SAS Model Manager enables you to publish models that are associated with the DATA step score code type to a configured database. When you publish a scoring function for a project, SAS Model Manager exports the project's champion model to the SAS Metadata Repository. The SAS Scoring Accelerator then creates scoring functions in the default version that can be deployed inside the database based on the project's champion model score code. The scoring function is validated automatically against a default train table to ensure that the scoring results are correct. A scoring application or SQL code can then execute the scoring functions in the database. The scoring functions extend the database's SQL language and can be used in SQL statements like other database functions.
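The idea of a scoring function that behaves like any other SQL function can be illustrated with a small sketch. It uses Python's built-in sqlite3 module as a stand-in for the target database, not the SAS Scoring Accelerator itself. The scoring logic, the function name `Y081107001_credit_risk`, and the `applicants` table are all hypothetical.

```python
import sqlite3


def score_credit_risk(income, debt):
    """Hypothetical stand-in for a champion model's score code."""
    if income <= 0:
        return 1.0
    return round(min(1.0, debt / income), 4)


def build_demo_database():
    """Create an in-memory database with a registered scoring function."""
    conn = sqlite3.connect(":memory:")
    # Register the Python function so that SQL statements can call it by name.
    conn.create_function("Y081107001_credit_risk", 2, score_credit_risk)
    conn.execute("CREATE TABLE applicants (id INTEGER, income REAL, debt REAL)")
    conn.executemany(
        "INSERT INTO applicants VALUES (?, ?, ?)",
        [(1, 50000.0, 10000.0), (2, 20000.0, 30000.0)],
    )
    return conn


def score_applicants(conn):
    """Score every row by calling the function inside a SELECT statement."""
    query = (
        "SELECT id, Y081107001_credit_risk(income, debt) "
        "FROM applicants ORDER BY id"
    )
    return conn.execute(query).fetchall()
```

Calling `score_applicants(build_demo_database())` returns one (id, score) pair per row, computed inside the SQL statement rather than in application code.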

The Scoring Function metadata tables are populated with information about the project and pointers to the scoring function. This feature enables users to review descriptions and definitions of the exported model. The audit logs track the history of the model's usage and any changes that are made to the scoring project.

The Publish Scoring Function also creates a MiningResult metadata object that is stored in the SAS Metadata Repository. A typical use of a MiningResult object is to serve input and output metadata queries made by scoring applications at the design time of application development.

For more information about the SAS Scoring Accelerator, see the SAS In-Database Technology page on http://support.sas.com.

Note: For more information about the prerequisites for publishing a scoring function, see Prerequisites for Publishing a Scoring Function.

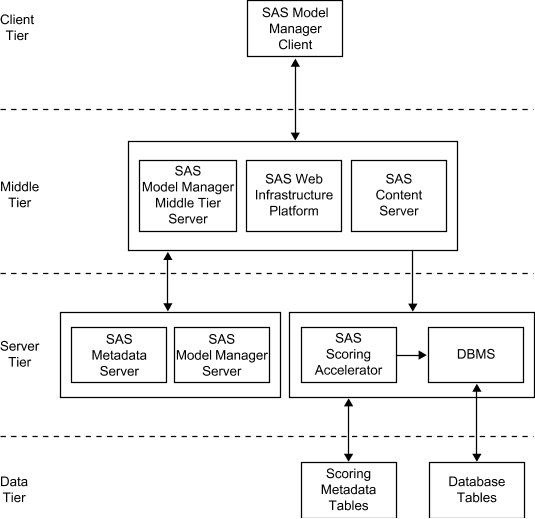

Here is a diagram that represents the relationship between SAS Model Manager and SAS Model Manager In-Database Support.

Here are descriptions of the diagram's components.

The SAS Model Manager Client handles communication to and from SAS Model Manager. You use the SAS Model Manager Client to create projects and versions, import models, connect with data sources, validate models, run modeling reports, run scoring tasks, set project status, declare the champion model, and run performance tests.

The SAS Model Manager Middle Tier Server is a collection of services that are hosted by an application server that orchestrates the communication and movement of data between all servers and components in the SAS Model Manager operational environment.

The SAS Web Infrastructure Platform (or WIP) is a collection of middle tier services and applications that provides basic integration services. It is delivered as part of the Integration Technologies package. As such, all Business Intelligence applications, Data Integration applications, and SAS Solutions have access to the Web Infrastructure Platform as part of their standard product bundling.

The SAS Model Manager model repository and SAS Model Manager window tree configuration data and metadata are stored in the SAS Content Server. Communication between SAS Model Manager and the SAS Content Server uses the WebDAV communication protocol.

The SAS Model Manager Server is a collection of macros on the SAS Workspace Server that generate SAS code to perform SAS Model Manager tasks.

The SAS Scoring Accelerator creates scoring functions that can be deployed inside a database. Scoring functions are based on the project's champion model score code.

The relational databases in the database management system (DBMS) serve as output data sources for SAS Model Manager.

Scoring Function Process Flow

This is an example of the process flow to publish a scoring function. For more information, see How to Publish a Scoring Function.

- From SAS Model Manager, you select Publish Scoring Function for the project that contains the champion model that you want to publish to a specific database. For more information, see How to Publish a Scoring Function.

- After you enter all the required information for the Publish Scoring Function, SAS Model Manager establishes a JDBC connection to the database using the credentials that were entered. The user-defined part of the scoring function name is validated against the target database. If the user-defined part of the function name is not unique, an error message is displayed.

-

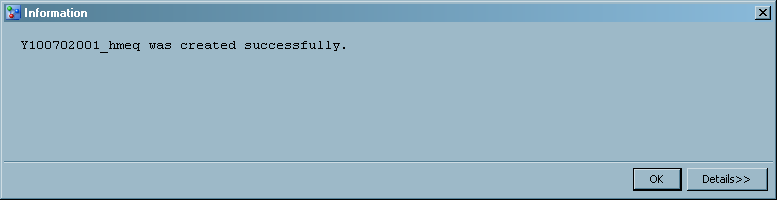

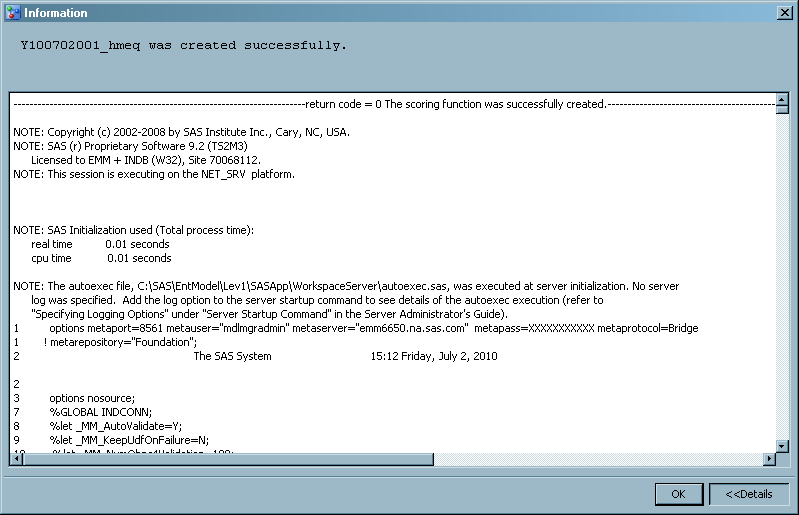

The middle-tier server updates the scoring function metadata tables (for example, table project_metadata). For more information, see Scoring Function Metadata Tables.

- A message indicates that the scoring function has been successfully created and that the scoring results have been successfully validated.

Note: If the publishing job fails, an error message appears. Users can view the workspace logs that are accessible from the message box. If the scoring function is not published successfully, then the previous scoring function for the same project is used in subsequent scoring, based on the SAS Model Manager Java Scoring API.
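The control flow of the steps above can be sketched in a few lines. This is an illustrative outline only, not SAS Model Manager code: the function `publish_scoring_function`, its parameters, and the `create` and `validate` callbacks are hypothetical names introduced for the example.

```python
def publish_scoring_function(user_part, published_parts, create, validate, previous=None):
    """Return the user-defined name of the function to use for later scoring.

    All names here are hypothetical; `create` and `validate` stand in for the
    work that is done in the database and on the SAS Workspace Server.
    """
    # The user-defined part of the name must be unique in the target database.
    if user_part in published_parts:
        raise ValueError(f"Function name is not unique: {user_part}")
    try:
        create(user_part)
        if not validate(user_part):
            raise RuntimeError("scoring results failed validation")
    except RuntimeError:
        # Publishing failed, so the previously published function stays in use.
        return previous
    published_parts.add(user_part)
    return user_part
```

A failed publish leaves the set of published names unchanged and returns the earlier function name, which mirrors the fallback described in the note.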

Prerequisites for Publishing a Scoring Function

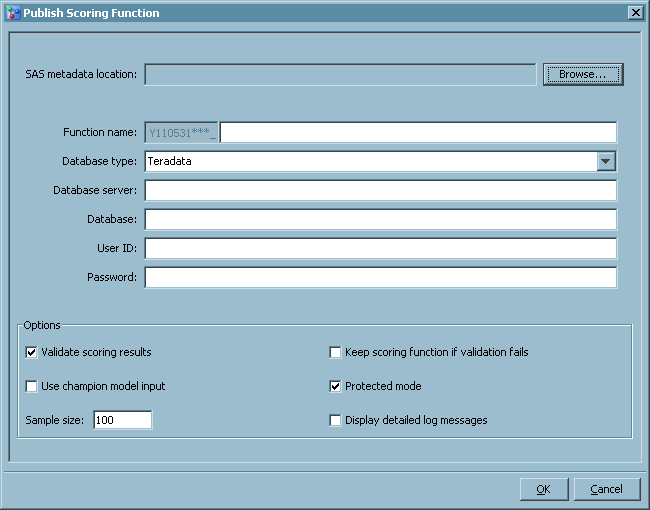

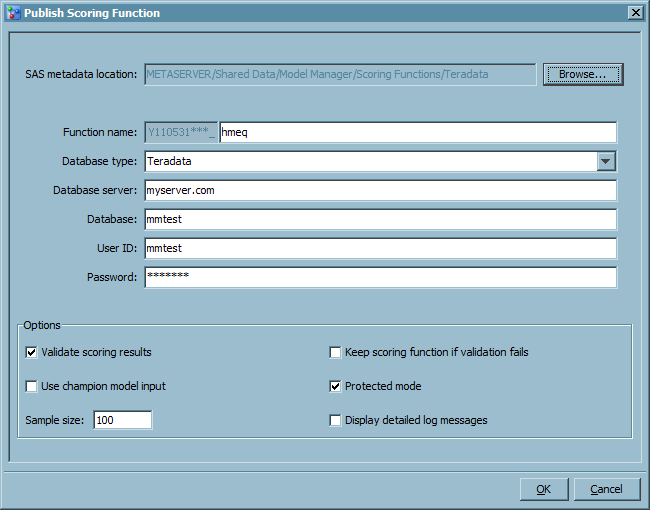

Publish Scoring Function Field Descriptions

specifies the name of the scoring function, which includes a prefix and a user-defined value. The prefix is 11 characters long and is in the format of Yyymmddnnn_. The yymmdd value in the prefix is the GMT timestamp that identifies the date when you selected the Publish Scoring Function menu option. An example of a function name is Y081107001_user_defined_value.
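The prefix format can be illustrated with a short sketch. It assumes that the nnn portion is a three-digit sequence number, which the text does not state, and the helper name `scoring_function_name` is hypothetical.

```python
from datetime import datetime, timezone


def scoring_function_name(user_defined_value, sequence, when=None):
    """Build a name in the form Yyymmddnnn_<user-defined value>.

    `sequence` is assumed to be the three-digit nnn value. `when` must be a
    timezone-aware datetime; it defaults to the current time.
    """
    if not 0 <= sequence <= 999:
        raise ValueError("sequence must fit in three digits")
    if when is None:
        when = datetime.now(timezone.utc)
    # The date portion of the prefix is expressed in GMT.
    when = when.astimezone(timezone.utc)
    prefix = f"Y{when:%y%m%d}{sequence:03d}_"
    return prefix + user_defined_value
```

With a date of November 7, 2008 and a sequence of 1, the helper reproduces the example name Y081107001_user_defined_value.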

Here are the naming convention requirements:

specifies the user ID for SAS SFTP. This value enables you to access the machine on which you have installed the DB2 database. If you do not specify a value for Server user ID, the value of User ID is used as the user ID for SAS SFTP.

specifies the schema name for the database. The schema name is owned by the user that is specified in the User ID field. The schema must be created by your database administrator.

specifies to validate the scoring results when publishing the scoring function. This option creates a benchmark scoring result on the SAS Workspace Server using the DATA step score code. The scoring input data set is used to create an equivalent database table. Scoring is performed using the new scoring function and database table. The two sets of scoring results are then compared.
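The comparison step can be pictured as a row-by-row check of the benchmark scores against the in-database scores. The sketch below is only an illustration: the source does not say how the results are compared, so the absolute tolerance and the function name `compare_scores` are assumptions.

```python
import math


def compare_scores(benchmark, in_database, tolerance=1e-9):
    """Return the row indexes where the two score lists disagree.

    `tolerance` is an assumed absolute tolerance, not a documented setting.
    """
    if len(benchmark) != len(in_database):
        raise ValueError("result sets have different row counts")
    return [
        index
        for index, (expected, actual) in enumerate(zip(benchmark, in_database))
        if not math.isclose(expected, actual, rel_tol=0.0, abs_tol=tolerance)
    ]
```

An empty list means that the in-database results match the benchmark. Any returned indexes point at the rows to inspect when validation fails.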

specifies to save the scoring function if the validation of the scoring results fails. Saving the scoring function is useful for debugging if the scoring function validation fails.

specifies to use the champion model input when publishing the scoring function instead of using the project input, which is the default. This is useful when the project input variables exceed the limitations for a database.

specifies the mode of operation to use for the Publish Scoring Function. There are two modes of operation, protected and unprotected. You specify the mode by selecting or deselecting the Protected mode option. The default mode of operation is protected. Protected mode means that the macro code is isolated in a separate process from the Teradata database, and an error does not cause database processing to fail. You should run the Publish Scoring Function in protected mode during validation. When the model is ready for production, you can run the Publish Scoring Function in unprotected mode. You might see a significant performance advantage when you run the Publish Scoring Function in unprotected mode.

specifies the mode of operation to use for the Publish Scoring Function. There are two modes of operation, fenced and unfenced. You specify the mode by selecting or deselecting the Fenced mode option. The default mode of operation is fenced. Fenced mode means that the macro code is isolated in a separate process from the DB2 database, and an error does not cause database processing to fail. You should run the Publish Scoring Function in fenced mode during validation. When the model is ready for production, you can run the Publish Scoring Function in unfenced mode. You might see a significant performance advantage when you run the Publish Scoring Function in unfenced mode.
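As a loose analogy for why protected or fenced mode keeps a failing function from affecting the database, the sketch below runs code in a separate operating system process and reports its exit status to the caller. It illustrates process isolation in general. It does not show how Teradata or DB2 implements these modes, and the helper name `run_isolated` is hypothetical.

```python
import subprocess
import sys


def run_isolated(code):
    """Run Python source in a child process and return (exit code, output)."""
    result = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
    )
    # A crash in the child process shows up as a nonzero exit code here;
    # the calling process keeps running.
    return result.returncode, result.stdout.strip()
```

The isolation costs a process launch and data transfer on every call. That overhead is the kind of cost that is avoided when the code runs inside the host process, which is consistent with the performance advantage noted for unprotected and unfenced modes.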

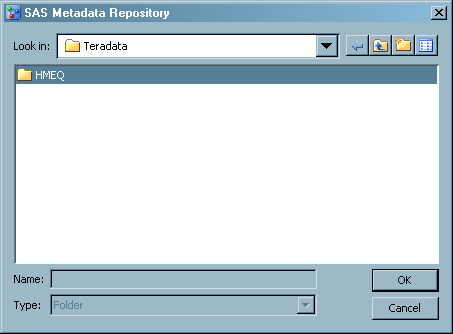

How to Publish a Scoring Function

- Verify that you have set the default scoring version for the project and have set the champion model for the default version. For more information, see Set a Default Version or Set a Champion Model.

-

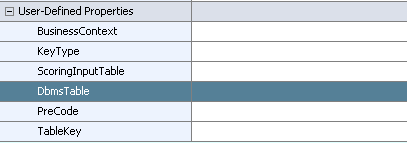

Note: If you plan to use a scoring application or SQL code to score this project, you must first set the DbmsTable property to the name of the input table in your database that you want to use for scoring the champion model. When you publish a scoring function, the information that is associated with the input table in the database is updated to contain the value of the DbmsTable property. The scoring application or SQL code can then query the database for the input table name to use as the scoring input table. For more information, see User-Defined Properties.

-

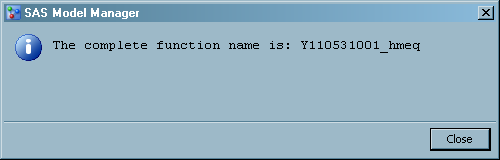

Enter a name for the scoring function. The function name includes a prefix and a user-defined value. Only the user-defined value can be modified. The naming conventions are the following:

Note: The user-defined value portion of the scoring function name is used by default when a scoring function is subsequently published from the same project. For more information, see User-Defined Properties.

- Click OK. A message is displayed that contains the scoring function name that will be published to the database.

Log Messages

A user can view the log in the Details window when publishing a scoring function. As an alternative, a user can open the ScoringFunction.log file in the Project Tree. The log records the time that the process started, who initiated the process, and when the project was published. Error messages are also recorded in the log file. The log file provides an audit trail of all relevant actions in the publishing process.

Scoring Function Metadata Tables

The following tables are created in the database when you publish a scoring function:

provides information about the champion model, such as the function name and signature.

maps a project to the champion model and provides information about the currently active scoring model.
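A scoring application could use metadata of this kind to find out which function and which input table to use for a project. The sketch below uses Python's built-in sqlite3 module as a stand-in for the target database. The table name project_metadata is the one mentioned in the process flow, but the column names, the sample row, and the helper names are hypothetical and do not reflect the real table layout.

```python
import sqlite3


def build_demo_metadata():
    """Create an in-memory metadata table with hypothetical columns."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE project_metadata ("
        "project_name TEXT, function_name TEXT, input_table TEXT)"
    )
    conn.execute(
        "INSERT INTO project_metadata VALUES (?, ?, ?)",
        ("loan_project", "Y081107001_credit_risk", "applicants"),
    )
    return conn


def lookup_scoring_target(conn, project_name):
    """Return (function name, input table) for a project, or None."""
    return conn.execute(
        "SELECT function_name, input_table FROM project_metadata "
        "WHERE project_name = ?",
        (project_name,),
    ).fetchone()
```

The application would then build its scoring query from the returned function name and input table instead of hard-coding either value.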