The HPSEVERITY Procedure


Example 9.4 Fitting a Scaled Tweedie Model with Regressors

The Tweedie distribution is often used in the insurance industry to explain the influence of regression effects on the distribution of losses. PROC HPSEVERITY provides a predefined scaled Tweedie distribution (STWEEDIE) that enables you to model the influence of regression effects on the scale parameter. The scale regression model has its own advantages, such as the ability to easily account for inflation effects. This example illustrates how that model can be used to evaluate the influence of regression effects on the mean of the Tweedie distribution, which is useful in problems such as rate-making and pure premium modeling.

Assume a Tweedie process whose mean $\mu$ is affected by $k$ regression effects $x_j$, $j = 1, \dots, k$, as follows:

![\[ \mu = \mu _0 \exp \left( \sum _{j=1}^{k} \beta _ j x_ j \right) \]](images/etshpug_hpseverity0650.png)

where $\mu_0$ represents the base value of the mean (you can think of $\mu_0$ as $\exp(\beta_0)$, where $\beta_0$ is the intercept). This model for the mean is identical to the popular generalized linear model for the mean with a logarithmic link function.

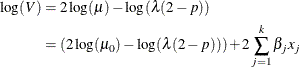

More interestingly, it parallels the model used by PROC HPSEVERITY for the scale parameter $\theta$,

![\[ \theta = \theta _0 \exp \left( \sum _{j=1}^{k} \beta _ j x_ j \right) \]](images/etshpug_hpseverity0653.png)

where $\theta_0$ represents the base value of the scale parameter. As described in the section Tweedie Distributions, for the parameter range $p \in (1, 2)$, the mean of the Tweedie distribution is given by

![\[ \mu = \theta \lambda \frac{2-p}{p-1} \]](images/etshpug_hpseverity0655.png)

where $\lambda$ is the Poisson mean parameter of the scaled Tweedie distribution. This relationship enables you to use the scale regression model to infer the influence of regression effects on the mean of the distribution.
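One way to read the mean formula, offered as a sketch rather than as the documented derivation: for $1 < p < 2$, the scaled Tweedie distribution can be viewed as a compound Poisson-gamma distribution. The symbols $Y$, $N$, $X_i$, and $\alpha$ below are introduced here for illustration only, and the gamma shape $\alpha = (2-p)/(p-1)$ is assumed from that standard representation.

$$ Y = \sum_{i=1}^{N} X_i, \qquad N \sim \text{Poisson}(\lambda), \qquad X_i \sim \text{Gamma}(\text{shape } \alpha, \text{ scale } \theta), \qquad \alpha = \frac{2-p}{p-1} $$

$$ E[Y] = E[N] \, E[X_i] = \lambda \, \alpha \, \theta = \theta \lambda \frac{2-p}{p-1} $$

Because $\theta$ enters the mean as a plain multiplicative factor, any multiplicative effect of the regressors on $\theta$ carries over to $\mu$ unchanged.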

Let the data set Work.Test_Sevtw contain a sample generated from a Tweedie distribution with dispersion parameter $\phi$, index parameter $p$, and a mean parameter that is affected by three regression variables x1, x2, and x3 as follows:

![\[ \mu = 5 \: \exp (0.25 \: \text {x1} - \text {x2} + 3 \: \text {x3}) \]](images/etshpug_hpseverity0658.png)

Thus, the population values of regression parameters are $\mu_0 = 5$, $\beta_1 = 0.25$, $\beta_2 = -1$, and $\beta_3 = 3$. You can find the code used to generate the sample in the PROC HPSEVERITY sample program hsevex04.sas.
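As a quick arithmetic illustration of what the coefficients 0.25, -1, and 3 in the mean equation above imply: under the log-linear model, a one-unit increase in a regressor multiplies the mean by $\exp(\beta_j)$ when the other regressors are held fixed.

$$ e^{0.25} \approx 1.284, \qquad e^{-1} \approx 0.368, \qquad e^{3} \approx 20.09 $$

For instance, an observation with x1 = 1, x2 = 0, and x3 = 0 (hypothetical values chosen only for illustration) has mean $\mu = 5 \, e^{0.25} \approx 6.42$.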

The following PROC HPSEVERITY step uses the sample in the Work.Test_Sevtw data set to estimate the parameters of the scale regression model for the predefined scaled Tweedie distribution (STWEEDIE) with the dual quasi-Newton (QUANEW) optimization technique:

    /*--- Fit the scale parameter version of the Tweedie distribution ---*/
    proc hpseverity data=test_sevtw outest=estw covout print=all;
       loss y;
       scalemodel x1-x3;
       dist stweedie;
       nloptions tech=quanew;
    run;

The dual quasi-Newton technique is used because it requires only the first-order derivatives of the objective function. Reasonably accurate estimates of the second-order derivatives of the Tweedie distribution's PDF with respect to the parameters are harder to compute.

Some of the key results prepared by PROC HPSEVERITY are shown in Output 9.4.1 and Output 9.4.2. The distribution information and the convergence results are shown in Output 9.4.1.

Output 9.4.1: Convergence Results for the STWEEDIE Model with Regressors

The final parameter estimates of the STWEEDIE regression model are shown in Output 9.4.2. The estimate that is reported for the parameter Theta is the estimate of the base value $\theta_0$. The estimates of regression coefficients $\beta_1$, $\beta_2$, and $\beta_3$ are indicated by the rows of x1, x2, and x3, respectively.

Output 9.4.2: Parameter Estimates for the STWEEDIE Model with Regressors

If your goal is to explain the influence of regression effects on the scale parameter, then the output displayed in Output 9.4.2 is sufficient. But, if you want to compute the influence of regression effects on the mean of the distribution, then you

need to do some postprocessing. Using the relationship between  and

and  ,

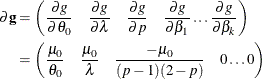

,  can be written in terms of the parameters of the STWEEDIE model as

can be written in terms of the parameters of the STWEEDIE model as

![\[ \mu = \theta _0 \exp \left( \sum _{j=1}^{k} \beta _ j x_ j \right) \lambda \frac{2-p}{p-1} \]](images/etshpug_hpseverity0666.png)

This shows that the parameters $\beta_j$ are identical for the mean and the scale model, and the base value $\mu_0$ of the mean model is

![\[ \mu _0 = \theta _0 \lambda \frac{2-p}{p-1} \]](images/etshpug_hpseverity0667.png)

The estimate of  and the standard error associated with it can be computed by using the property of the functions of maximum likelihood estimators

(MLE). If

and the standard error associated with it can be computed by using the property of the functions of maximum likelihood estimators

(MLE). If  represents a totally differentiable function of parameters

represents a totally differentiable function of parameters  , then the MLE of g has an asymptotic normal distribution with mean

, then the MLE of g has an asymptotic normal distribution with mean  and covariance

and covariance  , where

, where  is the MLE of

is the MLE of  ,

,  is the estimate of covariance matrix of

is the estimate of covariance matrix of  , and

, and  is the gradient vector of g with respect to

is the gradient vector of g with respect to  evaluated at

evaluated at  . For

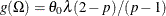

. For  , the function is

, the function is  . The gradient vector is

. The gradient vector is

You can write a DATA step that implements these computations by using the parameter and covariance estimates prepared by the PROC HPSEVERITY step. The DATA step program is available in the sample program hsevex04.sas. The estimates of $\mu_0$ prepared by that program are shown in Output 9.4.3. These estimates and the estimates of $\beta_j$ as shown in Output 9.4.2 are reasonably close (that is, within one or two standard errors) to the parameters of the population from which the sample in the Work.Test_Sevtw data set was drawn.

Output 9.4.3: Estimate of the Base Value Mu0 of the Mean Parameter

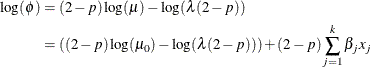
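The delta-method computation for $\mu_0$ can be sketched as a short DATA step. This is a minimal illustration and not the program in hsevex04.sas: the parameter estimates and the covariance matrix are made-up numbers, and the names mu0_delta, c, g, var_mu0, and stderr_mu0 are hypothetical. In practice you would take the estimates and covariances from the OUTEST= data set that the PROC HPSEVERITY step writes when COVOUT is specified. Because $\mu_0 = \theta_0 \lambda (2-p)/(p-1)$ does not depend on the regression coefficients, only the 3 x 3 covariance block for $(\theta_0, \lambda, p)$ contributes, with gradient components $\mu_0/\theta_0$, $\mu_0/\lambda$, and $-\mu_0/((p-1)(2-p))$.

```sas
/* Sketch only: all numeric inputs are illustrative, not HPSEVERITY output */
data mu0_delta;
   /* Hypothetical estimates of the base scale, Poisson mean, and index */
   theta0 = 0.3;
   lambda = 10;
   p      = 1.75;

   /* Hypothetical covariance of (theta0, lambda, p), stored row by row */
   array c{3,3} _temporary_ (0.0004 0    0
                             0      0.25 0
                             0      0    0.0001);
   array g{3} _temporary_;

   /* Base value of the mean */
   mu0 = theta0 * lambda * (2 - p) / (p - 1);

   /* Gradient of mu0 with respect to theta0, lambda, and p */
   g{1} = mu0 / theta0;
   g{2} = mu0 / lambda;
   g{3} = -mu0 / ((p - 1) * (2 - p));

   /* Quadratic form G'CG gives the asymptotic variance of mu0 */
   var_mu0 = 0;
   do i = 1 to 3;
      do j = 1 to 3;
         var_mu0 = var_mu0 + g{i} * c{i,j} * g{j};
      end;
   end;
   stderr_mu0 = sqrt(var_mu0);

   drop i j;
   put mu0= stderr_mu0=;   /* write the result to the SAS log */
run;
```

The step has no SET statement, so it executes once and writes a single observation. Restricting the quadratic form to the first three parameters is a design shortcut that relies on the zero gradient entries for the regression coefficients.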

Another outcome of using the scaled Tweedie distribution to model the influence of regression effects is that the regression effects also influence the variance $V$ of the Tweedie distribution. The variance is related to the mean as $V = \phi \mu^p$, where $\phi$ is the dispersion parameter. Using the relationship between the parameters of the TWEEDIE and STWEEDIE distributions as described in the section Tweedie Distributions, the regression model for the dispersion parameter is

$$ \log(\phi) = (2-p) \log(\mu) - \log(\lambda (2-p)) = \left( (2-p) \log(\mu_0) - \log(\lambda (2-p)) \right) + (2-p) \sum_{j=1}^{k} \beta_j x_j $$

Subsequently, the regression model for the variance is

$$ \log(V) = 2 \log(\mu) - \log(\lambda (2-p)) = \left( 2 \log(\mu_0) - \log(\lambda (2-p)) \right) + 2 \sum_{j=1}^{k} \beta_j x_j $$
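A short derivation sketch shows where the dependence on the regressors comes from. It assumes the parameter mapping $\lambda = \mu^{2-p} / (\phi (2-p))$ between the TWEEDIE and STWEEDIE parameterizations. That mapping is the standard compound Poisson-gamma form and is taken here as an assumption, because the Tweedie Distributions section is not reproduced in this example. Solving the mapping for $\phi$ and substituting into the variance relation $V = \phi \mu^p$ gives

$$ \phi = \frac{\mu^{2-p}}{\lambda (2-p)}, \qquad V = \phi \mu^{p} = \frac{\mu^{2}}{\lambda (2-p)} $$

Because $\mu = \mu_0 \exp\left( \sum_{j=1}^{k} \beta_j x_j \right)$, a one-unit increase in $x_j$, with the other regressors held fixed, changes the dispersion and the variance by the factors

$$ \frac{\phi(x_j + 1)}{\phi(x_j)} = e^{(2-p) \beta_j}, \qquad \frac{V(x_j + 1)}{V(x_j)} = e^{2 \beta_j} $$

In other words, the variance responds to each regressor with twice the log-scale coefficient of the mean.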

In summary, PROC HPSEVERITY enables you to estimate regression effects on various parameters and statistics of the Tweedie model.