The NLP Procedure

Restricting the Step Length

Almost all line-search algorithms use iterative extrapolation techniques, which can easily lead them to (feasible) points where the objective function $f$ is no longer defined (for example, resulting in indefinite matrices for ML estimation) or is difficult to compute (for example, resulting in floating point overflows). Therefore, PROC NLP provides options that restrict the step length $\alpha$ or trust region radius $\Delta$, especially during the first main iterations.

The inner product $g^T s$ of the gradient $g$ and the search direction $s$ is the slope of $f(\alpha) = f(x + \alpha s)$ along the search direction $s$. The default starting value $\alpha^{(0)} = \alpha^{(k,0)}$ in each line-search algorithm ($\min_{\alpha > 0} f(x + \alpha s)$) during the main iteration $k$ is computed in three steps:

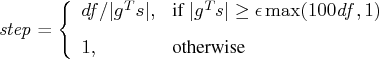

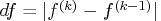

- The first step uses either the difference $df = |f^{(k)} - f^{(k-1)}|$ of the function values during the last two consecutive iterations or the final step length value of the last iteration $k-1$ to compute a first value of $\alpha_1^{(0)}$.

  - Not using the DAMPSTEP=$r$ option:

    This value of $\alpha_1^{(0)}$ can be too large and lead to a difficult or impossible function evaluation, especially for highly nonlinear functions such as the EXP function.
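
The effect of an overly large starting step can be illustrated with a minimal Python sketch. It is not PROC NLP's line search: the objective `f`, the starting point `x0`, the helper `try_step`, and the two trial step lengths are all hypothetical choices made for illustration. The sketch computes the slope as the inner product of the gradient and the search direction, then compares an unrestricted starting step with a much shorter one.

```python
import math

def f(x):
    # Hypothetical objective built from EXP terms: sum of exp(xi) + exp(-xi)
    return sum(math.exp(xi) + math.exp(-xi) for xi in x)

def grad(x):
    # Analytic gradient of f
    return [math.exp(xi) - math.exp(-xi) for xi in x]

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def try_step(x, s, alpha):
    """Return f(x + alpha*s), or None if the evaluation overflows."""
    try:
        return f([xi + alpha * si for xi, si in zip(x, s)])
    except OverflowError:
        return None

x0 = [-10.0, 0.5]              # deliberately poor starting point (assumption)
g = grad(x0)
s = [-gi for gi in g]          # steepest-descent search direction
slope = dot(g, s)              # slope of f along s; negative for a descent direction

full = try_step(x0, s, 1.0)    # unrestricted starting step: EXP overflows
short = try_step(x0, s, 1e-4)  # restricted starting step: f can be evaluated

print("slope < 0:", slope < 0)
print("full step:", full)
print("short step decreases f:", short is not None and short < f(x0))
```

In this example the full step cannot be evaluated at all, while the shortened step both evaluates and reduces the objective, which is the situation the step length restrictions are meant to handle.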

The INSTEP=$r$ option lets you specify a smaller or larger radius $\Delta$ of the trust region used in the first iteration of the trust region, double dogleg, and Levenberg-Marquardt algorithms. The default initial trust region radius $\Delta^{(0)}$ is the length of the scaled gradient (Moré 1978). This step corresponds to the default radius factor of $r = 1$. In most practical applications of the TRUREG, DBLDOG, and LEVMAR algorithms, this choice is successful. However, for bad initial values and highly nonlinear objective functions (such as the EXP function), the default start radius can result in arithmetic overflows. If this happens, you can try decreasing values of INSTEP=$r$, $0 < r < 1$, until the iteration starts successfully. A small factor $r$ also affects the trust region radius $\Delta^{(k+1)}$ of the next steps because the radius is changed in each iteration by a factor $0 < c \le 4$, depending on the ratio $\rho$ expressing the goodness of quadratic function approximation. Reducing the radius $\Delta$ corresponds to increasing the ridge parameter $\lambda$, producing smaller steps directed more closely toward the (negative) gradient direction.
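
The relationship between the ridge parameter and the step can be checked numerically. The following Python sketch is an illustration under stated assumptions, not the TRUREG, DBLDOG, or LEVMAR implementation: the 2x2 matrix `H`, the gradient `g`, the ridge values, and the helper names `ridge_step` and `cos_to_neg_gradient` are all hypothetical. It solves the ridged system (H + lam*I) s = -g for increasing `lam` and reports the step length and the cosine of the angle between the step and the negative gradient.

```python
import math

def ridge_step(H, g, lam):
    """Solve (H + lam*I) s = -g for a 2x2 matrix H by Cramer's rule."""
    a, b = H[0][0] + lam, H[0][1]
    c, d = H[1][0], H[1][1] + lam
    det = a * d - b * c
    return [(-g[0] * d + b * g[1]) / det,
            (-a * g[1] + c * g[0]) / det]

def norm(v):
    return math.sqrt(sum(vi * vi for vi in v))

def cos_to_neg_gradient(s, g):
    # Cosine of the angle between the step s and the direction -g
    return -sum(si * gi for si, gi in zip(s, g)) / (norm(s) * norm(g))

H = [[10.0, 0.0], [0.0, 1.0]]   # illustrative positive definite Hessian
g = [1.0, 1.0]                  # illustrative gradient

results = []
for lam in (0.0, 1.0, 100.0):
    s = ridge_step(H, g, lam)
    results.append((lam, norm(s), cos_to_neg_gradient(s, g)))
    print(f"lam={lam:6.1f}  |s|={results[-1][1]:.4f}  cos(s,-g)={results[-1][2]:.4f}")
```

For this example the step length shrinks and the cosine approaches 1 as `lam` grows, so a larger ridge parameter (equivalently, a smaller radius) gives shorter steps that point more nearly along the negative gradient.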

Copyright © 2008 by SAS Institute Inc., Cary, NC, USA. All rights reserved.