Copy Data from Hadoop

Prerequisites

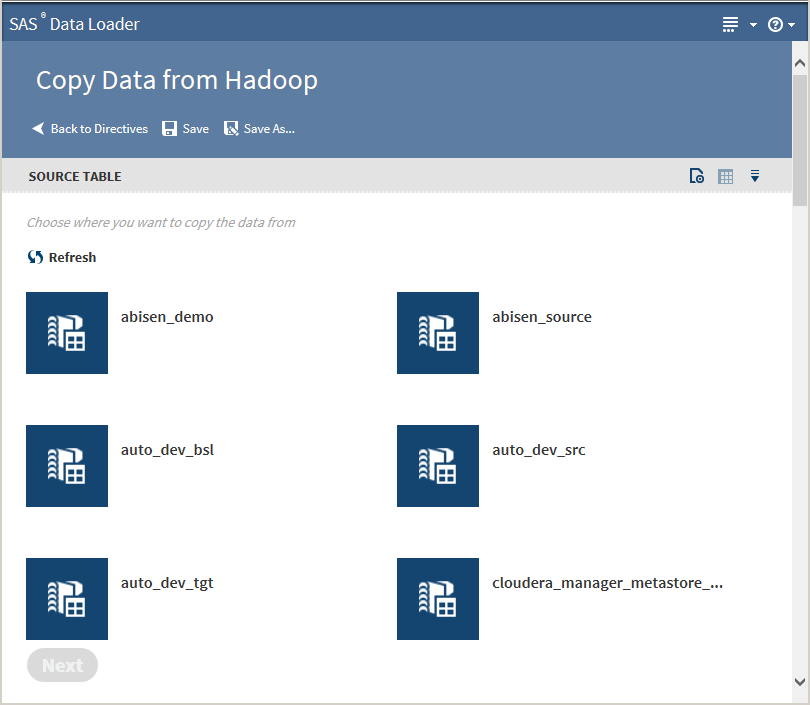

When you open the Copy

Data from Hadoop directive, the Source Tables task

shows the data sources that are on the Hadoop cluster. When you come

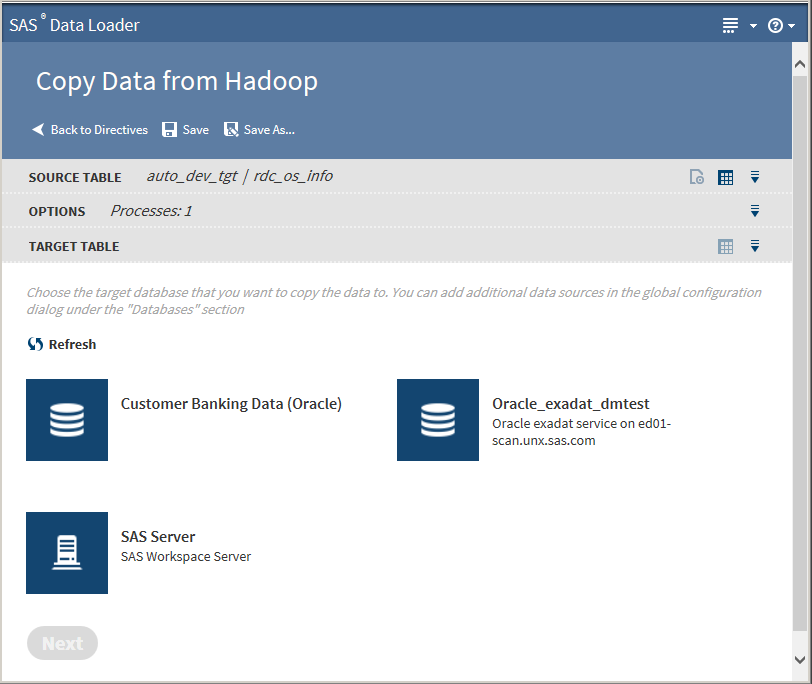

to the Target Tables task, you will see the

databases that are defined in SAS Data Loader.

If you do not see the database to which you want to copy, you must

add a connection to that database. See Databases Panel for more information.

Example

Follow these steps to

copy data from Hadoop into a database:

-

Clicking the Action menu

enables the following actions:

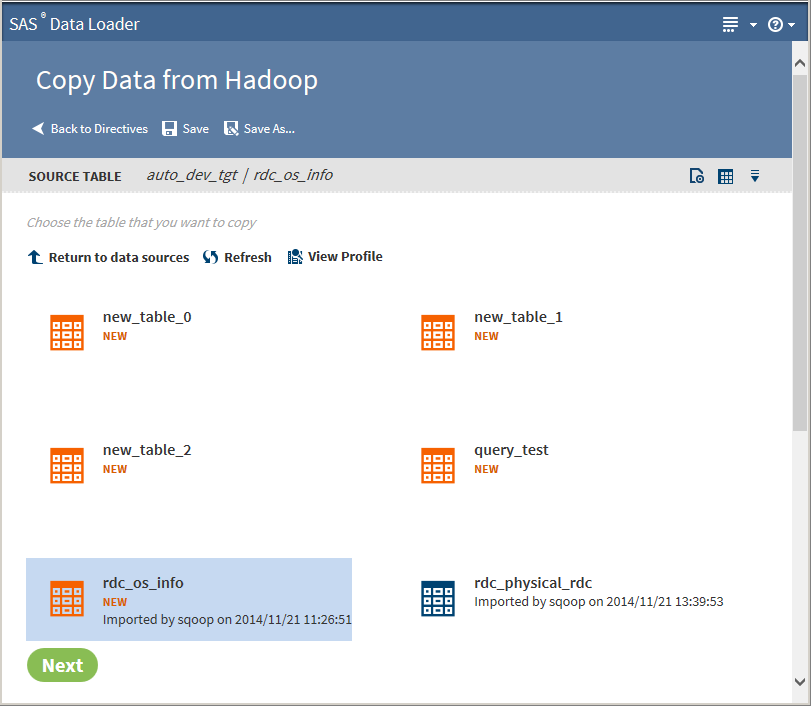

Openopens the current task.Table Viewerenables you to view sample data from a table. Select the table, and then click

enables the following actions:

Openopens the current task.Table Viewerenables you to view sample data from a table. Select the table, and then click to display SAS Table Viewer.

Advanced Optionsopens a dialog box that enables you to specify the maximum length for SAS columns. Entering a value here overrides the value specified in the Configuration options.Note: If the source table has String data types, the resulting SAS data set could be very large. The length of the target field in the SAS data set is determined by the value of this option.When table selection is complete, click Next. The Options task is displayed:

to display SAS Table Viewer.

Advanced Optionsopens a dialog box that enables you to specify the maximum length for SAS columns. Entering a value here overrides the value specified in the Configuration options.Note: If the source table has String data types, the resulting SAS data set could be very large. The length of the target field in the SAS data set is determined by the value of this option.When table selection is complete, click Next. The Options task is displayed: -

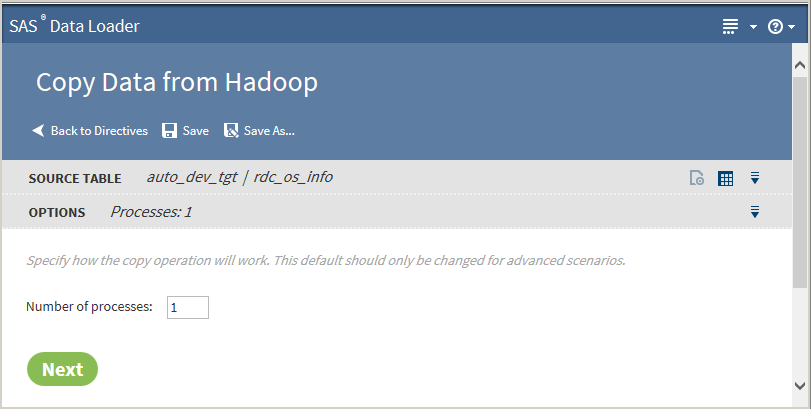

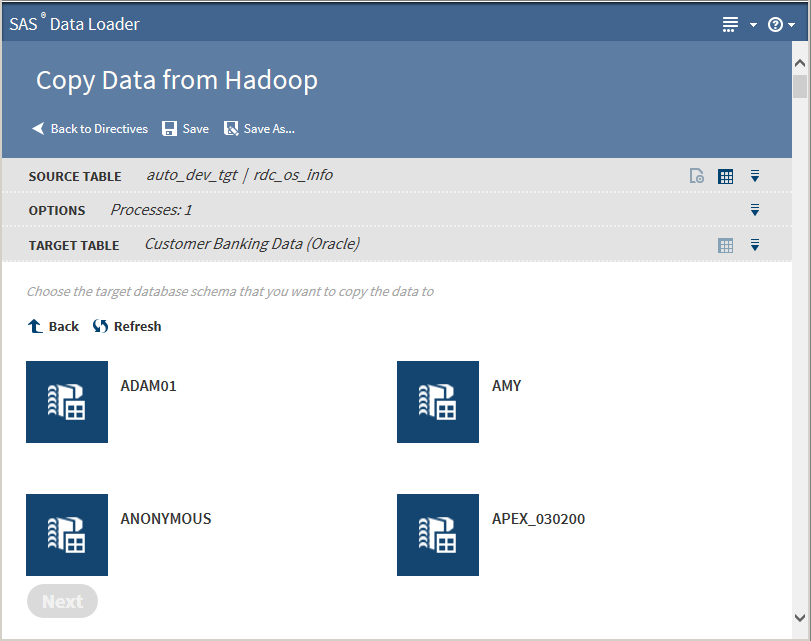

The value on the Options task should not be changed unless you have advanced knowledge of database operations.Note: Changing the number of processes to greater than one expands the number of processes and source data connections that are used to import data.Click Next. The Target Table task is displayed with target databases:

-

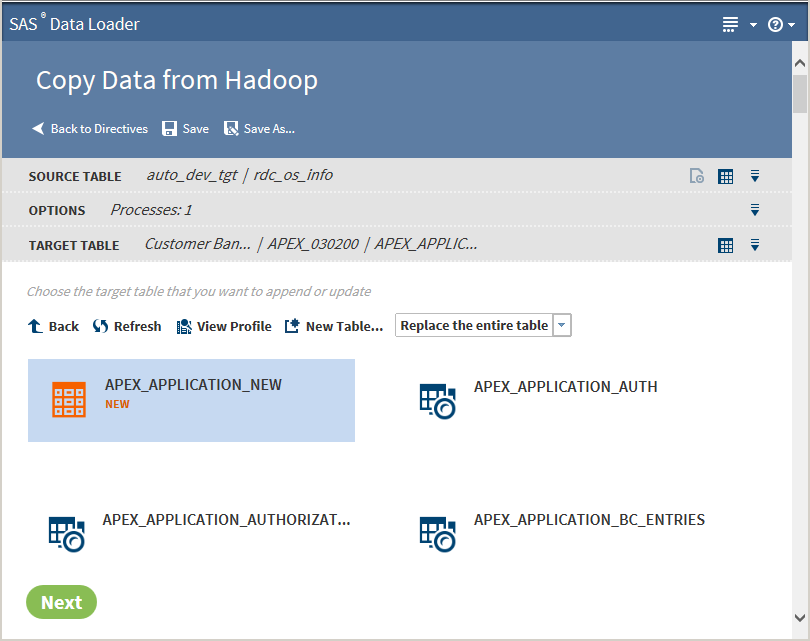

Tip

-

You can create a new table by clicking New Table.

-

If a profile already exists for a table, PROFILED appears next the table icon. You can view the existing profile by selecting the table and clicking View Profile.

Click to enable the following actions:

Openopens the current task.Table Viewerenables you to view sample data from a table. Select the table, and then click

to enable the following actions:

Openopens the current task.Table Viewerenables you to view sample data from a table. Select the table, and then click to display SAS Table Viewer.

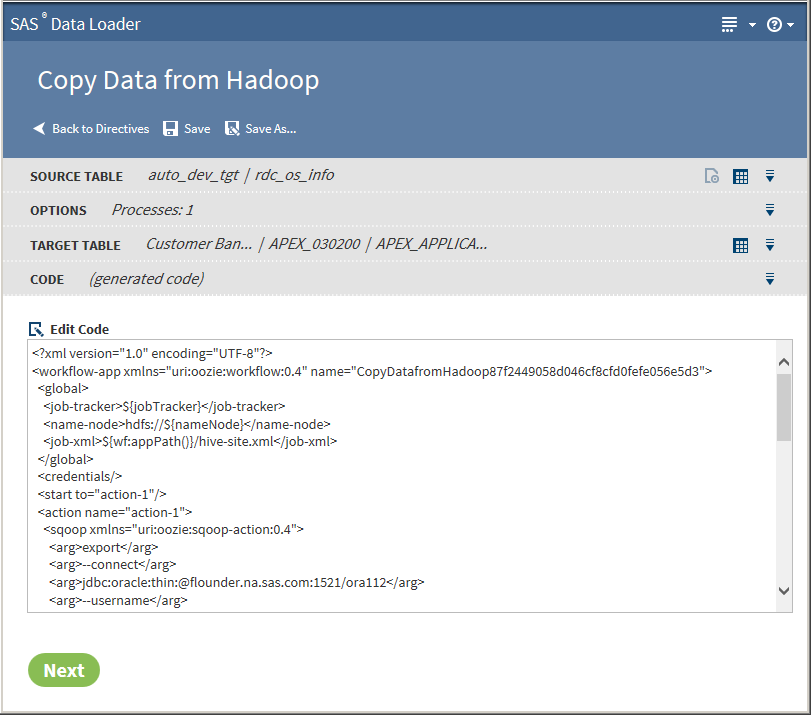

Click Next. The Code task is displayed:

to display SAS Table Viewer.

Click Next. The Code task is displayed: -

-

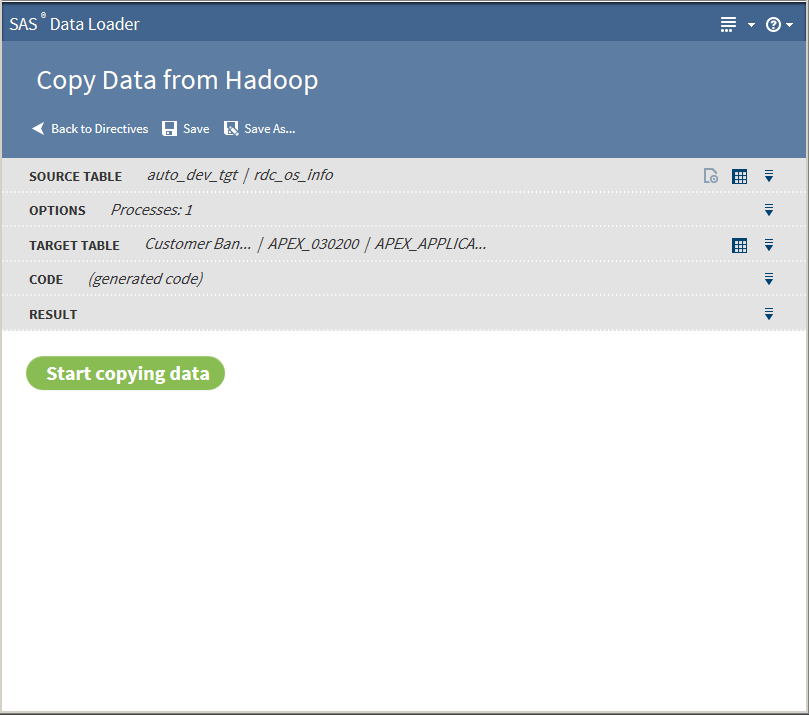

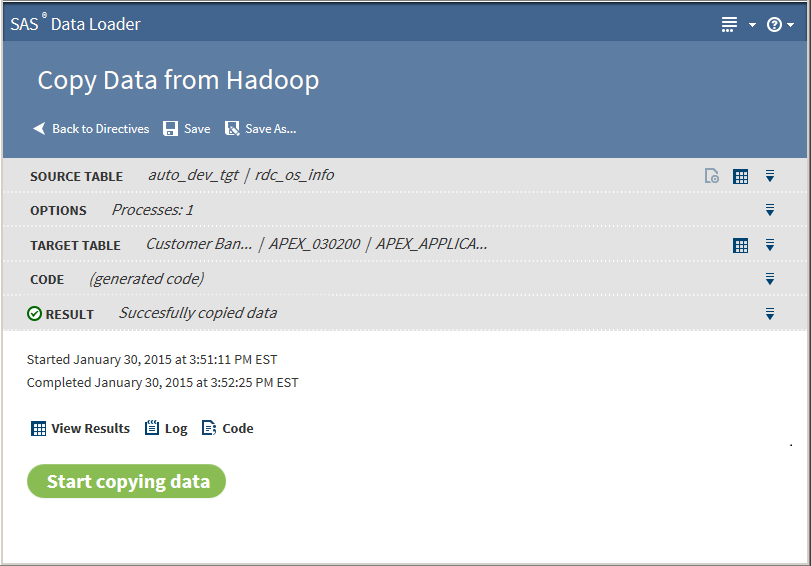

The following actions are available:View Resultsenables you to view the results of the copy process in the SAS Table Viewer.Logdisplays the SAS log that is generated during the copy process.Codedisplays the SAS code that copies the data.

Usage Notes

-

When copying a Hadoop table to a Teradata database, the name of the target table cannot exceed 20 characters. Longer target names cause the job to fail.

-

If necessary, you can change the maximum length of character columns for input tables to this directive. For more information, see Change the Maximum Length for SAS Character Columns.

-

Source tables with a large number of columns can cause Copy From Hadoop jobs to fail. The job runs until the target table reaches the maximum number of columns that are supported in the target database. To resolve the problem, reduce the number of columns that are selected for the target and run the job again.

-

If one or more VARCHAR or STRING columns from a source Hadoop table contains more string data than the target database column, the Copy Data from Hadoop request times out. For example, a source Hadoop table might contain a string column named myString and a target Oracle table might contain a varchar(4000) column also named myString. If data in the Hadoop myString column has a length greater than 4000, then the copy request fails.

-

When copying a Hadoop table to a database, a column name specified in the array of STRUCT in the Hadoop table is not copied to the database table. This happens because of how STRUCT is mapped to VARCHAR in Sqoop.

-

A copy from Hadoop is likely to fail if the name of a source column is also a reserved word in the target database.

-

When copying a Hadoop table to Oracle, a mixed-case schema name generates an error.

-

When copying a Hadoop table to Oracle, timestamp columns in Hadoop generate errors in Oracle. The Hive timestamp format differs from the Oracle timestamp format. To resolve this issue, change the column type in the Oracle target table from timestamp to varchar2.

-

To copy Hadoop tables to Teradata, when the source contains a double-byte character set (DBCS) such as Chinese, follow these steps:

Copyright © SAS Institute Inc. All rights reserved.