Introduction to Statistical Modeling with SAS/STAT Software

Maximum Likelihood Estimation

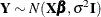

To estimate the parameters in a linear model with mean function ![$\mr{E}[\bY ] = \bX \bbeta $](images/statug_intromod0208.png) by maximum likelihood, you need to specify the distribution of the response vector $\mathbf{Y}$. In the linear model with a continuous response variable, it is commonly assumed that the response is normally distributed.

In that case, the estimation problem is completely defined by specifying the mean and variance of $\mathbf{Y}$ in addition to the normality assumption. The model can be written as $\mathbf{Y} \sim N(\mathbf{X}\boldsymbol{\beta}, \sigma^2\mathbf{I})$, where the notation $N(\mathbf{a}, \mathbf{V})$ indicates a multivariate normal distribution with mean vector $\mathbf{a}$ and variance matrix $\mathbf{V}$. The log likelihood for $\mathbf{Y}$ then can be written as

![\[ l(\bbeta ,\sigma ^2; \mb{y}) = -\frac{n}{2}\log \{ 2\pi \} -\frac{n}{2}\log \{ \sigma ^2\} - \frac{1}{2\sigma ^2} \left(\mb{y}-\bX \bbeta \right)'\left(\mb{y}-\bX \bbeta \right) \]](images/statug_intromod0401.png)
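The log likelihood above can be evaluated directly for given parameter values. The following is a minimal Python sketch, not SAS code; the function name `log_likelihood` and the small data set are hypothetical and serve only as an illustration. It assumes the design matrix is supplied as a list of rows.

```python
import math

def log_likelihood(beta, sigma2, X, y):
    """Normal log likelihood l(beta, sigma^2; y) for Y ~ N(X beta, sigma^2 I)."""
    n = len(y)
    # Residuals y - X beta, computed row by row
    resid = [yi - sum(xij * bj for xij, bj in zip(xi, beta))
             for xi, yi in zip(X, y)]
    # Sum of squares (y - X beta)'(y - X beta)
    ssr = sum(r * r for r in resid)
    return (-0.5 * n * math.log(2 * math.pi)
            - 0.5 * n * math.log(sigma2)
            - ssr / (2 * sigma2))

# Hypothetical data: an intercept column plus one regressor
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
y = [1.1, 2.9, 5.2, 6.8]

# With sigma^2 held fixed, a beta that gives a smaller sum of squares
# gives a larger log likelihood
ll_close = log_likelihood([1.0, 2.0], 1.0, X, y)
ll_far = log_likelihood([0.0, 0.0], 1.0, X, y)
print(ll_close > ll_far)
```

Holding $\sigma^2$ fixed, the only term that depends on $\boldsymbol{\beta}$ is the sum of squares, which is why the comparison in the last lines depends only on the residuals.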

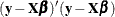

This function is maximized in $\boldsymbol{\beta}$ when the sum of squares $(\mathbf{y}-\mathbf{X}\boldsymbol{\beta})'(\mathbf{y}-\mathbf{X}\boldsymbol{\beta})$ is minimized. The maximum likelihood estimator of $\boldsymbol{\beta}$ is thus identical to the ordinary least squares estimator. To maximize $l(\boldsymbol{\beta},\sigma^2;\mathbf{y})$ with respect to $\sigma^2$, note that

![\[ \frac{\partial l(\bbeta ,\sigma ^2; \mb{y})}{\partial \sigma ^2} = -\frac{n}{2\sigma ^2} + \frac{1}{2\sigma ^4} \left(\mb{y}-\bX \bbeta \right)'\left(\mb{y}-\bX \bbeta \right) \]](images/statug_intromod0404.png)

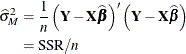

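Hence, setting this derivative to zero at $\boldsymbol{\beta} = \widehat{\boldsymbol{\beta}}$ and solving for $\sigma^2$, the MLE of $\sigma^2$ is the estimator

$$ \widehat{\sigma}^2 = \frac{1}{n} (\mathbf{y}-\mathbf{X}\widehat{\boldsymbol{\beta}})'(\mathbf{y}-\mathbf{X}\widehat{\boldsymbol{\beta}}) $$

This is a biased estimator of $\sigma^2$: its expectation is $(n-k)\sigma^2/n$, where $k$ is the rank of $\mathbf{X}$, so the bias $-k\sigma^2/n$ decreases in magnitude with $n$.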
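As an illustration of the estimators discussed above, the following Python sketch fits a straight-line model by least squares and computes the maximum likelihood variance estimate as the residual sum of squares divided by n. It is not SAS code. The data, the helper name `ols_simple`, and the choice of a model with an intercept and a single regressor (so that the design matrix has k = 2 columns) are assumptions made for the example. The estimate with divisor n − k is shown for comparison as the usual unbiased alternative.

```python
def ols_simple(x, y):
    """Closed-form least squares fit of y = b0 + b1 * x.

    Under the normality assumption this is also the MLE of (b0, b1).
    """
    n = len(x)
    xbar = sum(x) / n
    ybar = sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = ybar - b1 * xbar
    return b0, b1

# Hypothetical data for illustration only
x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [1.2, 2.9, 5.1, 7.2, 8.8]

b0, b1 = ols_simple(x, y)
n, k = len(y), 2  # k = 2: intercept and slope (assumed model)

# Residual sum of squares at the least squares estimate
ssr = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))

sigma2_mle = ssr / n             # maximum likelihood estimate (divisor n)
sigma2_unbiased = ssr / (n - k)  # unbiased estimate (divisor n - k)

print(b0, b1, sigma2_mle, sigma2_unbiased)
```

The two variance estimates differ by the factor (n − k)/n, which approaches 1 as n grows, so the maximum likelihood estimate is always the smaller of the two and the gap shrinks with larger samples.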