The HPPLS Procedure

Partial Least Squares

Partial least squares (PLS) works by extracting one factor at a time. Let  be the centered and scaled matrix of predictors, and let

be the centered and scaled matrix of predictors, and let  be the centered and scaled matrix of response values. The PLS method starts with a linear combination

be the centered and scaled matrix of response values. The PLS method starts with a linear combination  of the predictors, where

of the predictors, where  is called a score vector and

is called a score vector and  is its associated weight vector. The PLS method predicts both

is its associated weight vector. The PLS method predicts both  and

and  by regression on

by regression on  :

:

![\[ \begin{array}{rclcrcl} \hat{\mb{X}}_0 & = & \mb{t} \mb{p}’, & \textrm{where} & \mb{p}’ & = & (\mb{t}’\mb{t} )^{-1}\mb{t}’\mb{X}_0 \\ \hat{\mb{Y}}_0 & = & \mb{t} \mb{c}’, & \textrm{where} & \mb{c}’ & = & (\mb{t}’\mb{t} )^{-1}\mb{t}’\mb{Y}_0 \\ \end{array} \]](images/stathpug_hppls0016.png)

The vectors  and

and  are called the X- and Y-loadings, respectively.

are called the X- and Y-loadings, respectively.

The specific linear combination  is the one that has maximum covariance

is the one that has maximum covariance  with some response linear combination

with some response linear combination  . Another characterization is that the X-weight,

. Another characterization is that the X-weight,  , and the Y-weight,

, and the Y-weight,  , are proportional to the first left and right singular vectors, respectively, of the covariance matrix

, are proportional to the first left and right singular vectors, respectively, of the covariance matrix  or, equivalently, the first eigenvectors of

or, equivalently, the first eigenvectors of  and

and  , respectively.

, respectively.

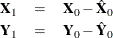

This accounts for how the first PLS factor is extracted. The second factor is extracted in the same way by replacing  and

and  with the X- and Y-residuals from the first factor:

with the X- and Y-residuals from the first factor:

These residuals are also called the deflated  and

and  blocks. The process of extracting a score vector and deflating the data matrices is repeated for as many extracted factors

as are wanted.

blocks. The process of extracting a score vector and deflating the data matrices is repeated for as many extracted factors

as are wanted.