PROC CAPABILITY and General Statements

You can use the NORMALTEST option in the PROC CAPABILITY statement to request several tests of the hypothesis that the analysis variable values are a random sample from a normal distribution. These tests, which are summarized in the table labeled Tests for Normality, include the following:

- Shapiro-Wilk test
- Kolmogorov-Smirnov test
- Anderson-Darling test
- Cramér-von Mises test

Tests for normality are particularly important in process capability analysis because the commonly used capability indices are difficult to interpret unless the data are at least approximately normally distributed. Furthermore, the confidence limits for capability indices displayed in the table labeled Process Capability Indices require the assumption of normality. Consequently, the tests of normality are always computed when you specify the SPEC statement, and a note is added to the table when the hypothesis of normality is rejected. You can specify the particular test and the significance level with the CHECKINDICES option.

If the sample size is 2000 or less,[16] the procedure computes the Shapiro-Wilk statistic W (also denoted as $W_n$ to emphasize its dependence on the sample size n). The statistic $W_n$ is the ratio of the best estimator of the variance (based on the square of a linear combination of the order statistics) to the usual corrected sum of squares estimator of the variance. When n is greater than three, the coefficients used to compute the linear combination of the order statistics are approximated by the method of Royston (1992). The statistic $W_n$ is always greater than zero and less than or equal to one ($0 < W_n \le 1$).
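The ratio can be illustrated with a minimal Python sketch. The function name `shapiro_wilk_w` is hypothetical. Because Royston's approximation for general n is not reproduced here, the coefficients $a_i$ are passed in rather than computed. The demonstration uses the exact coefficients for n = 3, $(-1/\sqrt{2},\ 0,\ 1/\sqrt{2})$. This illustrates the definition only and is not the CAPABILITY procedure's implementation.

```python
import math


def shapiro_wilk_w(values, coeffs):
    """Return W = (sum a_i * x_(i))^2 / sum (x_i - mean)^2.

    `coeffs` are the coefficients a_1..a_n for this sample size. They must
    be supplied by the caller; this sketch does not implement Royston's
    approximation.
    """
    n = len(values)
    if n != len(coeffs):
        raise ValueError("need one coefficient per observation")
    x = sorted(values)  # order statistics x_(1) <= ... <= x_(n)
    mean = sum(x) / n
    ss = sum((xi - mean) ** 2 for xi in x)  # corrected sum of squares
    if ss == 0:
        raise ValueError("W is undefined when all values are equal")
    numerator = sum(a * xi for a, xi in zip(coeffs, x)) ** 2
    return numerator / ss


# Exact Shapiro-Wilk coefficients for n = 3
A3 = (-1 / math.sqrt(2), 0.0, 1 / math.sqrt(2))

print(shapiro_wilk_w([1.0, 2.0, 3.0], A3))  # evenly spaced sample: W is 1 up to rounding
print(shapiro_wilk_w([1.0, 1.1, 3.0], A3))  # skewed sample: smaller W
```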

Small values of W lead to rejection of the null hypothesis. The method for computing the p-value (the probability of obtaining a W statistic less than or equal to the observed value) depends on n. For n = 3, the probability distribution of W is known and is used to determine the p-value. For n ≥ 4, a normalizing transformation is computed:

$$Z_n = \begin{cases} \left(-\log\left(\gamma - \log(1 - W_n)\right) - \mu\right)/\sigma & \text{if } 4 \le n \le 11 \\ \left(\log(1 - W_n) - \mu\right)/\sigma & \text{if } 12 \le n \le 2000 \end{cases}$$

The values of $\sigma$, $\gamma$, and $\mu$ are functions of n obtained from simulation results. Large values of $Z_n$ indicate departure from normality. Because the statistic $Z_n$ has an approximately standard normal distribution, this distribution is used to determine the p-values for n ≥ 4.
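The p-value rules for W can be sketched in Python as follows. For n = 3 the sketch uses the standard exact result $P(W \le w) = \frac{6}{\pi}\left(\arcsin\sqrt{w} - \arcsin\sqrt{3/4}\right)$, valid for $3/4 \le w \le 1$. The simulation-derived values $\mu$, $\sigma$, and $\gamma$ are not given in this section, so the sketch requires the caller to pass them in. All function names are hypothetical.

```python
import math
from statistics import NormalDist


def pvalue_n3(w):
    """Exact P(W <= w) for n = 3; W takes values in [0.75, 1]."""
    w = min(max(w, 0.75), 1.0)  # clamp to the support of W when n = 3
    return (6 / math.pi) * (math.asin(math.sqrt(w)) - math.asin(math.sqrt(0.75)))


def normalized_z(w, n, mu, sigma, gamma=None):
    """Normalizing transformation Z_n of W_n for 4 <= n <= 2000.

    mu, sigma, and gamma (needed only for n <= 11) are the simulation-derived
    functions of n. They must be supplied by the caller.
    """
    if not 0.0 < w < 1.0:
        raise ValueError("the transformation needs 0 < W < 1")
    if 4 <= n <= 11:
        if gamma is None:
            raise ValueError("gamma is required when 4 <= n <= 11")
        return (-math.log(gamma - math.log(1.0 - w)) - mu) / sigma
    if 12 <= n <= 2000:
        return (math.log(1.0 - w) - mu) / sigma
    raise ValueError("the transformation is defined for 4 <= n <= 2000")


def pvalue_from_z(z):
    """Upper-tail standard normal probability: large Z_n means non-normality."""
    return 1.0 - NormalDist().cdf(z)
```

Large $Z_n$ corresponds to small $W_n$. The upper tail of the standard normal distribution therefore gives the probability of a W statistic at or below the observed value.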

The Kolmogorov-Smirnov, Anderson-Darling and Cramér-von Mises tests for normality are based on the empirical distribution function (EDF) and are often referred to as EDF tests. EDF tests for a variety of non-normal distributions are available in the HISTOGRAM statement; see the section EDF Goodness-of-Fit Tests for details. For a thorough discussion of these tests, refer to D’Agostino and Stephens (1986).

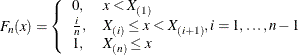

The empirical distribution function is defined for a set of n independent observations $X_1, \ldots, X_n$ with a common distribution function $F(x)$. Under the null hypothesis, $F(x)$ is the normal distribution. Denote the observations ordered from smallest to largest as $X_{(1)}, \ldots, X_{(n)}$. The empirical distribution function, $F_n(x)$, is defined as

$$F_n(x) = \begin{cases} 0, & x < X_{(1)} \\ \frac{i}{n}, & X_{(i)} \le x < X_{(i+1)}, \quad i = 1, \ldots, n-1 \\ 1, & X_{(n)} \le x \end{cases}$$

Note that $F_n(x)$ is a step function that takes a step of height $\frac{1}{n}$ at each observation. This function estimates the distribution function $F(x)$. At any value x, $F_n(x)$ is the proportion of observations less than or equal to x, while $F(x)$ is the probability of an observation less than or equal to x. EDF statistics measure the discrepancy between $F_n(x)$ and $F(x)$.
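A minimal Python sketch of $F_n(x)$ follows; the helper name `make_edf` is hypothetical. A binary search over the sorted observations counts how many are less than or equal to x. This yields the step function directly, including larger steps where observations are tied.

```python
from bisect import bisect_right


def make_edf(observations):
    """Return F_n, the empirical distribution function of the sample."""
    ordered = sorted(observations)
    n = len(ordered)

    def f_n(x):
        # proportion of observations less than or equal to x
        return bisect_right(ordered, x) / n

    return f_n


f_n = make_edf([2.0, 5.0, 3.0, 4.0])
print(f_n(1.0), f_n(3.0), f_n(3.5), f_n(5.0))  # 0.0 0.5 0.5 1.0
```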

The EDF tests make use of the probability integral transformation $U = F(X)$. If $F(x)$ is the continuous distribution function of X, the random variable U is uniformly distributed between 0 and 1. Given n observations $X_{(1)}, \ldots, X_{(n)}$, the values $U_{(i)} = F(X_{(i)})$ are computed. These values are used to compute the EDF test statistics, as described in the next three sections. The CAPABILITY procedure computes the associated p-values by interpolating internal tables of probability levels similar to those given by D’Agostino and Stephens (1986).
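The transformation $U_{(i)} = F(X_{(i)})$ can be sketched in Python, assuming that F is a normal distribution whose mean and standard deviation are estimated from the sample. The sketch uses the n − 1 divisor of `statistics.stdev`, which is an assumption of this sketch. The name `pit_values` is hypothetical, and the procedure's table interpolation for p-values is not shown.

```python
from statistics import NormalDist, mean, stdev


def pit_values(observations):
    """Return U_(i) = F(X_(i)) for the ordered sample.

    Assumption: F is normal, with mean and standard deviation estimated by
    the sample mean and the sample standard deviation (n - 1 divisor).
    """
    x = sorted(observations)
    fitted = NormalDist(mean(x), stdev(x))
    return [fitted.cdf(xi) for xi in x]
```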

The Kolmogorov-Smirnov statistic (D) is defined as

$$D = \sup_x \left| F_n(x) - F(x) \right|$$

The Kolmogorov-Smirnov statistic belongs to the supremum class of EDF statistics. This class of statistics is based on the largest vertical difference between $F(x)$ and $F_n(x)$.

The Kolmogorov-Smirnov statistic is computed as the maximum of $D^+$ and $D^-$. Here $D^+$ is the largest vertical distance between the EDF and the distribution function when the EDF is greater than the distribution function, and $D^-$ is the largest vertical distance when the EDF is less than the distribution function.

![\[ \begin{array}{lll} D^{+} & = & \max _{i}\left(\frac{i}{n} - U_{(i)}\right) \\ D^{-} & = & \max _{i}\left(U_{(i)} - \frac{i-1}{n}\right) \\ D & = & \max \left(D^{+},D^{-}\right) \end{array} \]](images/qcug_capability0099.png)

PROC CAPABILITY uses a modified Kolmogorov D statistic to test the data against a normal distribution with mean and variance equal to the sample mean and variance.
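Given the transformed values $U_{(i)}$, the $D^+$, $D^-$, and D formulas translate directly into Python. This sketch uses the hypothetical function name `ks_statistics` and computes only the statistic from those formulas. It does not reproduce the p-value lookup used by PROC CAPABILITY.

```python
def ks_statistics(u):
    """Return (D+, D-, D) from the transformed values U_(i)."""
    u = sorted(u)
    n = len(u)
    # enumerate() is 0-based, so index i corresponds to order statistic i + 1
    d_plus = max((i + 1) / n - ui for i, ui in enumerate(u))
    d_minus = max(ui - i / n for i, ui in enumerate(u))
    return d_plus, d_minus, max(d_plus, d_minus)
```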

The Anderson-Darling statistic and the Cramér-von Mises statistic belong to the quadratic class of EDF statistics. This class of statistics is based on the squared difference $\left(F_n(x) - F(x)\right)^2$. Quadratic statistics have the following general form:

$$Q = n \int_{-\infty}^{+\infty} \left(F_n(x) - F(x)\right)^2 \psi(x) \, dF(x)$$

The function $\psi(x)$ weights the squared difference $\left(F_n(x) - F(x)\right)^2$.

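The Anderson-Darling statistic ($A^2$) is defined as

$$A^2 = n \int_{-\infty}^{+\infty} \left(F_n(x) - F(x)\right)^2 \left[F(x)\left(1 - F(x)\right)\right]^{-1} dF(x)$$

Here the weight function is $\psi(x) = \left[F(x)\left(1 - F(x)\right)\right]^{-1}$.

The Anderson-Darling statistic is computed as

$$A^2 = -n - \frac{1}{n} \sum_{i=1}^{n} \left[(2i - 1)\log U_{(i)} + (2n + 1 - 2i)\log\left(1 - U_{(i)}\right)\right]$$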
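The computing formula for $A^2$ translates directly into Python. This sketch uses the hypothetical name `anderson_darling` and natural logarithms. It requires every $U_{(i)}$ to lie strictly between 0 and 1 so that both logarithms are defined.

```python
import math


def anderson_darling(u):
    """A^2 = -n - (1/n) * sum[(2i-1) log U_(i) + (2n+1-2i) log(1 - U_(i))]."""
    u = sorted(u)
    n = len(u)
    if not all(0.0 < ui < 1.0 for ui in u):
        raise ValueError("U values must lie strictly between 0 and 1")
    total = 0.0
    for i, ui in enumerate(u, start=1):  # i runs over 1..n
        total += (2 * i - 1) * math.log(ui) + (2 * n + 1 - 2 * i) * math.log(1.0 - ui)
    return -n - total / n
```

The second logarithmic term is a reindexed form of the other common expression, $(2i-1)\log\left(1 - U_{(n+1-i)}\right)$. It lets the sum run over each $U_{(i)}$ only once.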

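The Cramér-von Mises statistic ($W^2$) is defined as

$$W^2 = n \int_{-\infty}^{+\infty} \left(F_n(x) - F(x)\right)^2 dF(x)$$

Here the weight function is $\psi(x) = 1$.

The Cramér-von Mises statistic is computed as

$$W^2 = \sum_{i=1}^{n} \left(U_{(i)} - \frac{2i - 1}{2n}\right)^2 + \frac{1}{12n}$$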
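The computing formula for $W^2$ is equally short in Python; the name `cramer_von_mises` is hypothetical. Written this way, it is easy to see that the statistic reaches its smallest value, $1/(12n)$, when each $U_{(i)}$ equals $(2i-1)/(2n)$.

```python
def cramer_von_mises(u):
    """W^2 = sum (U_(i) - (2i-1)/(2n))^2 + 1/(12n)."""
    u = sorted(u)
    n = len(u)
    squared = sum((ui - (2 * i - 1) / (2 * n)) ** 2 for i, ui in enumerate(u, start=1))
    return squared + 1 / (12 * n)
```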

[16] In SAS 6.12 and earlier releases, the CAPABILITY procedure performed a Shapiro-Wilk test for sample sizes of 2000 or smaller, and a Kolmogorov-Smirnov test otherwise. The computed value of W was used to interpolate linearly within the range of simulated critical values given in Shapiro and Wilk (1965). In SAS 7, minor improvements were made to the algorithm for the Shapiro-Wilk test, as described in this section.