The NLPC Nonlinear Optimization Solver

Introductory Examples

The following introductory examples illustrate how to get started using the NLPC solver and also provide basic information about the use of PROC OPTMODEL.

An Unconstrained Problem

Consider the following example of minimizing the Rosenbrock function (Rosenbrock; 1960):

$$ f(x) = 100\,(x_2 - x_1^2)^2 + (1 - x_1)^2 $$

where $x = (x_1, x_2)$. The minimum function value is $f(x^*) = 0$ at $x^* = (1, 1)$. Note that this problem has no constraints.

The following PROC OPTMODEL statements can be used to solve this problem:

proc optmodel;

number a = 100;

var x{1..2};

min f = a*(x[2] - x[1]^2)^2 + (1 - x[1])^2;

solve with nlpc / tech=newtyp;

print x;

quit;

The VAR statement declares the decision variables $x_1$ and $x_2$. The MIN statement identifies the symbol $f$ that defines the objective function in terms of $x_1$ and $x_2$. The TECH=NEWTYP option in the SOLVE statement specifies that the Newton-type method with line search is used to solve this problem. Finally, the PRINT statement displays the solution to this problem.

The output that summarizes the problem characteristics and the solution obtained by the solver is displayed in Figure 12.1. Note that the solution has $x_1 = 1$ and $x_2 = 1$, and an objective value very close to $0$.

| Problem Summary | |
|---|---|
| Objective Sense | Minimization |
| Objective Function | f |
| Objective Type | Nonlinear |
| Number of Variables | 2 |
| Bounded Above | 0 |
| Bounded Below | 0 |
| Bounded Below and Above | 0 |
| Free | 2 |
| Fixed | 0 |
| Number of Constraints | 0 |
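To make the idea of a Newton-type method with line search concrete, the following Python sketch applies a textbook Newton iteration with Armijo backtracking to the same Rosenbrock function. It is only an illustration under stated assumptions: the starting point (-1.2, 1), the tolerances, and the steepest-descent fallback are choices made for this sketch, and nothing here is taken from the NLPC solver's actual implementation.

```python
# Illustrative sketch only: a textbook Newton iteration with Armijo
# backtracking. It is NOT the algorithm implemented by the NLPC solver.

def f(x1, x2, a=100.0):
    """Rosenbrock objective, same formula as the MIN statement."""
    return a * (x2 - x1**2) ** 2 + (1.0 - x1) ** 2

def grad(x1, x2, a=100.0):
    """Analytic gradient of f."""
    g1 = -4.0 * a * x1 * (x2 - x1**2) - 2.0 * (1.0 - x1)
    g2 = 2.0 * a * (x2 - x1**2)
    return g1, g2

def hess(x1, x2, a=100.0):
    """Analytic Hessian of f, returned as (h11, h12, h22)."""
    h11 = -4.0 * a * (x2 - 3.0 * x1**2) + 2.0
    h12 = -4.0 * a * x1
    h22 = 2.0 * a
    return h11, h12, h22

def newton_line_search(x1, x2, tol=1e-8, max_iter=200):
    """Return (x1, x2, iterations). tol and max_iter are assumed values."""
    for it in range(max_iter):
        g1, g2 = grad(x1, x2)
        if max(abs(g1), abs(g2)) < tol:
            return x1, x2, it
        h11, h12, h22 = hess(x1, x2)
        det = h11 * h22 - h12 * h12
        if h11 > 0.0 and det > 0.0:
            # Hessian is positive definite: solve H p = -g directly (2x2).
            p1 = (-g1 * h22 + g2 * h12) / det
            p2 = (-g2 * h11 + g1 * h12) / det
        else:
            # Fallback chosen for this sketch: steepest descent.
            p1, p2 = -g1, -g2
        slope = g1 * p1 + g2 * p2  # negative for a descent direction
        t, fx = 1.0, f(x1, x2)
        # Armijo backtracking: halve the step until f decreases enough.
        while f(x1 + t * p1, x2 + t * p2) > fx + 1e-4 * t * slope and t > 1e-12:
            t *= 0.5
        x1, x2 = x1 + t * p1, x2 + t * p2
    return x1, x2, max_iter

# Assumed starting point (a common choice for this test function).
x1_opt, x2_opt, iters = newton_line_search(-1.2, 1.0)
print(x1_opt, x2_opt, f(x1_opt, x2_opt), iters)
```

The line search is what keeps the iteration stable here: a full Newton step can increase the objective in the curved valley of the Rosenbrock function, and backtracking shortens such steps until the objective decreases.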

Bound Constraints on the Decision Variables

Decision variables often have bound constraints of the form

$$ l_i \le x_i \le u_i, \quad i = 1, \ldots, n $$

where $n$ is the number of decision variables. The bounds on the variables can be specified with the symbols ">=" and "<=" in the VAR statement.

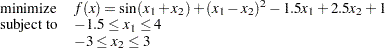

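Consider the following bound constrained problem (Hock and Schittkowski; 1981, Example 5):

$$
\begin{aligned}
\min \quad & f(x) = \sin(x_1 + x_2) + (x_1 - x_2)^2 - 1.5\,x_1 + 2.5\,x_2 + 1 \\
\text{subject to} \quad & -1.5 \le x_1 \le 4 \\
& -3 \le x_2 \le 3
\end{aligned}
$$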

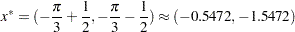

Given a starting point at $x^0 = (0, 0)$, there is a local minimum at

$$ x^* = \left(-\frac{\pi}{3} + \frac{1}{2},\; -\frac{\pi}{3} - \frac{1}{2}\right) \approx (-0.5472, -1.5472) $$

with the objective value $f(x^*) = -\frac{\sqrt{3}}{2} - \frac{\pi}{3} \approx -1.9132$. This problem can be formulated and solved by the following statements:

proc optmodel;

set S = 1..2;

number lb{S} = [-1.5 -3];

number ub{S} = [4 3];

number x0{S} = [0 0];

var x{i in S} >= lb[i] <= ub[i] init x0[i];

min obj = sin(x[1] + x[2]) + (x[1] - x[2])^2

- 1.5*x[1] + 2.5*x[2] + 1;

solve with nlpc / printfreq=1;

print x;

quit;

The starting point is specified with the keyword INIT in the VAR statement. As usual, the MIN statement identifies the objective function. Since there is no explicit optimization technique specified (using the TECH= option), the NLPC solver uses the trust region method, which is the default algorithm for problems of this size. The PRINTFREQ= option is used to display the iteration log during the optimization process.

In Figure 12.2, the problem is summarized at the top. Then, the details of the iterations are displayed. A message is printed to indicate that the default optimality criteria (ABSOPTTOL=0.001, OPTTOL=1E-6) were satisfied. (See the section Optimality Control for more information.) A summary of the solution shows that the trust region method was used for the optimization and that the solution found is optimal. It also shows the optimal objective value and the number of iterations taken to find the solution. The optimal solution is displayed at the end.

| Note: | Optimality criteria (ABSOPTTOL=0.001, OPTTOL=1E-6) are satisfied. |
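As an independent sanity check outside SAS, the following Python sketch evaluates the objective of the bound constrained example and its gradient at a candidate point. The candidate $(-\pi/3 + 1/2,\, -\pi/3 - 1/2)$ is the local minimizer commonly cited for this test problem and is treated here as an assumption; the sketch only confirms that it lies strictly inside the bounds and that the gradient vanishes there, which is the first-order condition a solver would test against its optimality tolerances.

```python
import math

# Objective from the MIN statement of the bound constrained example.
def obj(x1, x2):
    return math.sin(x1 + x2) + (x1 - x2) ** 2 - 1.5 * x1 + 2.5 * x2 + 1.0

# Analytic gradient of obj.
def obj_grad(x1, x2):
    c = math.cos(x1 + x2)
    return (c + 2.0 * (x1 - x2) - 1.5, c - 2.0 * (x1 - x2) + 2.5)

lb = (-1.5, -3.0)  # lower bounds, as in the lb array
ub = (4.0, 3.0)    # upper bounds, as in the ub array

# Assumed candidate minimizer (commonly cited for this test problem).
x_star = (-math.pi / 3 + 0.5, -math.pi / 3 - 0.5)

strictly_inside = all(l < v < u for l, v, u in zip(lb, x_star, ub))
grad_norm = max(abs(g) for g in obj_grad(*x_star))

print("strictly inside bounds:", strictly_inside)
print("max |gradient| at candidate:", grad_norm)
print("objective at candidate:", obj(*x_star))
```

Because neither bound is active at this point, the bound constraints play no role in the optimality conditions and a zero gradient is sufficient for first-order optimality.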

Linear Constraints on the Decision Variables

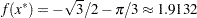

More general linear equality or inequality constraints have the form

$$ \sum_{j=1}^{n} a_{ij}\, x_j \;\{\le \mid = \mid \ge\}\; b_i, \quad i = 1, \ldots, m $$

where $n$ is the number of decision variables and $m$ is the number of constraints. These constraints can be specified with the CON statement.

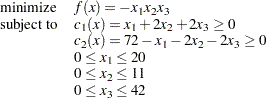

Consider, for example, Rosenbrock’s post office problem (Schittkowski; 1987, p. 74):

$$
\begin{aligned}
\min \quad & f(x) = -x_1 x_2 x_3 \\
\text{subject to} \quad & x_1 + 2x_2 + 2x_3 \ge 0 \\
& 72 - x_1 - 2x_2 - 2x_3 \ge 0 \\
& 0 \le x_1 \le 20 \\
& 0 \le x_2 \le 11 \\
& 0 \le x_3 \le 42
\end{aligned}
$$

Starting from $x^0 = (10, 10, 10)$, you can reach a minimum at $x^* = (20, 11, 15)$, with a corresponding objective value $f(x^*) = -3300$. You can use the following SAS code to formulate and solve this problem:

proc optmodel;

number ub{1..3} = [20 11 42];

var x{i in 1..3} >= 0 <= ub[i] init 10;

min f = -1*x[1]*x[2]*x[3];

con c1: x[1] + 2*x[2] + 2*x[3] >= 0;

con c2: 72 - x[1] - 2*x[2] - 2*x[3] >= 0;

solve with nlpc / tech=congra printfreq=1;

print x;

quit;

As usual, the VAR statement specifies the bounds on the variables and the starting point; the MIN statement identifies the objective function. In addition, the two CON statements describe the linear constraints $x_1 + 2x_2 + 2x_3 \ge 0$ and $72 - x_1 - 2x_2 - 2x_3 \ge 0$. To solve this problem, select the conjugate gradient optimization technique by using the TECH=CONGRA option. The PRINTFREQ= option is used to display the iteration log.

In Figure 12.3, you can find a problem summary and the iteration log. The solution summary and the solution are printed at the bottom.

| Note: | Optimality criteria (ABSOPTTOL=0.001, OPTTOL=1E-6) are satisfied. |

| Solution Summary | |
|---|---|
| Solver | NLPC/Conjugate Gradient |
| Objective Function | f |
| Solution Status | Optimal |
| Objective Value | -3300 |
| Iterations | 3 |
| Absolute Optimality Error | 0 |
| Relative Optimality Error | 0 |
| Absolute Infeasibility | 3.552714E-15 |
| Relative Infeasibility | 4.866731E-17 |
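The reported objective value of -3300 can be cross-checked by hand. The Python sketch below evaluates the objective, the two linear constraints, and the bounds of the post office problem at the starting point (10, 10, 10) and at the point (20, 11, 15). The latter is assumed here to be the minimizer, on the grounds that it reproduces the objective value shown in the solution summary; the helper names are hypothetical and exist only for this illustration.

```python
# Data from the PROC OPTMODEL statements of the post office problem.
ub = [20.0, 11.0, 42.0]

def f(x):
    """Objective: negative product of the three variables."""
    return -x[0] * x[1] * x[2]

def c1(x):
    """Constraint c1 body; feasible when the value is >= 0."""
    return x[0] + 2.0 * x[1] + 2.0 * x[2]

def c2(x):
    """Constraint c2 body; feasible when the value is >= 0."""
    return 72.0 - x[0] - 2.0 * x[1] - 2.0 * x[2]

def is_feasible(x, tol=1e-9):
    """Check bounds and both linear constraints (tol is an assumed slack)."""
    in_bounds = all(-tol <= xi <= ui + tol for xi, ui in zip(x, ub))
    return in_bounds and c1(x) >= -tol and c2(x) >= -tol

x0 = [10.0, 10.0, 10.0]      # starting point from INIT 10
x_star = [20.0, 11.0, 15.0]  # assumed minimizer for this check

for name, x in (("start", x0), ("candidate", x_star)):
    print(name, "f =", f(x), "c1 =", c1(x), "c2 =", c2(x),
          "feasible =", is_feasible(x))
```

At the candidate point the second constraint evaluates to exactly zero and the first two variables sit at their upper bounds, so the solution lies on the boundary of the feasible region rather than in its interior.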

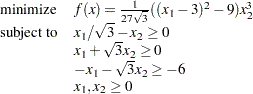

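You can formulate linear constraints in a more compact manner. Consider the following example (Hock and Schittkowski; 1981, test example 24):

$$
\begin{aligned}
\min \quad & f(x) = \frac{\left((x_1 - 3)^2 - 9\right) x_2^3}{27\sqrt{3}} \\
\text{subject to} \quad & 0.57735\,x_1 - x_2 \ge 0 \\
& x_1 + 1.732\,x_2 \ge 0 \\
& -x_1 - 1.732\,x_2 \ge -6 \\
& x_1 \ge 0, \; x_2 \ge 0
\end{aligned}
$$

The minimum function value is $f(x^*) = -1$ at $x^* = (3, \sqrt{3})$. Assume a feasible starting point, $x^0 = (1, 0.5)$.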

You can specify this model by using the following PROC OPTMODEL statements:

proc optmodel;

number a{1..3, 1..2} = [ .57735 -1

1 1.732

-1 -1.732 ];

number b{1..3} = [ 0 0 -6 ];

number x0{1..2} = [ 1 .5 ];

var x{i in 1..2} >= 0 init x0[i];

min f = ((x[1] - 3)^2 - 9) * x[2]^3 / (27*sqrt(3));

con cc {i in 1..3}: sum{j in 1..2} a[i,j]*x[j] >= b[i];

solve with nlpc / printfreq=1;

print x;

quit;

Note that instead of writing three individual linear constraints as in Rosenbrock’s post office problem, this example uses a two-dimensional array $a$ to represent the coefficient matrix of the linear constraints and a one-dimensional array $b$ for the right-hand side. Consequently, all three linear constraints are represented in a single CON statement. This method is especially useful for larger models and for models in which the constraint coefficients are subject to change.

The output showing the problem summary, the iteration log, the solution summary, and the solution is displayed in Figure 12.4.

| Note: | Optimality criteria (ABSOPTTOL=0.001, OPTTOL=1E-6) are satisfied. |

| Solution Summary | |
|---|---|
| Solver | NLPC/Trust Region |
| Objective Function | f |
| Solution Status | Optimal |
| Objective Value | -1.000043302 |
| Iterations | 2 |
| Absolute Optimality Error | 0 |
| Relative Optimality Error | 0 |
| Absolute Infeasibility | 2.920841E-16 |
| Relative Infeasibility | 2.527275E-16 |
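The compact, indexed form of the constraints maps directly onto a matrix and a right-hand-side vector. The Python sketch below mirrors the `a` and `b` arrays of the model, evaluates every row with one expression (the analogue of the single indexed CON statement), and then computes the vertex where the first and third constraints are both tight. Treating that vertex as the solver's solution is an assumption of this sketch, not something the output states; it is suggested by the fact that the objective there agrees with the reported value -1.000043302, which differs slightly from -1 because the coefficients .57735 and 1.732 are rounded.

```python
import math

# Coefficient matrix and right-hand side, copied from the model.
a = [[0.57735, -1.0],
     [1.0, 1.732],
     [-1.0, -1.732]]
b = [0.0, 0.0, -6.0]

def f(x):
    """Objective from the MIN statement."""
    return ((x[0] - 3.0) ** 2 - 9.0) * x[1] ** 3 / (27.0 * math.sqrt(3.0))

def slacks(x):
    """Row-wise a[i,.] * x - b[i]; each entry must be >= 0 for feasibility."""
    return [sum(a[i][j] * x[j] for j in range(2)) - b[i] for i in range(3)]

x0 = [1.0, 0.5]  # starting point from the x0 array
print("slacks at start:", slacks(x0))

# Vertex where rows 1 and 3 hold with equality (assumed active set):
#   0.57735*x1 - x2 = 0   and   x1 + 1.732*x2 = 6
v1 = 6.0 / (1.0 + a[1][1] * a[0][0])
vertex = [v1, a[0][0] * v1]
print("vertex:", vertex)
print("objective at vertex:", f(vertex))
print("objective at (3, sqrt(3)):", f([3.0, math.sqrt(3.0)]))
```

Changing a coefficient then only requires editing the data arrays; the constraint expression itself stays untouched, which is the maintainability benefit the compact formulation is meant to provide.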

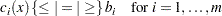

Nonlinear Constraints on the Decision Variables

General nonlinear equality or inequality constraints have the form

$$ c_i(x) \;\{\le \mid = \mid \ge\}\; b_i, \quad i = 1, \ldots, m $$

where $c_i(x)$ is a general nonlinear constraint function. These constraints can be specified with the CON statement.

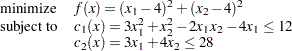

Consider the following nonlinearly constrained problem (Avriel; 1976, p. 456):

$$
\begin{aligned}
\min \quad & f(x) = (x_1 - 4)^2 + (x_2 - 4)^2 \\
\text{subject to} \quad & 3x_1^2 + x_2^2 - 2x_1 x_2 - 4x_1 \le 12 \\
& 3x_1 + 4x_2 \le 28
\end{aligned}
$$

An initial point is given at $x^0 = (2, 0)$. You can use the following SAS code to formulate and solve this problem:

proc optmodel;

num x0{1..2} = [2 0];

var x{i in 1..2} init x0[i];

min f = (x[1] - 4)^2 + (x[2] - 4)^2;

con c1: 3*x[1]^2 + x[2]^2 - 2*x[1]*x[2] - 4*x[1] <= 12;

con c2: 3*x[1] + 4*x[2] <= 28;

solve with nlpc / tech=qne printfreq=1;

print x;

quit;

Note that c1 is a nonlinear constraint and c2 is a linear constraint. Both can be specified by using the CON statement. The PROC OPTMODEL modeling language automatically recognizes the type of each constraint. The experimental quasi-Newton method is requested to solve this problem.

A problem summary is shown in Figure 12.5. Figure 12.6 displays the iteration log. Note that the quasi-Newton method is an infeasible point algorithm; that is, the iterates can violate the nonlinear constraints until the optimal solution is found. The "Infeasibility" column of the iteration log displays the maximum constraint violation at each iterate. The solution summary and the solution are shown in Figure 12.7.

| Problem Summary | |
|---|---|
| Objective Sense | Minimization |
| Objective Function | f |
| Objective Type | Quadratic |
| Number of Variables | 2 |
| Bounded Above | 0 |
| Bounded Below | 0 |
| Bounded Below and Above | 0 |
| Free | 2 |
| Fixed | 0 |
| Number of Constraints | 2 |
| Linear LE (<=) | 1 |
| Linear EQ (=) | 0 |
| Linear GE (>=) | 0 |
| Linear Range | 0 |
| Nonlinear LE (<=) | 1 |
| Nonlinear EQ (=) | 0 |
| Nonlinear GE (>=) | 0 |
| Nonlinear Range | 0 |

| Iteration Log: Quasi-Newton Method with BFGS Update | | | | | | |
|---|---|---|---|---|---|---|
| Iter | Function Calls | Objective Value | Infeasibility | Optimality Error | Step Size | Predicted Function Reduction |
| 0 | 0 | 20.0000 | 0 | 1.0000 | . | . |
| 1 | 1 | 0.9570 | 0 | 1.0275 | 1.000 | 1.7256 |
| 2 | 2 | 0.0942 | 0.0480 | 0.1134 | 1.000 | 0.0783 |
| 3 | 3 | 0.1335 | 0.000538 | 0.004636 | 1.000 | 0.00103 |
| 4 | 4 | 0.1340 | 2.9482E-7 | 0.000336 | 1.000 | 5.148E-7 |
| 5 | 5 | 0.1340 | 2.1822E-9 | 2.4734E-6 | 1.000 | 4.2E-9 |
| 6 | 6 | 0.1340 | 1.157E-13 | 6.4421E-9 | 1.000 | 2.101E-9 |

| Note: | Optimality criteria (ABSOPTTOL=0.001, OPTTOL=1E-6) are satisfied. |
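To illustrate what an infeasibility measure of this kind captures, the Python sketch below computes the objective and an unscaled maximum constraint violation for the nonlinearly constrained example. The function `max_violation` is a hypothetical helper written for this illustration; the solver may scale or define its infeasibility differently, so only the qualitative behavior should be compared with the iteration log.

```python
def f(x):
    """Objective from the MIN statement."""
    return (x[0] - 4.0) ** 2 + (x[1] - 4.0) ** 2

def max_violation(x):
    """Largest amount by which c1 or c2 is violated (0 if both hold).

    Hypothetical, unscaled measure used only in this sketch.
    """
    c1 = 3.0 * x[0] ** 2 + x[1] ** 2 - 2.0 * x[0] * x[1] - 4.0 * x[0] - 12.0
    c2 = 3.0 * x[0] + 4.0 * x[1] - 28.0
    return max(0.0, c1, c2)

x0 = (2.0, 0.0)       # initial point from the x0 array
x_free = (4.0, 4.0)   # minimizer of f when the constraints are ignored

print("start:         f =", f(x0), " violation =", max_violation(x0))
print("unconstrained: f =", f(x_free), " violation =", max_violation(x_free))
```

The starting point is feasible with an objective of 20, which matches iteration 0 of the log. The unconstrained minimizer (4, 4) violates the nonlinear constraint c1, so the constrained optimum must lie on the boundary of c1 with a strictly positive objective value, consistent with the final value of about 0.1340. An intermediate objective below that value, such as 0.0942 at iteration 2, is possible only because that iterate is slightly infeasible.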

Copyright © SAS Institute, Inc. All Rights Reserved.