| Time Series Analysis and Examples |

Example 10.2: Kalman Filtering: Likelihood Function Evaluation

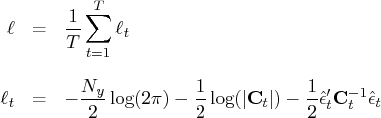

In the following example, the log-likelihood function of the

SSM is computed by using prediction error decomposition.

The annual real GNP series, ![]() , can be decomposed as

, can be decomposed as

It is straightforward to construct the SSM of the real GNP series.

The LIK module computes the average log-likelihood function.

First, the average log-likelihood function is computed

by using the default initial values: Z0=0 and VZ0=![]() I.

The second call of module LIK produces the

average log-likelihood function with the given

initial conditions: Z0=0 and VZ0=

I.

The second call of module LIK produces the

average log-likelihood function with the given

initial conditions: Z0=0 and VZ0=![]() I.

You can notice a sizable difference between the uncertain

initial condition (VZ0=

I.

You can notice a sizable difference between the uncertain

initial condition (VZ0=![]() I) and the almost deterministic

initial condition (VZ0=

I) and the almost deterministic

initial condition (VZ0=![]() I) in Output 10.2.1.

I) in Output 10.2.1.

Finally, the first 15 observations of one-step predictions, filtered values, and real GNP series are produced under the moderate initial condition (VZ0=10I). The data are the annual real GNP for the years 1909 to 1969. Here is the code:

title 'Likelihood Evaluation of SSM';

title2 'DATA: Annual Real GNP 1909-1969';

data gnp;

input y @@;

datalines;

116.8 120.1 123.2 130.2 131.4 125.6 124.5 134.3

135.2 151.8 146.4 139.0 127.8 147.0 165.9 165.5

179.4 190.0 189.8 190.9 203.6 183.5 169.3 144.2

141.5 154.3 169.5 193.0 203.2 192.9 209.4 227.2

263.7 297.8 337.1 361.3 355.2 312.6 309.9 323.7

324.1 355.3 383.4 395.1 412.8 406.0 438.0 446.1

452.5 447.3 475.9 487.7 497.2 529.8 551.0 581.1

617.8 658.1 675.2 706.6 724.7

;

proc iml;

start lik(y,a,b,f,h,var,z0,vz0);

nz = nrow(f);

n = nrow(y);

k = ncol(y);

const = k*log(8*atan(1));

if ( sum(z0 = .) | sum(vz0 = .) ) then

call kalcvf(pred,vpred,filt,vfilt,y,0,a,f,b,h,var);

else

call kalcvf(pred,vpred,filt,vfilt,y,0,a,f,b,h,var,z0,vz0);

et = y - pred*h`;

sum1 = 0;

sum2 = 0;

do i = 1 to n;

vpred_i = vpred[(i-1)*nz+1:i*nz,];

et_i = et[i,];

ft = h*vpred_i*h` + var[nz+1:nz+k,nz+1:nz+k];

sum1 = sum1 + log(det(ft));

sum2 = sum2 + et_i*inv(ft)*et_i`;

end;

return(-.5*const-.5*(sum1+sum2)/n);

finish;

start main;

use gnp;

read all var {y};

f = {1 1, 0 1};

h = {1 0};

a = j(nrow(f),1,0);

b = j(nrow(h),1,0);

var = diag(j(1,nrow(f)+ncol(y),1e-3));

/*-- initial values are computed --*/

z0 = j(1,nrow(f),.);

vz0 = j(nrow(f),nrow(f),.);

logl = lik(y,a,b,f,h,var,z0,vz0);

print 'No initial values are given', logl;

/*-- initial values are given --*/

z0 = j(1,nrow(f),0);

vz0 = 1e-3#i(nrow(f));

logl = lik(y,a,b,f,h,var,z0,vz0);

print 'Initial values are given', logl;

z0 = j(1,nrow(f),0);

vz0 = 10#i(nrow(f));

call kalcvf(pred,vpred,filt,vfilt,y,1,a,f,b,h,var,z0,vz0);

print y pred filt;

finish;

run;

Output 10.2.1: Average Log Likelihood of SSM

|

|

|

| DATA: Annual Real GNP 1909-1969 |

| No initial values are given |

| LOGL |

| -26314.66 |

| Initial values are given |

| LOGL |

| -91884.41 |

Output 10.2.2 shows the observed data, the predicted state vectors, and the filtered state vectors for the first 16 observations.

Output 10.2.2: Filtering and One-Step Prediction

Copyright © 2009 by SAS Institute Inc., Cary, NC, USA. All rights reserved.

![{h}& = & (1, 0) \ {f}& = & [ 1 & 1 \ 0 & 1 ] \ {z}_t & = & (\mu_t, \beta... ...\eta 1} & 0 & 0 \ 0 & \sigma^2_{\eta 2} & 0 \ 0 & 0 & \sigma^2_\epsilon ]](images/timeseriesexpls_timeseriesexplseq430.gif)