Nonlinear Optimization Examples

Kuhn-Tucker Conditions

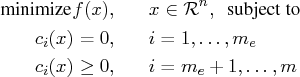

The nonlinear programming (NLP) problem with one objective function $f$ and $m$ constraint functions $c_i$, which are continuously differentiable, is defined as follows:

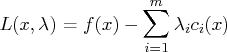

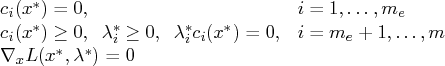

If the functions $f$ and $c_i$ are twice differentiable, the point $x^*$ is an isolated local minimizer of the NLP problem if there exists a vector $\lambda^* = (\lambda_1^*, \ldots, \lambda_m^*)$ that meets the following conditions:

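- Kuhn-Tucker conditions

  With the Lagrange function $L(x, \lambda) = f(x) - \sum_{i=1}^{m} \lambda_i c_i(x)$:

  $$\nabla_x L(x^*, \lambda^*) = 0$$

  $$c_i(x^*) = 0 \quad \text{for each equality constraint}$$

  $$c_i(x^*) \ge 0, \quad \lambda_i^* \ge 0, \quad \lambda_i^* c_i(x^*) = 0 \quad \text{for each inequality constraint}$$

- second-order condition

  Each nonzero vector $y \in \mathbb{R}^n$ with $y^T \nabla_x c_i(x^*) = 0$ for every equality constraint and for every inequality constraint with $\lambda_i^* > 0$ satisfies

  $$y^T \nabla_x^2 L(x^*, \lambda^*)\, y > 0$$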
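The Kuhn-Tucker conditions can be checked numerically at a candidate point. The following is a minimal sketch, not part of the original documentation. It assumes that equality constraints come first, that inequality constraints are written as $c_i(x) \ge 0$, and that the Lagrange function is $L(x, \lambda) = f(x) - \sum_i \lambda_i c_i(x)$. The function name `kuhn_tucker_residuals` and the small test problem are hypothetical and chosen only for illustration.

```python
def kuhn_tucker_residuals(grad_f, cons, grads_c, n_eq, x, lam):
    """Return (stationarity, feasibility, complementarity) violations.

    Assumed conventions (not taken from the source): the first n_eq
    constraints are equalities c_i(x) = 0, the rest are inequalities
    c_i(x) >= 0, and L(x, lam) = f(x) - sum_i lam_i * c_i(x).
    """
    # Gradient of the Lagrange function with respect to x.
    g = list(grad_f(x))
    for lam_i, grad_c in zip(lam, grads_c):
        g = [gj - lam_i * gcj for gj, gcj in zip(g, grad_c(x))]
    stationarity = max(abs(gj) for gj in g)

    c = [ci(x) for ci in cons]
    # Equalities are violated by |c_i|, inequalities by max(0, -c_i).
    feasibility = max(
        [abs(ci) for ci in c[:n_eq]]
        + [max(0.0, -ci) for ci in c[n_eq:]]
        + [0.0]
    )
    # Inequality multipliers must be nonnegative and complementary.
    complementarity = max(
        [max(0.0, -li) for li in lam[n_eq:]]
        + [abs(li * ci) for li, ci in zip(lam[n_eq:], c[n_eq:])]
        + [0.0]
    )
    return stationarity, feasibility, complementarity


# Hypothetical test problem: minimize x1^2 + x2^2
# subject to x1 + x2 - 1 = 0 (equality) and x1 >= 0 (inequality).
grad_f = lambda x: [2.0 * x[0], 2.0 * x[1]]
cons = [lambda x: x[0] + x[1] - 1.0, lambda x: x[0]]
grads_c = [lambda x: [1.0, 1.0], lambda x: [1.0, 0.0]]

# Candidate solution and multipliers; the inequality is inactive here.
residuals = kuhn_tucker_residuals(
    grad_f, cons, grads_c, 1, [0.5, 0.5], [1.0, 0.0]
)
print(residuals)
```

All three residuals are zero at the candidate point of this small problem. In a real application they would be compared against a tolerance rather than against exact zero.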

In practice, you cannot expect the constraint functions $c_i(x^*)$ to vanish within machine precision, and determining the set of active constraints at the solution $x^*$ might not be simple.
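One common way to handle this is to treat a constraint as active when its value lies within a small tolerance of zero. The sketch below illustrates the idea and shows how the result depends on the tolerance. The function name `active_set`, the default tolerance, and the sample constraint values are assumptions made for illustration; they are not taken from the original documentation.

```python
def active_set(c_values, n_eq, tol=1e-8):
    """Return 0-based indices of constraints treated as active.

    Assumed convention: the first n_eq entries of c_values are equality
    constraints (always active); an inequality constraint is treated as
    active when |c_i| <= tol.
    """
    active = list(range(n_eq))
    active += [
        i for i in range(n_eq, len(c_values)) if abs(c_values[i]) <= tol
    ]
    return active


# Hypothetical constraint values at a computed solution: none is exactly
# zero, even for constraints that are active in exact arithmetic.
c_values = [3e-12, 2.5e-10, 0.5, -1e-9]

loose = active_set(c_values, n_eq=1, tol=1e-8)
tight = active_set(c_values, n_eq=1, tol=1e-10)
print(loose, tight)
```

With the looser tolerance, the second and fourth constraints are classified as active. With the tighter one, only the equality constraint remains, which shows how sensitive the active set can be to this choice.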

Copyright © 2009 by SAS Institute Inc., Cary, NC, USA. All rights reserved.