Running a Multiple Logs Job

Solution

You can process the job in the multiple log job template. If you have not done so already, you should run a copy of the setup job for the multiple logs template, which is named clk_0010_setup_basic_multi_job. When you actually process the data, you should run a copy of the multiple logs job, which is named clk_0200_load_multi_dds. By running a copy, you protect the original template. For information about running the setup job and creating a copy of the original job, see Copying the Basic (Multiple) Web Log Templates Folder.

Tasks

Review and Prepare the Job

You can

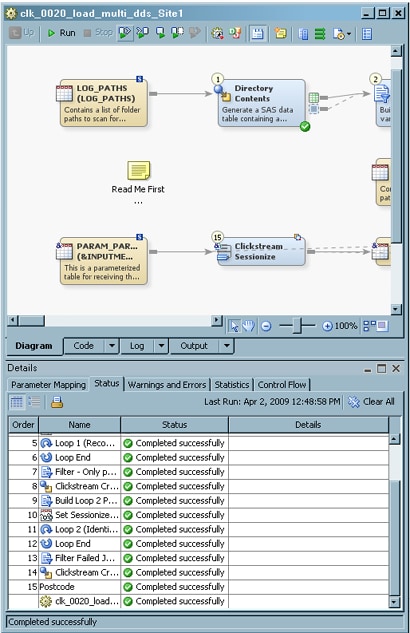

examine the multiple logs template job on the Diagram tab of the SAS Data Integration Studio Job Editor before you run it. You can also configure the job to change the

list of logs that you process, set the number of groups that are used

in the sessionizing loop, and specify parallel and multiple processing

options.

- For an overview of how the job is processed, see Stages in the Basic (Multiple) Web Log Template Job.

- Open the Loop Options tabs in the properties windows for the two Loop transformations and make sure that the appropriate parallel processing settings are specified. Be particularly careful to ensure that the path specified in the Location on host for log and output files field is correct. For information about the prerequisites for parallel processing, see the “About Parallel Processing” topic in the Working with Iterative Jobs and Parallel Processing chapter in the SAS Data Integration Studio: User's Guide. The job fails if parallel processing has been enabled but the parallel processing prerequisites have not been satisfied.

- Open the Parameters tab in the properties window for the template job and review the two parameters Number of Distinct Clickstream Parse Output Paths and Number of Groups into which data should be divided for the job. To access these values, select a parameter and click Edit to open the Edit Prompt window. Then, click Prompt Type and Values to review the value specified in the Default value field. Click OK until you return to the Diagram tab.

  Note: The values for these parameters must match the values entered for the setup job. The setup job values are entered on the Options tab in the properties window for the Setup transformation in the setup job. If you change either of these values in the template job, you need to rerun the setup job to make sure that the settings match and that the supporting file system structure is generated.

Run the Job and Examine the Output

-

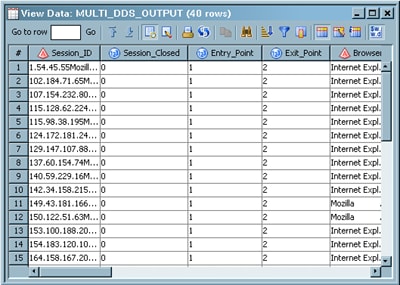

If the job completes without error, right-click the MULTI_DDS_OUTPUT table at the end of the job and select Open from the pop-up menu.If the job does not complete successfully, then you might want to examine the logs for each loop in the job. Since most of the processing is done in the loop portion of the job, this is where most errors occur. Examine the Status tab to determine where the error occurred and refer to the log for that part of the job. A SAS log is saved for each pass through the loops in the Multiple Log Template Job. These logs are placed in a folder called Process Logs under the Loop1 and Loop2 folders in the structure that is created by the template setup job.In order to know which file you are looking for, you should understand the naming conventions for these log files. The files in the ProcessLogs folder are named

Lnn_x.log, wherennis a unique number for this particular Loop transformation andxis a number that represents the iteration of the current loop. For example, if you process 200 Web logs, then the ProcessLogs folder for Loop1 (Clickstream Log transformation and Clickstream Parse transformation) contains 200 logs named Lnn_1.log to Lnn_200.log (wherennis some constant number).The ProcessLogs folder for Loop2 (Clickstream Sessionize transformation) has the same naming convention. However, the log folder for Loop2 contains one log for each group. For example, if the Clickstream Parse transformation in the first loop generated five groups, then the logs are named Lnn_1.log to Lnn_5.log (where nn is a constant number).
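When a job processes hundreds of Web logs, it can help to script the search for the failing iteration. The following Python sketch is not part of the SAS templates. It assumes only the Lnn_x.log naming convention described above and that SAS reports errors on log lines that begin with ERROR. The function names are hypothetical, and the number of digits in nn and x is not restricted because the documentation does not state it.

```python
import re
from pathlib import Path

# Lnn_x.log: nn identifies the Loop transformation, x is the loop iteration.
# Assumption: both parts are plain decimal digits of any length.
LOG_NAME = re.compile(r"^L(\d+)_(\d+)\.log$")


def parse_log_name(name):
    """Return (loop_id, iteration) for a name like 'L12_7.log', else None."""
    match = LOG_NAME.match(name)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def logs_in_iteration_order(process_logs_dir):
    """List the loop logs in a ProcessLogs folder, sorted by iteration number."""
    found = []
    for path in Path(process_logs_dir).iterdir():
        parsed = parse_log_name(path.name)
        if parsed is not None:
            found.append((parsed[1], path))
    # Sort numerically so that iteration 10 follows 9 rather than 1.
    return [path for _, path in sorted(found, key=lambda item: item[0])]


def logs_with_errors(process_logs_dir):
    """Return the loop logs that contain at least one line starting with ERROR."""
    flagged = []
    for path in logs_in_iteration_order(process_logs_dir):
        text = path.read_text(errors="replace")
        if any(line.startswith("ERROR") for line in text.splitlines()):
            flagged.append(path)
    return flagged
```

To use it, call logs_with_errors with the path to the ProcessLogs folder under Loop1 or Loop2 in the structure created by your setup job, and then open the returned files to read the full error context.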