The SURVEYPHREG Procedure


Taylor Series Linearization

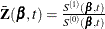

The Taylor series linearization method is the default variance estimation method used by PROC SURVEYPHREG. See the section Notation and Estimation for definitions of the notation used in this section. Let

![\[ S^{(r)}(\bbeta ,t) = \sum _{A} w_{hij}y_{hij}(t) \exp \left( \bbeta ’\bZ _{hij}(t) \right) \bZ _{hij}^{\bigotimes r}(t) \]](images/statug_surveyphreg0071.png)

where $r = 0, 1$. Let $A$ be the set of indices in the selected sample. Let

![\[ \mb{a}^{\bigotimes r} = \left\{ \begin{array}{lrl} \mb{a} \mb{a}‘ & ,& r = 1 \\ I_{\text {dim}(\mb{a})} & , & r = 0 \end{array} \right. \]](images/statug_surveyphreg0073.png)

and let $I_{\text{dim}(\mathbf{a})}$ be the identity matrix of appropriate dimension.

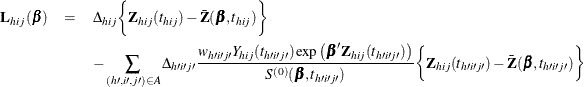

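Let $\bar{\mathbf{Z}}(\boldsymbol{\beta}, t) = S^{(1)}(\boldsymbol{\beta}, t)/S^{(0)}(\boldsymbol{\beta}, t)$. The score residual for the $(h,i,j)$th subject is denoted $\mathbf{L}_{hij}(\boldsymbol{\beta})$.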

For TIES=EFRON, the computation of the score residuals is modified to comply with the Efron partial likelihood. See the section Residuals for more information.

The Taylor series estimate of the covariance matrix of $\hat{\boldsymbol{\beta}}$ is

![\[ \hat{\bV }(\hat\bbeta ) = \mc{I}^{-1}(\hat{\bbeta }) \mb{G} \mc{I}^{-1}(\hat{\bbeta }) \]](images/statug_surveyphreg0078.png)

where $\mathcal{I}(\hat{\boldsymbol{\beta}})$ is the observed information matrix and the $p \times p$ matrix $\mathbf{G}$ is defined as

![\[ \mb{G}=\frac{n-1}{n-p} \sum _{h=1}^ H { \frac{n_ h(1-f_ h)}{n_ h-1} \sum _{i=1}^{n_ h} { (\mb{e}_{hi+}-\bar{\mb{e}}_{h\cdot \cdot })’ (\mb{e}_{hi+}-\bar{\mb{e}}_{h\cdot \cdot }) } } \]](images/statug_surveyphreg0081.png)

The observed residuals, their sums and means are defined as follows:

![\begin{eqnarray*} \mb{e}_{hij} & =& w_{hij} \mb{L}_{hij}(\hat\bbeta )\\[0.05in] \mb{e}_{hi+}& =& \sum _{j=1}^{m_{hi}}\mb{e}_{hij} \\ \bar{\mb{e}}_{h\cdot \cdot } & =& \frac1{n_ h}\sum _{i=1}^{n_ h}\mb{e}_{hi+} \end{eqnarray*}](images/statug_surveyphreg0082.png)
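The following pure-Python sketch shows how the cluster sums $\mathbf{e}_{hi+}$, the stratum means $\bar{\mathbf{e}}_{h\cdot\cdot}$, and the matrix $\mathbf{G}$ fit together. It assumes the score residuals $\mathbf{L}_{hij}(\hat{\boldsymbol{\beta}})$ have already been computed; the function name `taylor_g_matrix`, the record layout, and the toy data are hypothetical, and $n$ is passed in explicitly because its definition lives in the Notation and Estimation section. It is an illustration of the formulas above, not how PROC SURVEYPHREG is implemented; in particular, it simply rejects strata with fewer than two clusters.

```python
from collections import defaultdict


def taylor_g_matrix(records, n, p, f, vadjust=True):
    """Sketch of the p x p matrix G used in the Taylor series variance estimate.

    records : iterable of (h, i, w, L) tuples: stratum h, cluster (PSU) i,
              weight w_hij, and the score residual vector L_hij(beta_hat).
    n       : the n in the (n-1)/(n-p) factor; passed in explicitly because
              its definition is given in the Notation and Estimation section.
    f       : dict mapping each stratum h to its sampling fraction f_h.
    vadjust : apply the (n-1)/(n-p) factor (False mimics VADJUST=NONE).
    """
    # e_hi+ = sum over units j in cluster (h, i) of e_hij = w_hij * L_hij
    totals = defaultdict(lambda: [0.0] * p)
    for h, i, w, L in records:
        tot = totals[(h, i)]
        for k in range(p):
            tot[k] += w * L[k]

    # collect the cluster totals e_hi+ within each stratum h
    by_stratum = defaultdict(list)
    for (h, _i), tot in totals.items():
        by_stratum[h].append(tot)

    G = [[0.0] * p for _ in range(p)]
    for h, clusters in by_stratum.items():
        n_h = len(clusters)
        if n_h < 2:
            # n_h / (n_h - 1) is undefined; this sketch simply rejects such strata
            raise ValueError(f"stratum {h!r} has fewer than two clusters")
        # e-bar_h.. = mean of the n_h cluster totals in stratum h
        mean = [sum(c[k] for c in clusters) / n_h for k in range(p)]
        scale = n_h * (1.0 - f[h]) / (n_h - 1)
        for c in clusters:
            d = [c[k] - mean[k] for k in range(p)]
            # add scale * (outer product of the deviation with itself)
            for a in range(p):
                for b in range(p):
                    G[a][b] += scale * d[a] * d[b]

    if vadjust:
        factor = (n - 1) / (n - p)
        G = [[factor * x for x in row] for row in G]
    return G


# Toy data: two strata, p = 2 (hypothetical numbers)
records = [
    ("A", 1, 1.0, [1.0, 0.0]),
    ("A", 1, 2.0, [0.5, 1.0]),
    ("A", 2, 1.0, [-1.0, 1.0]),
    ("B", 1, 1.0, [1.0, 1.0]),
    ("B", 2, 1.0, [2.0, 0.0]),
    ("B", 3, 1.0, [0.0, 2.0]),
]
f = {"A": 0.0, "B": 0.5}
# assumption for this toy example only: n = number of observations (6)
G_none = taylor_g_matrix(records, n=6, p=2, f=f, vadjust=False)
G_adj = taylor_g_matrix(records, n=6, p=2, f=f)
```

With `vadjust=False` the toy data give $\mathbf{G} = \begin{pmatrix} 10.5 & 1.5 \\ 1.5 & 2.5 \end{pmatrix}$; the default multiplies every element by $(6-1)/(6-2) = 1.25$.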

The factor $(n-1)/(n-p)$ in the computation of the matrix $\mathbf{G}$ reduces the small sample bias that is associated with using the estimated function to calculate deviations (Fuller et al. 1989, pp. 77–81). For simple random sampling, this factor contributes to the degrees of freedom correction applied to the residual mean square for ordinary least squares in which $p$ parameters are estimated. By default, the procedure uses this adjustment in the variance estimation. If you do not want to use this multiplier in the variance estimator, then specify the VADJUST=NONE option in the MODEL statement.
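Because $\hat{\mathbf{V}}(\hat{\boldsymbol{\beta}}) = \mathcal{I}^{-1}(\hat{\boldsymbol{\beta}})\,\mathbf{G}\,\mathcal{I}^{-1}(\hat{\boldsymbol{\beta}})$ is linear in $\mathbf{G}$, the default adjustment scales every element of the estimated covariance matrix by exactly $(n-1)/(n-p)$ relative to VADJUST=NONE. The pure-Python sketch below checks this on a hypothetical $2 \times 2$ information matrix and $\mathbf{G}$ (illustrative numbers, not PROC SURVEYPHREG output); the helper names `invert`, `matmul`, and `sandwich` are made up for this example.

```python
def invert(M):
    """Inverse of a small dense matrix by Gauss-Jordan elimination with partial pivoting."""
    m = len(M)
    # augment M with the identity matrix: [M | I]
    A = [[float(x) for x in row] + [1.0 if r == c else 0.0 for c in range(m)]
         for r, row in enumerate(M)]
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(A[r][col]))
        if A[piv][col] == 0.0:
            raise ValueError("matrix is singular")
        A[col], A[piv] = A[piv], A[col]
        pv = A[col][col]
        A[col] = [x / pv for x in A[col]]
        for r in range(m):
            if r != col:
                fac = A[r][col]
                A[r] = [x - fac * y for x, y in zip(A[r], A[col])]
    # the right half now holds M^{-1}
    return [row[m:] for row in A]


def matmul(X, Y):
    """Plain matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def sandwich(info, G):
    """V = I^{-1} G I^{-1}, the Taylor series (sandwich) covariance estimate."""
    info_inv = invert(info)
    return matmul(matmul(info_inv, G), info_inv)


# Hypothetical inputs for p = 2 parameters (illustrative numbers only)
info = [[4.0, 1.0], [1.0, 3.0]]      # observed information matrix I(beta_hat)
G_none = [[2.0, 0.5], [0.5, 1.0]]    # G without the multiplier (as with VADJUST=NONE)
n, p = 50, 2
factor = (n - 1) / (n - p)           # default adjustment, 49/48 here
G_adj = [[factor * x for x in row] for row in G_none]

V_none = sandwich(info, G_none)
V_adj = sandwich(info, G_adj)        # equals factor * V_none elementwise
```

For these inputs $\mathcal{I}^{-1} = \tfrac{1}{11}\begin{pmatrix} 3 & -1 \\ -1 & 4 \end{pmatrix}$, so the unadjusted estimate is $\tfrac{1}{121}\begin{pmatrix} 16 & -3.5 \\ -3.5 & 14 \end{pmatrix}$; with $n = 50$ and $p = 2$ the adjustment inflates it by only about 2%, and the factor approaches 1 as $n$ grows relative to $p$.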
