The HPFMM Procedure

Prior Distributions

The following list displays the parameterization of the prior distributions that apply when the HPFMM procedure uses a conjugate sampler, that is, in mixture models without model effects and with certain basic distributions (binary, binomial, exponential, Poisson, normal, and t). In these models, you specify the parameters a and b in the formulas below in the MUPRIORPARMS= and PHIPRIORPARMS= options in the BAYES statement.

- Beta(a, b)

![\[ f(y) = \frac{\Gamma (a+b)}{\Gamma (a)\Gamma (b)} \, y^{a-1} \, (1-y)^{b-1} \]](images/statug_hpfmm0289.png)

where

,

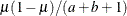

,  . In this parameterization, the mean and variance of the distribution are

. In this parameterization, the mean and variance of the distribution are  and

and  , respectively. The beta distribution is the prior distribution for the success probability in binary and binomial distributions

when conjugate sampling is used.

, respectively. The beta distribution is the prior distribution for the success probability in binary and binomial distributions

when conjugate sampling is used.
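As a rough illustration of this parameterization, the following Python sketch computes the mean and variance of a Beta(a, b) prior and applies the textbook beta-binomial conjugate update. The function names and the example counts are hypothetical, and the code is not the HPFMM procedure's internal implementation.

```python
def beta_moments(a, b):
    """Mean and variance of Beta(a, b) in the parameterization above."""
    if a <= 0 or b <= 0:
        raise ValueError("a and b must be positive")
    mu = a / (a + b)
    var = mu * (1 - mu) / (a + b + 1)
    return mu, var


def beta_binomial_update(a, b, successes, trials):
    """Textbook conjugate update: Beta(a, b) -> Beta(a + s, b + n - s)."""
    if not 0 <= successes <= trials:
        raise ValueError("need 0 <= successes <= trials")
    return a + successes, b + trials - successes


# Hypothetical example: Beta(2, 3) prior, then 7 successes in 10 trials.
prior_mean, prior_var = beta_moments(2.0, 3.0)
post_a, post_b = beta_binomial_update(2.0, 3.0, successes=7, trials=10)
post_mean, post_var = beta_moments(post_a, post_b)
print(prior_mean, prior_var)
print(post_a, post_b, post_mean)
```

The posterior stays in the beta family, which is what makes the prior conjugate for binary and binomial data.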

- Dirichlet($a_1, \ldots, a_k$)

![\[ f(\mb{y}) = \frac{\Gamma \left(\sum _{i=1}^ k a_ i\right)}{\prod _{i=1}^ k \, \Gamma (a_ i)} y_1^{a_1-1} \, \cdots \, y_ k^{a_ k-1} \]](images/statug_hpfmm0295.png)

where $\sum_{i=1}^k y_i = 1$ and the parameters $a_i > 0$. If any $a_i$ were zero, an improper density would result. The Dirichlet density is the prior distribution for the mixture probabilities.

You can affect the choice of the $a_i$ through the MIXPRIORPARMS option in the BAYES statement. If $k=2$, the Dirichlet distribution is the same as the Beta(a, b) distribution with $a = a_1$ and $b = a_2$.
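The $k=2$ special case can be checked numerically. The sketch below evaluates both densities with Python's standard library only; the helper names and the evaluation point are hypothetical choices for illustration.

```python
import math


def beta_pdf(y, a, b):
    """Beta(a, b) density at a point 0 < y < 1."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm + (a - 1) * math.log(y) + (b - 1) * math.log(1 - y))


def dirichlet_pdf(y, a):
    """Dirichlet density at a point y with positive entries that sum to 1."""
    if len(y) != len(a):
        raise ValueError("y and a must have the same length")
    if any(ai <= 0 for ai in a):
        raise ValueError("every a_i must be positive")
    if abs(sum(y) - 1.0) > 1e-9:
        raise ValueError("the entries of y must sum to 1")
    log_norm = math.lgamma(sum(a)) - sum(math.lgamma(ai) for ai in a)
    log_kernel = sum((ai - 1) * math.log(yi) for ai, yi in zip(a, y))
    return math.exp(log_norm + log_kernel)


# With k = 2, Dirichlet(a1, a2) at (y1, 1 - y1) matches Beta(a1, a2) at y1.
y1 = 0.3
dirichlet_value = dirichlet_pdf([y1, 1 - y1], [2.0, 5.0])
beta_value = beta_pdf(y1, 2.0, 5.0)
print(dirichlet_value, beta_value)
```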

- Gamma(a, b)

![\[ f(y) = \frac{b^ a}{\Gamma (a)} \, y^{a-1} \, \exp \{ -by\} \]](images/statug_hpfmm0300.png)

where $y > 0$, $a > 0$, and $b > 0$. In this parameterization, the mean and variance of the distribution are $\mu = a/b$ and $\mu/b$, respectively. The gamma distribution is the prior distribution for the mean parameter of the Poisson distribution when conjugate sampling is used.
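Note that b acts as a rate, not a scale, in this parameterization. The following sketch, with hypothetical function names and made-up counts, shows the moments and the textbook gamma-Poisson conjugate update under that convention; it is an illustration only and not the procedure's own sampler.

```python
def gamma_moments(a, b):
    """Mean and variance of Gamma(a, b) with shape a and rate b."""
    if a <= 0 or b <= 0:
        raise ValueError("a and b must be positive")
    mu = a / b
    return mu, mu / b


def gamma_poisson_update(a, b, counts):
    """Textbook conjugate update: Gamma(a, b) -> Gamma(a + sum(y), b + n)."""
    if any(c < 0 for c in counts):
        raise ValueError("Poisson counts must be nonnegative")
    return a + sum(counts), b + len(counts)


# Hypothetical example: Gamma(2, 1) prior and three observed counts.
prior_moments = gamma_moments(2.0, 1.0)
post_a, post_b = gamma_poisson_update(2.0, 1.0, [3, 5, 4])
post_moments = gamma_moments(post_a, post_b)
print(prior_moments, (post_a, post_b), post_moments)
```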

- Inverse gamma(a, b)

![\[ f(y) = \frac{b^ a}{\Gamma (a)}\, y^{-a-1} \, \exp \{ -b/y\} \]](images/statug_hpfmm0303.png)

where $y > 0$, $a > 0$, and $b > 0$. In this parameterization, the mean and variance of the distribution are $\mu = b/(a-1)$ if $a > 1$ and $\mu^2/(a-2)$ if $a > 2$, respectively. The inverse gamma distribution is the prior distribution for the mean parameter of the exponential distribution when conjugate sampling is used. It is also the prior distribution for the scale parameter $\phi$ in all models.
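Because the moments exist only for sufficiently large a, it can help to make those conditions explicit. The sketch below returns `None` for a moment that does not exist and applies the textbook conjugate update for an exponential likelihood parameterized by its mean. The function names and the data are hypothetical.

```python
def inverse_gamma_moments(a, b):
    """Return (mean, variance); an entry is None when that moment does not exist."""
    if a <= 0 or b <= 0:
        raise ValueError("a and b must be positive")
    mean = b / (a - 1) if a > 1 else None
    variance = mean ** 2 / (a - 2) if a > 2 else None
    return mean, variance


def inverse_gamma_exponential_update(a, b, observations):
    """Textbook update for an exponential mean: IG(a, b) -> IG(a + n, b + sum(y))."""
    if any(y < 0 for y in observations):
        raise ValueError("exponential observations must be nonnegative")
    return a + len(observations), b + sum(observations)


# Hypothetical example: IG(3, 4) prior and three observations.
prior_moments = inverse_gamma_moments(3.0, 4.0)
post_a, post_b = inverse_gamma_exponential_update(3.0, 4.0, [1.0, 2.0, 3.0])
post_moments = inverse_gamma_moments(post_a, post_b)
print(prior_moments, (post_a, post_b), post_moments)
print(inverse_gamma_moments(1.5, 1.0))  # mean exists, variance does not
```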

- Multinomial

![\[ f(\mb{y}) = \frac{1}{y_1!\cdots y_ k!} \pi _1^{y_1} \, \cdots \, \pi _ k^{y_ k} \]](images/statug_hpfmm0307.png)

where $\sum_{j=1}^k y_j = n$, $y_j \geq 0$, $\sum_{j=1}^k \pi_j = 1$, and n is the number of observations included in the analysis. The multinomial density is the prior distribution for the mixture proportions. The mean and variance of $y_j$ are $n\pi_j$ and $n\pi_j(1-\pi_j)$, respectively.

- Normal(a, b)

![\[ f(y) = \frac{1}{\sqrt {2\pi b}} \, \exp \left\{ -\frac12 \frac{(y-a)^2}{b}\right\} \]](images/statug_hpfmm0314.png)

where $b > 0$. The mean and variance of the distribution are $\mu = a$ and b, respectively. The normal distribution is the prior distribution for the mean parameter of the normal and t distributions when conjugate sampling is used.
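Note that b is a variance here, not a standard deviation. To illustrate, the following sketch applies the textbook conjugate update for a normal mean under the simplifying assumption that the data variance `sigma2` is known. That assumption, the function name, and the data are hypothetical and are not taken from the HPFMM documentation.

```python
import math


def normal_mean_update(a, b, data, sigma2):
    """Posterior mean and variance of a normal mean with a Normal(a, b) prior.

    b is the prior variance; sigma2 is the (assumed known) data variance.
    """
    if b <= 0 or sigma2 <= 0:
        raise ValueError("b and sigma2 must be positive")
    n = len(data)
    post_precision = 1.0 / b + n / sigma2  # precisions add
    post_var = 1.0 / post_precision
    post_mean = post_var * (a / b + sum(data) / sigma2)
    return post_mean, post_var


# Hypothetical example: Normal(0, 4) prior, unit data variance, three points.
post_mean, post_var = normal_mean_update(0.0, 4.0, [1.0, 2.0, 3.0], sigma2=1.0)
print(post_mean, post_var)
```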

When a MODEL statement contains effects, or when you specify the METROPOLIS option, the prior distribution for the regression parameters is multivariate normal, and you can specify the means and variances of the parameters in the BETAPRIORPARMS= option in the BAYES statement.