The POWER Procedure


The power approximation in this section is applicable to the Wilcoxon-Mann-Whitney (WMW) test as invoked with the WILCOXON option in the PROC NPAR1WAY statement of the NPAR1WAY procedure. The approximation is based on O’Brien and Castelloe (2006) and an estimator called $\mathrm{WMW}_\mathrm{odds}$. See O’Brien and Castelloe (2006) for a definition of $\mathrm{WMW}_\mathrm{odds}$, which need not be derived in detail here for purposes of explaining the power formula.

Let $Y_1$ and $Y_2$ be independent observations from any two distributions that you want to compare using the WMW test. For purposes of deriving the asymptotic distribution of $\mathrm{WMW}_\mathrm{odds}$ (and consequently the power computation as well), these distributions must be formulated as ordered categorical ("ordinal") distributions.

If a distribution is continuous, it can be discretized using a large number of categories with negligible loss of accuracy. Each nonordinal distribution is divided into b categories, where b is the value of the NBINS parameter, with breakpoints evenly spaced on the probability scale. That is, each bin contains an equal probability 1/b for that distribution. Then the breakpoints across both distributions are pooled to form a collection of C bins (hereafter called "categories"), and the probabilities of bin membership for each distribution are recalculated. The motivation for this method of binning is to avoid degenerate representations of the distributions (that is, small handfuls of large probabilities among mostly empty bins), as can be caused by something like an evenly spaced grid across raw values rather than probabilities.
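The binning scheme described above can be sketched in Python. This is only an illustration under stated assumptions, not the procedure's actual implementation: the function name `pooled_bins`, the choice of two normal distributions, and the small value `nbins=4` are all hypothetical and chosen to keep the example readable.

```python
from statistics import NormalDist

def pooled_bins(dist1, dist2, nbins):
    """Discretize two continuous distributions onto one common set of
    ordered categories (hypothetical helper, for illustration only)."""
    # Breakpoints evenly spaced on the probability scale: the k/nbins
    # quantiles of each distribution, so each bin holds probability 1/nbins.
    cuts = set()
    for dist in (dist1, dist2):
        for k in range(1, nbins):
            cuts.add(dist.inv_cdf(k / nbins))
    cuts = sorted(cuts)  # pool the breakpoints across both distributions

    def cell_probs(dist):
        # Recalculate bin membership probabilities on the pooled grid.
        cdf = [0.0] + [dist.cdf(x) for x in cuts] + [1.0]
        return [hi - lo for lo, hi in zip(cdf, cdf[1:])]

    return cuts, cell_probs(dist1), cell_probs(dist2)

# Two conjectured distributions that differ by a location shift.
cuts, p1, p2 = pooled_bins(NormalDist(0.0, 1.0), NormalDist(0.5, 1.0), nbins=4)
C = len(p1)  # number of pooled categories
```

Because the two sets of quantiles do not coincide here, pooling yields more categories than either distribution had on its own, and no category is empty for both distributions at once.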

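After the discretization process just mentioned, there are now two ordinal distributions, each with a set of probabilities across a common set of C ordered categories. For simplicity of notation, assume (without loss of generality) the response values to be $1, \ldots, C$. Represent the conditional probabilities as

$$\tilde{p}_{ij} = \mathrm{Prob}\left(Y_i = j \mid \mathrm{group} = i\right), \quad i \in \{1, 2\}, \quad j \in \{1, \ldots, C\}$$

and the group allocation weights as

$$w_i = \frac{n_i}{N} = \mathrm{Prob}\left(\mathrm{group} = i\right), \quad i \in \{1, 2\}$$

The joint probabilities can then be calculated simply as

$$p_{ij} = w_i \tilde{p}_{ij} = \mathrm{Prob}\left(\mathrm{group} = i, Y_i = j\right)$$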
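As a small numerical illustration of this step, the following Python sketch builds the joint probabilities from conditional probabilities and allocation weights. The group sizes and probabilities are made-up values, and the sketch assumes that each weight is the group's share of the total sample size.

```python
# Hypothetical group sizes and conditional probabilities over C = 3 categories.
n1, n2 = 40, 60
N = n1 + n2
w = [n1 / N, n2 / N]                 # assumed: weight = group share of N
p_tilde = [[0.5, 0.3, 0.2],          # group 1 conditional probabilities
           [0.2, 0.3, 0.5]]          # group 2 conditional probabilities

# Joint probability of (group i, category j) = weight * conditional probability.
p_joint = [[w[i] * p for p in p_tilde[i]] for i in range(2)]

total = sum(sum(row) for row in p_joint)
```

Each row of `p_joint` sums to the corresponding weight, and the whole table sums to 1.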

The next step in the power computation is to compute the probabilities that a randomly chosen pair of observations from the two groups is concordant, discordant, or tied. It is useful to define these probabilities as functions of the terms $Rs_{ij}$ and $Rd_{ij}$, defined as follows, where Y is a random observation drawn from the joint distribution across groups and categories:

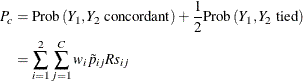

![\begin{align*} Rs_{ij} & = \mr{Prob}\left(Y \mbox{ is concordant with cell} (i,j)\right) + \frac{1}{2} \mr{Prob}\left(Y \mbox{ is tied with cell} (i,j)\right) \\ & = \mr{Prob}\left((\mr{group} < i \mbox{ and } Y < j) \mbox{ or } (\mr{group} > i \mbox{ and } Y > j)\right) + \\ & \quad \frac{1}{2} \mr{Prob}\left(\mr{group} \ne i \mbox{ and } Y = j\right) \\ & = \sum _{g=1}^2 \sum _{c=1}^ C w_ g \tilde{p}_{gc} \left[\mr{I}_{(g-i)(c-j) > 0} + \frac{1}{2} \mr{I}_{g \ne i, c = j} \right] \end{align*}](images/statug_power0521.png)

and

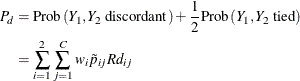

![\begin{align*} Rd_{ij} & = \mr{Prob}\left(Y \mbox{ is discordant with cell} (i,j)\right) + \frac{1}{2} \mr{Prob}\left(Y \mbox{ is tied with cell} (i,j)\right) \\ & = \mr{Prob}\left((\mr{group} < i \mbox{ and } Y > j) \mbox{ or } (\mr{group} > i \mbox{ and } Y < j)\right) + \\ & \quad \frac{1}{2} \mr{Prob}\left(\mr{group} \ne i \mbox{ and } Y = j\right) \\ & = \sum _{g=1}^2 \sum _{c=1}^ C w_ g \tilde{p}_{gc} \left[\mr{I}_{(g-i)(c-j) < 0} + \frac{1}{2} \mr{I}_{g \ne i, c = j} \right] \end{align*}](images/statug_power0522.png)

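For an independent random draw $Y_1, Y_2$ from the two distributions,

$$P_c = \mathrm{Prob}\left(Y_1, Y_2 \mbox{ concordant}\right) + \frac{1}{2} \mathrm{Prob}\left(Y_1, Y_2 \mbox{ tied}\right) = \sum_{i=1}^2 \sum_{j=1}^C w_i \tilde{p}_{ij} Rs_{ij}$$

and

$$P_d = \mathrm{Prob}\left(Y_1, Y_2 \mbox{ discordant}\right) + \frac{1}{2} \mathrm{Prob}\left(Y_1, Y_2 \mbox{ tied}\right) = \sum_{i=1}^2 \sum_{j=1}^C w_i \tilde{p}_{ij} Rd_{ij}$$

Then

$$\mathrm{WMW}_\mathrm{odds} = \frac{P_c}{P_d}$$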
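The concordance terms and the resulting odds can be sketched in Python as follows. This is a minimal illustration with made-up probabilities. It assumes that $P_c$ and $P_d$ are the probability-weighted sums of $Rs_{ij}$ and $Rd_{ij}$ over all cells and that $\mathrm{WMW}_\mathrm{odds}$ is their ratio; the function names are hypothetical. Indices are zero-based in the code, which leaves the sign of $(g-i)(c-j)$ unchanged.

```python
def concordance_terms(w, p_tilde):
    """Rs and Rd as 2 x C nested lists, from the indicator-sum definitions."""
    C = len(p_tilde[0])
    Rs = [[0.0] * C for _ in range(2)]
    Rd = [[0.0] * C for _ in range(2)]
    for i in range(2):
        for j in range(C):
            for g in range(2):
                for c in range(C):
                    mass = w[g] * p_tilde[g][c]       # joint probability of cell (g, c)
                    sign = (g - i) * (c - j)
                    if sign > 0:                      # concordant with cell (i, j)
                        Rs[i][j] += mass
                    elif sign < 0:                    # discordant with cell (i, j)
                        Rd[i][j] += mass
                    if g != i and c == j:             # tied: half weight to each
                        Rs[i][j] += 0.5 * mass
                        Rd[i][j] += 0.5 * mass
    return Rs, Rd

def wmw_odds(w, p_tilde):
    """Return (P_c, P_d, P_c / P_d) under the assumptions stated above."""
    Rs, Rd = concordance_terms(w, p_tilde)
    C = len(p_tilde[0])
    cells = [(i, j) for i in range(2) for j in range(C)]
    P_c = sum(w[i] * p_tilde[i][j] * Rs[i][j] for i, j in cells)
    P_d = sum(w[i] * p_tilde[i][j] * Rd[i][j] for i, j in cells)
    return P_c, P_d, P_c / P_d

# Hypothetical balanced design with three ordered categories.
w = [0.5, 0.5]
p_tilde = [[0.5, 0.3, 0.2],
           [0.2, 0.3, 0.5]]
P_c, P_d, odds = wmw_odds(w, p_tilde)
```

In this example group 2 tends toward higher categories, so the odds exceed 1.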

Proceeding to compute the theoretical standard error associated with $\mathrm{WMW}_\mathrm{odds}$ (that is, the population analogue to the sample standard error),

![\begin{align*} \mr{SE}(\mr{WMW}_\mr {odds}) = \frac{2}{P_ d} \left[ \sum _{i=1}^2 \sum _{j=1}^ C w_ i \tilde{p}_{ij} \left(\mr{WMW}_\mr {odds} Rd_{ij} - Rs_{ij} \right)^2 /N \right]^{\frac{1}{2}} \end{align*}](images/statug_power0528.png)

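Converting to the natural log scale and using the delta method,

$$\mathrm{SE}(\log(\mathrm{WMW}_\mathrm{odds})) = \frac{\mathrm{SE}(\mathrm{WMW}_\mathrm{odds})}{\mathrm{WMW}_\mathrm{odds}}$$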

The next step is to produce a "smoothed" version of the $2 \times C$ cell probabilities that conforms to the null hypothesis of the Wilcoxon-Mann-Whitney test (in other words, independence in the $2 \times C$ contingency table of probabilities). Let $\mathrm{SE}_{H_0}(\log(\mathrm{WMW}_\mathrm{odds}))$ denote the theoretical standard error of $\log(\mathrm{WMW}_\mathrm{odds})$ assuming $H_0$.
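The two standard errors can be sketched in Python as follows. The text does not spell out the smoothing step, so this sketch assumes that the null table keeps the allocation weights and gives both groups the pooled category probabilities, which is one way to impose independence. The function names and input values are hypothetical.

```python
from math import sqrt

def rs_rd(w, pt):
    """Rs and Rd per cell; only the opposite group contributes to each term."""
    C = len(pt[0])
    Rs = [[0.0] * C for _ in range(2)]
    Rd = [[0.0] * C for _ in range(2)]
    for i in range(2):
        g = 1 - i
        for j in range(C):
            below, above, tie = sum(pt[g][:j]), sum(pt[g][j + 1:]), 0.5 * pt[g][j]
            conc, disc = (above, below) if g > i else (below, above)
            Rs[i][j] = w[g] * (conc + tie)
            Rd[i][j] = w[g] * (disc + tie)
    return Rs, Rd

def se_log_odds(w, pt, N):
    """Return (WMW odds, SE of log odds) for total sample size N."""
    Rs, Rd = rs_rd(w, pt)
    cells = [(i, j) for i in range(2) for j in range(len(pt[0]))]
    P_c = sum(w[i] * pt[i][j] * Rs[i][j] for i, j in cells)
    P_d = sum(w[i] * pt[i][j] * Rd[i][j] for i, j in cells)
    odds = P_c / P_d
    spread = sum(w[i] * pt[i][j] * (odds * Rd[i][j] - Rs[i][j]) ** 2 for i, j in cells)
    se_odds = (2.0 / P_d) * sqrt(spread / N)
    return odds, se_odds / odds          # delta method: SE(log x) = SE(x) / x

def null_smoothed(w, pt):
    """Assumed smoothing: both groups share the pooled category probabilities."""
    pooled = [w[0] * a + w[1] * b for a, b in zip(pt[0], pt[1])]
    return [pooled[:], pooled[:]]

w = [0.5, 0.5]
pt = [[0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
odds, se = se_log_odds(w, pt, N=100)
odds0, se0 = se_log_odds(w, null_smoothed(w, pt), N=100)
_, se400 = se_log_odds(w, pt, N=400)
```

Under the assumed null table the odds equal 1, and both standard errors shrink in proportion to the square root of N.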

Finally, compute the power using the noncentral chi-square and normal distributions:

![\begin{align*} \mr{power} = & \left\{ \begin{array}{l} P\left(Z \ge \frac{\mr{SE}_{H_0}(\log (\mr{WMW}_\mr {odds}))}{\mr{SE}(\log (\mr{WMW}_\mr {odds}))} z_{1-\alpha } - \delta ^\star N^\frac {1}{2} \right), \quad \mbox{upper one-sided} \\ P\left(Z \le \frac{\mr{SE}_{H_0}(\log (\mr{WMW}_\mr {odds}))}{\mr{SE}(\log (\mr{WMW}_\mr {odds}))} z_{\alpha } - \delta ^\star N^\frac {1}{2} \right), \quad \mbox{lower one-sided} \\ P\left(\chi ^2(1, (\delta ^\star )^2 N) \ge \left[ \frac{\mr{SE}_{H_0}(\log (\mr{WMW}_\mr {odds}))}{\mr{SE}(\log (\mr{WMW}_\mr {odds}))} \right]^2 \chi ^2_{1-\alpha }(1)\right), \quad \mbox{two-sided} \\ \end{array} \right. \\ \end{align*}](images/statug_power0533.png)

where

$$\delta^\star = \frac{\log(\mathrm{WMW}_\mathrm{odds})}{N^{\frac{1}{2}} \, \mathrm{SE}(\log(\mathrm{WMW}_\mathrm{odds}))}$$

is the primary noncentrality, that is, the "effect size" that quantifies how much the two conjectured distributions differ. Z is a standard normal random variable, $\chi^2(\mathrm{df}, \mathrm{nc})$ is a noncentral $\chi^2$ random variable with degrees of freedom df and noncentrality nc, and N is the total sample size.
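The three power expressions can be evaluated with only the standard normal distribution, as in the Python sketch below. It takes the log odds and the two standard errors as given inputs and assumes that $\delta^\star N^{1/2}$ equals the log odds divided by its standard error. The two-sided case uses the fact that a noncentral chi-square variable with 1 degree of freedom is the square of a shifted standard normal. The function name and the side labels are illustrative.

```python
from statistics import NormalDist

def wmw_power(log_odds, se_log, se_log_h0, alpha=0.05, sides="2"):
    """Approximate power from log(WMW odds) and its two standard errors.

    sides: "U" (upper one-sided), "L" (lower one-sided), or "2" (two-sided).
    """
    Z = NormalDist()                      # standard normal
    ratio = se_log_h0 / se_log            # SE under H0 over SE under the alternative
    shift = log_odds / se_log             # assumed equal to delta_star * sqrt(N)
    if sides == "U":
        return 1.0 - Z.cdf(ratio * Z.inv_cdf(1.0 - alpha) - shift)
    if sides == "L":
        return Z.cdf(ratio * Z.inv_cdf(alpha) - shift)
    # Two-sided: chi2(1, nc) has the law of (Z + sqrt(nc))**2, and the central
    # critical value chi2_{1-alpha}(1) equals z_{1-alpha/2} squared.
    crit = ratio * Z.inv_cdf(1.0 - alpha / 2.0)
    root_nc = abs(shift)
    return (1.0 - Z.cdf(crit - root_nc)) + Z.cdf(-crit - root_nc)

# Hypothetical inputs: log odds of 0.8 with equal standard errors of 0.3.
power_two_sided = wmw_power(0.8, 0.3, 0.3, alpha=0.05, sides="2")
power_upper = wmw_power(0.8, 0.3, 0.3, alpha=0.05, sides="U")
```

When the log odds is zero and the two standard errors agree, each branch returns the significance level, which is a quick sanity check on the formulas.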