The POWER Procedure


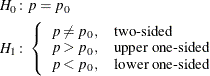

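Let X be distributed as $\mathrm{Bin}(N, p)$. The hypotheses for the test of the proportion p are as follows:

$$
\begin{aligned}
H_0 &: p = p_0 \\
H_1 &: \begin{cases} p \neq p_0, & \text{two-sided} \\ p > p_0, & \text{upper one-sided} \\ p < p_0, & \text{lower one-sided} \end{cases}
\end{aligned}
$$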

The exact test assumes binomially distributed data and requires $N \ge 1$ and $0 < p_0 < 1$. The test statistic is

$$X = \text{number of successes} \sim \mathrm{Bin}(N, p)$$

The significance probability $\alpha$ is split symmetrically for two-sided tests, in the sense that each tail is filled with as much as possible up to $\alpha/2$.

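Exact power computations are based on the binomial distribution and computing formulas such as the following from Johnson, Kotz, and Kemp (1992, equation 3.20):

$$P(X \ge C \mid N, p) = P\left(F_{\nu_1, \nu_2} \le \frac{\nu_2 \, p}{\nu_1 (1 - p)}\right), \quad \text{where } \nu_1 = 2C \text{ and } \nu_2 = 2(N - C + 1)$$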

Let $C_L$ and $C_U$ denote lower and upper critical values, respectively. Let $\alpha_a$ denote the achieved (actual) significance level, which for two-sided tests is the sum of the favorable major tail ($\alpha_M$) and the opposite minor tail ($\alpha_m$).
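The following Python sketch illustrates these quantities for the exact test. It is not the POWER procedure's implementation. It assumes the usual rules that $C_U$ is the smallest C with $P(X \ge C \mid p_0)$ at most the allotted tail probability and $C_L$ is the largest C with $P(X \le C \mid p_0)$ at most that probability (α for a one-sided test, α/2 per tail for a two-sided test), and it sums binomial probabilities directly rather than using the F-distribution identity. The function names are illustrative.

```python
from math import comb

# Illustrative helpers (hypothetical names, not a SAS interface).


def binom_pmf(x: int, n: int, p: float) -> float:
    """P(X = x) for X ~ Bin(n, p)."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def upper_tail(c: int, n: int, p: float) -> float:
    """P(X >= c); an empty sum (0) when c > n."""
    return sum(binom_pmf(x, n, p) for x in range(max(c, 0), n + 1))


def lower_tail(c: int, n: int, p: float) -> float:
    """P(X <= c); an empty sum (0) when c < 0."""
    return sum(binom_pmf(x, n, p) for x in range(0, min(c, n) + 1))


def exact_binomial_test(n: int, p0: float, p: float, alpha: float, sides: str):
    """Return (C_L, C_U, achieved alpha, power); sides is "upper", "lower", or "two".

    None marks a critical value that the chosen test does not use.
    """
    tail = alpha / 2 if sides == "two" else alpha
    c_l = c_u = None
    if sides in ("upper", "two"):
        # C = n + 1 has an empty upper tail, so the candidate set is never empty
        c_u = min(c for c in range(n + 2) if upper_tail(c, n, p0) <= tail)
    if sides in ("lower", "two"):
        # C = -1 has an empty lower tail, so the candidate set is never empty
        c_l = max(c for c in range(-1, n + 1) if lower_tail(c, n, p0) <= tail)

    def reject_prob(q: float) -> float:
        # P(reject H0 | p = q); the two tails are disjoint, so they simply add
        prob = 0.0
        if c_u is not None:
            prob += upper_tail(c_u, n, q)
        if c_l is not None:
            prob += lower_tail(c_l, n, q)
        return prob

    # achieved significance level = rejection probability at p0; power = at p
    return c_l, c_u, reject_prob(p0), reject_prob(p)
```

For example, `exact_binomial_test(20, 0.5, 0.8, 0.05, "upper")` gives $C_U = 15$ and an achieved significance level of $21700/2^{20} \approx 0.0207$, well below the nominal 0.05, which shows how the discreteness of X makes the exact test conservative.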

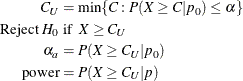

For the upper one-sided case,

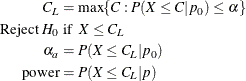

For the lower one-sided case,

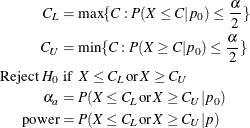

For the two-sided case,

For the normal approximation test, the test statistic is

$$Z(X) = \frac{X - N p_0}{\left[N p_0 (1 - p_0)\right]^{\frac{1}{2}}}$$

For the METHOD=EXACT option, the computations are the same as described in the section Exact Test of a Binomial Proportion (TEST=EXACT) except for the definitions of the critical values.

For the upper one-sided case,

For the lower one-sided case,

For the two-sided case,

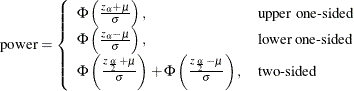
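A minimal sketch of the METHOD=EXACT computation for this z test follows. It assumes critical values of the form $C_U = \min\{C : Z(C) \ge z_{1-\alpha}\}$ and $C_L = \max\{C : Z(C) \le z_{\alpha}\}$ (with α/2 for two-sided tests) and then evaluates rejection probabilities with the binomial distribution; the function names are hypothetical.

```python
from math import comb, sqrt
from statistics import NormalDist

# Hypothetical helper names; the critical-value rule is an assumption.


def binom_pmf(x: int, n: int, p: float) -> float:
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def z_null(x: int, n: int, p0: float) -> float:
    """Z(X) = (X - N p0) / [N p0 (1 - p0)]^(1/2)."""
    return (x - n * p0) / sqrt(n * p0 * (1 - p0))


def z_test_exact_method(n: int, p0: float, p: float, alpha: float, sides: str):
    """Return (C_L, C_U, achieved alpha, power) using binomial probabilities.

    Assumed critical values: C_U = min{C : Z(C) >= z_(1-a)} and
    C_L = max{C : Z(C) <= z_a}, where a = alpha/2 for sides == "two", else alpha.
    """
    a = alpha / 2 if sides == "two" else alpha
    z_hi, z_lo = NormalDist().inv_cdf(1 - a), NormalDist().inv_cdf(a)
    upper, lower = [], []
    if sides in ("upper", "two"):
        upper = [x for x in range(n + 1) if z_null(x, n, p0) >= z_hi]
    if sides in ("lower", "two"):
        lower = [x for x in range(n + 1) if z_null(x, n, p0) <= z_lo]
    # Z(X) increases with X, so each list is a contiguous tail of {0, ..., n}
    c_u = min(upper) if upper else None
    c_l = max(lower) if lower else None
    region = upper + lower
    achieved = sum(binom_pmf(x, n, p0) for x in region)
    power = sum(binom_pmf(x, n, p) for x in region)
    return c_l, c_u, achieved, power
```

With N = 20, $p_0 = 0.5$, and an upper one-sided α = 0.05, this sketch gives $C_U = 14$ and an achieved significance level of $60460/2^{20} \approx 0.0577$, so unlike the exact test, a z test with exact evaluation can exceed the nominal level.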

For the METHOD=NORMAL option, the test statistic $Z(X)$ is assumed to have the normal distribution

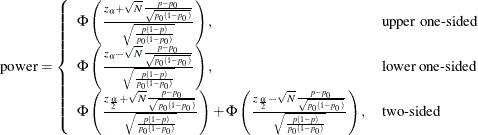

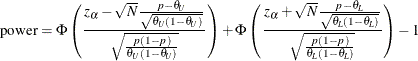

The approximate power is computed as

The approximate sample size is computed in closed form for the one-sided cases by inverting the power equation,

and by numerical inversion for the two-sided case.
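The following sketch shows one way to carry out these approximate computations. It assumes that $Z(X)$ is normal with mean $N^{1/2}(p - p_0)/[p_0(1-p_0)]^{1/2}$ and variance $p(1-p)/[p_0(1-p_0)]$ (the moments of $Z(X)$ under $X \sim \mathrm{Bin}(N, p)$), and it replaces the unspecified numerical inversion for the two-sided case with a simple linear scan over N. All names are illustrative.

```python
from math import ceil, sqrt
from statistics import NormalDist

STD = NormalDist()  # standard normal: cdf, pdf, inv_cdf


def power_null_var(n: float, p0: float, p: float, alpha: float, sides: str) -> float:
    """Normal-approximate power, assuming Z(X) ~ N(N^(1/2)(p - p0)/s0, s1^2/s0^2)."""
    s0, s1 = sqrt(p0 * (1 - p0)), sqrt(p * (1 - p))
    z = STD.inv_cdf(1 - (alpha / 2 if sides == "two" else alpha))
    upper = STD.cdf((sqrt(n) * (p - p0) - z * s0) / s1)  # P(Z >= z_(1-a))
    lower = STD.cdf((sqrt(n) * (p0 - p) - z * s0) / s1)  # P(Z <= z_a)
    return {"upper": upper, "lower": lower, "two": upper + lower}[sides]


def n_one_sided(p0: float, p: float, alpha: float, power: float) -> int:
    """Closed-form inversion of the one-sided power equation, rounded up.

    Assumes p lies on the alternative side (p > p0 for "upper", p < p0 for "lower").
    """
    s0, s1 = sqrt(p0 * (1 - p0)), sqrt(p * (1 - p))
    root_n = (STD.inv_cdf(1 - alpha) * s0 + STD.inv_cdf(power) * s1) / abs(p - p0)
    return ceil(root_n**2)


def n_two_sided(p0: float, p: float, alpha: float, power: float, n_max: int = 10**6) -> int:
    """Numerical inversion by scanning N = 1, 2, ... until the target power is reached."""
    for n in range(1, n_max + 1):
        if power_null_var(n, p0, p, alpha, "two") >= power:
            return n
    raise ValueError("target power not reached by n_max")
```

For $p_0 = 0.5$, $p = 0.65$, α = 0.05, and a target power of 0.8, `n_one_sided` returns 67, the smallest N whose upper one-sided approximate power reaches 0.8.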

For the normal approximation test using the sample variance, the test statistic is

$$Z_s(X) = \frac{X - N p_0}{\left[N \hat{p} (1 - \hat{p})\right]^{\frac{1}{2}}}$$

where $\hat{p} = X/N$.

For the METHOD=EXACT option, the computations are the same as described in the section Exact Test of a Binomial Proportion (TEST=EXACT) except for the definitions of the critical values.

For the upper one-sided case,

For the lower one-sided case,

For the two-sided case,

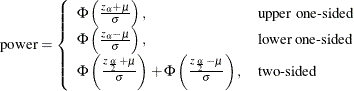

For the METHOD=NORMAL option, the test statistic $Z_s(X)$ is assumed to have the normal distribution

$$Z_s(X) \sim N\left(\frac{N^{\frac{1}{2}}(p - p_0)}{\left[p (1 - p)\right]^{\frac{1}{2}}},\ 1\right)$$

(see Chow, Shao, and Wang (2003, p. 82)).

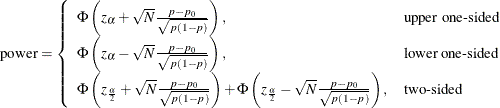

The approximate power is computed as

The approximate sample size is computed in closed form for the one-sided cases by inverting the power equation,

and by numerical inversion for the two-sided case.
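A short sketch of the sample-variance version follows, assuming the unit-variance normal distribution for $Z_s(X)$ with mean $N^{1/2}(p - p_0)/[p(1-p)]^{1/2}$. Because the variance is 1, the one-sided inversion reduces to $N = p(1-p)(z_{1-\alpha} + z_{\text{power}})^2/(p - p_0)^2$, rounded up. The function names are illustrative.

```python
from math import ceil, sqrt
from statistics import NormalDist

STD = NormalDist()


def power_sample_var(n, p0, p, alpha, sides):
    """Normal-approximate power, assuming Z_s(X) ~ N(N^(1/2)(p - p0)/[p(1-p)]^(1/2), 1)."""
    delta = sqrt(n) * (p - p0) / sqrt(p * (1 - p))
    z = STD.inv_cdf(1 - (alpha / 2 if sides == "two" else alpha))
    upper = STD.cdf(delta - z)   # P(Z_s >= z_(1-a))
    lower = STD.cdf(-delta - z)  # P(Z_s <= z_a)
    return {"upper": upper, "lower": lower, "two": upper + lower}[sides]


def n_one_sided(p0, p, alpha, power):
    """Closed-form one-sided inversion: N = p(1-p)(z_(1-alpha) + z_power)^2 / (p - p0)^2."""
    z_sum = STD.inv_cdf(1 - alpha) + STD.inv_cdf(power)
    return ceil(p * (1 - p) * z_sum**2 / (p - p0) ** 2)
```

Compared with the null-variance sketch, the only structural change is that the standard deviation of the statistic is 1, so the null standard deviation no longer appears in the power expression.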

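For the normal approximation test with continuity adjustment, the test statistic is (Pagano and Gauvreau, 1993, p. 295):

$$Z_c(X) = \begin{cases} \dfrac{X + 0.5 - N p_0}{\left[N p_0 (1 - p_0)\right]^{\frac{1}{2}}}, & X < N p_0 \\ 0, & X = N p_0 \\ \dfrac{X - 0.5 - N p_0}{\left[N p_0 (1 - p_0)\right]^{\frac{1}{2}}}, & X > N p_0 \end{cases}$$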

For the METHOD=EXACT option, the computations are the same as described in the section Exact Test of a Binomial Proportion (TEST=EXACT) except for the definitions of the critical values.

For the upper one-sided case,

For the lower one-sided case,

For the two-sided case,

For the METHOD=NORMAL option, the test statistic $Z_c(X)$ is assumed to have the normal distribution $N(\mu, \sigma^2)$, where $\mu$ and $\sigma^2$ are derived as follows.

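For convenience of notation, define

$$k = \frac{1}{2\left[N p_0 (1 - p_0)\right]^{\frac{1}{2}}}$$

Then

$$E\left[Z_c(X)\right] = 2 k N (p - p_0) + k \left[ P(X < N p_0) - P(X > N p_0) \right]$$

and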

![\begin{align*} \mr {Var} \left[Z_ c(X)\right] & = 4 k^2 N p (1-p) + k^2 \left[ 1 - P(X = N p_0) \right] - k^2 \left[ P(X<Np_0) - P(X>Np_0) \right]^2 \\ & \quad + 4 k^2 \left[ E\left(X 1_{\{ X<Np_0\} }\right) - E\left(X 1_{\{ X>Np_0\} }\right) \right] - 4 k^2 N p \left[P(X<Np_0) - P(X>Np_0)\right] \\ \end{align*}](images/statug_power0191.png)

The probabilities $P(X = N p_0)$, $P(X < N p_0)$, and $P(X > N p_0)$ and the truncated expectations $E\left(X 1_{\{X < N p_0\}}\right)$ and $E\left(X 1_{\{X > N p_0\}}\right)$ are approximated by assuming the normal-approximate distribution of X, $N\left(N p,\ N p (1 - p)\right)$. Letting $\phi(\cdot)$ and $\Phi(\cdot)$ denote the standard normal PDF and CDF, respectively, and defining d as

$$d = \frac{N p_0 - N p}{\left[N p (1 - p)\right]^{\frac{1}{2}}}$$

the terms are computed as follows:

![\begin{align*} P(X=Np_0) & = 0 \\ P(X<Np_0) & = \Phi (d) \\ P(X>Np_0) & = 1 - \Phi (d) \\ E\left(X 1_{\{ X<Np_0\} }\right) & = Np\Phi (d) - \left[ N p (1-p) \right]^\frac {1}{2} \phi (d) \\ E\left(X 1_{\{ X>Np_0\} }\right) & = Np\left[ 1 - \Phi (d) \right] + \left[ N p (1-p) \right]^\frac {1}{2} \phi (d) \\ \end{align*}](images/statug_power0199.png)

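The mean and variance of $Z_c(X)$ are thus approximated by

$$\mu = 2 k N (p - p_0) + k \left[ 2\Phi(d) - 1 \right]$$

and

$$\sigma^2 = 4 k^2 \left[ N p (1 - p) + \Phi(d)\left(1 - \Phi(d)\right) - 2 \left(N p (1 - p)\right)^{\frac{1}{2}} \phi(d) \right]$$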

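The approximate power is computed as

$$\text{power} = \begin{cases} \Phi\left(\dfrac{\mu - z_{1-\alpha}}{\sigma}\right), & \text{upper one-sided} \\ \Phi\left(\dfrac{z_{\alpha} - \mu}{\sigma}\right), & \text{lower one-sided} \\ \Phi\left(\dfrac{\mu - z_{1-\alpha/2}}{\sigma}\right) + \Phi\left(\dfrac{z_{\alpha/2} - \mu}{\sigma}\right), & \text{two-sided} \end{cases}$$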

The approximate sample size is computed by numerical inversion.
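To see how good the normal-approximate moments are, the sketch below computes them from $k$ and $d$ and compares them with the exact mean and variance of the continuity-adjusted statistic, obtained by enumerating the binomial distribution. It also evaluates the approximate power, assuming rejection when the statistic is at least $z_{1-\alpha}$ (upper) or at most $z_{\alpha}$ (lower). Function names are illustrative.

```python
from math import comb, sqrt
from statistics import NormalDist

STD = NormalDist()


def binom_pmf(x, n, p):
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def z_c(x, n, p0):
    """Continuity-adjusted z statistic with the null variance."""
    se = sqrt(n * p0 * (1 - p0))
    if x < n * p0:
        return (x + 0.5 - n * p0) / se
    if x > n * p0:
        return (x - 0.5 - n * p0) / se
    return 0.0


def approx_moments(n, p0, p):
    """Normal-approximate mean and variance of Z_c(X) for X ~ Bin(n, p)."""
    k = 1 / (2 * sqrt(n * p0 * (1 - p0)))
    s = sqrt(n * p * (1 - p))
    d = (n * p0 - n * p) / s
    Phi, phi = STD.cdf(d), STD.pdf(d)
    mu = 2 * k * n * (p - p0) + k * (2 * Phi - 1)
    var = 4 * k**2 * (s**2 + Phi * (1 - Phi) - 2 * s * phi)
    return mu, var


def exact_moments(n, p0, p):
    """Mean and variance of Z_c(X) by enumerating x = 0, ..., n."""
    w = [binom_pmf(x, n, p) for x in range(n + 1)]
    z = [z_c(x, n, p0) for x in range(n + 1)]
    mean = sum(wi * zi for wi, zi in zip(w, z))
    var = sum(wi * (zi - mean) ** 2 for wi, zi in zip(w, z))
    return mean, var


def approx_power(n, p0, p, alpha, sides):
    """Power under Z_c(X) ~ N(mu, sigma^2)."""
    mu, var = approx_moments(n, p0, p)
    sigma = sqrt(var)
    z = STD.inv_cdf(1 - (alpha / 2 if sides == "two" else alpha))
    upper = STD.cdf((mu - z) / sigma)   # P(Z_c >= z_(1-a))
    lower = STD.cdf((-z - mu) / sigma)  # P(Z_c <= z_a)
    return {"upper": upper, "lower": lower, "two": upper + lower}[sides]
```

For moderate N, such as N = 100 with $p_0 = 0.5$ and p = 0.6, the approximate and enumerated moments agree closely, which is what justifies plugging them into the normal power formula.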

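For the normal approximation test with continuity adjustment using the sample variance, the test statistic is

$$Z_{cs}(X) = \begin{cases} \dfrac{X + 0.5 - N p_0}{\left[N \hat{p} (1 - \hat{p})\right]^{\frac{1}{2}}}, & X < N p_0 \\ 0, & X = N p_0 \\ \dfrac{X - 0.5 - N p_0}{\left[N \hat{p} (1 - \hat{p})\right]^{\frac{1}{2}}}, & X > N p_0 \end{cases}$$

where $\hat{p} = X/N$.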

For the METHOD=EXACT option, the computations are the same as described in the section Exact Test of a Binomial Proportion (TEST=EXACT) except for the definitions of the critical values.

For the upper one-sided case,

For the lower one-sided case,

For the two-sided case,

For the METHOD=NORMAL option, the test statistic $Z_{cs}(X)$ is assumed to have the normal distribution $N(\mu, \sigma^2)$, where $\mu$ and $\sigma^2$ are derived as follows.

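For convenience of notation, define

$$k = \frac{1}{2\left[N p (1 - p)\right]^{\frac{1}{2}}}$$

Then

$$E\left[Z_{cs}(X)\right] \approx 2 k N (p - p_0) + k \left[ P(X < N p_0) - P(X > N p_0) \right]$$

and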

![\begin{align*} \mr {Var} \left[Z_{cs}(X)\right] & \approx 4 k^2 N p (1-p) + k^2 \left[ 1 - P(X = N p_0) \right] - k^2 \left[ P(X<Np_0) - P(X>Np_0) \right]^2 \\ & \quad + 4 k^2 \left[ E\left(X 1_{\{ X<Np_0\} }\right) - E\left(X 1_{\{ X>Np_0\} }\right) \right] - 4 k^2 N p \left[P(X<Np_0) - P(X>Np_0)\right] \\ \end{align*}](images/statug_power0210.png)

The probabilities $P(X = N p_0)$, $P(X < N p_0)$, and $P(X > N p_0)$ and the truncated expectations $E\left(X 1_{\{X < N p_0\}}\right)$ and $E\left(X 1_{\{X > N p_0\}}\right)$ are approximated by assuming the normal-approximate distribution of X, $N\left(N p,\ N p (1 - p)\right)$. Letting $\phi(\cdot)$ and $\Phi(\cdot)$ denote the standard normal PDF and CDF, respectively, and defining d as

$$d = \frac{N p_0 - N p}{\left[N p (1 - p)\right]^{\frac{1}{2}}}$$

the terms are computed as follows:

![\begin{align*} P(X=Np_0) & = 0 \\ P(X<Np_0) & = \Phi (d) \\ P(X>Np_0) & = 1 - \Phi (d) \\ E\left(X 1_{\{ X<Np_0\} }\right) & = Np\Phi (d) - \left[ N p (1-p) \right]^\frac {1}{2} \phi (d) \\ E\left(X 1_{\{ X>Np_0\} }\right) & = Np\left[ 1 - \Phi (d) \right] + \left[ N p (1-p) \right]^\frac {1}{2} \phi (d) \\ \end{align*}](images/statug_power0199.png)

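The mean and variance of $Z_{cs}(X)$ are thus approximated by

$$\mu = 2 k N (p - p_0) + k \left[ 2\Phi(d) - 1 \right]$$

and

$$\sigma^2 = 4 k^2 \left[ N p (1 - p) + \Phi(d)\left(1 - \Phi(d)\right) - 2 \left(N p (1 - p)\right)^{\frac{1}{2}} \phi(d) \right]$$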

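The approximate power is computed as

$$\text{power} = \begin{cases} \Phi\left(\dfrac{\mu - z_{1-\alpha}}{\sigma}\right), & \text{upper one-sided} \\ \Phi\left(\dfrac{z_{\alpha} - \mu}{\sigma}\right), & \text{lower one-sided} \\ \Phi\left(\dfrac{\mu - z_{1-\alpha/2}}{\sigma}\right) + \Phi\left(\dfrac{z_{\alpha/2} - \mu}{\sigma}\right), & \text{two-sided} \end{cases}$$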

The approximate sample size is computed by numerical inversion.
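The text does not say which search is used for the numerical inversion. The sketch below assumes the simplest option, a linear scan over integer N that returns the first N whose approximate power reaches the target (the actual search may differ). The power function is the upper one-sided case for the continuity-adjusted sample-variance statistic, with $k = 1/(2[Np(1-p)]^{1/2})$; all names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

STD = NormalDist()


def approx_power_cs(n, p0, p, alpha):
    """Upper one-sided power, assuming Z_cs(X) ~ N(mu, sigma^2) with k = 1/(2 [N p (1-p)]^(1/2))."""
    s = sqrt(n * p * (1 - p))
    k = 1 / (2 * s)
    d = (n * p0 - n * p) / s
    Phi, phi = STD.cdf(d), STD.pdf(d)
    mu = 2 * k * n * (p - p0) + k * (2 * Phi - 1)
    sigma = sqrt(4 * k**2 * (s**2 + Phi * (1 - Phi) - 2 * s * phi))
    return STD.cdf((mu - STD.inv_cdf(1 - alpha)) / sigma)


def invert_for_n(power_fn, target, n_max=100_000):
    """Smallest integer N with power_fn(N) >= target, found by a linear scan."""
    for n in range(1, n_max + 1):
        if power_fn(n) >= target:
            return n
    raise ValueError("target power not reached within n_max")
```

Usage: `invert_for_n(lambda m: approx_power_cs(m, 0.5, 0.65, 0.05), 0.8)`. A linear scan is slow for very large N but needs no monotonicity assumption; a bracketing search such as bisection would be faster if the power is known to increase with N.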

The hypotheses for the equivalence test are

$$
\begin{aligned}
H_0 &: p < \theta_L \ \text{or}\ p > \theta_U \\
H_1 &: \theta_L \le p \le \theta_U
\end{aligned}
$$

where $\theta_L$ and $\theta_U$ are the lower and upper equivalence bounds, respectively.

The analysis is the two one-sided tests (TOST) procedure as described in Chow, Shao, and Wang (2003) on p. 84, but using exact critical values as on p. 116 instead of normal-based critical values.

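Two different hypothesis tests are carried out:

$$
\begin{aligned}
H_{0L} &: p < \theta_L \\
H_{1L} &: p \ge \theta_L
\end{aligned}
$$

and

$$
\begin{aligned}
H_{0U} &: p > \theta_U \\
H_{1U} &: p \le \theta_U
\end{aligned}
$$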

If $H_{0L}$ is rejected in favor of $H_{1L}$ and $H_{0U}$ is rejected in favor of $H_{1U}$, then $H_0$ is rejected in favor of $H_1$.

The test statistic for each of the two tests ($H_{0L}$ versus $H_{1L}$ and $H_{0U}$ versus $H_{1U}$) is

$$X = \text{number of successes} \sim \mathrm{Bin}(N, p)$$

Let $C_U(\theta_L)$ denote the critical value of the exact upper one-sided test of $H_{0L}$ versus $H_{1L}$, and let $C_L(\theta_U)$ denote the critical value of the exact lower one-sided test of $H_{0U}$ versus $H_{1U}$. These critical values are computed in the section Exact Test of a Binomial Proportion (TEST=EXACT). Both null hypotheses are rejected if and only if $C_U(\theta_L) \le X \le C_L(\theta_U)$. Thus, the exact power of the equivalence test is

$$\text{power} = P\left(C_U(\theta_L) \le X \le C_L(\theta_U) \mid p\right)$$

The probabilities are computed using Johnson and Kotz (1970, equation 3.20).
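The sketch below computes the exact TOST power by summing binomial probabilities over $C_U(\theta_L) \le X \le C_L(\theta_U)$. It assumes the exact one-sided critical-value rules at level α (smallest C with $P(X \ge C \mid \theta_L) \le \alpha$, largest C with $P(X \le C \mid \theta_U) \le \alpha$) and sums probabilities directly instead of using the Johnson and Kotz formula. The function names are illustrative.

```python
from math import comb


def binom_pmf(x, n, p):
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def tail_ge(c, n, p):
    """P(X >= c)."""
    return sum(binom_pmf(x, n, p) for x in range(max(c, 0), n + 1))


def tail_le(c, n, p):
    """P(X <= c)."""
    return sum(binom_pmf(x, n, p) for x in range(0, min(c, n) + 1))


def exact_equivalence_power(n, theta_l, theta_u, p, alpha):
    """Return (C_U(theta_L), C_L(theta_U), power) for the exact TOST."""
    # exact upper one-sided test of H0L: smallest C with P(X >= C | theta_L) <= alpha
    c_u = min(c for c in range(n + 2) if tail_ge(c, n, theta_l) <= alpha)
    # exact lower one-sided test of H0U: largest C with P(X <= C | theta_U) <= alpha
    c_l = max(c for c in range(-1, n + 1) if tail_le(c, n, theta_u) <= alpha)
    # both nulls are rejected iff C_U(theta_L) <= X <= C_L(theta_U); an empty range gives 0
    power = sum(binom_pmf(x, n, p) for x in range(c_u, c_l + 1))
    return c_u, c_l, power
```

When the critical values cross, for example N = 10 with $\theta_L = 0.45$ and $\theta_U = 0.55$ (where $C_U(\theta_L) = 8 > C_L(\theta_U) = 2$), the rejection region is empty and the power is 0 for every p.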

The hypotheses for the equivalence test are

$$
\begin{aligned}
H_0 &: p < \theta_L \ \text{or}\ p > \theta_U \\
H_1 &: \theta_L \le p \le \theta_U
\end{aligned}
$$

where $\theta_L$ and $\theta_U$ are the lower and upper equivalence bounds, respectively.

The analysis is the two one-sided tests (TOST) procedure as described in Chow, Shao, and Wang (2003) on p. 84, but using the null variance instead of the sample variance.

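Two different hypothesis tests are carried out:

$$
\begin{aligned}
H_{0L} &: p < \theta_L \\
H_{1L} &: p \ge \theta_L
\end{aligned}
$$

and

$$
\begin{aligned}
H_{0U} &: p > \theta_U \\
H_{1U} &: p \le \theta_U
\end{aligned}
$$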

If $H_{0L}$ is rejected in favor of $H_{1L}$ and $H_{0U}$ is rejected in favor of $H_{1U}$, then $H_0$ is rejected in favor of $H_1$.

The test statistic for the test of $H_{0L}$ versus $H_{1L}$ is

$$Z_L(X) = \frac{X - N \theta_L}{\left[N \theta_L (1 - \theta_L)\right]^{\frac{1}{2}}}$$

The test statistic for the test of $H_{0U}$ versus $H_{1U}$ is

$$Z_U(X) = \frac{X - N \theta_U}{\left[N \theta_U (1 - \theta_U)\right]^{\frac{1}{2}}}$$

For the METHOD=EXACT option, let $C_U(\theta_L)$ denote the critical value of the exact upper one-sided test of $H_{0L}$ versus $H_{1L}$ using $Z_L(X)$. This critical value is computed in the section z Test for Binomial Proportion Using Null Variance (TEST=Z VAREST=NULL). Similarly, let $C_L(\theta_U)$ denote the critical value of the exact lower one-sided test of $H_{0U}$ versus $H_{1U}$ using $Z_U(X)$. Both null hypotheses are rejected if and only if $C_U(\theta_L) \le X \le C_L(\theta_U)$. Thus, the exact power of the equivalence test is

$$\text{power} = P\left(C_U(\theta_L) \le X \le C_L(\theta_U) \mid p\right)$$

The probabilities are computed using Johnson and Kotz (1970, equation 3.20).
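As an illustration, the sketch below evaluates this test both ways: the METHOD=EXACT power sums binomial probabilities over the X values for which $Z_L(X) \ge z_{1-\alpha}$ and $Z_U(X) \le z_{\alpha}$, and the normal approximation combines the two one-sided rejection probabilities as $\max\{0, P_L + P_U - 1\}$, which is exact when both events are tails of the same X. The rejection rules and names are assumptions for the sketch.

```python
from math import comb, sqrt
from statistics import NormalDist

STD = NormalDist()


def binom_pmf(x, n, p):
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def z_bound(x, n, theta):
    """(X - N theta) / [N theta (1 - theta)]^(1/2): Z_L(X) for theta_L, Z_U(X) for theta_U."""
    return (x - n * theta) / sqrt(n * theta * (1 - theta))


def exact_method_power(n, theta_l, theta_u, p, alpha):
    """Binomial probability of {Z_L(X) >= z_(1-alpha) and Z_U(X) <= z_alpha}."""
    z = STD.inv_cdf(1 - alpha)
    return sum(binom_pmf(x, n, p) for x in range(n + 1)
               if z_bound(x, n, theta_l) >= z and z_bound(x, n, theta_u) <= -z)


def normal_method_power(n, theta_l, theta_u, p, alpha):
    """max{0, P(Z_L >= z_(1-alpha)) + P(Z_U <= z_alpha) - 1} under the normal approximations."""
    z = STD.inv_cdf(1 - alpha)
    sp = sqrt(p * (1 - p))
    a = (sqrt(n) * (p - theta_l) - z * sqrt(theta_l * (1 - theta_l))) / sp
    b = (sqrt(n) * (theta_u - p) - z * sqrt(theta_u * (1 - theta_u))) / sp
    return max(0.0, STD.cdf(a) + STD.cdf(b) - 1)
```

For N = 200, $\theta_L = 0.4$, $\theta_U = 0.6$, and p = 0.5, the two methods give similar powers; for small N with narrow bounds the normal version is floored at 0.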

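For the METHOD=NORMAL option, the test statistic $Z_L(X)$ is assumed to have the normal distribution

$$Z_L(X) \sim N\left(\frac{N^{\frac{1}{2}}(p - \theta_L)}{\left[\theta_L (1 - \theta_L)\right]^{\frac{1}{2}}},\ \frac{p (1 - p)}{\theta_L (1 - \theta_L)}\right)$$

and the test statistic $Z_U(X)$ is assumed to have the normal distribution

$$Z_U(X) \sim N\left(\frac{N^{\frac{1}{2}}(p - \theta_U)}{\left[\theta_U (1 - \theta_U)\right]^{\frac{1}{2}}},\ \frac{p (1 - p)}{\theta_U (1 - \theta_U)}\right)$$

(see Chow, Shao, and Wang (2003, p. 84)). The approximate power is computed as

$$\text{power} = \max\left\{0,\ \Phi\left(\frac{N^{\frac{1}{2}}(\theta_U - p) - z_{1-\alpha}\left[\theta_U (1 - \theta_U)\right]^{\frac{1}{2}}}{\left[p (1 - p)\right]^{\frac{1}{2}}}\right) + \Phi\left(\frac{N^{\frac{1}{2}}(p - \theta_L) - z_{1-\alpha}\left[\theta_L (1 - \theta_L)\right]^{\frac{1}{2}}}{\left[p (1 - p)\right]^{\frac{1}{2}}}\right) - 1\right\}$$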

The approximate sample size is computed by numerically inverting the power formula, using the sample size estimate of Chow, Shao, and Wang (2003, p. 85) as an initial guess.

The hypotheses for the equivalence test are

$$
\begin{aligned}
H_0 &: p < \theta_L \ \text{or}\ p > \theta_U \\
H_1 &: \theta_L \le p \le \theta_U
\end{aligned}
$$

where $\theta_L$ and $\theta_U$ are the lower and upper equivalence bounds, respectively.

The analysis is the two one-sided tests (TOST) procedure as described in Chow, Shao, and Wang (2003) on p. 84.

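Two different hypothesis tests are carried out:

$$
\begin{aligned}
H_{0L} &: p < \theta_L \\
H_{1L} &: p \ge \theta_L
\end{aligned}
$$

and

$$
\begin{aligned}
H_{0U} &: p > \theta_U \\
H_{1U} &: p \le \theta_U
\end{aligned}
$$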

If $H_{0L}$ is rejected in favor of $H_{1L}$ and $H_{0U}$ is rejected in favor of $H_{1U}$, then $H_0$ is rejected in favor of $H_1$.

The test statistic for the test of $H_{0L}$ versus $H_{1L}$ is

$$Z_{sL}(X) = \frac{X - N \theta_L}{\left[N \hat{p} (1 - \hat{p})\right]^{\frac{1}{2}}}$$

where $\hat{p} = X/N$.

The test statistic for the test of $H_{0U}$ versus $H_{1U}$ is

$$Z_{sU}(X) = \frac{X - N \theta_U}{\left[N \hat{p} (1 - \hat{p})\right]^{\frac{1}{2}}}$$

For the METHOD=EXACT option, let $C_U(\theta_L)$ denote the critical value of the exact upper one-sided test of $H_{0L}$ versus $H_{1L}$ using $Z_{sL}(X)$. This critical value is computed in the section z Test for Binomial Proportion Using Sample Variance (TEST=Z VAREST=SAMPLE). Similarly, let $C_L(\theta_U)$ denote the critical value of the exact lower one-sided test of $H_{0U}$ versus $H_{1U}$ using $Z_{sU}(X)$. Both null hypotheses are rejected if and only if $C_U(\theta_L) \le X \le C_L(\theta_U)$. Thus, the exact power of the equivalence test is

$$\text{power} = P\left(C_U(\theta_L) \le X \le C_L(\theta_U) \mid p\right)$$

The probabilities are computed using Johnson and Kotz (1970, equation 3.20).
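One practical detail with the sample-variance statistics is that $N\hat{p}(1-\hat{p}) = X(N-X)/N$ is 0 at X = 0 and X = N. The sketch below assumes those endpoints take the limiting values $\pm\infty$; with $0 < \theta_L < \theta_U < 1$, X = 0 never rejects $H_{0L}$ and X = N never rejects $H_{0U}$, so neither endpoint enters the TOST rejection region. It then compares the METHOD=EXACT power with a unit-variance normal approximation. Names and conventions are illustrative assumptions.

```python
from math import comb, sqrt
from statistics import NormalDist

STD = NormalDist()


def binom_pmf(x, n, p):
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def z_sample(x, n, theta):
    """(X - N theta) / [N p_hat (1 - p_hat)]^(1/2) with p_hat = X/N.

    Assumption: at X = 0 or X = N the statistic is +inf or -inf by the sign of the numerator.
    """
    v = x * (n - x) / n  # equals N p_hat (1 - p_hat)
    num = x - n * theta
    if v == 0:
        return float("inf") if num > 0 else float("-inf")
    return num / sqrt(v)


def exact_method_power(n, theta_l, theta_u, p, alpha):
    """Binomial probability of {Z_sL(X) >= z_(1-alpha) and Z_sU(X) <= z_alpha}."""
    z = STD.inv_cdf(1 - alpha)
    return sum(binom_pmf(x, n, p) for x in range(n + 1)
               if z_sample(x, n, theta_l) >= z and z_sample(x, n, theta_u) <= -z)


def normal_method_power(n, theta_l, theta_u, p, alpha):
    """max{0, Phi(N^(1/2)(p - theta_L)/s - z) + Phi(N^(1/2)(theta_U - p)/s - z) - 1}."""
    z = STD.inv_cdf(1 - alpha)
    s = sqrt(p * (1 - p))
    a = sqrt(n) * (p - theta_l) / s - z
    b = sqrt(n) * (theta_u - p) / s - z
    return max(0.0, STD.cdf(a) + STD.cdf(b) - 1)
```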

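For the METHOD=NORMAL option, the test statistic $Z_{sL}(X)$ is assumed to have the normal distribution

$$Z_{sL}(X) \sim N\left(\frac{N^{\frac{1}{2}}(p - \theta_L)}{\left[p (1 - p)\right]^{\frac{1}{2}}},\ 1\right)$$

and the test statistic $Z_{sU}(X)$ is assumed to have the normal distribution

$$Z_{sU}(X) \sim N\left(\frac{N^{\frac{1}{2}}(p - \theta_U)}{\left[p (1 - p)\right]^{\frac{1}{2}}},\ 1\right)$$

(see Chow, Shao, and Wang (2003, p. 84)).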

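The approximate power is computed as

$$\text{power} = \max\left\{0,\ \Phi\left(\frac{N^{\frac{1}{2}}(\theta_U - p)}{\left[p (1 - p)\right]^{\frac{1}{2}}} - z_{1-\alpha}\right) + \Phi\left(\frac{N^{\frac{1}{2}}(p - \theta_L)}{\left[p (1 - p)\right]^{\frac{1}{2}}} - z_{1-\alpha}\right) - 1\right\}$$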

The approximate sample size is computed by numerically inverting the power formula, using the sample size estimate of Chow, Shao, and Wang (2003, p. 85) as an initial guess.

The hypotheses for the equivalence test are

$$
\begin{aligned}
H_0 &: p < \theta_L \ \text{or}\ p > \theta_U \\
H_1 &: \theta_L \le p \le \theta_U
\end{aligned}
$$

where $\theta_L$ and $\theta_U$ are the lower and upper equivalence bounds, respectively.

The analysis is the two one-sided tests (TOST) procedure as described in Chow, Shao, and Wang (2003) on p. 84, but using the null variance instead of the sample variance.

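Two different hypothesis tests are carried out:

$$
\begin{aligned}
H_{0L} &: p < \theta_L \\
H_{1L} &: p \ge \theta_L
\end{aligned}
$$

and

$$
\begin{aligned}
H_{0U} &: p > \theta_U \\
H_{1U} &: p \le \theta_U
\end{aligned}
$$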

If $H_{0L}$ is rejected in favor of $H_{1L}$ and $H_{0U}$ is rejected in favor of $H_{1U}$, then $H_0$ is rejected in favor of $H_1$.

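The test statistic for the test of $H_{0L}$ versus $H_{1L}$ is

$$Z_{cL}(X) = \begin{cases} \dfrac{X + 0.5 - N \theta_L}{\left[N \theta_L (1 - \theta_L)\right]^{\frac{1}{2}}}, & X < N \theta_L \\ 0, & X = N \theta_L \\ \dfrac{X - 0.5 - N \theta_L}{\left[N \theta_L (1 - \theta_L)\right]^{\frac{1}{2}}}, & X > N \theta_L \end{cases}$$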

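The test statistic for the test of $H_{0U}$ versus $H_{1U}$ is

$$Z_{cU}(X) = \begin{cases} \dfrac{X + 0.5 - N \theta_U}{\left[N \theta_U (1 - \theta_U)\right]^{\frac{1}{2}}}, & X < N \theta_U \\ 0, & X = N \theta_U \\ \dfrac{X - 0.5 - N \theta_U}{\left[N \theta_U (1 - \theta_U)\right]^{\frac{1}{2}}}, & X > N \theta_U \end{cases}$$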

For the METHOD=EXACT option, let $C_U(\theta_L)$ denote the critical value of the exact upper one-sided test of $H_{0L}$ versus $H_{1L}$ using $Z_{cL}(X)$. This critical value is computed in the section z Test for Binomial Proportion with Continuity Adjustment Using Null Variance (TEST=ADJZ VAREST=NULL). Similarly, let $C_L(\theta_U)$ denote the critical value of the exact lower one-sided test of $H_{0U}$ versus $H_{1U}$ using $Z_{cU}(X)$. Both null hypotheses are rejected if and only if $C_U(\theta_L) \le X \le C_L(\theta_U)$. Thus, the exact power of the equivalence test is

$$\text{power} = P\left(C_U(\theta_L) \le X \le C_L(\theta_U) \mid p\right)$$

The probabilities are computed using Johnson and Kotz (1970, equation 3.20).

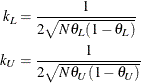

For the METHOD=NORMAL option, the test statistic $Z_{cL}(X)$ is assumed to have the normal distribution $N(\mu_L, \sigma_L^2)$, and $Z_{cU}(X)$ is assumed to have the normal distribution $N(\mu_U, \sigma_U^2)$, where $\mu_L$, $\mu_U$, $\sigma_L^2$, and $\sigma_U^2$ are derived as follows.

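For convenience of notation, define

$$k_L = \frac{1}{2\left[N \theta_L (1 - \theta_L)\right]^{\frac{1}{2}}}, \qquad k_U = \frac{1}{2\left[N \theta_U (1 - \theta_U)\right]^{\frac{1}{2}}}$$

Then

$$
\begin{aligned}
E\left[Z_{cL}(X)\right] &= 2 k_L N (p - \theta_L) + k_L \left[ P(X < N \theta_L) - P(X > N \theta_L) \right] \\
E\left[Z_{cU}(X)\right] &= 2 k_U N (p - \theta_U) + k_U \left[ P(X < N \theta_U) - P(X > N \theta_U) \right]
\end{aligned}
$$

and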

![\begin{align*} \mr {Var} \left[Z_{cL}(X)\right] & \approx 4 k_ L^2 N p (1-p) + k_ L^2 \left[ 1 - P(X = N \theta _ L) \right] - k_ L^2 \left[ P(X<N\theta _ L) - P(X>N\theta _ L) \right]^2 \\ & \quad + 4 k_ L^2 \left[ E\left(X 1_{\{ X<N\theta _ L\} }\right) - E\left(X 1_{\{ X>N\theta _ L\} }\right) \right] - 4 k_ L^2 N p \left[P(X<N\theta _ L) - P(X>N\theta _ L)\right] \\ \mr {Var} \left[Z_{cU}(X)\right] & \approx 4 k_ U^2 N p (1-p) + k_ U^2 \left[ 1 - P(X = N \theta _ U) \right] - k_ U^2 \left[ P(X<N\theta _ U) - P(X>N\theta _ U) \right]^2 \\ & \quad + 4 k_ U^2 \left[ E\left(X 1_{\{ X<N\theta _ U\} }\right) - E\left(X 1_{\{ X>N\theta _ U\} }\right) \right] - 4 k_ U^2 N p \left[P(X<N\theta _ U) - P(X>N\theta _ U)\right] \\ \end{align*}](images/statug_power0250.png)

The probabilities $P(X = N\theta_L)$, $P(X = N\theta_U)$, $P(X < N\theta_L)$, $P(X < N\theta_U)$, $P(X > N\theta_L)$, and $P(X > N\theta_U)$ and the truncated expectations $E\left(X 1_{\{X < N\theta_L\}}\right)$, $E\left(X 1_{\{X < N\theta_U\}}\right)$, $E\left(X 1_{\{X > N\theta_L\}}\right)$, and $E\left(X 1_{\{X > N\theta_U\}}\right)$ are approximated by assuming the normal-approximate distribution of X, $N\left(N p,\ N p (1 - p)\right)$. Letting $\phi(\cdot)$ and $\Phi(\cdot)$ denote the standard normal PDF and CDF, respectively, and defining $d_L$ and $d_U$ as

![\begin{align*} d_ L = \frac{N \theta _ L - N p}{\left[ N p (1-p) \right]^\frac {1}{2}} \\ d_ U = \frac{N \theta _ U - N p}{\left[ N p (1-p) \right]^\frac {1}{2}} \end{align*}](images/statug_power0261.png)

the terms are computed as follows:

![\begin{align*} P(X=N\theta _ L) & = 0 \\ P(X=N\theta _ U) & = 0 \\ P(X<N\theta _ L) & = \Phi (d_ L) \\ P(X<N\theta _ U) & = \Phi (d_ U) \\ P(X>N\theta _ L) & = 1 - \Phi (d_ L) \\ P(X>N\theta _ U) & = 1 - \Phi (d_ U) \\ E\left(X 1_{\{ X<N\theta _ L\} }\right) & = Np\Phi (d_ L) - \left[ N p (1-p) \right]^\frac {1}{2} \phi (d_ L) \\ E\left(X 1_{\{ X<N\theta _ U\} }\right) & = Np\Phi (d_ U) - \left[ N p (1-p) \right]^\frac {1}{2} \phi (d_ U) \\ E\left(X 1_{\{ X>N\theta _ L\} }\right) & = Np\left[ 1 - \Phi (d_ L) \right] + \left[ N p (1-p) \right]^\frac {1}{2} \phi (d_ L) \\ E\left(X 1_{\{ X>N\theta _ U\} }\right) & = Np\left[ 1 - \Phi (d_ U) \right] + \left[ N p (1-p) \right]^\frac {1}{2} \phi (d_ U) \\ \end{align*}](images/statug_power0262.png)

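The mean and variance of $Z_{cL}(X)$ and $Z_{cU}(X)$ are thus approximated by

$$
\begin{aligned}
\mu_L &= 2 k_L N (p - \theta_L) + k_L \left[ 2\Phi(d_L) - 1 \right] \\
\mu_U &= 2 k_U N (p - \theta_U) + k_U \left[ 2\Phi(d_U) - 1 \right]
\end{aligned}
$$

and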

![\begin{align*} \sigma _ L^2 & = 4k_ L^2 \left[Np(1-p) + \Phi (d_ L)\left( 1-\Phi (d_ L) \right) - 2 \left( Np(1-p) \right)^\frac {1}{2} \phi (d_ L) \right] \\ \sigma _ U^2 & = 4k_ U^2 \left[Np(1-p) + \Phi (d_ U)\left( 1-\Phi (d_ U) \right) - 2 \left( Np(1-p) \right)^\frac {1}{2} \phi (d_ U) \right] \\ \end{align*}](images/statug_power0264.png)

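The approximate power is computed as

$$\text{power} = \max\left\{0,\ \Phi\left(\frac{\mu_L - z_{1-\alpha}}{\sigma_L}\right) + \Phi\left(\frac{z_{\alpha} - \mu_U}{\sigma_U}\right) - 1\right\}$$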

The approximate sample size is computed by numerically inverting the power formula.
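The sketch below puts the pieces of this normal approximation together: it forms $\mu_L$, $\sigma_L$, $\mu_U$, and $\sigma_U$ from $k_L$, $k_U$, $d_L$, and $d_U$, combines the two one-sided rejection probabilities as $\max\{0, P_L + P_U - 1\}$, and compares the result with the binomial probability of the actual TOST rejection region. The rejection rules ($Z_{cL} \ge z_{1-\alpha}$, $Z_{cU} \le z_{\alpha}$) and the function names are assumptions.

```python
from math import comb, sqrt
from statistics import NormalDist

STD = NormalDist()


def binom_pmf(x, n, p):
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def z_cadj(x, n, theta):
    """Continuity-adjusted z statistic centered at theta, with the null variance at theta."""
    se = sqrt(n * theta * (1 - theta))
    if x < n * theta:
        return (x + 0.5 - n * theta) / se
    if x > n * theta:
        return (x - 0.5 - n * theta) / se
    return 0.0


def moments(n, theta, p):
    """Normal-approximate (mu, sigma) of the statistic centered at theta, X ~ Bin(n, p)."""
    k = 1 / (2 * sqrt(n * theta * (1 - theta)))
    s = sqrt(n * p * (1 - p))
    d = (n * theta - n * p) / s
    Phi, phi = STD.cdf(d), STD.pdf(d)
    mu = 2 * k * n * (p - theta) + k * (2 * Phi - 1)
    var = 4 * k**2 * (s**2 + Phi * (1 - Phi) - 2 * s * phi)
    return mu, sqrt(var)


def normal_power(n, theta_l, theta_u, p, alpha):
    z = STD.inv_cdf(1 - alpha)
    mu_l, sd_l = moments(n, theta_l, p)
    mu_u, sd_u = moments(n, theta_u, p)
    # P(Z_cL >= z_(1-alpha)) + P(Z_cU <= z_alpha) - 1, floored at 0
    return max(0.0, STD.cdf((mu_l - z) / sd_l) + STD.cdf((-z - mu_u) / sd_u) - 1)


def exact_power(n, theta_l, theta_u, p, alpha):
    z = STD.inv_cdf(1 - alpha)
    return sum(binom_pmf(x, n, p) for x in range(n + 1)
               if z_cadj(x, n, theta_l) >= z and z_cadj(x, n, theta_u) <= -z)
```

Any search for the sample size, such as a scan over N, can call `normal_power` repeatedly; the enumeration in `exact_power` is only there to check the approximation.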

The hypotheses for the equivalence test are

$$
\begin{aligned}
H_0 &: p < \theta_L \ \text{or}\ p > \theta_U \\
H_1 &: \theta_L \le p \le \theta_U
\end{aligned}
$$

where $\theta_L$ and $\theta_U$ are the lower and upper equivalence bounds, respectively.

The analysis is the two one-sided tests (TOST) procedure as described in Chow, Shao, and Wang (2003) on p. 84.

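Two different hypothesis tests are carried out:

$$
\begin{aligned}
H_{0L} &: p < \theta_L \\
H_{1L} &: p \ge \theta_L
\end{aligned}
$$

and

$$
\begin{aligned}
H_{0U} &: p > \theta_U \\
H_{1U} &: p \le \theta_U
\end{aligned}
$$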

If $H_{0L}$ is rejected in favor of $H_{1L}$ and $H_{0U}$ is rejected in favor of $H_{1U}$, then $H_0$ is rejected in favor of $H_1$.

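The test statistic for the test of $H_{0L}$ versus $H_{1L}$ is

$$Z_{csL}(X) = \begin{cases} \dfrac{X + 0.5 - N \theta_L}{\left[N \hat{p} (1 - \hat{p})\right]^{\frac{1}{2}}}, & X < N \theta_L \\ 0, & X = N \theta_L \\ \dfrac{X - 0.5 - N \theta_L}{\left[N \hat{p} (1 - \hat{p})\right]^{\frac{1}{2}}}, & X > N \theta_L \end{cases}$$

where $\hat{p} = X/N$.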

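The test statistic for the test of $H_{0U}$ versus $H_{1U}$ is

$$Z_{csU}(X) = \begin{cases} \dfrac{X + 0.5 - N \theta_U}{\left[N \hat{p} (1 - \hat{p})\right]^{\frac{1}{2}}}, & X < N \theta_U \\ 0, & X = N \theta_U \\ \dfrac{X - 0.5 - N \theta_U}{\left[N \hat{p} (1 - \hat{p})\right]^{\frac{1}{2}}}, & X > N \theta_U \end{cases}$$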

For the METHOD=EXACT option, let $C_U(\theta_L)$ denote the critical value of the exact upper one-sided test of $H_{0L}$ versus $H_{1L}$ using $Z_{csL}(X)$. This critical value is computed in the section z Test for Binomial Proportion with Continuity Adjustment Using Sample Variance (TEST=ADJZ VAREST=SAMPLE). Similarly, let $C_L(\theta_U)$ denote the critical value of the exact lower one-sided test of $H_{0U}$ versus $H_{1U}$ using $Z_{csU}(X)$. Both null hypotheses are rejected if and only if $C_U(\theta_L) \le X \le C_L(\theta_U)$. Thus, the exact power of the equivalence test is

$$\text{power} = P\left(C_U(\theta_L) \le X \le C_L(\theta_U) \mid p\right)$$

The probabilities are computed using Johnson and Kotz (1970, equation 3.20).

For the METHOD=NORMAL option, the test statistic $Z_{csL}(X)$ is assumed to have the normal distribution $N(\mu_L, \sigma_L^2)$, and $Z_{csU}(X)$ is assumed to have the normal distribution $N(\mu_U, \sigma_U^2)$, where $\mu_L$, $\mu_U$, $\sigma_L^2$, and $\sigma_U^2$ are derived as follows.

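For convenience of notation, define

$$k = \frac{1}{2\left[N p (1 - p)\right]^{\frac{1}{2}}}$$

Then

$$
\begin{aligned}
E\left[Z_{csL}(X)\right] &\approx 2 k N (p - \theta_L) + k \left[ P(X < N \theta_L) - P(X > N \theta_L) \right] \\
E\left[Z_{csU}(X)\right] &\approx 2 k N (p - \theta_U) + k \left[ P(X < N \theta_U) - P(X > N \theta_U) \right]
\end{aligned}
$$

and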

![\begin{align*} \mr {Var} \left[Z_{csL}(X)\right] & \approx 4 k^2 N p (1-p) + k^2 \left[ 1 - P(X = N \theta _ L) \right] - k^2 \left[ P(X<N\theta _ L) - P(X>N\theta _ L) \right]^2 \\ & \quad + 4 k^2 \left[ E\left(X 1_{\{ X<N\theta _ L\} }\right) - E\left(X 1_{\{ X>N\theta _ L\} }\right) \right] - 4 k^2 N p \left[P(X<N\theta _ L) - P(X>N\theta _ L)\right] \\ \mr {Var} \left[Z_{csU}(X)\right] & \approx 4 k^2 N p (1-p) + k^2 \left[ 1 - P(X = N \theta _ U) \right] - k^2 \left[ P(X<N\theta _ U) - P(X>N\theta _ U) \right]^2 \\ & \quad + 4 k^2 \left[ E\left(X 1_{\{ X<N\theta _ U\} }\right) - E\left(X 1_{\{ X>N\theta _ U\} }\right) \right] - 4 k^2 N p \left[P(X<N\theta _ U) - P(X>N\theta _ U)\right] \\ \end{align*}](images/statug_power0271.png)

The probabilities $P(X = N\theta_L)$, $P(X = N\theta_U)$, $P(X < N\theta_L)$, $P(X < N\theta_U)$, $P(X > N\theta_L)$, and $P(X > N\theta_U)$ and the truncated expectations $E\left(X 1_{\{X < N\theta_L\}}\right)$, $E\left(X 1_{\{X < N\theta_U\}}\right)$, $E\left(X 1_{\{X > N\theta_L\}}\right)$, and $E\left(X 1_{\{X > N\theta_U\}}\right)$ are approximated by assuming the normal-approximate distribution of X, $N\left(N p,\ N p (1 - p)\right)$. Letting $\phi(\cdot)$ and $\Phi(\cdot)$ denote the standard normal PDF and CDF, respectively, and defining $d_L$ and $d_U$ as

![\begin{align*} d_ L = \frac{N \theta _ L - N p}{\left[ N p (1-p) \right]^\frac {1}{2}} \\ d_ U = \frac{N \theta _ U - N p}{\left[ N p (1-p) \right]^\frac {1}{2}} \end{align*}](images/statug_power0261.png)

the terms are computed as follows:

![\begin{align*} P(X=N\theta _ L) & = 0 \\ P(X=N\theta _ U) & = 0 \\ P(X<N\theta _ L) & = \Phi (d_ L) \\ P(X<N\theta _ U) & = \Phi (d_ U) \\ P(X>N\theta _ L) & = 1 - \Phi (d_ L) \\ P(X>N\theta _ U) & = 1 - \Phi (d_ U) \\ E\left(X 1_{\{ X<N\theta _ L\} }\right) & = Np\Phi (d_ L) - \left[ N p (1-p) \right]^\frac {1}{2} \phi (d_ L) \\ E\left(X 1_{\{ X<N\theta _ U\} }\right) & = Np\Phi (d_ U) - \left[ N p (1-p) \right]^\frac {1}{2} \phi (d_ U) \\ E\left(X 1_{\{ X>N\theta _ L\} }\right) & = Np\left[ 1 - \Phi (d_ L) \right] + \left[ N p (1-p) \right]^\frac {1}{2} \phi (d_ L) \\ E\left(X 1_{\{ X>N\theta _ U\} }\right) & = Np\left[ 1 - \Phi (d_ U) \right] + \left[ N p (1-p) \right]^\frac {1}{2} \phi (d_ U) \\ \end{align*}](images/statug_power0262.png)

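The mean and variance of $Z_{csL}(X)$ and $Z_{csU}(X)$ are thus approximated by

$$
\begin{aligned}
\mu_L &= 2 k N (p - \theta_L) + k \left[ 2\Phi(d_L) - 1 \right] \\
\mu_U &= 2 k N (p - \theta_U) + k \left[ 2\Phi(d_U) - 1 \right]
\end{aligned}
$$

and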

![\begin{align*} \sigma _ L^2 & = 4k^2 \left[Np(1-p) + \Phi (d_ L)\left( 1-\Phi (d_ L) \right) - 2 \left( Np(1-p) \right)^\frac {1}{2} \phi (d_ L) \right] \\ \sigma _ U^2 & = 4k^2 \left[Np(1-p) + \Phi (d_ U)\left( 1-\Phi (d_ U) \right) - 2 \left( Np(1-p) \right)^\frac {1}{2} \phi (d_ U) \right] \\ \end{align*}](images/statug_power0273.png)

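The approximate power is computed as

$$\text{power} = \max\left\{0,\ \Phi\left(\frac{\mu_L - z_{1-\alpha}}{\sigma_L}\right) + \Phi\left(\frac{z_{\alpha} - \mu_U}{\sigma_U}\right) - 1\right\}$$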

The approximate sample size is computed by numerically inverting the power formula.
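The closed-form variances $\sigma_L^2$ and $\sigma_U^2$ follow from the general variance expressions by two simplifications once the normal-approximate terms are substituted: $k^2\{1 - [2\Phi(d) - 1]^2\} = 4k^2\Phi(d)\left(1 - \Phi(d)\right)$, and the truncated-expectation term combines with the last term to leave $-8k^2[Np(1-p)]^{1/2}\phi(d)$. The sketch below checks numerically that the two forms agree for a single bound θ (standing for $\theta_L$ or $\theta_U$); the helper names are illustrative.

```python
from math import sqrt
from statistics import NormalDist

STD = NormalDist()


def _terms(n, theta, p):
    """k, (Np(1-p))^(1/2), Phi(d), phi(d) for one equivalence bound theta."""
    s = sqrt(n * p * (1 - p))
    k = 1 / (2 * s)
    d = (n * theta - n * p) / s
    return k, s, STD.cdf(d), STD.pdf(d)


def var_general(n, theta, p):
    """General variance expression with the normal-approximate terms substituted."""
    k, s, Phi, phi = _terms(n, theta, p)
    p_eq, p_lt, p_gt = 0.0, Phi, 1 - Phi
    e_lt = n * p * Phi - s * phi        # E(X 1{X < N theta})
    e_gt = n * p * (1 - Phi) + s * phi  # E(X 1{X > N theta})
    return (4 * k**2 * n * p * (1 - p)
            + k**2 * (1 - p_eq)
            - k**2 * (p_lt - p_gt) ** 2
            + 4 * k**2 * (e_lt - e_gt)
            - 4 * k**2 * n * p * (p_lt - p_gt))


def var_closed(n, theta, p):
    """Simplified form 4k^2 [Np(1-p) + Phi(d)(1 - Phi(d)) - 2 (Np(1-p))^(1/2) phi(d)]."""
    k, s, Phi, phi = _terms(n, theta, p)
    return 4 * k**2 * (s**2 + Phi * (1 - Phi) - 2 * s * phi)
```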

The two-sided $100(1-\alpha)\%$ confidence interval for p is

![\[ \frac{X + \frac{z^2_{1-\alpha /2}}{2}}{N + z^2_{1-\alpha /2}} \quad \pm \quad \frac{z_{1-\alpha /2} N^\frac {1}{2}}{N + z^2_{1-\alpha /2}} \left(\hat{p}(1-\hat{p}) + \frac{z^2_{1-\alpha /2}}{4N} \right)^\frac {1}{2} \]](images/statug_power0275.png)

So the half-width for the two-sided $100(1-\alpha)\%$ confidence interval is

![\[ \mbox{half-width} = \frac{z_{1-\alpha /2} N^\frac {1}{2}}{N + z^2_{1-\alpha /2}} \left(\hat{p}(1-\hat{p}) + \frac{z^2_{1-\alpha /2}}{4N} \right)^\frac {1}{2} \]](images/statug_power0276.png)

Prob(Width) is calculated exactly by adding up the probabilities of observing each $X \in \{0, \ldots, N\}$ that produces a confidence interval whose half-width is at most a target value h:

$$\text{Prob(Width)} = \sum_{x=0}^{N} P(X = x \mid N, p)\, 1_{\{\text{half-width}(x) \le h\}}$$

For references and more details about this and all other confidence intervals associated with the CI= option, see Binomial Proportion in Chapter 40: The FREQ Procedure.
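Because X takes only N + 1 values, Prob(Width) can be computed by evaluating the half-width formula above (the Wilson score form) at every x and summing the binomial probabilities of the qualifying x. A minimal sketch with illustrative function names:

```python
from math import comb, sqrt
from statistics import NormalDist


def binom_pmf(x: int, n: int, p: float) -> float:
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def wilson_half_width(x: int, n: int, alpha: float) -> float:
    """Half-width of the two-sided 100(1-alpha)% interval above, with p_hat = X/N."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    p_hat = x / n
    return z * sqrt(n) / (n + z**2) * sqrt(p_hat * (1 - p_hat) + z**2 / (4 * n))


def prob_width(half_width, n: int, p: float, alpha: float, h: float) -> float:
    """Sum P(X = x | N, p) over every x in {0, ..., N} whose half-width is at most h."""
    return sum(binom_pmf(x, n, p) for x in range(n + 1) if half_width(x, n, alpha) <= h)
```

Usage: `prob_width(wilson_half_width, 50, 0.3, 0.05, 0.13)`. The half-width depends on x only through $\hat{p}(1-\hat{p})$, so it is largest at $\hat{p} = 1/2$, and Prob(Width) jumps as h crosses each of the distinct half-width values.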

The two-sided $100(1-\alpha)\%$ confidence interval for p is

![\[ \frac{X + \frac{z^2_{1-\alpha /2}}{2}}{N + z^2_{1-\alpha /2}} \quad \pm \quad z_{1-\alpha /2} \left( \frac{\frac{X + \frac{z^2_{1-\alpha /2}}{2}}{N + z^2_{1-\alpha /2}} \left(1 -\frac{X + \frac{z^2_{1-\alpha /2}}{2}}{N + z^2_{1-\alpha /2}} \right)}{N + z^2_{1-\alpha /2}} \right)^\frac {1}{2} \]](images/statug_power0279.png)

So the half-width for the two-sided $100(1-\alpha)\%$ confidence interval is

![\[ \mbox{half-width} = z_{1-\alpha /2} \left( \frac{\frac{X + \frac{z^2_{1-\alpha /2}}{2}}{N + z^2_{1-\alpha /2}} \left(1 -\frac{X + \frac{z^2_{1-\alpha /2}}{2}}{N + z^2_{1-\alpha /2}} \right)}{N + z^2_{1-\alpha /2}} \right)^\frac {1}{2} \]](images/statug_power0280.png)
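This half-width (the Agresti-Coull form) depends on X only through $\tilde{p} = (X + z^2_{1-\alpha/2}/2)/(N + z^2_{1-\alpha/2})$. Since $\tilde{p}(1-\tilde{p}) \le 1/4$, it never exceeds $z_{1-\alpha/2}/(2[N + z^2_{1-\alpha/2}]^{1/2})$. The sketch below evaluates it and sums binomial probabilities of the X values that meet a target half-width h, in the exact-enumeration manner that this section uses for Prob(Width). Function names are illustrative.

```python
from math import comb, sqrt
from statistics import NormalDist


def binom_pmf(x: int, n: int, p: float) -> float:
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def ac_half_width(x: int, n: int, alpha: float) -> float:
    """z * [p_tilde (1 - p_tilde) / (N + z^2)]^(1/2), p_tilde = (X + z^2/2) / (N + z^2)."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    p_tilde = (x + z**2 / 2) / (n + z**2)
    return z * sqrt(p_tilde * (1 - p_tilde) / (n + z**2))


def prob_width(n: int, p: float, alpha: float, h: float) -> float:
    """Sum P(X = x | N, p) over x in {0, ..., N} whose half-width is at most h."""
    return sum(binom_pmf(x, n, p) for x in range(n + 1) if ac_half_width(x, n, alpha) <= h)
```

Because of the bound above, any h at or above $z_{1-\alpha/2}/(2[N + z^2_{1-\alpha/2}]^{1/2})$ gives Prob(Width) = 1 regardless of p.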

Prob(Width) is calculated exactly by adding up the probabilities of observing each $X \in \{0, \ldots, N\}$ that produces a confidence interval whose half-width is at most a target value h:

$$\text{Prob(Width)} = \sum_{x=0}^{N} P(X = x \mid N, p)\, 1_{\{\text{half-width}(x) \le h\}}$$

The two-sided $100(1-\alpha)\%$ confidence interval for p is

where

and

The half-width of this two-sided $100(1-\alpha)\%$ confidence interval is defined as half the width of the full interval:

Prob(Width) is calculated exactly by adding up the probabilities of observing each $X \in \{0, \ldots, N\}$ that produces a confidence interval whose half-width is at most a target value h:

$$\text{Prob(Width)} = \sum_{x=0}^{N} P(X = x \mid N, p)\, 1_{\{\text{half-width}(x) \le h\}}$$

The two-sided $100(1-\alpha)\%$ confidence interval for p is

where

and

The half-width of this two-sided $100(1-\alpha)\%$ confidence interval is defined as half the width of the full interval:

Prob(Width) is calculated exactly by adding up the probabilities of observing each $X \in \{0, \ldots, N\}$ that produces a confidence interval whose half-width is at most a target value h:

$$\text{Prob(Width)} = \sum_{x=0}^{N} P(X = x \mid N, p)\, 1_{\{\text{half-width}(x) \le h\}}$$

The two-sided $100(1-\alpha)\%$ confidence interval for p is

So the half-width for the two-sided $100(1-\alpha)\%$ confidence interval is

Prob(Width) is calculated exactly by adding up the probabilities of observing each $X \in \{0, \ldots, N\}$ that produces a confidence interval whose half-width is at most a target value h:

$$\text{Prob(Width)} = \sum_{x=0}^{N} P(X = x \mid N, p)\, 1_{\{\text{half-width}(x) \le h\}}$$

The two-sided $100(1-\alpha)\%$ confidence interval for p is

So the half-width for the two-sided $100(1-\alpha)\%$ confidence interval is

Prob(Width) is calculated exactly by adding up the probabilities of observing each $X \in \{0, \ldots, N\}$ that produces a confidence interval whose half-width is at most a target value h:

$$\text{Prob(Width)} = \sum_{x=0}^{N} P(X = x \mid N, p)\, 1_{\{\text{half-width}(x) \le h\}}$$