DataFlux Data Management Studio 2.6: User Guide

Deploying a Data Job as a Real-Time Service

Overview

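You can deploy a data job to a DataFlux Data Management Server. The job can then be executed as a real-time service from a Web client or another application. Perform the following tasks, each of which is described in its own section below: meet the prerequisites, create a data job that can be deployed as a real-time service, and deploy the data job.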

Prerequisites

The following prerequisites must be met:

- You must have a connection to the DataFlux Data Management Server where the jobs will be deployed. For more information, see Connecting to Data Management Servers.

- The DataFlux Data Management Server must have all resources that are required by the deployed job, such as data connections, business rules, or a quality knowledge base.

Create a Data Job That Can Be Deployed as a Real-Time Service

Any data job in the Folders tree can be deployed as a real-time service. However, real-time service jobs typically capture input from or return output to the person who is using the service. To capture input in a data job, start the flow with an External Data Provider node. Add one or more input fields to this node that correspond to the values to be processed by the job.

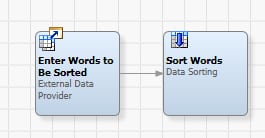

In the example job described in this section (service_Sort_Words), the External Data Provider node enables you to enter a series of words. The words are passed to the job. Finally, the job sorts the words in ascending alphabetical order and displays the sorted list. The following display shows the data flow for the example job:

Perform the following steps to create a data job that can be deployed as a real-time service:

- Create a new data job in the Folders tree. For more information, see Creating a Data Job in the Folders Tree.

- Give the job an appropriate name. The example job could be called service_Sort_Words.

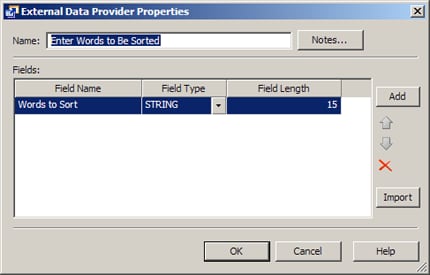

- Add an External Data Provider node to the flow and open the properties dialog for the node.

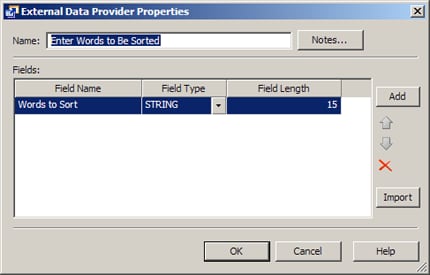

- (Optional) Rename the External Data Provider node to reflect the function of the node in the current job. The first node in the example job enables the user to enter words to be sorted, so the first node could be renamed to Enter Words to Be Sorted.

- Add one or more input fields to this node. The example job requires one input field, which could be called Words to Sort, as shown in the next display.

- Click OK to save your changes to the node.

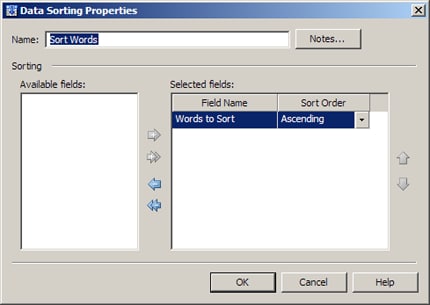

- Add the next node in the flow. The example job requires only one more node at this point: a Data Sorting node, which serves as the terminal output node.

- Open the properties window for the node.

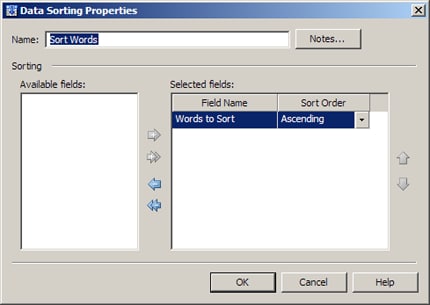

- (Optional) Rename the node to reflect the function of the node in the current job. The terminal node in the example job sorts a list of words, so the output node could be renamed to Sort Words.

- Configure the node. For example, in the Sort Words node, you would select one input field to be sorted, as shown in the next display:

- Click OK to save your changes to the node. At this point you have configured the example job.
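As a rough mental model of what the configured example job computes, the following Python sketch takes rows that carry a Words to Sort field and returns them in ascending order. It is plain Python, not DataFlux code, and it does not use any DataFlux API. The function name `sort_words` is hypothetical, and ordinary Python string comparison is assumed as a stand-in for the ordering that the Data Sorting node applies, which may differ (for example, in how it treats uppercase and lowercase letters).

```python
# Illustrative model only: plain Python, not DataFlux code.
FIELD = "Words to Sort"  # input field name used in the example job


def sort_words(rows):
    """Return a new list of rows ordered by FIELD, ascending.

    Assumption: Python's default string comparison stands in for the
    ordering used by the Data Sorting node, which may differ.
    """
    return sorted(rows, key=lambda row: row[FIELD])


if __name__ == "__main__":
    # Rows as they might arrive from the input node (made-up sample words).
    incoming = [{FIELD: "pear"}, {FIELD: "apple"}, {FIELD: "mango"}]
    for row in sort_words(incoming):
        print(row[FIELD])
```

Each dictionary plays the role of one input row, and the returned list plays the role of the data that the terminal node passes back to the caller.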

Note that when your job ends with more than one output node, you can specify one node as the default target node. Right-click the node and select Advanced Properties. Select the Set as default target node check box. A small decoration appears at the upper right of the node's icon in the job.

This designation can be necessary because only one target node in a job can pass data back to the service call. If the flow of a job does not end in a single node, then you must specify one node as the default target node. For example, suppose that your job has a flow that ends in a branch. In one branch a node writes an error log, and in the other branch a node passes data back to the service call. In this situation, you should specify the node that passes data back to the service call as the default target node.
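The rule described above can be sketched as a small decision function. This is an illustrative model in plain Python, not DataFlux code: the function `resolve_target_node` and the node names are hypothetical, and the sketch only restates the rule that exactly one node passes data back to the service call.

```python
# Illustrative model only: the function and node names are hypothetical
# and are not part of any DataFlux API.

def resolve_target_node(terminal_nodes, default_target=None):
    """Return the one node whose output goes back to the service call."""
    if not terminal_nodes:
        raise ValueError("the flow has no terminal node")
    if default_target is not None:
        # An explicit default target node always wins, if it is valid.
        if default_target not in terminal_nodes:
            raise ValueError(f"{default_target!r} is not a terminal node")
        return default_target
    if len(terminal_nodes) == 1:
        # A flow that ends in a single node needs no designation.
        return terminal_nodes[0]
    raise ValueError(
        "the flow ends in several nodes: set one as the default target node"
    )


if __name__ == "__main__":
    # Single terminal node, as in the example job.
    print(resolve_target_node(["Sort Words"]))
    # Branching flow: one node logs errors, the other returns data.
    print(resolve_target_node(["Write Error Log", "Return Results"],
                              default_target="Return Results"))
```

In the branching case, calling the function without `default_target` raises an error, which mirrors the situation in which a job with several end nodes has no designated default target node.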

You can debug the service job before you deploy it. Perform the following steps:

- Remove the connection from the External Data Provider node to its successor.

- Add a Job Specific Data node and define the same fields as in the External Data Provider node. Note that you can copy and paste the field name and field type into the Advanced Properties dialog.

- Add some test data to the node.

- Use the preview function to review the output of each node.
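The debugging steps above depend on the Job Specific Data node declaring the same fields as the External Data Provider node. The following Python sketch shows one way to think about that check. It is plain Python rather than DataFlux code, the function `field_mismatches` is hypothetical, and the type label `"string"` is a placeholder, not necessarily the type name that the product uses.

```python
# Illustrative model only: plain Python, not DataFlux code.
# Each field is a (name, type) pair; the type label is a placeholder.

def field_mismatches(provider_fields, test_fields):
    """Return the field declarations that appear on one side only."""
    return sorted(set(provider_fields) ^ set(test_fields))


if __name__ == "__main__":
    provider = [("Words to Sort", "string")]  # External Data Provider node
    test_node = [("Words To Sort", "string")]  # typo in the test node
    for name, type_label in field_mismatches(provider, test_node):
        print(f"unmatched field: {name} ({type_label})")
```

An empty result means that the two field lists agree. Copying and pasting the field name and field type, as noted in the steps, avoids the kind of spelling difference shown in the usage example.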

Deploy a Data Job as a Real-Time Service

Perform the following steps to deploy a data job to a DataFlux Data Management Server:

- Click the Data Management Servers riser.

- Select the server where the jobs will be deployed. Enter any required credentials.

- Navigate to the Real-Time Data Services folder and right-click it to access a pop-up menu. Click Import to display the Import From Repository wizard.

- Navigate to the data job that you just created and select it.

- Click Next to access the Import Location step of the wizard.

- Select the Real-Time Data Services folder.

- Click Import.

- Click Close to close the wizard. The job has been deployed to the server. The next task is to verify that the deployed job will execute properly as a real-time service.

- Right-click the deployed job, and then click Test in the pop-up menu.

- Enter appropriate input. For the example job, you would enter a list of words in the Words to Sort field.

- Click Test to test the inputs that you entered. The results of a test for the example job are shown in the following display:

The data job has now been deployed as a service and tested. It can be accessed and run with a Web client or another application.

Documentation Feedback: yourturn@sas.com

Note: Always include the Doc ID when providing documentation feedback.

Doc ID: dfU_T_DataJob_Service.html