Overview of Scoring Tasks

The purpose of a scoring task within SAS Model Manager is to run the score code of a model and produce scoring results that you can use for scoring accuracy and performance analysis. The scoring task uses data from a scoring task input table to generate the scoring task output table. Two types of score code can be imported for a model: a DATA step fragment and ready-to-run SAS code.
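As a rough conceptual sketch of the input-table-to-output-table flow described above, the following Python stands in for what a scoring task does. It is not SAS score code and does not use any SAS Model Manager API. The function names (`score_row`, `run_scoring_task`), the column names, and the coefficients are all hypothetical and chosen only for illustration.

```python
import math

def score_row(row):
    """Hypothetical score code: derive a probability and a label from one input row."""
    # The coefficients below are invented for illustration only.
    logit = -1.5 + 0.04 * row["age"] + 0.8 * row["prior_claims"]
    p_event = 1.0 / (1.0 + math.exp(-logit))
    return {"p_event": p_event, "i_event": 1 if p_event >= 0.5 else 0}

def run_scoring_task(input_table, key="id"):
    """Apply the score code to every input row and build the output table."""
    output_table = []
    for row in input_table:
        scored = score_row(row)
        # Carry the key column through so results can be joined back to the input.
        output_table.append({key: row[key], **scored})
    return output_table

# Hypothetical scoring task input table.
input_table = [
    {"id": 1, "age": 30, "prior_claims": 0},
    {"id": 2, "age": 55, "prior_claims": 2},
]
output_table = run_scoring_task(input_table)
```

In this sketch the output table holds one row per input row, with the key column plus the variables that the score code produces.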

If your environment has its own means of executing the score code, then your use of the SAS Model Manager scoring tasks is mostly limited to testing the score code. Otherwise, you can use the SAS Model Manager scoring tasks both to test your score code and execute it in a production environment. Scoring results for a model in a test environment are stored on the SAS Content Server. Scoring results for a model in a production environment are written to the location that the output table metadata specifies. In Windows, the scoring task output table in a SAS library must have Modify, Read & Execute, Read, and Write security permissions. For more information, see Scoring Task Output Tables.

CAUTION:

Executing a scoring task in production mode overwrites the scoring task output table, which might result in a loss of data.

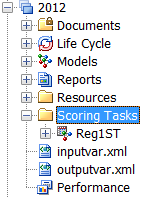

You create a new scoring task in the Scoring folder of your version. Here is an example of a Scoring folder under the version 2012.

These are the tasks that you perform as part of the Scoring Task workflow:

- Before creating a scoring task, you must create and register scoring task input and output tables. For more information, see Create Scoring Output Tables and Project Tables.

- To create a new scoring task for a model, you use the New Scoring Task window. When a new scoring task is successfully created, the new scoring task folder is selected under the Scoring folder. The scoring task tabbed view displays the various views of the scoring task information. For more information, see Create a Scoring Task and Scoring Task Tabbed Views.

- Before you execute the scoring task, it is recommended that you verify the scoring task output variable mappings on the Output Table view. For more information, see Map Scoring Task Output Variables.

- After the scoring task output variables are mapped to the model output variables, it is recommended that you verify the model input variables against the scoring task input table columns. A convenient way to validate the scoring task input table is to use the Quick Mapping Check tool. You can then execute the scoring task. For more information, see Execute a Scoring Task.

- To run a scoring task at a scheduled time, you can open the New Schedule window to specify the date, time, and frequency that you want the scoring task to run. For more information, see Schedule Scoring Tasks.

- After the successful execution of the scoring task, you can generate a number of graphical views that represent the contents of the output table. For more information, see Graph Scoring Task Results.
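The input-variable verification step in the workflow above can be pictured with a small sketch. This is not the Quick Mapping Check tool itself, whose internals are not described here. It is a hypothetical Python helper (`quick_mapping_check`, with made-up variable and column names) that assumes the check amounts to confirming that every model input variable has a matching column in the scoring task input table. Names are compared without regard to case, because SAS variable names are case-insensitive.

```python
def quick_mapping_check(model_inputs, table_columns):
    """Hypothetical check: match model input variables to input table columns.

    Returns a dict of matched variables (model name -> table column name)
    and a list of model input variables that have no matching column.
    """
    # Compare names case-insensitively, as SAS does for variable names.
    table_lookup = {name.lower(): name for name in table_columns}
    matched = {}
    missing = []
    for var in model_inputs:
        column = table_lookup.get(var.lower())
        if column is None:
            missing.append(var)
        else:
            matched[var] = column
    return matched, missing

# Hypothetical model input variables and input table columns.
model_inputs = ["Age", "Income", "Region"]
table_columns = ["AGE", "income", "cust_id"]

matched, missing = quick_mapping_check(model_inputs, table_columns)
if missing:
    print("Unmapped model input variables:", ", ".join(missing))
```

A non-empty `missing` list in this sketch corresponds to the situation that you would want to resolve before executing the scoring task.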