Global versus Local Optima

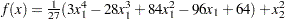

All the IML optimization algorithms converge toward local rather than global optima. The smallest local minimum of an objective function is called the global minimum, and the largest local maximum of an objective function is called the global maximum. Because the algorithms stop at whichever local optimum they reach, the subroutines can occasionally fail to find the global optimum. Suppose you have the function $f(x) = \frac{1}{27}\left(3x_1^4 - 28x_1^3 + 84x_1^2 - 96x_1 + 64\right) + x_2^2$, which has a local minimum at $f(1,0) = 1$ and a global minimum at the point $f(4,0) = 0$.
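The two minima can be confirmed by hand. The worked steps below restate the objective coded in the F_GLOBAL module and factor its partial derivative with respect to $x_1$; they use only elementary calculus on that formula.

```latex
\begin{align*}
f(x) &= \tfrac{1}{27}\left(3x_1^4 - 28x_1^3 + 84x_1^2 - 96x_1 + 64\right) + x_2^2 \\
\frac{\partial f}{\partial x_1}
  &= \tfrac{1}{27}\left(12x_1^3 - 84x_1^2 + 168x_1 - 96\right)
   = \tfrac{4}{9}\,(x_1 - 1)(x_1 - 2)(x_1 - 4) \\
\frac{\partial f}{\partial x_2} &= 2x_2 \\
f(1,0) &= 1, \qquad f(2,0) = \tfrac{32}{27}, \qquad f(4,0) = 0
\end{align*}
```

The gradient vanishes only at $(1,0)$, $(2,0)$, and $(4,0)$. Comparing the function values shows that $(4,0)$ gives the smallest value, so it is the global minimum, while $(1,0)$ is only a local minimum.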

The following statements use two calls of the NLPTR subroutine to minimize the preceding function. The first call specifies the initial point $xa = (0.5, 1.5)$, and the second call specifies the initial point $xb = (3, -1)$. The first call finds the local optimum $x^* = (1, 0)$, and the second call finds the global optimum $x^* = (4, 0)$.

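    proc iml;
    /* objective function: quartic in x[1] plus x[2] squared */
    start F_GLOBAL(x);
       f = (3*x[1]**4 - 28*x[1]**3 + 84*x[1]**2 - 96*x[1] + 64)/27 + x[2]**2;
       return(f);
    finish F_GLOBAL;

    xa = {.5 1.5};    /* first starting point */
    xb = {3 -1};      /* second starting point */
    optn = {0 2};     /* optn[1]=0: minimize; optn[2]=2: amount of printed output */
    call nlptr(rca, xra, "F_GLOBAL", xa, optn);
    call nlptr(rcb, xrb, "F_GLOBAL", xb, optn);
    print xra xrb;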

One way to find out whether the objective function has more than one local optimum is to run various optimizations with a pattern of different starting points.
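A minimal sketch of such a multistart run, reusing the F_GLOBAL module and the NLPTR call from the statements above. The grid `starts` and the `results` matrix are illustrative choices that do not appear in the original example, and the starting points are arbitrary.

```sas
proc iml;
start F_GLOBAL(x);
   f = (3*x[1]**4 - 28*x[1]**3 + 84*x[1]**2 - 96*x[1] + 64)/27 + x[2]**2;
   return(f);
finish F_GLOBAL;

/* hypothetical pattern of starting points, one per row */
starts = {0 0, 0.5 1.5, 2.5 0, 3 -1, 5 2};
optn = {0 0};                      /* minimize; no printed output */

/* columns: x1, x2, objective value, NLPTR return code */
results = j(nrow(starts), 4, .);
do i = 1 to nrow(starts);
   x0 = starts[i, ];
   call nlptr(rc, xr, "F_GLOBAL", x0, optn);
   results[i, 1] = xr[1];
   results[i, 2] = xr[2];
   results[i, 3] = F_GLOBAL(xr);
   results[i, 4] = rc;             /* positive values indicate successful termination */
end;
print results[colname={"x1" "x2" "f" "rc"}];
quit;
```

If the rows of `results` cluster around more than one point with different objective values, the function has more than one local optimum, and the row with the smallest `f` is the best candidate found. A finer or wider grid raises the chance of reaching the global optimum but does not guarantee it.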

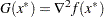

For a more mathematical definition of optimality, refer to the Kuhn-Tucker theorem in standard optimization literature. Using rather nonmathematical language, a local minimizer $x^*$ satisfies the following conditions:

- There exists a small, feasible neighborhood of $x^*$ that does not contain any point $x$ with a smaller function value $f(x) < f(x^*)$.
- The vector of first derivatives (gradient) $g(x^*) = \nabla f(x^*)$ of the objective function $f$ (projected toward the feasible region) at the point $x^*$ is zero.
- The matrix of second derivatives $G(x^*) = \nabla^2 f(x^*)$ (Hessian matrix) of the objective function $f$ (projected toward the feasible region) at the point $x^*$ is positive definite.

A local maximizer has the largest value in a feasible neighborhood and a negative definite Hessian.
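As an illustration, the gradient and Hessian conditions can be checked for the unconstrained example function coded in F_GLOBAL. The symbols $g$ and $G$ for the gradient and Hessian are notation assumed for this sketch.

```latex
\begin{align*}
g(x) &= \begin{pmatrix} \tfrac{4}{9}(x_1 - 1)(x_1 - 2)(x_1 - 4) \\ 2x_2 \end{pmatrix},
&
G(x) &= \begin{pmatrix} \tfrac{4}{9}\left(3x_1^2 - 14x_1 + 14\right) & 0 \\ 0 & 2 \end{pmatrix} \\[4pt]
G(1,0) &= \begin{pmatrix} \tfrac{4}{3} & 0 \\ 0 & 2 \end{pmatrix},
&
G(4,0) &= \begin{pmatrix} \tfrac{8}{3} & 0 \\ 0 & 2 \end{pmatrix},
\qquad
G(2,0) = \begin{pmatrix} -\tfrac{8}{9} & 0 \\ 0 & 2 \end{pmatrix}
\end{align*}
```

The gradient is zero at all three points, but the Hessian is positive definite only at $(1,0)$ and $(4,0)$, so both are local minimizers. At $(2,0)$ the Hessian has one negative and one positive eigenvalue, so that point is a saddle point rather than a minimizer or maximizer.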

The iterative optimization algorithm terminates at the point $x^t$, which should be in a small neighborhood (in terms of a user-specified termination criterion) of a local optimizer $x^*$. If the point $x^t$ is located on one or more active boundary or general linear constraints, the local optimization conditions are valid only for the feasible region. That is,

- the projected gradient, $Z^T g(x^t)$, must be sufficiently small
- the projected Hessian, $Z^T G(x^t) Z$, must be positive definite for minimization problems or negative definite for maximization problems

If there are $n$ active constraints at the point $x^t$ (where $n$ is the number of parameters), the nullspace $Z$ has zero columns and the projected Hessian has zero rows and columns. A matrix with zero rows and columns is considered positive as well as negative definite.
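A small worked case may make the projected conditions concrete. It reuses the example function coded in F_GLOBAL and adds a hypothetical bound $x_2 \ge 1$ that is not part of the original example. Here $g$ and $G$ denote the gradient and Hessian, and $Z$ is a matrix whose columns span the nullspace of the active constraint normals (notation assumed for this sketch).

```latex
\begin{align*}
\text{Active constraint at } x^t = (4,1):\quad & x_2 = 1, \qquad
Z = \begin{pmatrix} 1 \\ 0 \end{pmatrix} \\
g(x^t) &= \begin{pmatrix} 0 \\ 2 \end{pmatrix}, \qquad
G(x^t) = \begin{pmatrix} \tfrac{8}{3} & 0 \\ 0 & 2 \end{pmatrix} \\
Z^T g(x^t) &= 0, \qquad
Z^T G(x^t)\, Z = \tfrac{8}{3} > 0
\end{align*}
```

The full gradient is not zero at $(4,1)$, but its projection onto the feasible directions is, and the projected Hessian is positive definite, so the point satisfies the conditions for a constrained local minimizer. If a second hypothetical bound $x_1 \ge 4$ were also active at $(4,1)$, there would be two active constraints for two parameters, $Z$ would have zero columns, and both projected conditions would hold trivially.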