Forecasting Process Details

Equations for the Smoothing Models

Simple Exponential Smoothing

The model equation for simple exponential smoothing is

![\[ Y_{t} = {\mu }_{t} + {\epsilon }_{t} \]](images/etsug_tffordet0068.png)

The smoothing equation is

![\[ L_{t} = {\alpha }Y_{t} + (1-{\alpha })L_{t-1} \]](images/etsug_tffordet0069.png)

The error-correction form of the smoothing equation is

![\[ L_{t} = L_{t-1} + {\alpha }e_{t} \]](images/etsug_tffordet0070.png)

(Note: For missing values, $e_{t} = 0$.)

The k-step prediction equation is

![\[ \hat{Y}_{t}(k) = L_{t} \]](images/etsug_tffordet0072.png)
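The recursion above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, the level is started at the first non-missing observation (the text does not specify an initialization), and a missing observation is treated as a zero one-step error.

```python
import math

def simple_exp_smooth(y, alpha, level0=None):
    """Simple exponential smoothing in error-correction form.

    Returns (levels, errors), where levels[t] is L_t after processing y[t].
    """
    # Assumption: start the level at the first non-missing value unless given.
    if level0 is None:
        level0 = next(v for v in y if v is not None and not math.isnan(v))
    level = level0
    levels, errors = [], []
    for obs in y:
        missing = obs is None or math.isnan(obs)
        # One-step error e_t = Y_t - L_{t-1}; assumed to be 0 when Y_t is missing.
        e = 0.0 if missing else obs - level
        level = level + alpha * e  # L_t = L_{t-1} + alpha * e_t
        levels.append(level)
        errors.append(e)
    return levels, errors

def forecast(levels, k):
    """k-step prediction: the last level, for every horizon k >= 1."""
    return levels[-1]
```

Because the prediction equation does not depend on k, the forecast is a flat line at the final level; only the prediction error variance grows with the horizon.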

The ARIMA model equivalency to simple exponential smoothing is the ARIMA(0,1,1) model,

\[ (1-{B})Y_{t} = (1-{\theta }{B}){\epsilon }_{t}, \qquad {\theta } = 1-{\alpha } \]

The moving-average form of the equation is

![\[ Y_{t} = {\epsilon }_{t} + \sum _{j=1}^{{\infty }}{{\alpha }{\epsilon }_{t-j}} \]](images/etsug_tffordet0074.png)

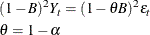

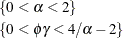

For simple exponential smoothing, the additive-invertible region is

![\[ \{ 0 < {\alpha } < 2\} \]](images/etsug_tffordet0075.png)

The variance of the prediction errors is estimated as

![\[ {var}(e_{t}(k)) = {var}({\epsilon }_{t}) \left[{1 + \sum _{j=1}^{k-1}{{\alpha }^{2}}}\right] = {var}({\epsilon }_{t})( 1 + (k-1){\alpha }^{2}) \]](images/etsug_tffordet0076.png)
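A minimal sketch of this variance formula, assuming an estimate of var(ε) is already available (the function name and arguments are illustrative):

```python
def simple_forecast_variance(alpha, sigma2, k):
    """Variance of the k-step prediction error for simple exponential smoothing.

    sigma2 is an estimate of var(epsilon_t); k >= 1 is the forecast horizon.
    """
    # Every psi weight in the moving-average form equals alpha,
    # so the sum over j = 1 .. k-1 collapses to (k - 1) * alpha**2.
    psi_sq_sum = sum(alpha ** 2 for _ in range(1, k))
    return sigma2 * (1.0 + psi_sq_sum)
```

For k = 1 the sum is empty and the result is simply `sigma2`; each additional step adds `sigma2 * alpha**2`.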

Double (Brown) Exponential Smoothing

The model equation for double exponential smoothing is

![\[ Y_{t} = {\mu }_{t} + {\beta }_{t}t + {\epsilon }_{t} \]](images/etsug_tffordet0077.png)

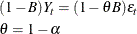

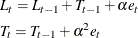

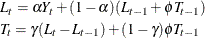

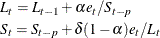

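The smoothing equations are

\begin{gather*} L_{t} = {\alpha }Y_{t} + (1-{\alpha })L_{t-1} \\ T_{t} = {\alpha }(L_{t} - L_{t-1}) + (1-{\alpha })T_{t-1} \end{gather*}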

This method can be equivalently described in terms of two successive applications of simple exponential smoothing:

![\begin{gather*} \mi{S} _{t}^{{[1]} } = {\alpha }Y_{t} + (1-{\alpha }) \mi{S} _{t-1}^{{[1]} } \\ \mi{S} _{t}^{{[2]} } = {\alpha } \mi{S} _{t}^{{[1]} } + (1-{\alpha }) \mi{S} _{t-1}^{{[2]} } \end{gather*}](images/etsug_tffordet0079.png)

where ![${ \mi{S} _{t}^{{[1]} } }$](images/etsug_tffordet0080.png) are the smoothed values of $Y_{t}$, and ![${ \mi{S} _{t}^{{[2]} } }$](images/etsug_tffordet0082.png) are the smoothed values of ![${ \mi{S} _{t}^{{[1]} } }$](images/etsug_tffordet0080.png). The prediction equation then takes the form:

![\[ \hat{Y}_{t}(k) = (2+{\alpha }k/(1-{\alpha })) \mi{S} _{t}^{{[1]} } - (1+{\alpha }k/(1-{\alpha })) \mi{S} _{t}^{{[2]} } \]](images/etsug_tffordet0083.png)
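The two-pass description lends itself to a short sketch. The code below is illustrative only: the function name is hypothetical, both smoothed series are started at the first observation (an assumption, since no initialization is given here), and it requires 0 < alpha < 1 so that the division by (1 − alpha) is defined.

```python
def brown_forecast(y, alpha, k):
    """k-step forecast from Brown's double exponential smoothing.

    Applies simple exponential smoothing twice and combines the results
    with the prediction equation from the text.
    """
    # Assumption: initialize both smoothed series at the first observation.
    s1 = y[0]
    s2 = y[0]
    for obs in y:
        s1 = alpha * obs + (1 - alpha) * s1  # S[1]: smoothed values of Y
        s2 = alpha * s1 + (1 - alpha) * s2   # S[2]: smoothed values of S[1]
    ratio = alpha * k / (1 - alpha)
    return (2 + ratio) * s1 - (1 + ratio) * s2
```

On a constant series the two smoothed values coincide, so the forecast equals that constant at every horizon.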

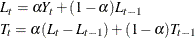

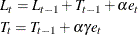

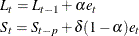

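The error-correction forms of the smoothing equations are

\begin{gather*} L_{t} = L_{t-1} + T_{t-1} + {\alpha }e_{t} \\ T_{t} = T_{t-1} + {\alpha }^{2}e_{t} \end{gather*}

(Note: For missing values, $e_{t} = 0$.)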

The k-step prediction equation is

![\[ \hat{Y}_{t}(k) = L_{t} + ( (k-1) + 1/{\alpha } )T_{t} \]](images/etsug_tffordet0085.png)

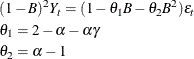

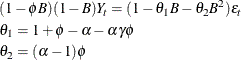

The ARIMA model equivalency to double exponential smoothing is the ARIMA(0,2,2) model,

\[ (1-{B})^{2}Y_{t} = (1-{\theta }{B})^{2}{\epsilon }_{t}, \qquad {\theta } = 1-{\alpha } \]

The moving-average form of the equation is

![\[ Y_{t} = {\epsilon }_{t} + \sum _{j=1}^{{\infty }}{(2{\alpha } + (j-1){\alpha }^{2}) {\epsilon }_{t-j}} \]](images/etsug_tffordet0087.png)

For double exponential smoothing, the additive-invertible region is

![\[ \{ 0 < {\alpha } < 2\} \]](images/etsug_tffordet0075.png)

The variance of the prediction errors is estimated as

![\[ {var}(e_{t}(k)) = {var}({\epsilon }_{t}) \left[{1 + \sum _{j=1}^{k-1}{(2{\alpha } + (j-1){\alpha }^{2})^{2}}}\right] \]](images/etsug_tffordet0088.png)
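As a quick numerical illustration of this formula, take the arbitrarily chosen values α = 0.5 and k = 3 (these are not from the source). The moving-average weights are 2α + (j−1)α² for j = 1, 2, and the variance multiplier follows directly:

```latex
\begin{gather*}
{\psi }_{1} = 2(0.5) + 0 \cdot (0.5)^{2} = 1, \qquad
{\psi }_{2} = 2(0.5) + 1 \cdot (0.5)^{2} = 1.25 \\
{var}(e_{t}(3)) = {var}({\epsilon }_{t}) \left[ 1 + 1^{2} + 1.25^{2} \right]
               = 3.5625 \, {var}({\epsilon }_{t})
\end{gather*}
```

Because the weights grow linearly in j, the prediction error variance for double exponential smoothing increases much faster with the horizon than it does for simple exponential smoothing.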

Linear (Holt) Exponential Smoothing

The model equation for linear exponential smoothing is

![\[ Y_{t} = {\mu }_{t} + {\beta }_{t}t + {\epsilon }_{t} \]](images/etsug_tffordet0077.png)

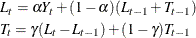

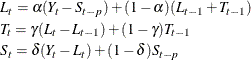

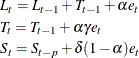

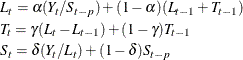

The smoothing equations are

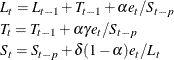

The error-correction form of the smoothing equations is

(Note: For missing values,  .)

.)

The k-step prediction equation is

![\[ \hat{Y}_{t}(k) = L_{t} + kT_{t} \]](images/etsug_tffordet0091.png)
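The following sketch shows how a level and trend could be carried forward to produce this forecast. It assumes the error-correction recursion is the damped-trend form given later in this section with φ = 1, and it starts the level at the first observation and the trend at the first difference; both the function name and the initialization are assumptions made for illustration.

```python
def holt_forecast(y, alpha, gamma, k):
    """k-step forecast from linear (Holt) exponential smoothing.

    Requires at least two observations.
    """
    # Assumption: initial level = first value, initial trend = first difference.
    level = y[0]
    trend = y[1] - y[0]
    for obs in y[1:]:
        e = obs - (level + trend)            # one-step prediction error e_t
        level = level + trend + alpha * e    # L_t = L_{t-1} + T_{t-1} + alpha e_t
        trend = trend + alpha * gamma * e    # T_t = T_{t-1} + alpha gamma e_t
    return level + k * trend                 # prediction equation L_t + k T_t
```

On an exactly linear series every one-step error is zero, so the forecast simply extends the line.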

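The ARIMA model equivalency to linear exponential smoothing is the ARIMA(0,2,2) model,

\begin{gather*} (1-{B})^{2}Y_{t} = (1 - {\theta }_{1}{B} - {\theta }_{2}{B}^{2}){\epsilon }_{t} \\ {\theta }_{1} = 2 - {\alpha } - {\alpha }{\gamma } \\ {\theta }_{2} = {\alpha } - 1 \end{gather*}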

The moving-average form of the equation is

![\[ Y_{t} = {\epsilon }_{t} + \sum _{j=1}^{{\infty }}{({\alpha } + j{\alpha }{\gamma }) {\epsilon }_{t-j}} \]](images/etsug_tffordet0093.png)

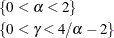

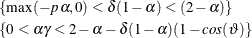

For linear exponential smoothing, the additive-invertible region is

\[ \{ 0 < {\alpha } < 2, \; 0 < {\gamma } < 4/{\alpha } - 2 \} \]

The variance of the prediction errors is estimated as

![\[ {var}(e_{t}(k)) = {var}({\epsilon }_{t}) \left[{1 + \sum _{j=1}^{k-1}{({\alpha } + j{\alpha }{\gamma })^{2}}}\right] \]](images/etsug_tffordet0095.png)

Damped-Trend Linear Exponential Smoothing

The model equation for damped-trend linear exponential smoothing is

![\[ Y_{t} = {\mu }_{t} + {\beta }_{t}t + {\epsilon }_{t} \]](images/etsug_tffordet0077.png)

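The smoothing equations are

\begin{gather*} L_{t} = {\alpha }Y_{t} + (1-{\alpha })(L_{t-1} + {\phi }T_{t-1}) \\ T_{t} = {\gamma }(L_{t} - L_{t-1}) + (1-{\gamma }){\phi }T_{t-1} \end{gather*}

The error-correction form of the smoothing equations is

![\begin{gather*} L_{t} = L_{t-1} + {\phi }T_{t-1} + {\alpha }e_{t} \\ T_{t} = {\phi }T_{t-1} + {\alpha }{\gamma }e_{t} \end{gather*}](images/etsug_tffordet0097.png)

(Note: For missing values, $e_{t} = 0$.)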

The k-step prediction equation is

![\[ \hat{Y}_{t}(k) = L_{t} + \sum _{i=1}^{k}{{\phi }^{i}T_{t} } \]](images/etsug_tffordet0098.png)
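A small sketch of one error-correction update and the damped forecast is shown below. The function names are hypothetical, and the one-step error is taken to be the observation minus the k = 1 prediction from the previous state.

```python
def damped_step(level, trend, obs, alpha, gamma, phi):
    """One error-correction update; returns (new_level, new_trend, error)."""
    e = obs - (level + phi * trend)              # e_t = Y_t - (L_{t-1} + phi T_{t-1})
    new_level = level + phi * trend + alpha * e  # L_t
    new_trend = phi * trend + alpha * gamma * e  # T_t
    return new_level, new_trend, e

def damped_forecast(level, trend, phi, k):
    """k-step prediction: L_t + (phi + phi^2 + ... + phi^k) * T_t."""
    return level + sum(phi ** i for i in range(1, k + 1)) * trend
```

When |phi| < 1 the geometric sum converges, so the forecast levels off at `level + phi / (1 - phi) * trend` instead of growing without bound as it does in the undamped linear case.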

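The ARIMA model equivalency to damped-trend linear exponential smoothing is the ARIMA(1,1,2) model,

\begin{gather*} (1-{\phi }{B})(1-{B})Y_{t} = (1 - {\theta }_{1}{B} - {\theta }_{2}{B}^{2}){\epsilon }_{t} \\ {\theta }_{1} = 1 + {\phi } - {\alpha } - {\alpha }{\gamma }{\phi } \\ {\theta }_{2} = ({\alpha } - 1){\phi } \end{gather*}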

The moving-average form of the equation (assuming $|{\phi }| < 1$) is

![\[ Y_{t} = {\epsilon }_{t} + \sum _{j=1}^{{\infty }}{({\alpha } + {\alpha }{\gamma } {\phi }({\phi }^{j} - 1)/({\phi } - 1)) {\epsilon }_{t-j}} \]](images/etsug_tffordet0101.png)

For damped-trend linear exponential smoothing, the additive-invertible region is

The variance of the prediction errors is estimated as

![\[ {var}(e_{t}(k)) = {var}({\epsilon }_{t}) \left[ 1 + \sum _{j=1}^{k-1}{({\alpha } + {\alpha }{\gamma } {\phi }({\phi }^{j} - 1)/({\phi } - 1) )^{2}}\right] \]](images/etsug_tffordet0103.png)
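The variance formula can be evaluated by computing the moving-average weights one at a time. The sketch below uses hypothetical function names; it also handles φ = 1 as the limiting case, where the weight reduces to α + jαγ, the linear (Holt) form.

```python
def damped_psi(j, alpha, gamma, phi):
    """Moving-average weight psi_j for damped-trend linear exponential smoothing."""
    if phi == 1.0:
        # Limit as phi -> 1: phi (phi^j - 1) / (phi - 1) -> j.
        return alpha + j * alpha * gamma
    return alpha + alpha * gamma * phi * (phi ** j - 1) / (phi - 1)

def forecast_variance(psi, sigma2, k):
    """var(e_t(k)) = sigma2 * (1 + sum_{j=1}^{k-1} psi(j)^2).

    psi is any callable that maps j to the weight psi_j.
    """
    return sigma2 * (1.0 + sum(psi(j) ** 2 for j in range(1, k)))
```

For example, `forecast_variance(lambda j: damped_psi(j, 0.5, 0.2, 0.5), 1.0, 3)` sums the squared weights for j = 1 and j = 2. Passing the weight function as an argument lets the same helper serve any of the additive smoothing models in this section.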

Seasonal Exponential Smoothing

The model equation for seasonal exponential smoothing is

![\[ Y_{t} = {\mu }_{t} + s_{p}(t) + {\epsilon }_{t} \]](images/etsug_tffordet0104.png)

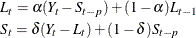

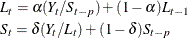

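The smoothing equations are

\begin{gather*} L_{t} = {\alpha }(Y_{t} - S_{t-p}) + (1-{\alpha })L_{t-1} \\ S_{t} = {\delta }(Y_{t} - L_{t}) + (1-{\delta })S_{t-p} \end{gather*}

The error-correction form of the smoothing equations is

\begin{gather*} L_{t} = L_{t-1} + {\alpha }e_{t} \\ S_{t} = S_{t-p} + {\delta }(1-{\alpha })e_{t} \end{gather*}

(Note: For missing values, $e_{t} = 0$.)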

The k-step prediction equation is

![\[ \hat{Y}_{t}(k) = L_{t} + S_{t-p+k} \]](images/etsug_tffordet0107.png)
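The index t−p+k in the prediction equation picks the most recent seasonal factor for the season being forecast. The sketch below illustrates this with a list of p seasonal factors that is overwritten in place. Several parts are assumptions made for illustration: the function name, the initialization from the first p observations, the error-correction updates L_t = L_{t-1} + αe_t and S_t = S_{t-p} + δ(1−α)e_t, and the wrap-around used for horizons longer than one season. Missing values are not handled.

```python
def seasonal_forecast(y, p, alpha, delta, k):
    """k-step forecast from additive seasonal exponential smoothing."""
    # Assumption: the first p observations set the initial state.
    level = sum(y[:p]) / p
    seasonal = [v - level for v in y[:p]]   # one factor per season position
    for t in range(p, len(y)):
        pos = t % p                         # seasonal[pos] currently holds S_{t-p}
        e = y[t] - (level + seasonal[pos])  # e_t = Y_t - L_{t-1} - S_{t-p}
        level += alpha * e
        seasonal[pos] += delta * (1 - alpha) * e
    # S_{t-p+k}: latest factor for the season of period t + k (wraps for k > p).
    pos = (len(y) - 1 + k) % p
    return level + seasonal[pos]
```

With a perfectly periodic input the errors are all zero and the forecast reproduces the seasonal pattern.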

The ARIMA model equivalency to seasonal exponential smoothing is the ARIMA(0,1,p+1)(0,1,0) model,

model,

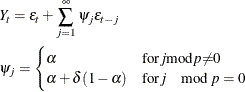

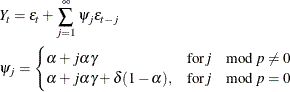

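The moving-average form of the equation is

\begin{gather*} Y_{t} = {\epsilon }_{t} + \sum _{j=1}^{{\infty }}{{\psi }_{j}{\epsilon }_{t-j}} \\ {\psi }_{j} = \begin{cases} {\alpha } & j \bmod p \ne 0 \\ {\alpha } + {\delta }(1-{\alpha }) & j \bmod p = 0 \end{cases}\end{gather*}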

For seasonal exponential smoothing, the additive-invertible region is

![\[ \{ \mr{max} (-p{\alpha },0) < {\delta }(1-{\alpha }) < (2-{\alpha }) \} \]](images/etsug_tffordet0111.png)

The variance of the prediction errors is estimated as

![\[ {var}(e_{t}(k)) = {var}({\epsilon }_{t}) \left[{1 + \sum _{j=1}^{k-1}{{\psi }_{j}^{2}}}\right] \]](images/etsug_tffordet0112.png)

Multiplicative Seasonal Smoothing

In order to use the multiplicative version of seasonal smoothing, the time series and all predictions must be strictly positive.

The model equation for the multiplicative version of seasonal smoothing is

![\[ Y_{t} = {\mu }_{t} s_{p}(t) + {\epsilon }_{t} \]](images/etsug_tffordet0113.png)

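The smoothing equations are

\begin{gather*} L_{t} = {\alpha }(Y_{t}/S_{t-p}) + (1-{\alpha })L_{t-1} \\ S_{t} = {\delta }(Y_{t}/L_{t}) + (1-{\delta })S_{t-p} \end{gather*}

The error-correction form of the smoothing equations is

\begin{gather*} L_{t} = L_{t-1} + {\alpha }e_{t}/S_{t-p} \\ S_{t} = S_{t-p} + {\delta }(1-{\alpha })e_{t}/L_{t} \end{gather*}

(Note: For missing values, $e_{t} = 0$.)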

The k-step prediction equation is

![\[ \hat{Y}_{t}(k) = L_{t} S_{t-p+k} \]](images/etsug_tffordet0116.png)

The multiplicative version of seasonal smoothing does not have an ARIMA equivalent; however, when the seasonal variation is small, the ARIMA additive-invertible region of the additive version of seasonal exponential smoothing described in the preceding section can approximate the stability region of the multiplicative version.

The variance of the prediction errors is estimated as

![\[ {var}(e_{t}(k)) = {var}({\epsilon }_{t}) \left[{\sum _{i=0}^{{\infty }}{\sum _{j=0}^{p-1}{({\psi }_{j+ip}S_{t+k}/S_{t+k-j})^{2} }}}\right] \]](images/etsug_tffordet0117.png)

where ${\psi }_{j}$ are as described for the additive version of seasonal exponential smoothing, and ${\psi }_{j} = 0$ for $j \ge k$.

Winters Method—Additive Version

The model equation for the additive version of Winters method is

![\[ Y_{t} = {\mu }_{t} + {\beta }_{t}t + s_{p}(t) + {\epsilon }_{t} \]](images/etsug_tffordet0040.png)

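The smoothing equations are

\begin{gather*} L_{t} = {\alpha }(Y_{t} - S_{t-p}) + (1-{\alpha })(L_{t-1} + T_{t-1}) \\ T_{t} = {\gamma }(L_{t} - L_{t-1}) + (1-{\gamma })T_{t-1} \\ S_{t} = {\delta }(Y_{t} - L_{t}) + (1-{\delta })S_{t-p} \end{gather*}

The error-correction form of the smoothing equations is

\begin{gather*} L_{t} = L_{t-1} + T_{t-1} + {\alpha }e_{t} \\ T_{t} = T_{t-1} + {\alpha }{\gamma }e_{t} \\ S_{t} = S_{t-p} + {\delta }(1-{\alpha })e_{t} \end{gather*}

(Note: For missing values, $e_{t} = 0$.)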

The k-step prediction equation is

![\[ \hat{Y}_{t}(k) = L_{t} + kT_{t} + S_{t-p+k} \]](images/etsug_tffordet0123.png)

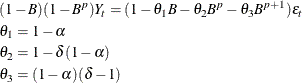

The ARIMA model equivalency to the additive version of Winters method is the ARIMA(0,1,p+1)(0,1,0)$_{p}$ model,

![\begin{gather*} (1-{B})(1-{B}^{p})Y_{t} = \left[{1 - { \sum _{i=1}^{p+1}{{\theta }_{i}{B}^{i}}}}\right] {\epsilon }_{t} \\ {\theta }_{j} = \begin{cases} 1 - \alpha - {\alpha }{\gamma } & j = 1 \\ -{\alpha }{\gamma } & 2 \le j \le p-1 \\ 1 - {\alpha }{\gamma } - {\delta }(1-{\alpha }) & j = p \\ (1 - {\alpha })({\delta } - 1) & j = p + 1 \end{cases}\end{gather*}](images/etsug_tffordet0124.png)

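The moving-average form of the equation is

\begin{gather*} Y_{t} = {\epsilon }_{t} + \sum _{j=1}^{{\infty }}{{\psi }_{j}{\epsilon }_{t-j}} \\ {\psi }_{j} = \begin{cases} {\alpha } + j{\alpha }{\gamma } & j \bmod p \ne 0 \\ {\alpha } + j{\alpha }{\gamma } + {\delta }(1-{\alpha }) & j \bmod p = 0 \end{cases}\end{gather*}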

For the additive version of Winters method, the additive-invertible region is given in Archibald (1990); its bounds depend on the smallest nonnegative solution to the equations listed there.

The variance of the prediction errors is estimated as

![\[ {var}(e_{t}(k)) = {var}({\epsilon }_{t}) \left[{1 + \sum _{j=1}^{k-1}{{\psi }_{j}^{2}}}\right] \]](images/etsug_tffordet0112.png)
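The ψ weights needed for this variance can be obtained numerically from the ARIMA form by expanding the ratio of the moving-average polynomial to (1−B)(1−B^p). The sketch below is one way to do that; the function names are hypothetical and it assumes p ≥ 2.

```python
def winters_theta(alpha, gamma, delta, p):
    """Return [theta_1, ..., theta_{p+1}] for the additive Winters ARIMA form."""
    theta = []
    for j in range(1, p + 2):
        if j == p + 1:
            theta.append((1 - alpha) * (delta - 1))
        elif j == p:
            theta.append(1 - alpha * gamma - delta * (1 - alpha))
        elif j == 1:
            theta.append(1 - alpha - alpha * gamma)
        else:
            theta.append(-alpha * gamma)
    return theta

def psi_weights(theta, p, n):
    """First n weights psi_0 .. psi_{n-1} of theta(B) / ((1 - B)(1 - B^p))."""
    # (1 - B)(1 - B^p) = 1 - B - B^p + B^(p+1), which gives the recursion
    # psi_j = psi_{j-1} + psi_{j-p} - psi_{j-p-1} - theta_j.
    psi = [1.0]
    for j in range(1, n):
        val = -theta[j - 1] if j <= len(theta) else 0.0
        val += psi[j - 1]
        if j >= p:
            val += psi[j - p]
        if j >= p + 1:
            val -= psi[j - p - 1]
        psi.append(val)
    return psi

def winters_forecast_variance(alpha, gamma, delta, p, sigma2, k):
    """var(e_t(k)) = sigma2 * (1 + sum_{j=1}^{k-1} psi_j^2)."""
    psi = psi_weights(winters_theta(alpha, gamma, delta, p), p, k)
    return sigma2 * (1.0 + sum(w ** 2 for w in psi[1:]))
```

Expanding the polynomials this way avoids hand-deriving a closed form for ψ_j; the same `psi_weights` helper also works for the seasonal exponential smoothing model by setting `gamma` to zero.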

Winters Method—Multiplicative Version

In order to use the multiplicative version of Winters method, the time series and all predictions must be strictly positive.

The model equation for the multiplicative version of Winters method is

![\[ Y_{t} = ({\mu }_{t} + {\beta }_{t}t) s_{p}(t) + {\epsilon }_{t} \]](images/etsug_tffordet0128.png)

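The smoothing equations are

\begin{gather*} L_{t} = {\alpha }(Y_{t}/S_{t-p}) + (1-{\alpha })(L_{t-1} + T_{t-1}) \\ T_{t} = {\gamma }(L_{t} - L_{t-1}) + (1-{\gamma })T_{t-1} \\ S_{t} = {\delta }(Y_{t}/L_{t}) + (1-{\delta })S_{t-p} \end{gather*}

The error-correction form of the smoothing equations is

\begin{gather*} L_{t} = L_{t-1} + T_{t-1} + {\alpha }e_{t}/S_{t-p} \\ T_{t} = T_{t-1} + {\alpha }{\gamma }e_{t}/S_{t-p} \\ S_{t} = S_{t-p} + {\delta }(1-{\alpha })e_{t}/L_{t} \end{gather*}

(Note: For missing values, $e_{t} = 0$.)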

The k-step prediction equation is

![\[ \hat{Y}_{t}(k) = (L_{t} + kT_{t})S_{t-p+k} \]](images/etsug_tffordet0131.png)

The multiplicative version of Winters method does not have an ARIMA equivalent; however, when the seasonal variation is small, the ARIMA additive-invertible region of the additive version of Winters method described in the preceding section can approximate the stability region of the multiplicative version.

The variance of the prediction errors is estimated as

![\[ {var}(e_{t}(k)) = {var}({\epsilon }_{t}) \left[{\sum _{i=0}^{{\infty }}{\sum _{j=0}^{p-1}{({\psi }_{j+ip}S_{t+k}/S_{t+k-j})^{2} }}}\right] \]](images/etsug_tffordet0117.png)

where ${\psi }_{j}$ are as described for the additive version of Winters method, and ${\psi }_{j} = 0$ for $j \ge k$.