Other Applications with Data Quality Functions

SAS Data Loader for Hadoop

SAS Data Loader for Hadoop addresses data quality concerns with the Cleanse Data, Cluster-Survive Data, and Match-Merge Data directives. Directives are wizards that help business users and data scientists perform tasks with their data without using specialized coding skills.

SAS Data Quality Accelerator for Hadoop and SAS Code Accelerator for Hadoop are bundled with, and sold only as part of, SAS Data Loader for Hadoop. The data quality functionality includes match code generation, gender analysis, identification analysis, casing, and standardization.

The SAS Data Quality Accelerator for Hadoop and the SAS In-Database Code Accelerator for Hadoop process SAS DS2 code inside Hadoop. They use the MapReduce framework and the SAS Embedded Process. This approach minimizes the time spent moving and manipulating data for analytic processing and cleansing. It also eliminates the need to know how to code this capability in MapReduce, and it improves performance and execution response times by harnessing the power of the Hadoop cluster.

SAS Code Accelerator for Hadoop and SAS Data Quality Accelerator for Hadoop provide the ability to push processing to the cluster. This ability enables the SAS programmer to apply data quality routines to data stored in Hadoop. These routines include match coding, standardization, and parsing.

SAS Data Quality Accelerator for Teradata

SAS applications are often built to work with large volumes of data in environments that demand rigorous IT security and management. When the data is stored in an external database such as Teradata, the transfer of large data sets to computers running the SAS System can cause a performance bottleneck. Storing the data locally can also have unwanted security and resource management consequences.

SAS Data Quality Accelerator for Teradata, which is included in SAS In-Database Technologies for Teradata, addresses these challenges. It moves computational tasks closer to the data and improves the integration between the SAS System and the database management system (DBMS).

SAS Data Quality Accelerator for Teradata provides in-database data quality operations as Teradata stored procedures. A stored procedure is a subroutine that is stored in the database and is available to applications that access a relational database.

The stored procedures perform the following data quality operations:

- changing to the proper case
- attribute extraction
- gender analysis
- identification analysis
- match code generation
- parsing
- pattern analysis
- standardization

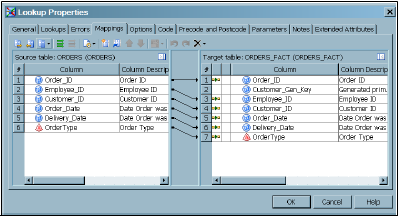

SAS Data Integration Studio

SAS Data Integration Studio supports data quality improvement with the following transformations listed under the Data Quality category in the Transformations tree:

- Apply Lookup Standardization
- Create Match Code
- Standardize with Definition
- DataFlux Batch Job
- DataFlux Data Service

Like DataFlux Data Management Studio, SAS Data Integration Studio is included in the SAS Data Management bundle.

You can use DataFlux schemes in the Apply Lookup Standardization transformation to standardize the format, casing, and spelling of character columns in a source table. Similarly, you can select DataFlux standardization definitions in the Standardize with Definition transformation and apply them to elements within a text string. For example, you might want to change all instances of “Mister” to “Mr.” but only when “Mister” is used as a salutation. Note that this approach requires SAS Data Quality Server.
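
The difference between a whole-value lookup scheme and a context-sensitive definition can be illustrated outside of SAS. The following Python sketch is only a conceptual illustration under stated assumptions: the `SCHEME` entries, the function names, and the rule that a salutation is the word “Mister” at the start of a string are all hypothetical, and none of it reflects how DataFlux schemes or definitions are actually implemented.

```python
import re

# Hypothetical lookup scheme: each observed value maps to one standard value.
# These entries are invented for illustration only.
SCHEME = {
    "mister": "Mr.",
    "mr": "Mr.",
    "mr.": "Mr.",
    "missus": "Mrs.",
}


def apply_scheme(value: str) -> str:
    """Whole-value lookup: replace the value only if the scheme contains it."""
    return SCHEME.get(value.strip().lower(), value)


# Assumed context rule: "Mister" counts as a salutation only when it opens
# the string and is followed by at least one more word.
SALUTATION = re.compile(r"^\s*mister\s+(?=\S)", re.IGNORECASE)


def standardize_salutation(text: str) -> str:
    """Element-level rewrite: change a leading 'Mister' to 'Mr.' and leave
    every other occurrence of the word untouched."""
    return SALUTATION.sub("Mr. ", text)
```

With these assumptions, `standardize_salutation("Mister John Smith")` yields `"Mr. John Smith"`, while `"Hey Mister Tambourine"` is returned unchanged because the word does not open the string.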

The Create Match Code transformation enables you to analyze source data and generate match codes based on common information shared by clusters of records. Comparing match codes instead of actual data enables you to identify records that are in fact the same entity, despite minor variations in the data.
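
To make the idea of comparing match codes concrete, the following Python sketch derives a deliberately crude key from a name and groups records that share the key. It is a toy under stated assumptions, not the algorithm that SAS or DataFlux uses: the function names, the salutation list, and the normalization rules (dropping punctuation, vowels, and the letters h, w, and y, then sorting tokens) are all hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical list of salutations to ignore when building the key.
SALUTATIONS = {"mr", "mrs", "ms", "dr", "mister"}


def toy_match_code(name: str) -> str:
    """Build a lossy key so that minor spelling and ordering variations
    of the same name produce the same code."""
    # Lowercase, replace anything that is not a letter or space, and tokenize.
    tokens = re.sub(r"[^a-z\s]", " ", name.lower()).split()
    tokens = [t for t in tokens if t not in SALUTATIONS]
    parts = []
    for token in sorted(tokens):  # sorting makes token order irrelevant
        head = token[0]
        # Drop vowels and weak consonants after the first letter.
        tail = re.sub(r"[aeiouyhw]", "", token[1:])
        # Collapse runs of the same letter.
        tail = re.sub(r"(.)\1+", r"\1", tail)
        parts.append((head + tail).upper())
    return "~".join(parts)


def cluster_by_match_code(records):
    """Group records whose toy match codes are identical."""
    clusters = defaultdict(list)
    for record in records:
        clusters[toy_match_code(record)].append(record)
    return dict(clusters)
```

Under these rules, “Mr. John Smith”, “Smith, Jon”, and “JOHN SMYTH” all share one code and land in the same cluster, while “Mary Jones” gets a different code. Because the key is so lossy, unrelated names can also collide, which is why a real implementation needs far more careful rules than this sketch.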

The DataFlux Batch Job and DataFlux Data Service transformations enable you to select and execute DataFlux jobs, including jobs configured as real-time services, from a DataFlux Data Management Server. You can then perform DataFlux data quality activities by running data jobs, process jobs, and profiles.

Many of the features in SAS Data Quality Server and the DataFlux Data Management Platform can be used in SAS Data Integration Studio jobs. For example, you can use DataFlux standardization schemes and definitions in SAS Data Integration Studio jobs. You can also execute DataFlux jobs, profiles, and services from SAS Data Integration Studio.

Copyright © SAS Institute Inc. All rights reserved.